
Apache Spark is a fast engine for large-scale data processing. As of the Spark 2.3.0 release, Apache Spark supports native integration with Kubernetes clusters. Azure Kubernetes Service (AKS) is a managed Kubernetes environment running in Azure. This document details preparing and running Apache Spark jobs on an Azure Kubernetes Service (AKS) cluster.

Prerequisites

To complete the steps in this article, you need the following:

- Basic understanding of Kubernetes and Apache Spark.

- Docker Hub account, or an Azure Container Registry.

- Azure CLI installed on your development system.

- JDK 8 installed on your system.

- Apache Maven installed on your system.

- SBT (Scala Build Tool) installed on your system.

- Git command-line tools installed on your system.
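Before starting, it can help to confirm that the command-line tools are on your PATH. The following is a minimal sketch: the helper name missing_tools is hypothetical, and the executable names in the usage comment (az, java, mvn, sbt, git, docker, kubectl) are the usual ones for these prerequisites and for the later steps, so adjust them if your system differs.

```shell
# Print the name of every tool in the argument list that is not on PATH.
# Prints nothing when all of the tools are available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Example:
#   missing=$(missing_tools az java mvn sbt git docker kubectl)
#   [ -z "$missing" ] || echo "Missing tools: $missing"
```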

Create an AKS cluster

Spark is used for large-scale data processing and requires Kubernetes nodes that are sized to meet Spark's resource requirements. We recommend a minimum size of Standard_D3_v2 for your Azure Kubernetes Service (AKS) nodes.

If you need an AKS cluster that meets this minimum recommendation, run the following commands.

Create a resource group for the cluster.

az group create --name mySparkCluster --location chinaeast2

Create a Service Principal for the cluster. After it is created, you will need the Service Principal appId and password for the next command.

az ad sp create-for-rbac --name SparkSP --role Contributor

Create the AKS cluster with nodes of size Standard_D3_v2, passing the appId and password values as the service-principal and client-secret parameters.

az aks create --resource-group mySparkCluster --name mySparkCluster --node-vm-size Standard_D3_v2 --generate-ssh-keys --service-principal <APPID> --client-secret <PASSWORD>

Connect to the AKS cluster.

az aks get-credentials --resource-group mySparkCluster --name mySparkCluster
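To confirm that the credentials work and that the nodes are ready to schedule Spark pods, you can inspect the node list. The following is a minimal sketch, assuming kubectl is installed and now points at the new cluster; the helper name count_not_ready_nodes is hypothetical.

```shell
# Count nodes whose STATUS column is anything other than exactly "Ready".
# Cordoned nodes ("Ready,SchedulingDisabled") are counted as not ready.
count_not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Example:
#   [ "$(count_not_ready_nodes)" = "0" ] && echo "All nodes are Ready"
```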

If you are using Azure Container Registry (ACR) to store container images, configure authentication between AKS and ACR. See the ACR authentication documentation for these steps.

Build the Spark source

Before running Spark jobs on an AKS cluster, you need to build the Spark source code and package it into a container image. The Spark source includes scripts that can be used to complete this process.

Clone the Spark project repository to your development system.

git clone -b branch-2.4 https://github.com/apache/spark

Change into the directory of the cloned repository and save the path of the Spark source to a variable.

cd spark

sparkdir=$(pwd)

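If you have multiple JDK versions installed, set JAVA_HOME to use version 8 for the current session. The following command relies on /usr/libexec/java_home, which exists only on macOS; on other systems, set JAVA_HOME to your JDK 8 installation directory instead.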

export JAVA_HOME=`/usr/libexec/java_home -d 64 -v "1.8*"`

Run the following command to build the Spark source code with Kubernetes support.

./build/mvn -Pkubernetes -DskipTests clean package

The following commands create the Spark container image and push it to a container image registry. Replace registry.example.com with the name of your container registry and v1 with the tag you prefer to use. If using Docker Hub, this value is the registry name. If using Azure Container Registry (ACR), this value is the ACR login server name.

REGISTRY_NAME=registry.example.com

REGISTRY_TAG=v1

./bin/docker-image-tool.sh -r $REGISTRY_NAME -t $REGISTRY_TAG build

Push the container image to your container image registry.

./bin/docker-image-tool.sh -r $REGISTRY_NAME -t $REGISTRY_TAG push
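The spark-submit step later in this article refers to the image as $REGISTRY_NAME/spark:$REGISTRY_TAG, which is the name docker-image-tool.sh gives the JVM image. As a small sketch, the hypothetical helper below composes that reference and rejects empty values, so an unset variable is caught before the job is submitted.

```shell
# Compose the image reference "<registry>/spark:<tag>".
# Fails with a message on stderr if either part is empty.
spark_image_ref() {
  if [ -z "$1" ] || [ -z "$2" ]; then
    echo "usage: spark_image_ref <registry> <tag>" >&2
    return 1
  fi
  printf '%s/spark:%s\n' "$1" "$2"
}

# Example:
#   spark_image_ref "$REGISTRY_NAME" "$REGISTRY_TAG"
```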

Prepare a Spark job

Next, prepare a Spark job. A jar file holds the Spark job and is required by the spark-submit command. The jar can be made accessible through a public URL or pre-packaged within a container image. In this example, a sample jar is created to calculate the value of Pi, and the jar is then uploaded to Azure storage. If you have an existing jar, feel free to substitute it.

Create a directory where you would like to create the project for a Spark job.

mkdir myprojects

cd myprojects

Create a new Scala project from a template.

sbt new sbt/scala-seed.g8

When prompted, enter SparkPi for the project name.

name [Scala Seed Project]: SparkPi

Navigate to the newly created project directory.

cd sparkpi

Run the following commands to add an SBT plugin, which allows packaging the project as a jar file.

touch project/assembly.sbt

echo 'addSbtPlugin("com.eed3si9n" % "sbt-assembly" % "0.14.10")' >> project/assembly.sbt

Run these commands to copy the sample code into the newly created project and add all necessary dependencies.

EXAMPLESDIR="src/main/scala/org/apache/spark/examples"

mkdir -p $EXAMPLESDIR

cp $sparkdir/examples/$EXAMPLESDIR/SparkPi.scala $EXAMPLESDIR/SparkPi.scala

cat <<EOT >> build.sbt

// https://mvnrepository.com/artifact/org.apache.spark/spark-sql

libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.3.0" % "provided"

EOT

sed -ie 's/scalaVersion.*/scalaVersion := "2.11.11"/' build.sbt

sed -ie 's/name.*/name := "SparkPi",/' build.sbt

To package the project into a jar, run the following command.

sbt assembly

After successful packaging, you should see output similar to the following.

[info] Packaging /Users/me/myprojects/sparkpi/target/scala-2.11/SparkPi-assembly-0.1.0-SNAPSHOT.jar ...

[info] Done packaging.

[success] Total time: 10 s, completed Mar 6, 2018 11:07:54 AM
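The exact jar name depends on the project name and version in build.sbt. Rather than typing it by hand, you could locate the assembly jar under the target directory. The following is a minimal sketch; the helper name find_assembly_jar is hypothetical, and it assumes only one assembly jar is present.

```shell
# Print the path of the first *-assembly-*.jar found under the given
# directory (default: target). Returns non-zero if none is found.
find_assembly_jar() {
  jar_path=$(find "${1:-target}" -type f -name '*-assembly-*.jar' 2>/dev/null | head -n 1)
  [ -n "$jar_path" ] && printf '%s\n' "$jar_path"
}

# Example (run from the project directory):
#   find_assembly_jar target
```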

Copy job to storage

Create an Azure storage account and container to hold the jar file.

RESOURCE_GROUP=sparkdemo

STORAGE_ACCT=sparkdemo$RANDOM

az group create --name $RESOURCE_GROUP --location chinaeast2

az storage account create --resource-group $RESOURCE_GROUP --name $STORAGE_ACCT --sku Standard_LRS

export AZURE_STORAGE_CONNECTION_STRING=`az storage account show-connection-string --resource-group $RESOURCE_GROUP --name $STORAGE_ACCT -o tsv`

Upload the jar file to the Azure storage account with the following commands.

CONTAINER_NAME=jars

BLOB_NAME=SparkPi-assembly-0.1.0-SNAPSHOT.jar

FILE_TO_UPLOAD=target/scala-2.11/SparkPi-assembly-0.1.0-SNAPSHOT.jar

echo "Creating the container..."

az storage container create --name $CONTAINER_NAME

az storage container set-permission --name $CONTAINER_NAME --public-access blob

echo "Uploading the file..."

az storage blob upload --container-name $CONTAINER_NAME --file $FILE_TO_UPLOAD --name $BLOB_NAME

jarUrl=$(az storage blob url --container-name $CONTAINER_NAME --name $BLOB_NAME | tr -d '"')

The jarUrl variable now contains the publicly accessible URL of the jar file.
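Because the driver pod downloads the jar over HTTP, it is worth confirming that the URL is reachable before submitting the job. The following is a minimal sketch, assuming curl is installed; the helper name jar_is_reachable is hypothetical.

```shell
# Succeed only if a HEAD request to the URL returns HTTP 200.
jar_is_reachable() {
  http_code=$(curl -s -o /dev/null -I -w '%{http_code}' "$1") || http_code=000
  [ "$http_code" = "200" ]
}

# Example:
#   jar_is_reachable "$jarUrl" || echo "The jar is not publicly readable"
```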

Submit a Spark job

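Start kubectl proxy in a separate terminal session with the following command. It exposes the Kubernetes API server on 127.0.0.1:8001, the address that the spark-submit command uses.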

kubectl proxy

Navigate back to the root of Spark repository.

cd $sparkdir

Create a service account that has sufficient permissions for running a job.

kubectl create serviceaccount spark

kubectl create clusterrolebinding spark-role --clusterrole=edit --serviceaccount=default:spark --namespace=default
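To check that the binding took effect, kubectl auth can-i can impersonate the service account and ask whether it may create pods, which the Spark driver must do to launch executors. The following is a minimal sketch, assuming your own credentials are allowed to impersonate service accounts; the helper name sa_can_create_pods is hypothetical.

```shell
# Succeed if the given service account in the default namespace
# is allowed to create pods there. The answer is read from the
# "yes"/"no" text that kubectl prints.
sa_can_create_pods() {
  answer=$(kubectl auth can-i create pods \
    --namespace default \
    --as "system:serviceaccount:default:$1" 2>/dev/null) || true
  [ "$answer" = "yes" ]
}

# Example:
#   sa_can_create_pods spark && echo "The spark service account can create pods"
```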

Submit the job using spark-submit.

./bin/spark-submit \
  --master k8s://http://127.0.0.1:8001 \
  --deploy-mode cluster \
  --name spark-pi \
  --class org.apache.spark.examples.SparkPi \
  --conf spark.executor.instances=3 \
  --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark \
  --conf spark.kubernetes.container.image=$REGISTRY_NAME/spark:$REGISTRY_TAG \
  $jarUrl

This operation starts the Spark job and streams the job status to your shell session. While the job is running, you can see the Spark driver pod and executor pods with the kubectl get pods command. Open a second terminal session to run these commands.

kubectl get pods

NAME READY STATUS RESTARTS AGE

spark-pi-2232778d0f663768ab27edc35cb73040-driver 1/1 Running 0 16s

spark-pi-2232778d0f663768ab27edc35cb73040-exec-1 0/1 Init:0/1 0 4s

spark-pi-2232778d0f663768ab27edc35cb73040-exec-2 0/1 Init:0/1 0 4s

spark-pi-2232778d0f663768ab27edc35cb73040-exec-3 0/1 Init:0/1 0 4s

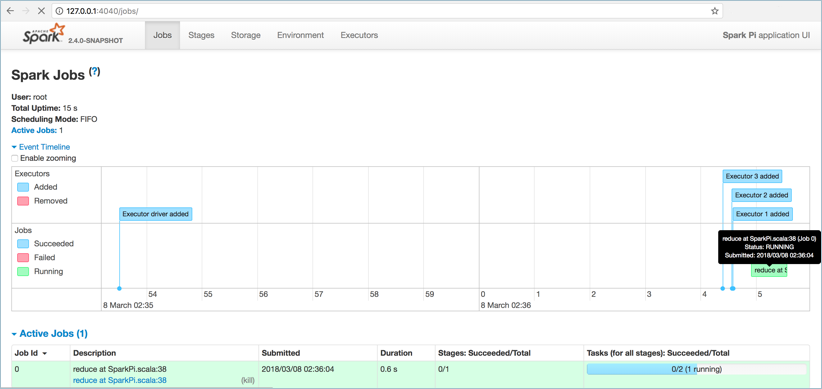

While the job is running, you can also access the Spark UI. In the second terminal session, use the kubectl port-forward command to provide access to the Spark UI.

kubectl port-forward spark-pi-2232778d0f663768ab27edc35cb73040-driver 4040:4040

To access Spark UI, open the address 127.0.0.1:4040 in a browser.

Get job results and logs

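After the job has finished, the driver pod will be in a "Completed" state. Get the name of the pod with the following command. Recent kubectl versions list completed pods by default; the --show-all flag was needed only on older releases and has since been removed.

kubectl get pods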

Output:

NAME READY STATUS RESTARTS AGE

spark-pi-2232778d0f663768ab27edc35cb73040-driver 0/1 Completed 0 1m
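Rather than re-running kubectl get pods by hand, you could poll the driver pod's phase until it finishes. A pod shown as "Completed" has the phase Succeeded. The following is a minimal sketch; the helper name wait_for_pod, the 5-second interval, and the default of 60 attempts are illustrative choices, not part of Spark or kubectl.

```shell
# Poll a pod's phase until it is Succeeded (return 0) or Failed (return 1).
# Gives up and returns 2 after the given number of attempts (default 60).
wait_for_pod() {
  attempts=${2:-60}
  while [ "$attempts" -gt 0 ]; do
    phase=$(kubectl get pod "$1" -o jsonpath='{.status.phase}') || phase=Unknown
    case "$phase" in
      Succeeded) return 0 ;;
      Failed) return 1 ;;
    esac
    attempts=$((attempts - 1))
    sleep 5
  done
  return 2
}

# Example (replace the pod name with your driver pod's name):
#   wait_for_pod spark-pi-2232778d0f663768ab27edc35cb73040-driver
```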

Use the kubectl logs command to get logs from the spark driver pod. Replace the pod name with your driver pod's name.

kubectl logs spark-pi-2232778d0f663768ab27edc35cb73040-driver

Within these logs, you can see the result of the Spark job, which is the value of Pi.

Pi is roughly 3.152155760778804
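Spark labels the driver pod with spark-role=driver, so the pod name and the result line can also be retrieved without copying the generated name. The following is a minimal sketch, assuming exactly one Spark driver pod exists in the current namespace (the jsonpath lookup fails if there is none); the helper names are hypothetical.

```shell
# Print the name of the first pod labelled spark-role=driver.
driver_pod_name() {
  kubectl get pods -l spark-role=driver -o jsonpath='{.items[0].metadata.name}'
}

# Print only the result line from the driver pod's log.
pi_result() {
  kubectl logs "$(driver_pod_name)" | grep 'Pi is roughly'
}

# Example:
#   pi_result
```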

Package jar with container image

In the above example, the Spark jar file was uploaded to Azure storage. Another option is to package the jar file into custom-built Docker images.

To do so, find the Dockerfile for the Spark image in the $sparkdir/resource-managers/kubernetes/docker/src/main/dockerfiles/spark/ directory. Add an ADD statement for the Spark job jar somewhere between the WORKDIR and ENTRYPOINT declarations.

Update the jar path to the location of the SparkPi-assembly-0.1.0-SNAPSHOT.jar file on your development system. You can also use your own custom jar file.

WORKDIR /opt/spark/work-dir

ADD /path/to/SparkPi-assembly-0.1.0-SNAPSHOT.jar SparkPi-assembly-0.1.0-SNAPSHOT.jar

ENTRYPOINT [ "/opt/entrypoint.sh" ]

Build and push the image with the included Spark scripts.

./bin/docker-image-tool.sh -r <your container repository name> -t <tag> build

./bin/docker-image-tool.sh -r <your container repository name> -t <tag> push

When running the job, instead of a remote jar URL, use the local:// scheme with the path to the jar file inside the Docker image.

./bin/spark-submit \
  --master k8s://https://<k8s-apiserver-host>:<k8s-apiserver-port> \
  --deploy-mode cluster \
  --name spark-pi \
  --class org.apache.spark.examples.SparkPi \
  --conf spark.executor.instances=3 \
  --conf spark.kubernetes.authenticate.driver.serviceAccountName=spark \
  --conf spark.kubernetes.container.image=<spark-image> \
  local:///opt/spark/work-dir/<your-jar-name>.jar
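The local:// URI is the path of the jar inside the image, not on your development system. With an ADD statement that uses a relative destination after WORKDIR /opt/spark/work-dir, the jar lands in that directory. The following is a minimal sketch that derives the URI from a jar path on the development system; the helper name local_jar_uri is hypothetical, and it assumes the jar keeps its file name in the image.

```shell
# Map a jar path on the development system to the local:// URI of the
# same file name inside the image's /opt/spark/work-dir directory.
local_jar_uri() {
  printf 'local:///opt/spark/work-dir/%s\n' "$(basename "$1")"
}

# Example:
#   local_jar_uri /path/to/SparkPi-assembly-0.1.0-SNAPSHOT.jar
```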

Warning

From Spark documentation: "The Kubernetes scheduler is currently experimental. In future versions, there may be behavioral changes around configuration, container images and entrypoints".


Next steps

Check out the Spark documentation for more details.