Physical network requirements for Azure Stack HCI

Applies to: Azure Stack HCI, version 22H2

This article discusses physical (fabric) network considerations and requirements for Azure Stack HCI, particularly for network switches.

Note

Requirements for future Azure Stack HCI versions may change.

Network switches for Azure Stack HCI

Microsoft tests Azure Stack HCI to the standards and protocols identified in the Network switch requirements section below. While Microsoft doesn't certify network switches, we do work with vendors to identify devices that support Azure Stack HCI requirements.

Important

While other network switches using technologies and protocols not listed here may work, we can't guarantee that they will work with Azure Stack HCI, and we may be unable to assist in troubleshooting issues that occur.

When purchasing network switches, contact your switch vendor and ensure that the devices meet the Azure Stack HCI requirements for your specified role types. The following vendors (in alphabetical order) have confirmed that their switches support Azure Stack HCI requirements:


Important

We update these lists as we're informed of changes by network switch vendors.

If your switch isn't included, contact your switch vendor to ensure that your switch model and the version of the switch's operating system support the requirements in the next section.

Network switch requirements

This section lists industry standards that are mandatory for the specific roles of network switches used in Azure Stack HCI deployments. These standards help ensure reliable communications between nodes in Azure Stack HCI cluster deployments.

Note

Network adapters used for compute, storage, and management traffic require Ethernet. For more information, see Host network requirements.

Here are the mandatory IEEE standards and specifications:

22H2 Role Requirements

| Requirement | Management | Storage | Compute (Standard) | Compute (SDN) |
|---|---|---|---|---|
| Virtual LANs | ✓ | ✓ | ✓ | ✓ |
| Priority Flow Control | | ✓ | | |
| Enhanced Transmission Selection | | ✓ | | |
| LLDP Port VLAN ID | ✓ | | | |
| LLDP VLAN Name | ✓ | ✓ | ✓ | |
| LLDP Link Aggregation | ✓ | ✓ | ✓ | ✓ |
| LLDP ETS Configuration | | ✓ | | |
| LLDP ETS Recommendation | | ✓ | | |
| LLDP PFC Configuration | | ✓ | | |
| LLDP Maximum Frame Size | ✓ | ✓ | ✓ | ✓ |
| Maximum Transmission Unit | | | | ✓ |
| Border Gateway Protocol | | | | ✓ |
| DHCP Relay Agent | ✓ | | | |

Note

Guest RDMA requires both Compute (Standard) and Storage.

Standard: IEEE 802.1Q

Ethernet switches must comply with the IEEE 802.1Q specification that defines VLANs. VLANs are required for several aspects of Azure Stack HCI and are required in all scenarios.
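It may help to see what 802.1Q actually adds to a frame. The following Python sketch packs and unpacks the 4-byte VLAN tag, which consists of two parts:

- a 16-bit Tag Protocol Identifier (0x8100);
- a Tag Control Information field, made up of a 3-bit priority code point (PCP), a 1-bit drop eligible indicator (DEI), and a 12-bit VLAN ID.

The PCP is the CoS priority that PFC and ETS act on. The function names are illustrative and aren't part of any Azure Stack HCI tooling. VLAN 711 and priority 3 are example values only.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier for an 802.1Q tag


def pack_vlan_tag(vlan_id: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag that follows the source MAC address."""
    if not 1 <= vlan_id <= 4094:  # VLAN IDs 0 and 4095 are reserved
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP must be 0..7 and DEI must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def unpack_vlan_tag(tag: bytes) -> tuple[int, int, int]:
    """Return (vlan_id, pcp, dei) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"unexpected TPID 0x{tpid:04x}")
    return tci & 0x0FFF, tci >> 13, (tci >> 12) & 0x1


# Example: traffic on VLAN 711 tagged with priority 3.
tag = pack_vlan_tag(711, pcp=3)
print(tag.hex())             # prints 810062c7
print(unpack_vlan_tag(tag))  # prints (711, 3, 0)
```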

Standard: IEEE 802.1Qbb

Ethernet switches used for Azure Stack HCI storage traffic must comply with the IEEE 802.1Qbb specification that defines Priority Flow Control (PFC). PFC is required where Data Center Bridging (DCB) is used. Since DCB can be used in both RoCE and iWARP RDMA scenarios, 802.1Qbb is required in all scenarios. A minimum of three Class of Service (CoS) priorities are required without downgrading the switch capabilities or port speeds. At least one of these traffic classes must provide lossless communication.
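PFC is configured per priority: each of the eight 802.1p priorities is either lossless (PFC enabled) or lossy. The sketch below does two things:

- It encodes a set of lossless priorities as an 8-bit PFC enable vector, where bit n corresponds to priority n.
- It checks the two conditions stated above: at least three CoS priorities in use, and at least one of them lossless.

The function names are hypothetical. The priority assignments in the example are placeholders, not a recommended mapping.

```python
def pfc_enable_vector(lossless_priorities: set[int]) -> int:
    """Return an 8-bit mask where bit n is set if priority n is lossless."""
    vector = 0
    for priority in lossless_priorities:
        if not 0 <= priority <= 7:
            raise ValueError(f"priority {priority} is outside 0..7")
        vector |= 1 << priority
    return vector


def meets_pfc_requirement(priorities_in_use: set[int], lossless_priorities: set[int]) -> bool:
    """At least three CoS priorities in use, and at least one of them lossless."""
    return len(priorities_in_use) >= 3 and bool(priorities_in_use & lossless_priorities)


in_use = {0, 3, 7}  # hypothetical: default, storage, and cluster traffic
lossless = {3}      # hypothetical: PFC enabled only for the storage priority
print(format(pfc_enable_vector(lossless), "08b"))  # prints 00001000
print(meets_pfc_requirement(in_use, lossless))     # prints True
```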

Standard: IEEE 802.1Qaz

Ethernet switches used for Azure Stack HCI storage traffic must comply with the IEEE 802.1Qaz specification that defines Enhanced Transmission Selection (ETS). ETS is required where DCB is used. Since DCB can be used in both RoCE and iWARP RDMA scenarios, 802.1Qaz is required in all scenarios.

A minimum of three CoS priorities are required without downgrading the switch capabilities or port speed. Additionally, if your device allows ingress QoS rates to be defined, we recommend that you either don't configure ingress rates or configure them to exactly the same values as the egress (ETS) rates.
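ETS divides egress bandwidth among traffic classes by percentage. The following sketch validates a hypothetical ETS policy against the guidance above:

- at least three CoS priorities;
- allocations that total 100 percent;
- any ingress rate either unset or equal to that class's egress (ETS) rate.

The `TrafficClass` type and its fields are illustrative, so map them onto your switch vendor's actual configuration model. The sketch treats every class as an ETS class and ignores strict-priority classes. The 48/50/2 split is an example, not a recommendation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrafficClass:
    name: str
    priority: int                          # 802.1p CoS priority (0..7)
    ets_percent: int                       # egress bandwidth share under ETS
    ingress_percent: Optional[int] = None  # None means no ingress rate is set


def validate_ets(classes: list[TrafficClass]) -> list[str]:
    """Return a list of problems; an empty list means the policy passes."""
    problems = []
    if len({c.priority for c in classes}) < 3:
        problems.append("fewer than three CoS priorities are configured")
    total = sum(c.ets_percent for c in classes)
    if total != 100:
        problems.append(f"ETS allocations sum to {total}%, not 100%")
    for c in classes:
        # Ingress rates should be unset or match the egress (ETS) rate exactly.
        if c.ingress_percent is not None and c.ingress_percent != c.ets_percent:
            problems.append(f"{c.name}: ingress rate differs from ETS egress rate")
    return problems


policy = [
    TrafficClass("default", priority=0, ets_percent=48),
    TrafficClass("storage", priority=3, ets_percent=50),
    TrafficClass("cluster", priority=7, ets_percent=2, ingress_percent=2),
]
print(validate_ets(policy))  # prints []
```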

Note

Hyper-converged infrastructure has a high reliance on East-West Layer-2 communication within the same rack and therefore requires ETS. Microsoft doesn't test Azure Stack HCI with Differentiated Services Code Point (DSCP).

Standard: IEEE 802.1AB

Ethernet switches must comply with the IEEE 802.1AB specification that defines the Link Layer Discovery Protocol (LLDP). LLDP is required for Azure Stack HCI and enables troubleshooting of physical networking configurations.

Configuration of the LLDP Type-Length-Values (TLVs) must be dynamically enabled. Switches must not require additional configuration beyond enablement of a specific TLV. For example, enabling 802.1 Subtype 3 should automatically advertise all VLANs available on switch ports.
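When you verify LLDP with a packet capture, it helps to know the on-wire format. Every LLDP TLV starts with a 2-byte header: a 7-bit type followed by a 9-bit length of the value in bytes. The sketch below encodes TLVs and walks a received LLDPDU (the payload after the Ethernet header) until it reaches the End of LLDPDU TLV (type 0). The switch name is hypothetical. The sketch illustrates the format only and doesn't replace a switch's LLDP implementation.

```python
import struct


def encode_tlv(tlv_type: int, value: bytes) -> bytes:
    """Encode one LLDP TLV: 7-bit type, 9-bit length, then the value."""
    if not 0 <= tlv_type <= 127 or len(value) > 511:
        raise ValueError("type must fit in 7 bits and length in 9 bits")
    return struct.pack("!H", (tlv_type << 9) | len(value)) + value


def decode_tlvs(lldpdu: bytes) -> list[tuple[int, bytes]]:
    """Split an LLDPDU into (type, value) pairs, stopping at End of LLDPDU."""
    tlvs, offset = [], 0
    while offset + 2 <= len(lldpdu):
        (header,) = struct.unpack_from("!H", lldpdu, offset)
        tlv_type, length = header >> 9, header & 0x01FF
        value = lldpdu[offset + 2 : offset + 2 + length]
        if len(value) != length:
            raise ValueError("truncated TLV")
        offset += 2 + length
        if tlv_type == 0:  # End of LLDPDU
            break
        tlvs.append((tlv_type, value))
    return tlvs


# System Name TLV (type 5) followed by End of LLDPDU.
pdu = encode_tlv(5, b"tor-switch-01") + encode_tlv(0, b"")
print(decode_tlvs(pdu))  # prints [(5, b'tor-switch-01')]
```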

Custom TLV requirements

LLDP allows organizations to define and encode their own custom TLVs. These are called Organizationally Specific TLVs. All Organizationally Specific TLVs start with an LLDP TLV Type value of 127. The table below shows which Organizationally Specific Custom TLV (TLV Type 127) subtypes are required.

| Organization | TLV Subtype |
|---|---|
| IEEE 802.1 | Port VLAN ID (Subtype = 1) |
| IEEE 802.1 | VLAN Name (Subtype = 3), minimum of 10 VLANs |
| IEEE 802.1 | Link Aggregation (Subtype = 7) |
| IEEE 802.1 | ETS Configuration (Subtype = 9) |
| IEEE 802.1 | ETS Recommendation (Subtype = A) |
| IEEE 802.1 | PFC Configuration (Subtype = B) |
| IEEE 802.3 | Maximum Frame Size (Subtype = 4) |
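In an Organizationally Specific TLV, the value begins with a 3-byte organizationally unique identifier (OUI) and a 1-byte subtype. The IEEE 802.1 OUI is 00-80-C2, and the IEEE 802.3 OUI is 00-12-0F. Subtypes A and B in the table are hexadecimal (10 and 11).

The sketch below builds three of the required TLVs and reports which required subtypes are missing from a list of received TLVs. The helper names are hypothetical, as are the example values (VLAN 711, the name "SMB01", and a 9216-byte frame size).

```python
import struct

OUI_IEEE_8021 = bytes.fromhex("0080c2")
OUI_IEEE_8023 = bytes.fromhex("00120f")

# Required (OUI, subtype) pairs from the table above.
REQUIRED = {
    (OUI_IEEE_8021, 0x01),  # Port VLAN ID
    (OUI_IEEE_8021, 0x03),  # VLAN Name
    (OUI_IEEE_8021, 0x07),  # Link Aggregation
    (OUI_IEEE_8021, 0x09),  # ETS Configuration
    (OUI_IEEE_8021, 0x0A),  # ETS Recommendation
    (OUI_IEEE_8021, 0x0B),  # PFC Configuration
    (OUI_IEEE_8023, 0x04),  # Maximum Frame Size
}


def org_tlv(oui: bytes, subtype: int, info: bytes) -> bytes:
    """Encode a type-127 TLV whose value is OUI + subtype + information string."""
    value = oui + bytes([subtype]) + info
    return struct.pack("!H", (127 << 9) | len(value)) + value


def port_vlan_id_tlv(pvid: int) -> bytes:
    return org_tlv(OUI_IEEE_8021, 0x01, struct.pack("!H", pvid))


def vlan_name_tlv(vid: int, name: str) -> bytes:
    # Value layout: VLAN ID (2 bytes), name length (1 byte), name.
    encoded = name.encode("ascii")
    return org_tlv(OUI_IEEE_8021, 0x03, struct.pack("!HB", vid, len(encoded)) + encoded)


def max_frame_size_tlv(size: int) -> bytes:
    return org_tlv(OUI_IEEE_8023, 0x04, struct.pack("!H", size))


def missing_subtypes(received: list[bytes]) -> set[tuple[bytes, int]]:
    """Return required (OUI, subtype) pairs absent from the received TLVs."""
    seen = set()
    for tlv in received:
        if tlv[0] >> 1 == 127:  # top 7 bits of the header hold the TLV type
            seen.add((tlv[2:5], tlv[5]))
    return REQUIRED - seen


received = [port_vlan_id_tlv(711), vlan_name_tlv(711, "SMB01"), max_frame_size_tlv(9216)]
print(sorted(subtype for _, subtype in missing_subtypes(received)))  # prints [7, 9, 10, 11]
```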

Maximum Transmission Unit

New Requirement in 22H2

The maximum transmission unit (MTU) is the largest frame or packet size that can be transmitted across a data link. SDN encapsulation requires support for an MTU range of 1514 to 9174.
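One way to apply this requirement is to check that the MTU range a switch port supports covers the required range of 1514 to 9174. The sketch below performs that comparison. `supported_min` and `supported_max` are hypothetical inputs that you would take from your vendor's documentation.

Note that 1514 equals a 1500-byte payload plus a 14-byte Ethernet header. This suggests, but doesn't confirm, that these figures include the Ethernet header. Vendors differ on this, so compare like with like.

```python
REQUIRED_MTU_RANGE = (1514, 9174)  # from the 22H2 requirement above


def covers_sdn_mtu_range(supported_min: int, supported_max: int) -> bool:
    """True if the switch's configurable MTU range spans the required range."""
    low, high = REQUIRED_MTU_RANGE
    return supported_min <= low and supported_max >= high


print(covers_sdn_mtu_range(1500, 9216))  # prints True
print(covers_sdn_mtu_range(1500, 9000))  # prints False
```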

Border Gateway Protocol

New Requirement in 22H2

Ethernet switches used for Azure Stack HCI SDN compute traffic must support Border Gateway Protocol (BGP). BGP is a standard routing protocol used to exchange routing and reachability information between two or more networks. Routes are automatically added to the route table of all subnets with BGP propagation enabled. BGP is required to enable tenant workloads with SDN and dynamic peering. For more information, see RFC 4271: A Border Gateway Protocol 4 (BGP-4).
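On the wire, every BGP-4 message defined in RFC 4271 starts with a 19-byte header made up of three fields:

- a 16-byte marker of all ones;
- a 2-byte total message length;
- a 1-byte type (1 OPEN, 2 UPDATE, 3 NOTIFICATION, 4 KEEPALIVE).

The sketch below builds and parses a KEEPALIVE message, which consists of the header alone. It only illustrates the message format. BGP peering itself is configured on the switch, not with code like this.

```python
import struct

BGP_MARKER = b"\xff" * 16
BGP_HEADER_LEN = 19
BGP_MAX_LEN = 4096  # maximum message size in RFC 4271
MESSAGE_TYPES = {1: "OPEN", 2: "UPDATE", 3: "NOTIFICATION", 4: "KEEPALIVE"}


def build_keepalive() -> bytes:
    """A KEEPALIVE is just the 19-byte header with type 4."""
    return BGP_MARKER + struct.pack("!HB", BGP_HEADER_LEN, 4)


def parse_header(message: bytes) -> tuple[int, str]:
    """Return (length, type name) after validating the marker and length field."""
    if len(message) < BGP_HEADER_LEN or message[:16] != BGP_MARKER:
        raise ValueError("not a BGP message")
    length, msg_type = struct.unpack_from("!HB", message, 16)
    if not BGP_HEADER_LEN <= length <= BGP_MAX_LEN:
        raise ValueError(f"bad length {length}")
    return length, MESSAGE_TYPES.get(msg_type, f"unknown ({msg_type})")


print(parse_header(build_keepalive()))  # prints (19, 'KEEPALIVE')
```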

DHCP Relay Agent

New Requirement in 22H2

Ethernet switches used for Azure Stack HCI management traffic must support a DHCP relay agent. A DHCP relay agent is any TCP/IP host that forwards requests and replies between a DHCP server and its clients when the server is on a different network. It's required for PXE boot services. For more information, see RFC 3046: DHCPv4 or RFC 6148: DHCPv4.
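The core of relay behavior is small. Suppose a relay receives a client's broadcast on a subnet that has no DHCP server. It then does three things:

1. It increments the hops field.
2. If giaddr is still zero, it writes its own address on that subnet into the giaddr field.
3. It unicasts the message to the configured server.

The server uses giaddr to choose an address pool and to send its reply back through the relay.

The sketch below performs only the header rewrite on a raw BOOTP/DHCP request. The byte offsets come from the fixed 236-byte DHCP header. The addresses and the hop limit are illustrative assumptions. The sketch doesn't add the RFC 3046 Relay Agent Information option (option 82).

```python
import ipaddress
from typing import Optional

HOPS_OFFSET = 3     # after op(1), htype(1), hlen(1)
GIADDR_OFFSET = 24  # after hops(1), xid(4), secs(2), flags(2), ciaddr/yiaddr/siaddr(4 each)


def relay_request(message: bytes, relay_ip: str, max_hops: int = 16) -> Optional[bytes]:
    """Rewrite a client BOOTREQUEST for forwarding; return None to drop it."""
    if len(message) < 236 or message[0] != 1:  # op 1 = BOOTREQUEST
        raise ValueError("not a BOOTREQUEST")
    if message[HOPS_OFFSET] >= max_hops:  # illustrative loop guard
        return None
    out = bytearray(message)
    out[HOPS_OFFSET] += 1
    if out[GIADDR_OFFSET:GIADDR_OFFSET + 4] == bytes(4):  # only set giaddr if empty
        out[GIADDR_OFFSET:GIADDR_OFFSET + 4] = ipaddress.IPv4Address(relay_ip).packed
    return bytes(out)


# Minimal fake request: op=1, htype=1 (Ethernet), hlen=6, hops=0, rest zeroed.
request = bytes([1, 1, 6, 0]) + bytes(232)
relayed = relay_request(request, "10.0.10.1")
print(relayed[3], ipaddress.IPv4Address(relayed[24:28]))  # prints 1 10.0.10.1
```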

Network traffic and architecture

This section is predominantly for network administrators.

Azure Stack HCI can function in various data center architectures including 2-tier (Spine-Leaf) and 3-tier (Core-Aggregation-Access). This section refers more to concepts from the Spine-Leaf topology that is commonly used with workloads in hyper-converged infrastructure such as Azure Stack HCI.

Network models

Network traffic can be classified by its direction. Traditional Storage Area Network (SAN) environments are heavily North-South where traffic flows from a compute tier to a storage tier across a Layer-3 (IP) boundary. Hyperconverged infrastructure is more heavily East-West where a substantial portion of traffic stays within a Layer-2 (VLAN) boundary.

Important

We highly recommend that all cluster nodes in a site are physically located in the same rack and connected to the same top-of-rack (ToR) switches.

Note

Stretched cluster functionality is only available in Azure Stack HCI, version 22H2.

North-South traffic for Azure Stack HCI

North-South traffic has the following characteristics:

- Traffic flows out of a ToR switch to the spine or in from the spine to a ToR switch.

- Traffic leaves the physical rack or crosses a Layer-3 boundary (IP).

- Includes management (PowerShell, Windows Admin Center), compute (VM), and inter-site stretched cluster traffic.

- Uses an Ethernet switch for connectivity to the physical network.

East-West traffic for Azure Stack HCI

East-West traffic has the following characteristics:

- Traffic remains within the ToR switches and Layer-2 boundary (VLAN).

- Includes storage traffic or Live Migration traffic between nodes in the same cluster and (if using a stretched cluster) within the same site.

- May use an Ethernet switch (switched) or a direct (switchless) connection, as described in the next two sections.
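The North-South and East-West distinction above largely comes down to whether a flow stays inside one Layer-2 segment. The sketch below classifies a flow by checking whether both endpoints fall in the same IP subnet. It assumes that each VLAN maps to exactly one subnet.

This is a simplification. It ignores cases where traffic leaves the rack without crossing a subnet boundary, and it ignores clients of management traffic. The addresses are hypothetical.

```python
import ipaddress


def classify_flow(src: str, dst: str) -> str:
    """Classify a flow between two interfaces given in CIDR form, e.g. '192.168.71.11/24'."""
    src_if = ipaddress.ip_interface(src)
    dst_if = ipaddress.ip_interface(dst)
    if src_if.network == dst_if.network:
        return "East-West"  # same subnet/VLAN: stays within the Layer-2 boundary
    return "North-South"    # different subnets: routed across a Layer-3 boundary


print(classify_flow("192.168.71.11/24", "192.168.71.12/24"))  # prints East-West
print(classify_flow("192.168.71.11/24", "10.20.0.5/16"))      # prints North-South
```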

Using switches

North-South traffic requires the use of switches. Besides using an Ethernet switch that supports the required protocols for Azure Stack HCI, the most important aspect is the proper sizing of the network fabric.

It's imperative that you understand the "non-blocking" fabric bandwidth your Ethernet switches can support and that you minimize (or preferably eliminate) oversubscription of the network.
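Oversubscription is commonly expressed as the ratio of a switch's total server-facing (downlink) bandwidth to its total uplink bandwidth. A ratio of 1:1 is non-blocking. The sketch below computes that ratio for a hypothetical ToR switch with 48 × 25 GbE downlinks and 6 × 100 GbE uplinks. Use your vendor's published port counts and speeds in place of these example numbers.

```python
from fractions import Fraction


def oversubscription(downlinks: list[tuple[int, int]], uplinks: list[tuple[int, int]]) -> Fraction:
    """Ratio of total downlink to total uplink bandwidth.

    Each list holds (port_count, gbps_per_port) pairs. A result of 1 means non-blocking.
    """
    down = sum(count * speed for count, speed in downlinks)
    up = sum(count * speed for count, speed in uplinks)
    return Fraction(down, up)


ratio = oversubscription(downlinks=[(48, 25)], uplinks=[(6, 100)])
print(f"{ratio.numerator}:{ratio.denominator}")  # prints 2:1
```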

Common congestion points and oversubscription, such as the Multi-Chassis Link Aggregation Group used for path redundancy, can be eliminated through proper use of subnets and VLANs. Also see Host network requirements.

Work with your network vendor or network support team to ensure your network switches have been properly sized for the workload you are intending to run.

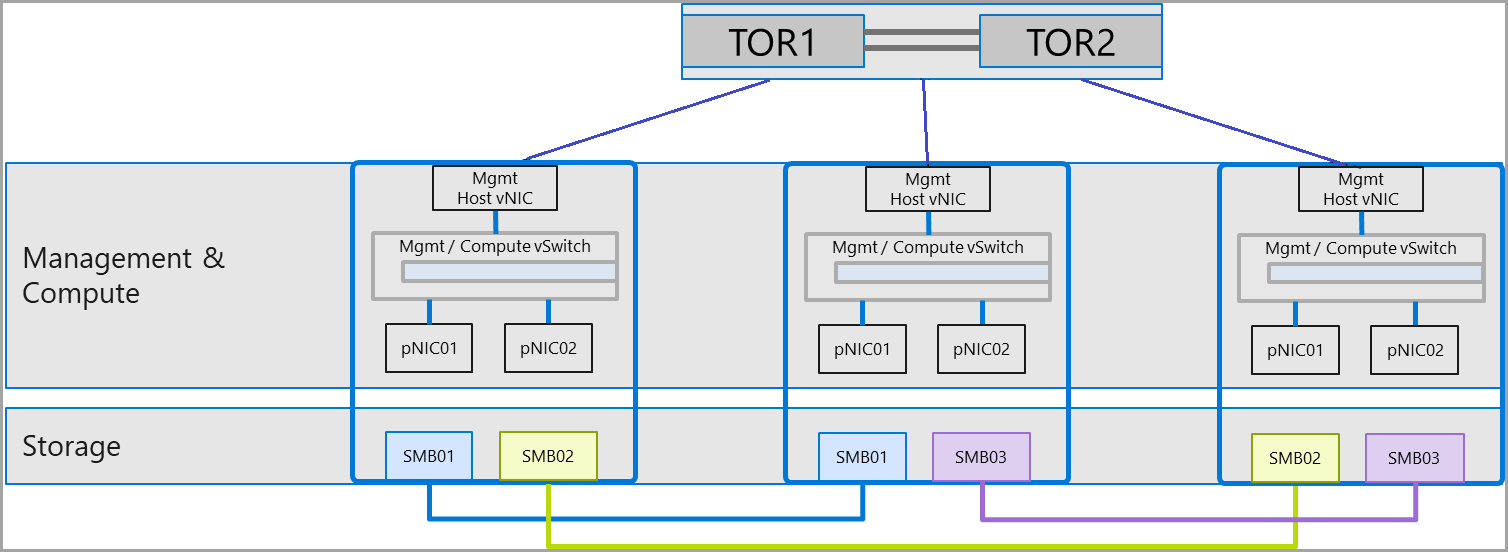

Using switchless

Azure Stack HCI supports switchless (direct) connections for East-West traffic for all cluster sizes as long as each node in the cluster has a redundant connection to every other node in the cluster. This is called a "full-mesh" connection.

| Interface pair | Subnet | VLAN |
|---|---|---|
| Mgmt host vNIC | Customer-specific | Customer-specific |
| SMB01 | 192.168.71.x/24 | 711 |
| SMB02 | 192.168.72.x/24 | 712 |
| SMB03 | 192.168.73.x/24 | 713 |

Note

The benefits of switchless deployments diminish with clusters larger than three nodes due to the number of network adapters required.
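The arithmetic behind this note is straightforward. This sketch assumes a full mesh with two links between every pair of nodes, which is one way to provide the redundant connection described above. Under that assumption, each node needs 2 × (n - 1) storage ports and the cluster needs n × (n - 1) cables. The function name is hypothetical.

```python
def switchless_full_mesh(nodes: int, links_per_pair: int = 2) -> tuple[int, int]:
    """Return (ports per node, total cables) for a switchless full mesh."""
    if nodes < 2:
        raise ValueError("a cluster needs at least two nodes")
    ports_per_node = links_per_pair * (nodes - 1)
    cables = links_per_pair * nodes * (nodes - 1) // 2
    return ports_per_node, cables


for n in range(2, 6):
    ports, cables = switchless_full_mesh(n)
    print(f"{n} nodes: {ports} storage ports per node, {cables} cables")
```

The per-node port count grows linearly with cluster size, and the cable count grows quadratically. That growth is why switchless designs become less attractive beyond three nodes.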

Advantages of switchless connections

- No switch purchase is necessary for East-West traffic. A switch is required for North-South traffic. This may result in lower capital expenditure (CAPEX) costs but is dependent on the number of nodes in the cluster.

- Because there is no switch, configuration is limited to the host, which may reduce the potential number of configuration steps needed. This value diminishes as the cluster size increases.

Disadvantages of switchless connections

- More planning is required for IP and subnet addressing schemes.

- Provides only local storage access. Management traffic, VM traffic, and other traffic requiring North-South access cannot use these adapters.

- As the number of nodes in the cluster grows, the cost of network adapters could exceed the cost of using network switches.

- Doesn't scale well beyond three-node clusters. More nodes incur additional cabling and configuration that can surpass the complexity of using a switch.

- Cluster expansion is complex, requiring hardware and software configuration changes.

Next steps

- For deployment, see Create a cluster using Windows Admin Center.

- For deployment, see Create a cluster using Windows PowerShell.