NLog is a flexible and free logging platform for various .NET platforms, including .NET Standard. NLog allows you to write to several targets, such as a database, file, or console. With NLog, you can change the logging configuration on the fly. The NLog sink is a target for NLog that allows you to send your log messages to a KQL cluster. The plugin is built on top of the Azure-Kusto-Data library and provides an efficient way to sink your logs to your cluster.

In this article, you learn how to ingest data with the NLog sink.

For a complete list of data connectors, see Data connectors overview.

Prerequisites

- .NET SDK 6.0 or later

- An Azure Data Explorer cluster and database

Set up your environment

In this section, you'll prepare your environment to use the NLog connector.

Install the package

Add the NLog.Azure.Kusto NuGet package. Use the Install-Package command specifying the name of the NuGet package.

Install-Package NLog.Azure.Kusto

Create a Microsoft Entra app registration

Microsoft Entra application authentication is used for applications that need to access the platform without a user present. To ingest data using the NLog connector, you need to create and register a Microsoft Entra service principal, and then authorize this principal to ingest data into a database.

The Microsoft Entra service principal can be created through the Azure portal or programmatically, as in the following example.

This service principal is the identity that the connector uses to write data to your table in Kusto. You'll later grant permissions for this service principal to access Kusto resources.

Sign in to your Azure subscription via Azure CLI. Then authenticate in the browser.

az login

Choose the subscription to host the principal. This step is needed when you have multiple subscriptions.

az account set --subscription YOUR_SUBSCRIPTION_GUID

Create the service principal. In this example, the service principal is called my-service-principal.

az ad sp create-for-rbac -n "my-service-principal" --role Contributor --scopes /subscriptions/{SubID}

From the returned JSON data, copy the appId, password, and tenant for future use.

{
  "appId": "00001111-aaaa-2222-bbbb-3333cccc4444",
  "displayName": "my-service-principal",
  "name": "my-service-principal",
  "password": "00001111-aaaa-2222-bbbb-3333cccc4444",
  "tenant": "00001111-aaaa-2222-bbbb-3333cccc4444"
}

You've created your Microsoft Entra application and service principal.

Save the following values to be used in later steps:

- Application (client) ID

- Directory (tenant) ID

- Client secret key value
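If the JSON returned by the create-for-rbac command is held in a shell variable, the three values could be pulled out as in the following sketch. It is illustrative only: the variable name sp_json and the helper extract_field are hypothetical, the JSON is the placeholder output shown earlier rather than real credentials, and sed is used only to avoid extra dependencies (a JSON-aware tool would be more robust).

```shell
# Placeholder JSON in the same shape as the create-for-rbac output (not real credentials).
sp_json='{ "appId": "00001111-aaaa-2222-bbbb-3333cccc4444", "displayName": "my-service-principal", "name": "my-service-principal", "password": "00001111-aaaa-2222-bbbb-3333cccc4444", "tenant": "00001111-aaaa-2222-bbbb-3333cccc4444" }'

# extract_field KEY: print the string value stored under KEY (hypothetical helper).
extract_field() {
  printf '%s\n' "$sp_json" | sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p"
}

APP_ID=$(extract_field appId)            # Application (client) ID
APP_KEY=$(extract_field password)        # Client secret key value
AZURE_TENANT_ID=$(extract_field tenant)  # Directory (tenant) ID

# Print only the non-secret values as a quick check.
echo "Application (client) ID: $APP_ID"
echo "Directory (tenant) ID:   $AZURE_TENANT_ID"
```

The variable names match the environment variables that the sample app reads later in this article, so the values can be reused there.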

Grant the Microsoft Entra app permissions

In your query environment, run the following management command, replacing the placeholders. Replace DatabaseName with the name of the target database and ApplicationID with the previously saved value. This command grants the app the database ingestor role. For more information, see Manage database security roles.

.add database <DatabaseName> ingestors ('aadapp=<ApplicationID>') 'NLOG Azure App Registration role'

Note

The last parameter is a string that shows up as notes when you query the roles associated with a database. For more information, see View existing security roles.

Create a table and ingestion mapping

Create a target table for the incoming data.

In your query editor, run the following table creation command, replacing the placeholder TableName with the name of the target table:

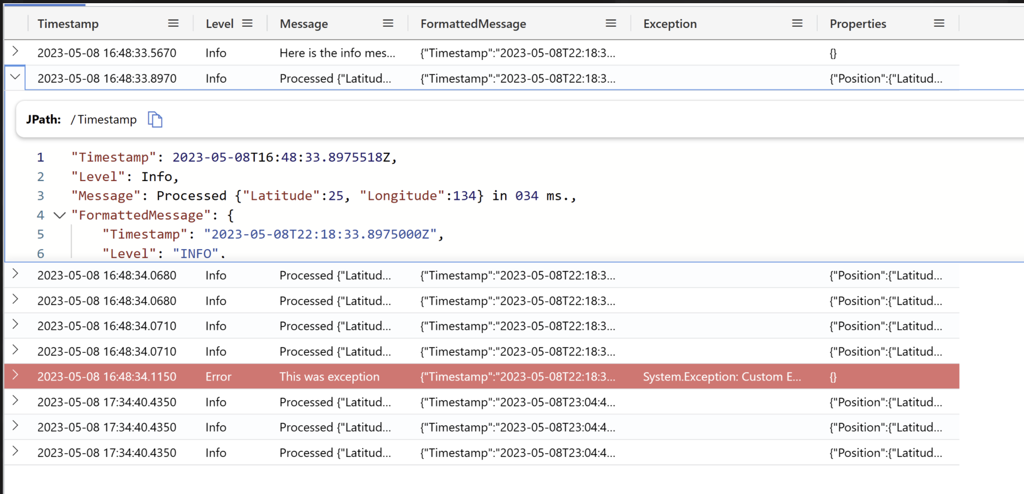

.create table <TableName> (Timestamp:datetime, Level:string, Message:string, FormattedMessage:dynamic, Exception:string, Properties:dynamic)

Add the target configuration to your app

Use the following steps to:

- Add the target configuration

- Build and run the app

Add the target in your NLog configuration file.

<targets>
    <target name="adxtarget" xsi:type="TargetTable"
        IngestionEndpointUri="<Connection string>"
        Database="<Database name>"
        TableName="<Table name>"
        ApplicationClientId="<Entra App clientId>"
        ApplicationKey="<Entra App key>"
        Authority="<Entra tenant id>"
    />
</targets>
<!-- Rules: writeTo must match the target name defined above -->
<rules>
    <logger name="*" minlevel="Info" writeTo="adxtarget" />
</rules>

For more options, see NLog connector.

Send data using the NLog sink. For example:

logger.Info("Processed {@Position} in {Elapsed:000} ms.", position, elapsedMs);
logger.Error(exceptionObj, "This was exception");
logger.Debug("Processed {@Position} in {Elapsed:000} ms. ", position, elapsedMs);
logger.Warn("Processed {@Position} in {Elapsed:000} ms. ", position, elapsedMs);

Build and run the app. For example, if you're using Visual Studio, press F5.

Verify that the data is in your cluster. In your query environment, run the following query, replacing the placeholder with the name of the table that you used earlier:

<TableName> | take 10

Run the sample app

Use the sample log generator app as an example showing how to configure and use the NLog sink.

Clone the NLog sink's git repo using the following git command:

git clone https://github.com/Azure/azure-kusto-nlog-sink.git

Set the following environment variables so that the NLog configuration file can read them directly from the environment:

| Variable | Description |
|---|---|
| INGEST_ENDPOINT | The ingest URI for your data target. You have copied this URI in the prerequisites. |
| DATABASE | The case-sensitive name of the target database. |
| APP_ID | Application client ID required for authentication. You saved this value in Create a Microsoft Entra app registration. |
| APP_KEY | Application key required for authentication. You saved this value in Create a Microsoft Entra app registration. |
| AZURE_TENANT_ID | The ID of the tenant in which the application is registered. You saved this value in Create a Microsoft Entra app registration. |

You can set the environment variables manually or from the command line.
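On Linux or macOS, the variables might be set in a bash-compatible shell as sketched below. This is an assumption about your environment, not the only way to do it, and every value shown is a placeholder to replace with your own. The variable names are the ones listed above.

```shell
# Placeholder values: replace each one with your own before running the sample.
export INGEST_ENDPOINT="<ingest URI>"          # ingest URI for your data target
export DATABASE="<database name>"              # case-sensitive target database name
export APP_ID="<application client ID>"        # from the app registration
export APP_KEY="<application key>"             # client secret key value
export AZURE_TENANT_ID="<tenant ID>"           # tenant in which the app is registered
```

Variables set with export last only for the current shell session, so run the sample app from the same terminal.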

Within your terminal, navigate to the root folder of the cloned repo and run the following dotnet command to build the app:

cd .\NLog.Azure.Kusto.Samples\
dotnet build

Within your terminal, navigate to the samples folder and run the following dotnet command to run the app:

dotnet run

In your query environment, select the target database, and run the following query to explore the ingested data.

ADXNLogSample | take 10

Your output should look similar to the following image: