
APPLIES TO: Azure Data Factory, Azure Synapse Analytics

This article describes a solution template that you can use to copy data in bulk from Azure Data Lake Storage Gen2 to Azure Synapse Analytics or Azure SQL Database.

About this solution template

This template retrieves the list of files from an Azure Data Lake Storage Gen2 source. It then iterates over each file and copies it to the destination data store.

Currently, this template supports copying data only in DelimitedText format. Files in other formats can still be retrieved from the source data store, but they can't be copied to the destination data store.

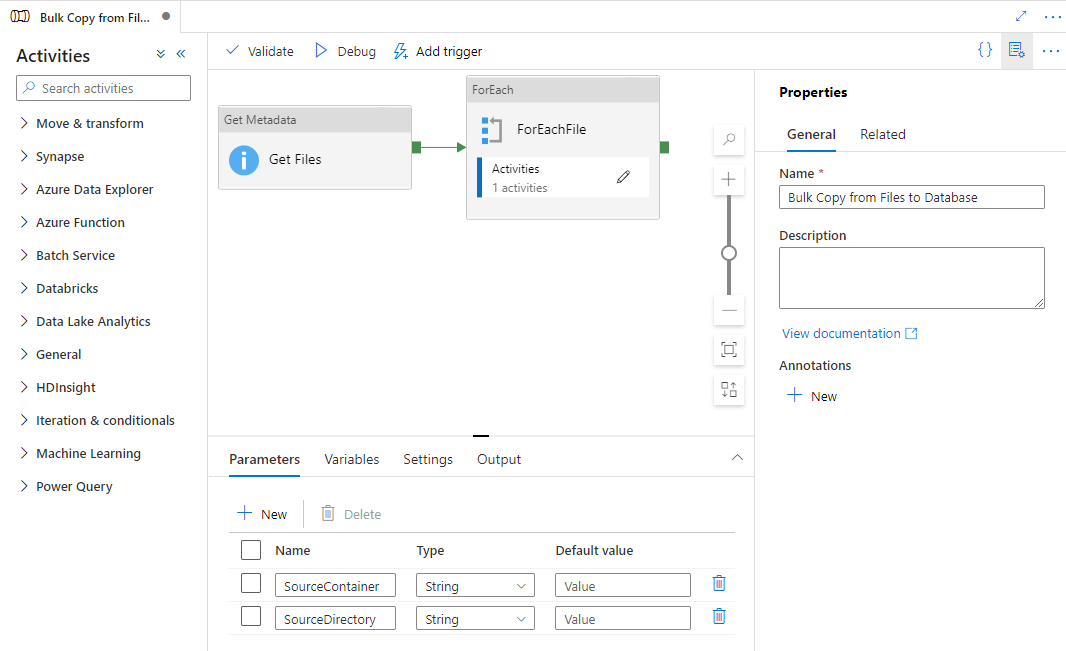

The template contains three activities:

- The Get Metadata activity retrieves the files from Azure Data Lake Storage Gen2 and passes them to the subsequent ForEach activity.

- The ForEach activity receives the file list from the Get Metadata activity and iterates over it, passing each file to the Copy activity.

- The Copy activity runs inside the ForEach activity and copies each file from the source data store to the destination data store.
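To make the Get Metadata, ForEach, and Copy flow concrete, here is a minimal local simulation in Python. It is not the template's implementation. It assumes a local directory stands in for the Data Lake Storage Gen2 folder, an in-memory SQLite database stands in for the destination database, and every file is comma-delimited with a header row (mirroring the template's DelimitedText-only support). The function names, and loading each file into a table named after the file, are hypothetical choices for illustration.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def get_metadata_child_items(directory: Path) -> list[dict]:
    """Stand-in for Get Metadata's childItems: name and type of each direct child."""
    return [
        {"name": p.name, "type": "File" if p.is_file() else "Folder"}
        for p in sorted(directory.iterdir())
    ]


def copy_delimited_file(path: Path, conn: sqlite3.Connection, table: str) -> int:
    """Stand-in for one Copy activity run: load a header-row CSV file into a table.

    Assumption: the file is comma-delimited and its first row holds column names.
    """
    with path.open(newline="") as f:
        reader = csv.reader(f)
        header = next(reader)
        rows = list(reader)
    columns = ", ".join(f'"{c}"' for c in header)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    return len(rows)


def bulk_copy(source_dir: Path, conn: sqlite3.Connection) -> dict[str, int]:
    """Stand-in for ForEach: iterate over the listed files and copy each one."""
    copied = {}
    for item in get_metadata_child_items(source_dir):
        if item["type"] != "File":
            continue  # childItems can also list subfolders; this sketch skips them
        table = Path(item["name"]).stem  # hypothetical: one table per file
        copied[item["name"]] = copy_delimited_file(source_dir / item["name"], conn, table)
    conn.commit()
    return copied


# Usage: two small CSV files and one subfolder in a temporary "source" directory.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp)
    (src / "customers.csv").write_text("id,name\n1,Ada\n2,Grace\n")
    (src / "orders.csv").write_text("id,amount\n10,9.5\n")
    (src / "archive").mkdir()
    conn = sqlite3.connect(":memory:")
    result = bulk_copy(src, conn)

print(result)  # {'customers.csv': 2, 'orders.csv': 1}
```

In the actual pipeline, the ForEach activity's items setting typically points at the Get Metadata activity's `childItems` output, and the Copy activity refers to the current file through `@item().name`; the exact activity and dataset names depend on the template.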

The template defines the following two parameters:

- SourceContainer is the root container in your Azure Data Lake Storage Gen2 account that the data is copied from.

- SourceDirectory is the directory path, under the root container, that the data is copied from.
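As an illustration only, the following Python sketch declares the two parameters in the shape pipeline JSON uses for string parameters, and shows which Data Lake Storage Gen2 location a pair of values identifies, written as an `abfss://` URI. The `source_location` helper and the storage account name are hypothetical; the template itself passes the values to its datasets rather than building a URI.

```python
# Pipeline-level parameter declarations, in the shape used by pipeline JSON.
PIPELINE_PARAMETERS = {
    "SourceContainer": {"type": "string"},
    "SourceDirectory": {"type": "string"},
}


def source_location(run_params: dict[str, str], account: str) -> str:
    """Return the abfss:// URI that a SourceContainer/SourceDirectory pair points at.

    `account` is a placeholder storage account name (hypothetical).
    """
    missing = [name for name in PIPELINE_PARAMETERS if name not in run_params]
    if missing:
        raise ValueError(f"missing parameter(s): {', '.join(missing)}")
    container = run_params["SourceContainer"].strip("/")
    directory = run_params["SourceDirectory"].strip("/")
    # ADLS Gen2 URIs take the form abfss://<container>@<account>.dfs.core.windows.net/<path>
    return f"abfss://{container}@{account}.dfs.core.windows.net/{directory}"


uri = source_location(
    {"SourceContainer": "raw", "SourceDirectory": "sales/2024"},
    account="mystorageacct",
)
print(uri)  # abfss://raw@mystorageacct.dfs.core.windows.net/sales/2024
```

The container is the top-level file system in the storage account, and the directory is a path inside it, so the same pair of values is what you enter when you debug or trigger the pipeline.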

How to use this solution template

Open the Azure Data Factory Studio and select the Author tab with the pencil icon.

Hover over the Pipelines section and select the ellipsis that appears to the right. Then select Pipeline from template.

Select the Bulk Copy from Files to Database template, then select Continue.

Create a new connection to your Data Lake Storage Gen2 store for the source, and another to your database for the sink. Then select Use this template.

A new pipeline is created as shown in the following example:

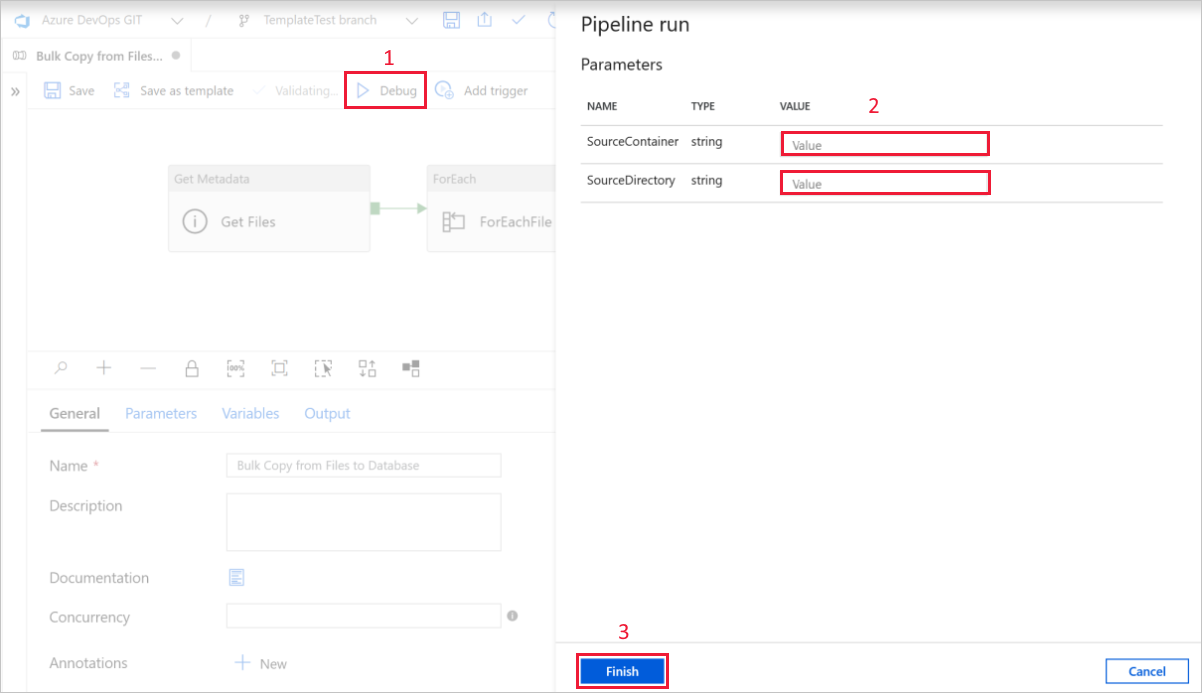

Select Debug, enter values for the parameters, and then select Finish.

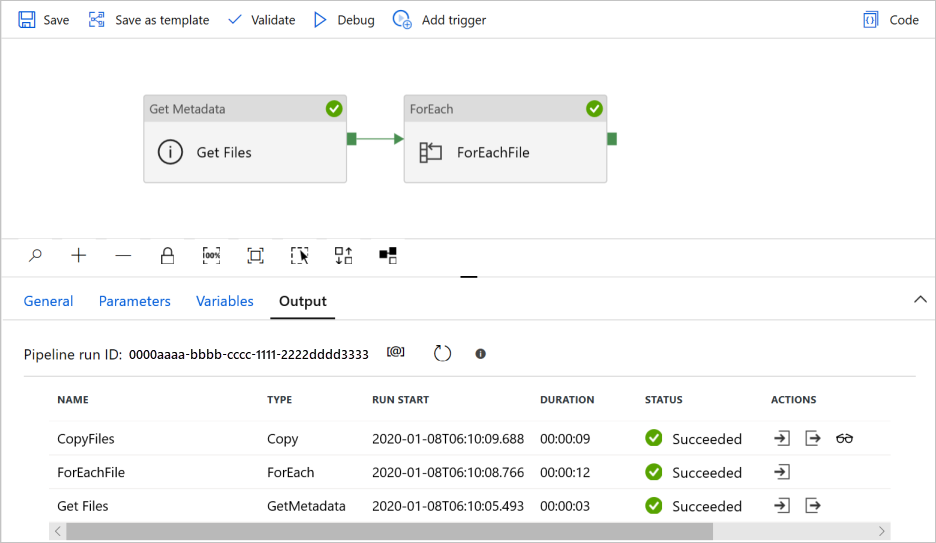

When the pipeline run completes successfully, you will see results similar to the following example: