This article outlines a few debugging options available for your Apache Spark application:

- Spark UI

- Driver logs

- Executor logs

For a walkthrough of diagnosing cost and performance issues, see Diagnose cost and performance issues using the Spark UI.

Spark UI

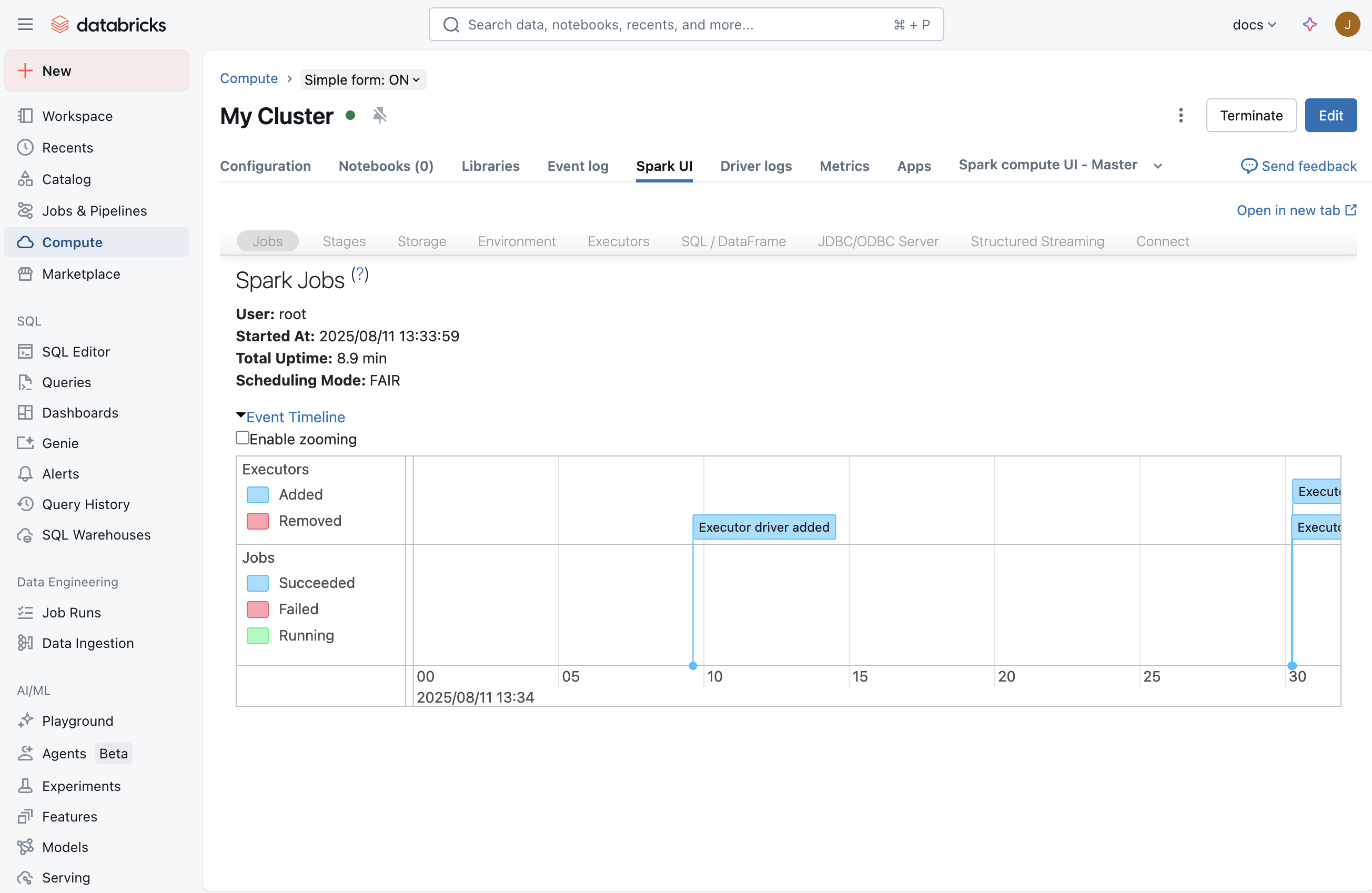

Once you start a job, the Spark UI shows information about what's happening in your application. To get to the Spark UI, select your compute from the Compute page, then click the Spark UI tab:

Streaming tab

Within the Spark UI, you see a Streaming tab if a streaming job is running on the compute. If no streaming job is running on the compute, the tab is not visible. In that case, skip to Driver logs to learn how to check for exceptions that might have happened while starting the streaming job.

This tab lets you check whether your streaming application is receiving any input events from your source. For example, you may see that the job receives 1000 events/second.

Note

For TextFileStream, the input is files rather than events, so the # of input events is always 0. In such cases, see the Completed batches section for more information.

If your application receives multiple input streams, click the Input Rate link to see the # of events received for each receiver.

Processing time

As you scroll down, find the graph for Processing Time. This is one of the key graphs for understanding the performance of your streaming job. As a general rule of thumb, it is good if you can process each batch within 80% of your batch interval.

If the average processing time is close to or greater than your batch interval, batches start queuing up. The resulting backlog keeps growing and can eventually bring down your streaming job.
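The rule of thumb above can be sketched as a small check. This is a minimal illustration, not part of the Spark UI or any Spark API: the function name `assess_batches`, its parameters, and the status labels are hypothetical, and it assumes you have copied recent processing times (in seconds) from the Streaming tab by hand.

```python
from statistics import mean


def assess_batches(processing_times_s, batch_interval_s, headroom=0.8):
    """Compare average processing time with the batch interval.

    processing_times_s: recent batch processing times in seconds
                        (assumed to be copied from the Streaming tab).
    batch_interval_s:   the configured batch interval in seconds.
    headroom:           fraction of the interval treated as comfortable
                        (0.8 mirrors the 80% rule of thumb).
    Returns a (status, ratio) tuple; the status labels are illustrative.
    """
    if not processing_times_s:
        raise ValueError("need at least one processing time")
    if batch_interval_s <= 0:
        raise ValueError("batch interval must be positive")

    ratio = mean(processing_times_s) / batch_interval_s
    if ratio >= 1.0:
        status = "falling behind"  # batches arrive faster than they finish
    elif ratio > headroom:
        status = "at risk"         # little slack left before a backlog forms
    else:
        status = "healthy"
    return status, ratio


status, ratio = assess_batches([4, 6, 5], batch_interval_s=10)
print(status, ratio)
```

A ratio at or above 1.0 means each batch takes at least as long as the interval, which is the condition under which a backlog builds up.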

Completed batches

Towards the end of the page, you will see a list of all the completed batches. The page displays details about the last 1000 batches that completed. From the table, you can get the # of events processed for each batch and their processing time. If you want to know more about what happened on one of the batches, you can click the batch link to get to the Batch details page.

Batch details page

The Batch details page has all the details about a batch. Two key things are:

- Input: Has details about the input to the batch. In this case, it has details about the Apache Kafka topic, partition, and offsets read by Spark Structured Streaming for this batch. For TextFileStream, you see a list of the file names that were read for this batch. This is the best way to start debugging a streaming application that reads from text files.

- Processing: You can click the link to the Job ID which has all the details about the processing done during this batch.

Job details page

The job details page shows a DAG visualization. This is useful to understand the order of operations and dependencies for every batch. For example, this could show that a batch read input from a Kafka direct stream followed by a flat map operation and then a map operation, and that the resulting stream was then used to update a global state using updateStateByKey.

Grayed boxes represent skipped stages. Spark skips stages that don't need to be recomputed, for example when the data is checkpointed or cached. In the previous streaming example, those stages correspond to the dependency on previous batches because of updateStateByKey. Because Spark Structured Streaming internally checkpoints the stream and reads from the checkpoint instead of depending on the previous batches, these stages are shown as grayed.

At the bottom of the page, you will also find the list of jobs that were executed for this batch. You can click the links in the description to drill further into the task level execution.

Task details page

This is the most granular level of debugging you can get into from the Spark UI for a Spark application. This page has all the tasks that were executed for this batch. If you are investigating performance issues of your streaming application, then this page provides information such as the number of tasks that were executed and where they were executed (on which executors) and shuffle information.

Tip

Ensure that the tasks are executed on multiple executors (nodes) in your compute so that processing has enough parallelism. If you have a single receiver, sometimes only one executor does all the work even though your compute has more than one executor.
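As a rough way to apply this tip, the sketch below counts how many tasks each executor ran. It assumes you have noted (Task ID, Executor ID) pairs from the task details page yourself; the function name `busiest_share` and the sample values are hypothetical and are not part of Spark.

```python
from collections import Counter


def busiest_share(tasks):
    """Return (executor_id, share) for the executor that ran the most tasks.

    tasks: iterable of (task_id, executor_id) pairs, assumed to be
           copied from the task details page.
    share: fraction of all tasks that ran on that executor.
    """
    tasks = list(tasks)
    if not tasks:
        raise ValueError("no tasks given")
    counts = Counter(executor_id for _task_id, executor_id in tasks)
    executor_id, n = counts.most_common(1)[0]
    return executor_id, n / len(tasks)


# Illustrative values only: three of four tasks ran on executor "1".
sample = [(0, "1"), (1, "1"), (2, "1"), (3, "2")]
print(busiest_share(sample))
```

A share close to 1.0 on a compute with several executors suggests that most of the work is landing on a single executor.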

Thread dump

A thread dump shows a snapshot of a JVM's thread states.

Thread dumps are useful in debugging a specific hanging or slow-running task. To view a specific task's thread dump in the Spark UI:

1. Click the Jobs tab.

2. In the Jobs table, find the target job that corresponds to the thread dump you want to see, and click the link in the Description column.

3. In the job's Stages table, find the target stage that corresponds to the thread dump you want to see, and click the link in the Description column.

4. In the stage's Tasks list, find the target task that corresponds to the thread dump you want to see, and note its Task ID and Executor ID values.

5. Click the Executors tab.

6. In the Executors table, find the row that contains the Executor ID value that you noted earlier. In that row, click the link in the Thread Dump column.

7. In the Thread dump for executor table, click the row where the Thread Name column contains TID followed by the Task ID value that you noted earlier. (If the task has finished running, you will not find a matching thread.) The task's thread dump is shown.
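If you copy the thread names out of the Thread dump for executor table, the matching step above can be sketched as a simple filter. This is an illustration under assumptions: the function name `find_task_threads` is hypothetical, the sample thread names are made up for the example, and the exact thread name format can differ between Spark versions.

```python
import re


def find_task_threads(thread_names, task_id):
    """Return the thread names that contain 'TID <task_id>'.

    The word boundaries keep task 12 from matching 'TID 123'.
    """
    pattern = re.compile(rf"\bTID {int(task_id)}\b")
    return [name for name in thread_names if pattern.search(name)]


# Illustrative thread names, not captured from a real compute.
names = [
    "Executor task launch worker for task 4.0 in stage 3.0 (TID 123)",
    "Executor task launch worker for task 0.0 in stage 1.0 (TID 12)",
    "dispatcher-event-loop-0",
]
print(find_task_threads(names, 12))
```

An empty result is expected when the task has already finished, because its thread is no longer running.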

Thread dumps are also useful for debugging issues where the driver appears to be hanging (for example, no Spark progress bars are showing) or making no progress on queries (for example, Spark progress bars are stuck at 100%). To view the driver's thread dump in the Spark UI:

1. Click the Executors tab.

2. In the Executors table, in the driver row, click the link in the Thread Dump column. The driver's thread dump is shown.

Driver logs

Driver logs are helpful in the following cases:

- Exceptions: Sometimes, you may not see the Streaming tab in the Spark UI. This happens when the streaming job failed to start because of an exception. You can drill into the driver logs to look at the stack trace of the exception. In other cases, the streaming job starts properly, but the batches never reach the Completed batches section. They might all be in a processing or failed state. In these cases, driver logs also help you understand the nature of the underlying issues.

- Prints: Any print statements that are part of the DAG show up in the logs too.
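When a driver log is long, a small filter can help locate the exception lines mentioned above. The sketch below assumes you have the log as plain text (for example, downloaded or pasted); the function name `find_exceptions`, the regular expression, and the sample log lines are all hypothetical illustrations, and real driver logs may be formatted differently.

```python
import re

# Matches Java-style class names ending in Exception or Error,
# such as java.lang.IllegalArgumentException.
EXCEPTION_CLASS = re.compile(r"\b[\w.$]+(?:Exception|Error)\b")


def find_exceptions(log_text):
    """Return (line_number, line) pairs that mention an exception class."""
    hits = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        if EXCEPTION_CLASS.search(line):
            hits.append((number, line.strip()))
    return hits


# Made-up log excerpt for illustration only.
sample_log = """INFO StreamingContext: starting
java.lang.IllegalArgumentException: requirement failed
    at scala.Predef$.require(Predef.scala:281)
"""
for number, line in find_exceptions(sample_log):
    print(number, line)
```

The line numbers give you a starting point for reading the surrounding stack trace in the full log.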

Note

Who can access driver logs depends on the access mode of the compute resource. For compute with Standard access mode, only workspace admins can access driver logs. For compute with Dedicated access mode, the dedicated user or group and workspace admins can access the driver logs.

Executor logs

Executor logs are helpful if certain tasks are misbehaving and you want to see the logs for those specific tasks. From the task details page described above, you can find the executor where the task ran. Once you have that, go to the compute UI page, click the # nodes, and then the master. The master page lists all the workers. Choose the worker where the suspicious task ran, and then open its log4j output.

Note

Executor logs are not available for compute with Standard access mode. For compute with Dedicated access mode, the dedicated user or group and workspace admins can access the executor logs.