These features and Azure Databricks platform improvements were released in July 2020.

Note

The release dates and content listed below correspond to the actual deployment on the Azure Public Cloud in most cases.

This page provides the release history of the Azure Databricks service on the Azure Public Cloud for your reference. It may not be consistent with the actual deployment on Azure operated by 21Vianet.

Note

Releases are staged. Your Azure Databricks account may not be updated until up to a week after the initial release date.

Web terminal (Public Preview)

July 29 - August 4, 2020: Version 3.25

Web terminal provides a convenient and highly interactive way for users with CAN ATTACH TO permission on a cluster to run shell commands, including editors such as Vim or Emacs. Example uses of the web terminal include monitoring resource usage and installing Linux packages.

For details, see Run shell commands in Azure Databricks web terminal.

New, more secure global init script framework (Public Preview)

July 29 - August 4, 2020: Version 3.25

The new global init script framework brings significant improvements over legacy global init scripts:

- Init scripts are more secure, requiring admin permissions to create, view, and delete.

- Script-related launch failures are logged.

- You can set the execution order of multiple init scripts.

- Init scripts can reference cluster-related environment variables.

- Init scripts can be created and managed using the admin settings page or the new Global Init Scripts REST API.

Databricks recommends that you migrate existing legacy global init scripts to the new framework to take advantage of these improvements.

For details, see Global init scripts.
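As a rough illustration of managing scripts through the REST API, the following Python sketch builds the JSON body for a create request. The endpoint path, the field names (`name`, `script`, `position`, `enabled`), the Base64 encoding of the script body, and the `DB_CLUSTER_ID` environment variable are assumptions that are not stated on this page; confirm them in the Global Init Scripts API reference before relying on them. The script name and contents are hypothetical.

```python
import base64
import json

# Assumed endpoint path for the Global Init Scripts REST API.
GLOBAL_INIT_SCRIPTS_PATH = "/api/2.0/global-init-scripts"


def build_global_init_script_request(name, script_text, position=0, enabled=True):
    """Build the JSON body for creating a global init script.

    The field names used here are assumptions; check the API reference.
    """
    if position < 0:
        raise ValueError("position must be zero or greater")
    # Assumption: the script body is sent Base64-encoded, not as plain text.
    encoded = base64.b64encode(script_text.encode("utf-8")).decode("ascii")
    return {
        "name": name,
        "script": encoded,
        # Lower positions are assumed to run earlier in the execution order.
        "position": position,
        "enabled": enabled,
    }


# Hypothetical script that reads a cluster-related environment variable.
EXAMPLE_SCRIPT = '#!/bin/bash\necho "Starting on cluster $DB_CLUSTER_ID"\n'

body = build_global_init_script_request("log-cluster-id", EXAMPLE_SCRIPT, position=0)
print("POST", GLOBAL_INIT_SCRIPTS_PATH)
print(json.dumps(body, indent=2))
```

The sketch only prepares the request. Sending it requires an admin token, in line with the admin-only permissions described above.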

IP access lists now GA

July 29 - August 4, 2020: Version 3.25

The IP Access List API is now generally available.

The GA version includes one change, which is the renaming of the list_type values:

- WHITELIST to ALLOW

- BLACKLIST to BLOCK

Use the IP Access List API to configure your Azure Databricks workspaces so that users connect to the service only through existing corporate networks with a secure perimeter. Azure Databricks admins can use the IP Access List API to define a set of approved IP addresses, including allow and block lists. All incoming access to the web application and REST APIs requires that the user connect from an authorized IP address, guaranteeing that workspaces cannot be accessed from a public network like a coffee shop or an airport unless your users use VPN.
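The following Python sketch shows one way a client might prepare a request body for an IP access list while mapping the pre-GA `list_type` values to their GA names. The body field names (`label`, `list_type`, `ip_addresses`) and the endpoint path in the comment are assumptions, and the addresses come from ranges reserved for documentation; treat it as a sketch, not as the API's definitive contract.

```python
import ipaddress

# Pre-GA list_type values and their GA replacements.
LEGACY_LIST_TYPES = {"WHITELIST": "ALLOW", "BLACKLIST": "BLOCK"}


def build_ip_access_list_request(label, list_type, ip_addresses):
    """Build a request body, assumed to be sent to /api/2.0/ip-access-lists."""
    # Translate a legacy value if one is passed; leave GA values untouched.
    list_type = LEGACY_LIST_TYPES.get(list_type, list_type)
    if list_type not in ("ALLOW", "BLOCK"):
        raise ValueError(f"unsupported list_type: {list_type}")
    for entry in ip_addresses:
        # Raises ValueError for anything that is not an IP address or CIDR range.
        ipaddress.ip_network(entry, strict=False)
    return {
        "label": label,
        "list_type": list_type,
        "ip_addresses": list(ip_addresses),
    }


# Hypothetical corporate ranges (documentation-only address blocks).
request = build_ip_access_list_request(
    "office-vpn", "WHITELIST", ["203.0.113.0/24", "198.51.100.7"]
)
print(request)
```

Validating the entries locally with `ipaddress` catches malformed ranges before the request is sent.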

This feature requires the Premium plan.

For more information, see Configure IP access lists for workspaces.

New file upload dialog

July 29 - August 4, 2020: Version 3.25

You can now upload small tabular data files (like CSVs) and access them from a notebook by selecting Add data from the notebook File menu. Generated code shows you how to load the data into Pandas or DataFrames. Admins can disable this feature on the Admin Console Advanced tab.

For more information, see Browse files in DBFS.

SCIM API filter and sort improvements

July 29 - August 4, 2020: Version 3.25

The SCIM API now includes these filtering and sorting improvements:

- Admin users can filter users on the active attribute.

- All users can sort results using the sortBy and sortOrder query parameters. The default is to sort by ID.
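As an illustration of the filter and sort parameters above, this Python sketch assembles a query URL for the SCIM Users endpoint. The endpoint path, the `active eq true` filter syntax, the `ascending` and `descending` values for `sortOrder`, and the workspace host are assumptions drawn from general SCIM conventions and are not stated on this page.

```python
from urllib.parse import urlencode

# Assumed path of the SCIM Users endpoint; confirm it in the SCIM API reference.
SCIM_USERS_PATH = "/api/2.0/preview/scim/v2/Users"


def build_scim_users_url(host, active=None, sort_by=None, sort_order=None):
    """Return a SCIM Users URL with optional filter and sort parameters."""
    params = {}
    if active is not None:
        # SCIM filter expression on the active attribute (admin users only).
        params["filter"] = f"active eq {'true' if active else 'false'}"
    if sort_by is not None:
        params["sortBy"] = sort_by
    if sort_order is not None:
        if sort_order not in ("ascending", "descending"):
            raise ValueError("sort_order must be 'ascending' or 'descending'")
        params["sortOrder"] = sort_order
    url = host.rstrip("/") + SCIM_USERS_PATH
    query = urlencode(params)
    return f"{url}?{query}" if query else url


# Hypothetical workspace host.
url = build_scim_users_url(
    "https://example.azuredatabricks.net",
    active=True,
    sort_by="userName",
    sort_order="descending",
)
print(url)
```

When no sort parameters are passed, the URL carries none and the service's default ordering applies.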

Azure Government regions added

July 25, 2020

Azure Databricks recently became available in the US Gov Arizona and US Gov Virginia regions for US government entities and their partners.

Databricks Runtime 7.1 GA

July 21, 2020

Databricks Runtime 7.1 brings many additional features and improvements over Databricks Runtime 7.0, including:

- Google BigQuery connector

- %pip commands to manage Python libraries installed in a notebook session

- Koalas installed

- Many Delta Lake improvements, including:

  - Setting user-defined commit metadata

  - Getting the version of the last commit written by the current SparkSession

  - Converting Parquet tables created by Structured Streaming using the _spark_metadata transaction log

  - MERGE INTO performance improvements

For details, see the complete Databricks Runtime 7.1 (EoS) release notes.

Databricks Runtime 7.1 ML GA

July 21, 2020

Databricks Runtime 7.1 for Machine Learning is built on top of Databricks Runtime 7.1 and brings the following new features and library changes:

- pip and conda magic commands enabled by default

- spark-tensorflow-distributor: 0.1.0

- pillow 7.0.0 -> 7.1.0

- pytorch 1.5.0 -> 1.5.1

- torchvision 0.6.0 -> 0.6.1

- horovod 0.19.1 -> 0.19.5

- mlflow 1.8.0 -> 1.9.1

For details, see the complete Databricks Runtime 7.1 for ML (EoS) release notes.

Databricks Runtime 7.1 Genomics GA

July 21, 2020

Databricks Runtime 7.1 for Genomics is built on top of Databricks Runtime 7.1 and brings the following new features:

- LOCO transformation

- GloWGR output reshaping function

- RNASeq outputs unpaired alignments

Databricks Connect 7.1 (Public Preview)

July 17, 2020

Databricks Connect 7.1 is now in public preview.

IP Access List API updates

July 15-21, 2020: Version 3.24

The following IP Access List API properties have changed:

- updator_user_id to updated_by

- creator_user_id to created_by

Python notebooks now support multiple outputs per cell

July 15-21, 2020: Version 3.24

Python notebooks now support multiple outputs per cell. This means you can have any number of display, displayHTML, or print statements in a cell. Take advantage of the ability to view the raw data and the plot in the same cell, or all of the outputs that succeeded before you hit an error.

This feature requires Databricks Runtime 7.1 or above and is disabled by default in Databricks Runtime 7.1. Enable it by setting spark.databricks.workspace.multipleResults.enabled true.

View notebook code and results cells side by side

July 15-21, 2020: Version 3.24

The new Side-by-Side notebook display option lets you view code and results next to each other. This display option joins the "Standard" option (formerly "Code") and the "Results Only" option.

Pause job schedules

July 15-21, 2020: Version 3.24

Job schedules now have Pause and Unpause buttons, making it easy to pause and resume jobs. You can now change a job schedule without additional job runs starting while you make the changes. Current runs and runs triggered by Run Now are not affected. For details, see Pause and resume job triggers.

Jobs API endpoints validate run ID

July 15-21, 2020: Version 3.24

The jobs/runs/cancel and jobs/runs/output API endpoints now validate that the run_id parameter is valid. For invalid parameters these API endpoints now return HTTP status code 400 instead of code 500.
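One practical effect of this change is that a client can tell a bad request apart from a server-side failure. The Python sketch below shows a retry policy a caller might adopt; the function names and the policy itself are illustrative assumptions and are not part of the Jobs API.

```python
def should_retry(status_code):
    """Return True when a failed call is worth retrying unchanged.

    A 400 response means the request itself (for example, the run_id) is
    invalid, so repeating it cannot succeed. Only 5xx responses are treated
    as possibly transient.
    """
    return 500 <= status_code < 600


def describe_response(status_code):
    """Map a status code to a short, human-readable outcome."""
    if 200 <= status_code < 300:
        return "success"
    if status_code == 400:
        return "invalid request: check the run_id parameter"
    if should_retry(status_code):
        return "server error: retry later"
    return "request failed"


for code in (200, 400, 500):
    print(code, describe_response(code))
```

Before this release, an invalid `run_id` surfaced as a 500, which a policy like this one would have retried needlessly.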

Microsoft Entra ID tokens to authorize to the Databricks REST API GA

July 15-21, 2020: Version 3.24

Using Microsoft Entra ID tokens to authenticate to the Workspace API is now generally available. Microsoft Entra ID tokens enable you to automate the creation and setup of new workspaces. Service principals are application objects in Microsoft Entra ID. You can also use service principals within your Azure Databricks workspaces to automate workflows. For details, see Authorize access to Azure Databricks resources.

Format SQL in notebooks automatically

July 15-21, 2020: Version 3.24

You can now format SQL notebook cells from a keyboard shortcut, the command context menu, and the notebook Edit menu (select Edit > Format SQL Cells). SQL formatting makes it easy to read and maintain code with little effort. It works for SQL notebooks as well as %sql cells.

Reproducible order of installation for Maven and CRAN libraries

July 1-9, 2020: Version 3.23

Azure Databricks now processes Maven and CRAN libraries in the order that they were installed on the cluster.

Take control of your users' personal access tokens with the Token Management API (Public Preview)

July 1-9, 2020: Version 3.23

Now Azure Databricks administrators can use the Token Management API to manage their users' Azure Databricks personal access tokens:

- Monitor and revoke users' personal access tokens.

- Control the lifetime of future tokens in your workspace.

- Control which users can create and use tokens.

See Monitor and revoke personal access tokens.
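As a sketch of the monitor-and-revoke workflow, the Python below picks out tokens whose remaining lifetime exceeds a chosen limit and builds the path used to revoke each one. The field names (`token_id`, `created_by_username`, `expiry_time` in epoch milliseconds, with a negative value meaning no expiry) and the endpoint path are assumptions about the Token Management API, and the sample records are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def to_epoch_ms(moment):
    """Convert a timezone-aware datetime to epoch milliseconds."""
    return int(moment.timestamp() * 1000)


def tokens_exceeding_lifetime(token_infos, max_lifetime, now):
    """Return IDs of tokens that never expire or expire after now + max_lifetime."""
    cutoff_ms = to_epoch_ms(now + max_lifetime)
    flagged = []
    for info in token_infos:
        expiry_ms = info["expiry_time"]
        # Assumption: a negative expiry_time marks a token with no expiry.
        if expiry_ms < 0 or expiry_ms > cutoff_ms:
            flagged.append(info["token_id"])
    return flagged


def revoke_path(token_id):
    """Assumed path for a DELETE request that revokes one token."""
    return f"/api/2.0/token-management/tokens/{token_id}"


# Hypothetical records, shaped like an assumed token list response.
now = datetime(2020, 7, 9, tzinfo=timezone.utc)
sample_tokens = [
    {"token_id": "tok-a", "created_by_username": "a@example.com",
     "expiry_time": to_epoch_ms(now + timedelta(days=30))},
    {"token_id": "tok-b", "created_by_username": "b@example.com",
     "expiry_time": -1},
    {"token_id": "tok-c", "created_by_username": "c@example.com",
     "expiry_time": to_epoch_ms(now + timedelta(days=400))},
]

flagged = tokens_exceeding_lifetime(sample_tokens, timedelta(days=90), now)
for token_id in flagged:
    print("DELETE", revoke_path(token_id))
```

Passing `now` explicitly keeps the selection deterministic, which makes the policy easy to check before any token is actually revoked.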

Restore cut notebook cells

July 1-9, 2020: Version 3.23

You can now restore notebook cells that have been cut either by using the (Z) keyboard shortcut or by selecting Edit > Undo Cut Cells. This functionality is analogous to that for undoing deleted cells.

Assign jobs CAN MANAGE permission to non-admin users

July 1-9, 2020: Version 3.23

You can now assign the CAN MANAGE permission for jobs to non-admin users and groups. This permission level allows users to manage all settings on the job, including assigning permissions, changing the owner, and changing the cluster configuration (for example, adding libraries and modifying the cluster specification). See Control access to a job.

Non-admin Azure Databricks users can view and filter by username using the SCIM API

July 1-9, 2020: Version 3.23

Non-admin users can now view usernames and filter users by username using the SCIM /Users endpoint.

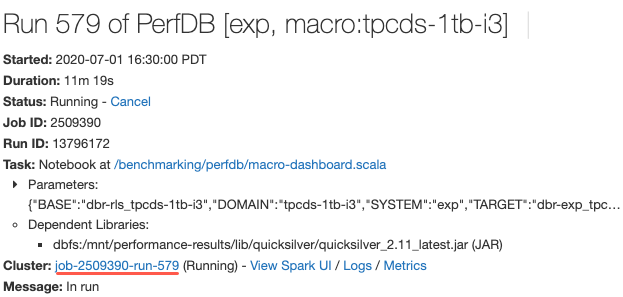

Link to view cluster specification when you view job run details

July 1-9, 2020: Version 3.23

When you view the details for a job run, you can now click a link to the cluster configuration page to view the cluster specification. Previously, you had to copy the job ID from the URL and search for it in the cluster list.