This article shows you how you can protect Azure Kubernetes Service (AKS) clusters by using Azure Firewall to secure outbound and inbound traffic.

Background

Azure Kubernetes Service (AKS) offers a managed Kubernetes cluster on Azure. For more information, see Azure Kubernetes Service.

Despite AKS being a fully managed solution, it doesn't offer a built-in solution to secure ingress and egress traffic between the cluster and external networks. Azure Firewall offers a solution to this.

AKS clusters are deployed on a virtual network. This network can be managed (created by AKS) or custom (preconfigured by the user beforehand). In either case, the cluster has outbound dependencies on services outside of that virtual network (the service has no inbound dependencies). For management and operational purposes, nodes in an AKS cluster need to access certain ports and fully qualified domain names (FQDNs) that describe these outbound dependencies. The nodes rely on this access to communicate with the Kubernetes API server, download and install core Kubernetes cluster components and node security updates, pull base system container images from Microsoft Container Registry (MCR), and so on. These outbound dependencies are almost entirely defined with FQDNs, which don't have static addresses behind them. The lack of static addresses means that Network Security Groups (NSGs) can't be used to lock down outbound traffic from an AKS cluster. For this reason, by default, AKS clusters have unrestricted outbound (egress) Internet access. This level of network access allows the nodes and services you run to access external resources as needed.

However, in a production environment, communications with a Kubernetes cluster should be protected against data exfiltration and other vulnerabilities. All incoming and outgoing network traffic must be monitored and controlled based on a set of security rules. To do this, you have to restrict egress traffic, but a limited number of ports and addresses must remain accessible so that cluster maintenance tasks stay healthy and the outbound dependencies mentioned previously are satisfied.

The simplest solution uses a firewall device that can control outbound traffic based on domain names. A firewall typically establishes a barrier between a trusted network and an untrusted network, such as the Internet. Azure Firewall, for example, can restrict outbound HTTP and HTTPS traffic based on the FQDN of the destination. This gives you fine-grained egress traffic control while still allowing access to the FQDNs that make up an AKS cluster's outbound dependencies (something that NSGs can't do). Likewise, you can control ingress traffic and improve security by enabling threat intelligence-based filtering on an Azure Firewall deployed to a shared perimeter network. This filtering can provide alerts and deny traffic to and from known malicious IP addresses and domains.

See the following video by Abhinav Sriram for a quick overview on how this works in practice on a sample environment:

You can download a zip file from the Download Center that contains a bash script file and a yaml file to automatically configure the sample environment used in the video. It configures Azure Firewall to protect both ingress and egress traffic. The following guides walk through each step of the script in more detail so you can set up a custom configuration.

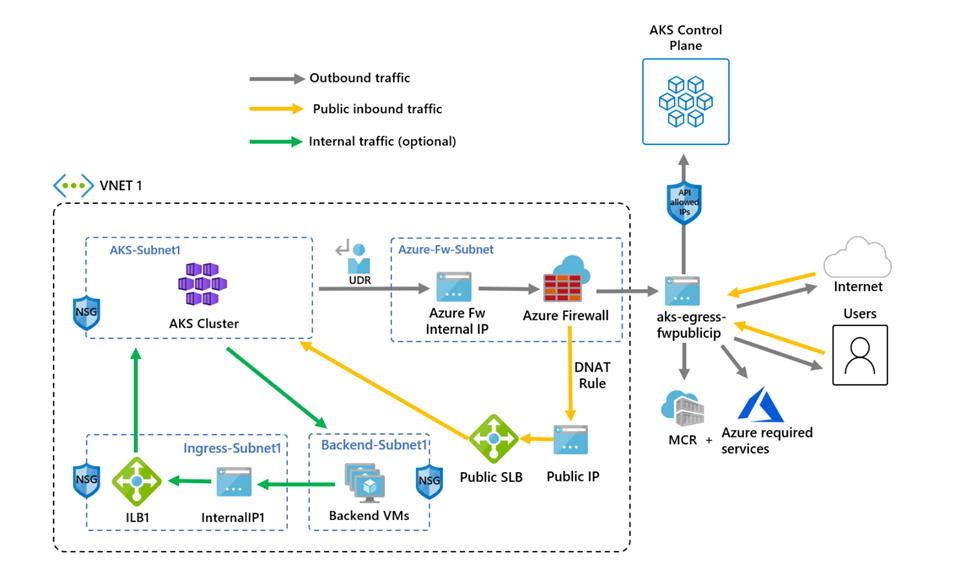

The following diagram shows the sample environment from the video that the script and guide configure:

There's one difference between the script and the following guide. The script uses managed identities, but the guide uses a service principal. This shows you two different ways to create an identity to manage and create cluster resources.

Restrict egress traffic using Azure Firewall

Set configuration via environment variables

Define a set of environment variables to be used when creating resources.

PREFIX="aks-egress"

RG="${PREFIX}-rg"

LOC="chinaeast"

PLUGIN=azure

AKSNAME="${PREFIX}"

VNET_NAME="${PREFIX}-vnet"

AKSSUBNET_NAME="aks-subnet"

# DO NOT CHANGE FWSUBNET_NAME - This is currently a requirement for Azure Firewall.

FWSUBNET_NAME="AzureFirewallSubnet"

FWNAME="${PREFIX}-fw"

FWPUBLICIP_NAME="${PREFIX}-fwpublicip"

FWIPCONFIG_NAME="${PREFIX}-fwconfig"

FWROUTE_TABLE_NAME="${PREFIX}-fwrt"

FWROUTE_NAME="${PREFIX}-fwrn"

FWROUTE_NAME_INTERNET="${PREFIX}-fwinternet"
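Every later command depends on these variables, and an unset one can expand to an empty string and produce a confusing CLI error. The following is a minimal sketch of a guard you could run before continuing. The `require_vars` helper is hypothetical and isn't part of the original script.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original script): fail early if any
# variable needed by later commands is unset or empty.
require_vars() {
  local missing=0 name value
  for name in "$@"; do
    # Indirect lookup: read the value of the variable whose name is in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_vars PREFIX RG LOC VNET_NAME AKSSUBNET_NAME FWSUBNET_NAME FWNAME || exit 1` stops the script before any resource is created.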

Create a virtual network with multiple subnets

Create a virtual network with two separate subnets, one for the cluster, one for the firewall. Optionally you could also create one for internal service ingress.

Create a resource group to hold all of the resources.

# Create Resource Group

az group create --name $RG --location $LOC

Create the virtual network that hosts the AKS cluster and the Azure Firewall. Each has its own subnet. Let's start with the AKS network.

# Dedicated virtual network with AKS subnet

az network vnet create \

--resource-group $RG \

--name $VNET_NAME \

--location $LOC \

--address-prefixes 10.42.0.0/16 \

--subnet-name $AKSSUBNET_NAME \

--subnet-prefix 10.42.1.0/24

# Dedicated subnet for Azure Firewall (the subnet name cannot be changed)

az network vnet subnet create \

--resource-group $RG \

--vnet-name $VNET_NAME \

--name $FWSUBNET_NAME \

--address-prefix 10.42.2.0/24
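Both subnet prefixes have to fall inside the virtual network's address space. If you change the example ranges, a quick local check can catch a mismatch before the CLI rejects it. The following is a sketch only. The `ip_to_int` and `cidr_contains` helpers are hypothetical names, they handle IPv4 only, and they assume octets are written without leading zeros.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  local outer_ip=${1%/*} outer_len=${1#*/}
  local inner_ip=${2%/*} inner_len=${2#*/}
  local mask
  # A less specific (shorter) prefix can never fit inside a more specific one.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  # Network mask of the outer range, for example /16 -> 0xFFFF0000.
  mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$outer_ip") & mask )) -eq $(( $(ip_to_int "$inner_ip") & mask )) ]
}
```

With the ranges used in this guide, `cidr_contains 10.42.0.0/16 10.42.1.0/24` and `cidr_contains 10.42.0.0/16 10.42.2.0/24` both succeed.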

Create and set up an Azure Firewall with a UDR

Azure Firewall inbound and outbound rules must be configured. The main purpose of the firewall is to enable organizations to configure granular ingress and egress traffic rules into and out of the AKS Cluster.

Important

If your cluster or application creates a large number of outbound connections directed to the same or a small subset of destinations, you might require more firewall frontend IPs to avoid maxing out the ports per frontend IP. For more information on how to create an Azure Firewall with multiple IPs, see the Azure Firewall documentation on deploying a firewall with multiple public IP addresses.

Create a standard SKU public IP resource that is used as the Azure Firewall frontend address.

az network public-ip create -g $RG -n $FWPUBLICIP_NAME -l $LOC --sku "Standard"

Install the Azure Firewall preview CLI extension, and then deploy the firewall.

# Install Azure Firewall preview CLI extension

az extension add --name azure-firewall

# Deploy Azure Firewall

az network firewall create -g $RG -n $FWNAME -l $LOC --enable-dns-proxy true

The IP address created earlier can now be assigned to the firewall frontend.

Note

Assigning the public IP address to the Azure Firewall may take a few minutes. To use FQDNs in network rules, DNS proxy must be enabled. When it's enabled, the firewall listens on port 53 and forwards DNS requests to its configured DNS server, which allows the firewall to resolve those FQDNs automatically.

# Configure Firewall IP Config

az network firewall ip-config create -g $RG -f $FWNAME -n $FWIPCONFIG_NAME --public-ip-address $FWPUBLICIP_NAME --vnet-name $VNET_NAME

When the previous command has succeeded, save the firewall frontend IP address for configuration later.

# Capture Firewall IP Address for Later Use

FWPUBLIC_IP=$(az network public-ip show -g $RG -n $FWPUBLICIP_NAME --query "ipAddress" -o tsv)

FWPRIVATE_IP=$(az network firewall show -g $RG -n $FWNAME --query "ipConfigurations[0].privateIPAddress" -o tsv)

# set fw as vnet dns server so dns queries are visible in fw logs

az network vnet update -g $RG --name $VNET_NAME --dns-servers $FWPRIVATE_IP
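The two captured addresses feed the route table and the DNAT rule later on. If a query returns nothing, for example because the IP configuration hasn't finished, the later commands fail in less obvious ways. The following sketch checks that a captured value looks like an IPv4 address. The `is_ipv4` helper is a hypothetical addition, not part of the original script.

```shell
#!/usr/bin/env bash
# Return 0 if $1 is a dotted-quad IPv4 address with each octet in 0-255.
is_ipv4() {
  local a b c d extra octet
  # Reject empty input, non-digit characters, and empty octets.
  case $1 in
    ''|*[!0-9.]*|.*|*.|*..*) return 1 ;;
  esac
  IFS=. read -r a b c d extra <<EOF
$1
EOF
  # Exactly four octets: the fourth must exist and nothing may follow it.
  [ -n "$d" ] && [ -z "$extra" ] || return 1
  for octet in "$a" "$b" "$c" "$d"; do
    [ "$octet" -le 255 ] || return 1
  done
}
```

A possible use is `is_ipv4 "$FWPRIVATE_IP" || { echo "firewall private IP not available yet" >&2; exit 1; }`, repeated for `$FWPUBLIC_IP`.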

Note

If you use secure access to the AKS API server with authorized IP address ranges, you need to add the firewall public IP into the authorized IP range.

Create a UDR with a hop to Azure Firewall

Azure automatically routes traffic between Azure subnets, virtual networks, and on-premises networks. If you want to change any of Azure's default routing, you do so by creating a route table.

Create an empty route table to be associated with a given subnet. The route table will define the next hop as the Azure Firewall created previously. Each subnet can have zero or one route table associated to it.

# Create UDR and add a route for Azure Firewall

az network route-table create -g $RG -l $LOC --name $FWROUTE_TABLE_NAME

az network route-table route create -g $RG --name $FWROUTE_NAME --route-table-name $FWROUTE_TABLE_NAME --address-prefix 0.0.0.0/0 --next-hop-type VirtualAppliance --next-hop-ip-address $FWPRIVATE_IP

az network route-table route create -g $RG --name $FWROUTE_NAME_INTERNET --route-table-name $FWROUTE_TABLE_NAME --address-prefix $FWPUBLIC_IP/32 --next-hop-type Internet

See virtual network route table documentation about how you can override Azure's default system routes or add more routes to a subnet's route table.
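Azure picks the route for a packet by longest prefix match, so the /32 route for the firewall's public IP takes precedence over the 0.0.0.0/0 route for that single address. The following sketch simulates that selection locally to make the interaction of the two routes easier to see. It's an illustration only, not how Azure implements routing, and the `select_route` helper and its `prefix=next-hop-type` argument format are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the next-hop type of the most specific route that matches destination $1.
# The remaining arguments are "prefix=next-hop-type" pairs.
select_route() {
  local dest best_len=-1 best_hop="" route prefix hop len mask
  dest=$(ip_to_int "$1")
  shift
  for route in "$@"; do
    prefix=${route%%=*}
    hop=${route#*=}
    len=${prefix#*/}
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    # Keep the route if it matches and is more specific than the best so far.
    if [ $(( dest & mask )) -eq $(( $(ip_to_int "${prefix%/*}") & mask )) ] &&
       [ "$len" -gt "$best_len" ]; then
      best_len=$len
      best_hop=$hop
    fi
  done
  [ -n "$best_hop" ] && echo "$best_hop"
}
```

With the two routes from this guide and an example firewall public IP of 203.0.113.32, `select_route 203.0.113.32 0.0.0.0/0=VirtualAppliance 203.0.113.32/32=Internet` selects `Internet`, while any other destination falls through to `VirtualAppliance`.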

Adding firewall rules

Note

Applications outside of the kube-system or gatekeeper-system namespaces that need to talk to the API server require an additional network rule that allows TCP communication to port 443 for the API server IP, in addition to the application rule for the fqdn-tag AzureKubernetesService.

You can use the following three network rules to configure your firewall. You might need to adapt these rules based on your deployment. The first rule allows access to port 1194 via UDP. The second rule allows access to port 9000 via TCP. Both of these rules only allow traffic destined to the Azure region CIDR that we're using, in this case China East.

Finally, we add a third network rule that opens port 123 via UDP to an Internet time server FQDN (for example, ntp.ubuntu.com). Using an FQDN in a network rule is a specific feature of Azure Firewall, and you need to replace the FQDN if you use a different time server.

After setting the network rules, we also add an application rule using the AzureKubernetesService FQDN tag, which covers the needed FQDNs accessible through TCP port 443 and port 80. In addition, you might need to configure more network and application rules based on your deployment. For more information, see Outbound network and FQDN rules for Azure Kubernetes Service (AKS) clusters.

# Add FW Network Rules

az network firewall network-rule create -g $RG -f $FWNAME --collection-name 'aksfwnr' -n 'apiudp' --protocols 'UDP' --source-addresses '*' --destination-addresses "AzureCloud.$LOC" --destination-ports 1194 --action allow --priority 100

az network firewall network-rule create -g $RG -f $FWNAME --collection-name 'aksfwnr' -n 'apitcp' --protocols 'TCP' --source-addresses '*' --destination-addresses "AzureCloud.$LOC" --destination-ports 9000

az network firewall network-rule create -g $RG -f $FWNAME --collection-name 'aksfwnr' -n 'time' --protocols 'UDP' --source-addresses '*' --destination-fqdns 'ntp.ubuntu.com' --destination-ports 123

# Add FW Application Rules

az network firewall application-rule create -g $RG -f $FWNAME --collection-name 'aksfwar' -n 'fqdn' --source-addresses '*' --protocols 'http=80' 'https=443' --fqdn-tags "AzureKubernetesService" --action allow --priority 100

# set fw application rule to allow kubernetes to reach storage and image resources

az network firewall application-rule create -g $RG -f $FWNAME --collection-name 'aksfwarweb' -n 'storage' --source-addresses '10.42.1.0/24' --protocols 'https=443' --target-fqdns '*.blob.storage.chinacloudapi.cn' '*.blob.core.chinacloudapi.cn' --action allow --priority 101

az network firewall application-rule create -g $RG -f $FWNAME --collection-name 'aksfwarweb' -n 'website' --source-addresses '10.42.1.0/24' --protocols 'https=443' --target-fqdns 'ghcr.io' '*.docker.io' '*.docker.com' '*.githubusercontent.com'

See Azure Firewall documentation to learn more about the Azure Firewall service.
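The application rules above mix exact names such as ghcr.io with wildcard entries such as *.docker.io. The following sketch shows one way to check locally which hostnames a list of target FQDNs would cover, using shell glob matching. This is an assumption about how the wildcards read, not a description of Azure Firewall's actual matching logic, and the `fqdn_allowed` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 if host $1 matches any of the remaining FQDN patterns.
fqdn_allowed() {
  local host=$1 pattern
  shift
  for pattern in "$@"; do
    # An unquoted pattern in a case arm is treated as a glob, so "*" matches
    # any run of characters, including dots.
    case $host in
      $pattern) return 0 ;;
    esac
  done
  return 1
}
```

Under this reading, `fqdn_allowed registry-1.docker.io 'ghcr.io' '*.docker.io'` succeeds, while the bare name `docker.io` isn't covered by `*.docker.io` and would need its own entry.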

Associate the route table to AKS

To associate the cluster with the firewall, the cluster's dedicated subnet must reference the route table created previously. To make the association, issue a command against the virtual network that holds both the cluster and the firewall to update the route table of the cluster's subnet.

# Associate route table with next hop to Firewall to the AKS subnet

az network vnet subnet update -g $RG --vnet-name $VNET_NAME --name $AKSSUBNET_NAME --route-table $FWROUTE_TABLE_NAME

Deploy AKS with outbound type of UDR to the existing network

Now an AKS cluster can be deployed into the existing virtual network. You also use the outbound type userDefinedRouting. This setting ensures that any outbound traffic is forced through the firewall and that no other egress paths exist (by default, the Load Balancer outbound type is used).

The target subnet to be deployed into is defined with the environment variable, $SUBNETID. We didn't define the $SUBNETID variable in the previous steps. To set the value for the subnet ID, you can use the following command:

SUBNETID=$(az network vnet subnet show -g $RG --vnet-name $VNET_NAME --name $AKSSUBNET_NAME --query id -o tsv)

You define the outbound type to use the UDR that already exists on the subnet. This configuration enables AKS to skip the setup and IP provisioning for the load balancer.

Important

For more information on outbound type UDR including limitations, see egress outbound type UDR.

Tip

Additional features can be added to the cluster deployment such as Private Cluster or changing the OS SKU.

The AKS feature for API server authorized IP ranges can be added to limit API server access to only the firewall's public endpoint. The authorized IP ranges feature is denoted in the diagram as optional. When enabling the authorized IP range feature to limit API server access, your developer tools must use a jumpbox from the firewall's virtual network or you must add all developer endpoints to the authorized IP range.

az aks create -g $RG -n $AKSNAME -l $LOC \

--node-count 3 \

--network-plugin azure \

--outbound-type userDefinedRouting \

--vnet-subnet-id $SUBNETID \

--api-server-authorized-ip-ranges $FWPUBLIC_IP

Note

To create and use your own VNet and route table with the kubenet network plugin, you need to use a user-assigned managed identity. For a system-assigned managed identity, the identity ID can't be retrieved before the cluster is created, which causes a delay in the role assignment taking effect.

To create and use your own VNet and route table with azure network plugin, both system-assigned and user-assigned managed identities are supported.

Enable developer access to the API server

If you used authorized IP ranges for the cluster on the previous step, you must add your developer tooling IP addresses to the AKS cluster list of approved IP ranges in order to access the API server from there. Another option is to configure a jumpbox with the needed tooling inside a separate subnet in the Firewall's virtual network.

Add another IP address to the approved ranges with the following commands:

# Retrieve your IP address

CURRENT_IP=$(dig @resolver1.opendns.com ANY myip.opendns.com +short)

# Add to AKS approved list (the value replaces the existing list, so keep the firewall public IP)

az aks update -g $RG -n $AKSNAME --api-server-authorized-ip-ranges $FWPUBLIC_IP/32,$CURRENT_IP/32
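The `--api-server-authorized-ip-ranges` parameter takes a comma-separated list of ranges. Assuming the value you pass becomes the cluster's complete list, the firewall's public IP has to be repeated alongside every developer address you add. The following sketch builds such a list. The `to_cidr_list` helper is hypothetical.

```shell
#!/usr/bin/env bash
# Join IPv4 addresses into a comma-separated list of CIDR ranges.
to_cidr_list() {
  local ip list=""
  for ip in "$@"; do
    # Append /32 only when the caller didn't already supply a prefix length.
    case $ip in
      */*) ;;
      *) ip="$ip/32" ;;
    esac
    # Insert a comma before every entry except the first.
    list="${list:+$list,}$ip"
  done
  echo "$list"
}
```

For example, `az aks update -g $RG -n $AKSNAME --api-server-authorized-ip-ranges "$(to_cidr_list "$FWPUBLIC_IP" "$CURRENT_IP")"` passes both addresses in one call.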

Use the az aks get-credentials command to configure kubectl to connect to your newly created Kubernetes cluster.

az aks get-credentials -g $RG -n $AKSNAME

Restrict ingress traffic using Azure Firewall

You can now start exposing services and deploying applications to this cluster. In this example, we expose a public service, but you can also choose to expose an internal service via internal load balancer.

Review the AKS Store Demo quickstart manifest to see all the resources that will be created.

Deploy the service using the kubectl apply command.

kubectl apply -f https://raw.githubusercontent.com/Azure-Samples/aks-store-demo/main/aks-store-quickstart.yaml

Add a DNAT rule to Azure Firewall

Important

When you use Azure Firewall to restrict egress traffic and create a user-defined route (UDR) to force all egress traffic, make sure you create an appropriate DNAT rule in Firewall to correctly allow ingress traffic. Using Azure Firewall with a UDR breaks the ingress setup due to asymmetric routing. (The issue occurs if the AKS subnet has a default route that goes to the firewall's private IP address, but you're using a public load balancer - ingress or Kubernetes service of type: LoadBalancer). In this case, the incoming load balancer traffic is received via its public IP address, but the return path goes through the firewall's private IP address. Because the firewall is stateful, it drops the returning packet because the firewall isn't aware of an established session. To learn how to integrate Azure Firewall with your ingress or service load balancer, see Integrate Azure Firewall with Azure Standard Load Balancer.

To configure inbound connectivity, a DNAT rule must be written to the Azure Firewall. To test connectivity to your cluster, a rule is defined for the firewall frontend public IP address to route to the internal IP exposed by the internal service.

The destination address and port are the ones that clients use to reach the firewall, and the destination port can be customized. The translated address must be the IP address of the internal load balancer. The translated port must be the exposed port for your Kubernetes service.

You need to specify the internal IP address assigned to the load balancer created by the Kubernetes service. Retrieve the address by running:

kubectl get services

The IP address needed is listed in the EXTERNAL-IP column, similar to the following.

NAME              TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)              AGE
kubernetes        ClusterIP      10.41.0.1      <none>          443/TCP              10h
store-front       LoadBalancer   10.41.185.82   203.0.113.254   80:32718/TCP         9m
order-service     ClusterIP      10.0.104.144   <none>          3000/TCP             11s
product-service   ClusterIP      10.0.237.60    <none>          3002/TCP             10s
rabbitmq          ClusterIP      10.0.161.128   <none>          5672/TCP,15672/TCP   11s

Get the service IP by running:

SERVICE_IP=$(kubectl get svc store-front -o jsonpath='{.status.loadBalancer.ingress[*].ip}')
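Right after deployment the load balancer address may not be assigned yet, in which case the query prints nothing and `SERVICE_IP` ends up empty. The following sketch polls a command until it prints something. The `wait_for_output` helper and the attempt and delay values are hypothetical choices, not part of the original script.

```shell
#!/usr/bin/env bash
# Run a command repeatedly until it prints non-empty output or attempts run out.
# Usage: wait_for_output ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
wait_for_output() {
  local attempts=$1 delay=$2 out i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    out=$("$@" 2>/dev/null)
    if [ -n "$out" ]; then
      echo "$out"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  # No output after the last attempt.
  return 1
}
```

A possible use is `SERVICE_IP=$(wait_for_output 30 10 kubectl get svc store-front -o jsonpath='{.status.loadBalancer.ingress[*].ip}')`, which retries for up to about five minutes.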

Add the NAT rule by running:

az network firewall nat-rule create --collection-name exampleset --destination-addresses $FWPUBLIC_IP --destination-ports 80 --firewall-name $FWNAME --name inboundrule --protocols Any --resource-group $RG --source-addresses '*' --translated-port 80 --action Dnat --priority 100 --translated-address $SERVICE_IP

Validate connectivity

Navigate to the Azure Firewall frontend IP address in a browser to validate connectivity.

You should see the AKS store app. In this example, the Firewall public IP was 203.0.113.32.

On this page, you can view products, add them to your cart, and then place an order.

Clean up resources

To clean up Azure resources, delete the AKS resource group.

az group delete -g $RG

Next steps

- To learn more about Azure Kubernetes Service, see Kubernetes core concepts for Azure Kubernetes Service (AKS).