Important

This article provides information on using the Azure Machine Learning SDK v1. SDK v1 is deprecated as of March 31, 2025. Support for it will end on June 30, 2026. You can install and use SDK v1 until that date. Your existing workflows using SDK v1 will continue to operate after the end-of-support date. However, they could be exposed to security risks or breaking changes in the event of architectural changes in the product.

We recommend that you transition to the SDK v2 before June 30, 2026. For more information on SDK v2, see What is Azure Machine Learning CLI and Python SDK v2? and the SDK v2 reference.

In this article, you learn how to add code to designer pipelines to log metrics. You also learn how to view those metrics in the Azure Machine Learning studio web portal.

For more information on logging metrics using the SDK authoring experience, see Log & view metrics and log files.

Enable logging with Execute Python Script

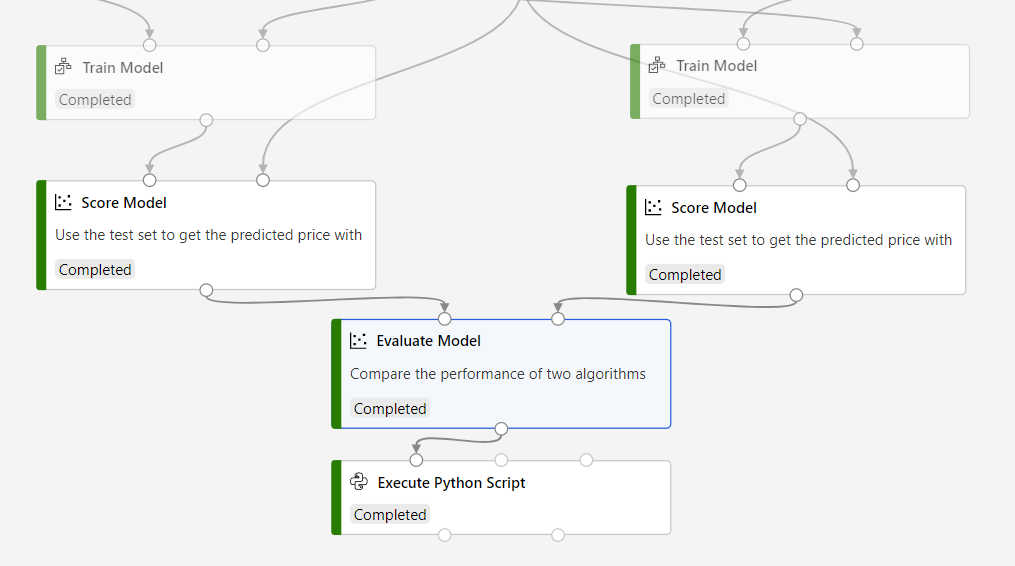

Use the Execute Python Script component to enable logging in designer pipelines. Although you can log any value with this workflow, it's especially useful to log metrics from the Evaluate Model component to track model performance across runs.

The following example shows how to log the mean absolute error of two trained models by using the Evaluate Model and Execute Python Script components.

Connect an Execute Python Script component to the output of the Evaluate Model component.

Paste the following code into the Execute Python Script code editor to log the mean absolute error for your trained model. You can use a similar pattern to log any other value in the designer:

APPLIES TO: Azure Machine Learning SDK v1 for Python

```python
# dataframe1 contains the values from Evaluate Model
def azureml_main(dataframe1=None, dataframe2=None):
    print(f'Input pandas.DataFrame #1: {dataframe1}')

    from azureml.core import Run

    run = Run.get_context()

    # Log the mean absolute error to the parent run to see the metric in the run details page.
    # Note: 'run.parent.log()' should not be called multiple times because of performance issues.
    # If repeated calls are necessary, cache 'run.parent' as a local variable and call 'log()' on that variable.
    parent_run = run.parent

    # Log the left output port result of Evaluate Model. This also works when evaluating only one model.
    parent_run.log(name='Mean_Absolute_Error (left port)', value=dataframe1['Mean_Absolute_Error'][0])

    # Log the right output port result of Evaluate Model. Delete the following line if you
    # connect only one Score component to the left port of the Evaluate Model component.
    parent_run.log(name='Mean_Absolute_Error (right port)', value=dataframe1['Mean_Absolute_Error'][1])

    return dataframe1,
```

This code uses the Azure Machine Learning Python SDK to log values. It calls Run.get_context() to get the context of the current run, and then logs values with the run.parent.log() method. Logging through parent sends the values to the parent pipeline run rather than to the component run, so the metrics appear on the pipeline run's details page.
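The example above hard-codes a single metric. As a minimal sketch of the same pattern applied to every metric, assuming you want to log each numeric column that Evaluate Model outputs (row 0 for the left port and, when present, row 1 for the right port, as in the example above), the hypothetical helper `log_evaluation_metrics` below loops over the columns and logs each value to the cached parent run. The helper name and the `PORT_LABELS` naming scheme are illustrative assumptions, not part of the designer or the SDK.

```python
import numbers

# Hypothetical labels: row 0 of the Evaluate Model output comes from the left
# port and row 1 (only when a second Score component is connected) from the right port.
PORT_LABELS = ('left port', 'right port')


def log_evaluation_metrics(run, evaluation):
    """Hypothetical helper: log every numeric cell of `evaluation` to `run`.

    `evaluation` can be the pandas DataFrame from Evaluate Model or any
    mapping of column name -> sequence of values. Returns what was logged.
    """
    logged = {}
    for column in evaluation:
        for row, value in enumerate(list(evaluation[column])[:len(PORT_LABELS)]):
            # Skip text columns and booleans; log only numeric metrics.
            if isinstance(value, numbers.Number) and not isinstance(value, bool):
                name = f'{column} ({PORT_LABELS[row]})'
                run.log(name=name, value=value)
                logged[name] = value
    return logged


def azureml_main(dataframe1=None, dataframe2=None):
    # Imported here because azureml.core is available inside the designer component.
    from azureml.core import Run

    # Get the parent run once and reuse it for every log() call.
    parent_run = Run.get_context().parent
    log_evaluation_metrics(parent_run, dataframe1)
    return dataframe1,
```

Caching the parent run in a local variable follows the performance note in the original code comments: each metric becomes one `log()` call on the same object instead of a fresh `run.parent` lookup.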

For more information on how to use the Python SDK to log values, see Log & view metrics and log files.

View logs

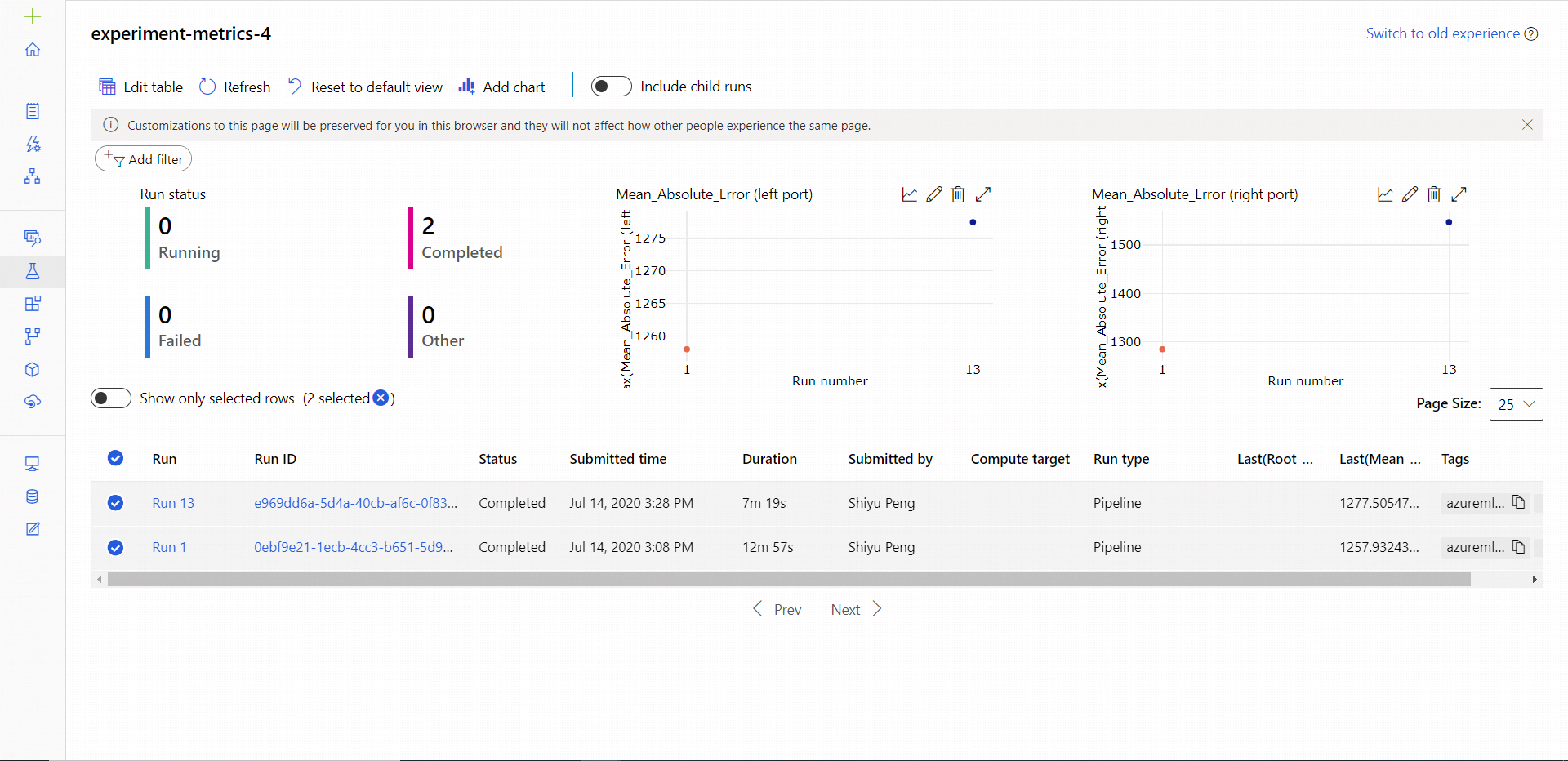

After the pipeline run completes, you can view the logged Mean_Absolute_Error values in the studio:

Navigate to the Jobs section.

Select your experiment.

Select the job in your experiment that you want to view.

Select Metrics.
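To track the logged values across runs from a notebook instead of the studio, a minimal SDK v1 sketch could read them back with `Run.get_metrics()`. It assumes a workspace `config.json` that `Workspace.from_config()` can find, and the experiment name `my-designer-experiment` is a hypothetical placeholder for your own. Because the metrics were logged to the parent pipeline run, the top-level runs returned by `Experiment.get_runs()` should carry them.

```python
def collect_metric(runs, metric_name):
    """Return {run_id: value} for each run that logged `metric_name`."""
    results = {}
    for run in runs:
        metrics = run.get_metrics()  # dict of metric name -> logged value(s)
        if metric_name in metrics:
            results[run.id] = metrics[metric_name]
    return results


def main(experiment_name='my-designer-experiment'):  # hypothetical experiment name
    from azureml.core import Experiment, Workspace

    ws = Workspace.from_config()  # reads config.json for your workspace
    experiment = Experiment(workspace=ws, name=experiment_name)
    left = collect_metric(experiment.get_runs(), 'Mean_Absolute_Error (left port)')
    for run_id, value in left.items():
        print(run_id, value)
```

Call `main()` with your experiment name to print one line per run that logged the left-port metric.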