APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

In this article, you learn how to automate hyperparameter tuning in Azure Machine Learning pipelines. The article describes using both Azure Machine Learning CLI v2 and Azure Machine Learning SDK for Python v2.

Hyperparameters are adjustable parameters that let you control the model training process. Hyperparameter tuning is the process of finding the configuration of hyperparameters that results in the best performance. Azure Machine Learning lets you automate hyperparameter tuning and run experiments in parallel to efficiently optimize hyperparameters.

Prerequisites

- Have an Azure Machine Learning account and workspace.

- Understand Azure Machine Learning pipelines and how to tune a model's hyperparameters.

Create and run a hyperparameter tuning pipeline

The following examples come from Run a pipeline job using sweep (hyperdrive) in pipeline in the Azure Machine Learning examples repository. For more information about creating pipelines with components, see Create and run machine learning pipelines using components with the Azure Machine Learning CLI.

Create a command component with hyperparameter inputs

The Azure Machine Learning pipeline must have a command component with hyperparameter inputs. The following train.yml file from the example project defines a trial component that has the c_value, kernel, and coef0 hyperparameter inputs. It runs the source code that's located in the ./train-src folder.

$schema: https://azuremlschemas.azureedge.net/latest/commandComponent.schema.json
type: command

name: train_model
display_name: train_model
version: 1

inputs:
  data:
    type: uri_folder
  c_value:
    type: number
    default: 1.0
  kernel:
    type: string
    default: rbf
  degree:
    type: integer
    default: 3
  gamma:
    type: string
    default: scale
  coef0:
    type: number
    default: 0
  shrinking:
    type: boolean
    default: false
  probability:
    type: boolean
    default: false
  tol:
    type: number
    default: 1e-3
  cache_size:
    type: number
    default: 1024
  verbose:
    type: boolean
    default: false
  max_iter:
    type: integer
    default: -1
  decision_function_shape:
    type: string
    default: ovr
  break_ties:
    type: boolean
    default: false
  random_state:
    type: integer
    default: 42

outputs:
  model_output:
    type: mlflow_model
  test_data:
    type: uri_folder

code: ./train-src

environment: azureml://registries/azureml/environments/sklearn-1.5/labels/latest

command: >-
  python train.py
  --data ${{inputs.data}}
  --C ${{inputs.c_value}}
  --kernel ${{inputs.kernel}}
  --degree ${{inputs.degree}}
  --gamma ${{inputs.gamma}}
  --coef0 ${{inputs.coef0}}
  --shrinking ${{inputs.shrinking}}
  --probability ${{inputs.probability}}
  --tol ${{inputs.tol}}
  --cache_size ${{inputs.cache_size}}
  --verbose ${{inputs.verbose}}
  --max_iter ${{inputs.max_iter}}
  --decision_function_shape ${{inputs.decision_function_shape}}
  --break_ties ${{inputs.break_ties}}
  --random_state ${{inputs.random_state}}
  --model_output ${{outputs.model_output}}
  --test_data ${{outputs.test_data}}
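Because the command string refers to inputs and outputs by name, a misspelled placeholder is an easy mistake to make. The following is a minimal local sanity check, not part of the example repository: it assumes the component has been copied into a plain Python dictionary (the trimmed-down `component` dictionary and the `find_mismatches` helper are hypothetical) and compares the declared names with the `${{inputs.*}}` and `${{outputs.*}}` placeholders found in the command.

```python
import re

# Hypothetical, trimmed-down copy of a component definition as a Python dict.
component = {
    "inputs": ["data", "c_value", "kernel", "coef0"],
    "outputs": ["model_output", "test_data"],
    "command": (
        "python train.py --data ${{inputs.data}} --C ${{inputs.c_value}} "
        "--kernel ${{inputs.kernel}} --coef0 ${{inputs.coef0}} "
        "--model_output ${{outputs.model_output}} "
        "--test_data ${{outputs.test_data}}"
    ),
}

# Matches placeholders such as ${{inputs.data}} or ${{outputs.test_data}}.
PLACEHOLDER = re.compile(r"\$\{\{\s*(inputs|outputs)\.(\w+)\s*\}\}")


def find_mismatches(component):
    """Return names used in the command but not declared, and vice versa."""
    referenced = {"inputs": set(), "outputs": set()}
    for kind, name in PLACEHOLDER.findall(component["command"]):
        referenced[kind].add(name)

    problems = {}
    for kind in ("inputs", "outputs"):
        declared = set(component[kind])
        problems[kind] = {
            "undeclared": sorted(referenced[kind] - declared),
            "unused": sorted(declared - referenced[kind]),
        }
    return problems


print(find_mismatches(component))
```

An empty `undeclared` and `unused` list for both inputs and outputs suggests that the command and the declarations agree. The check only looks at names, so it doesn't validate types or defaults.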

Create the trial component source code

The source code for this example is a single train.py file. This code runs in every trial of the sweep job.

# imports
import os
import shutil
import mlflow
import argparse

import pandas as pd

from pathlib import Path

from sklearn.svm import SVC
from sklearn.model_selection import train_test_split


# define functions
def main(args):
    # enable auto logging
    mlflow.autolog()

    # setup parameters
    params = {
        "C": args.C,
        "kernel": args.kernel,
        "degree": args.degree,
        "gamma": args.gamma,
        "coef0": args.coef0,
        "shrinking": args.shrinking,
        "probability": args.probability,
        "tol": args.tol,
        "cache_size": args.cache_size,
        "class_weight": args.class_weight,
        "verbose": args.verbose,
        "max_iter": args.max_iter,
        "decision_function_shape": args.decision_function_shape,
        "break_ties": args.break_ties,
        "random_state": args.random_state,
    }

    # read in data
    df = pd.read_csv(args.data)

    # process data
    X_train, X_test, y_train, y_test = process_data(df, args.random_state)

    # train model
    model = train_model(params, X_train, X_test, y_train, y_test)

    # Output the model and test data
    # write to local folder first, then copy to output folder
    mlflow.sklearn.save_model(model, "model")

    # copy the saved model folder into the component's output folder
    # (shutil.copytree is used because distutils was removed in Python 3.12)
    shutil.copytree("model", args.model_output, dirs_exist_ok=True)

    X_test.to_csv(Path(args.test_data) / "X_test.csv", index=False)
    y_test.to_csv(Path(args.test_data) / "y_test.csv", index=False)


def process_data(df, random_state):
    # split dataframe into X and y
    X = df.drop(["species"], axis=1)
    y = df["species"]

    # train/test split
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=random_state
    )

    # return split data
    return X_train, X_test, y_train, y_test


def train_model(params, X_train, X_test, y_train, y_test):
    # train model
    model = SVC(**params)
    model = model.fit(X_train, y_train)

    # return model
    return model


def str2bool(value):
    # argparse's type=bool treats every non-empty string, including "False",
    # as True, so boolean inputs are converted from their text form explicitly
    return str(value).strip().lower() in ("true", "1", "yes")


def parse_args():
    # setup arg parser
    parser = argparse.ArgumentParser()

    # add arguments
    parser.add_argument("--data", type=str)
    parser.add_argument("--C", type=float, default=1.0)
    parser.add_argument("--kernel", type=str, default="rbf")
    parser.add_argument("--degree", type=int, default=3)
    parser.add_argument("--gamma", type=str, default="scale")
    parser.add_argument("--coef0", type=float, default=0)
    parser.add_argument("--shrinking", type=str2bool, default=False)
    parser.add_argument("--probability", type=str2bool, default=False)
    parser.add_argument("--tol", type=float, default=1e-3)
    parser.add_argument("--cache_size", type=float, default=1024)
    parser.add_argument("--class_weight", type=dict, default=None)
    parser.add_argument("--verbose", type=str2bool, default=False)
    parser.add_argument("--max_iter", type=int, default=-1)
    parser.add_argument("--decision_function_shape", type=str, default="ovr")
    parser.add_argument("--break_ties", type=str2bool, default=False)
    parser.add_argument("--random_state", type=int, default=42)
    parser.add_argument("--model_output", type=str, help="Path of output model")
    parser.add_argument("--test_data", type=str, help="Path of output test data")

    # parse args
    args = parser.parse_args()

    # return args
    return args


# run script
if __name__ == "__main__":
    # parse args
    args = parse_args()

    # run main function
    main(args)

Note

Make sure to log the metrics in the trial component source code with the same name as the primary_metric value in the pipeline file. This example uses mlflow.autolog(), which is the recommended way to track machine learning experiments. For more information about MLflow, see Track ML experiments and models with MLflow.
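One way to catch a metric name mismatch early is to compare the names a trial logged with the primary_metric value before relying on the sweep. The sketch below is only an illustration under stated assumptions: the `require_primary_metric` helper is hypothetical, and the metric dictionary holds placeholder names and values rather than real results. The names that autologging actually records depend on the MLflow flavor and version, so check them in your own run.

```python
def require_primary_metric(logged_metrics, primary_metric):
    """Return the primary metric's value, or fail with a readable message."""
    if primary_metric not in logged_metrics:
        available = ", ".join(sorted(logged_metrics)) or "<none>"
        raise ValueError(
            f"primary_metric '{primary_metric}' was not logged; "
            f"available metrics: {available}"
        )
    return logged_metrics[primary_metric]


# Placeholder values for illustration only; not results from a real run.
logged = {"training_f1_score": 0.0, "training_accuracy_score": 0.0}

# Matches the primary_metric name used by the sweep step in this article.
print(require_primary_metric(logged, "training_f1_score"))
```

If the sweep names a metric that no trial logs, the helper raises an error that lists the names that were found, which makes a typo such as `f1_score` easier to spot.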

Create a pipeline with a hyperparameter sweep step

Given the command component defined in train.yml, the following pipeline definition file describes a two-step train and predict pipeline. In the sweep_step, the required step type is sweep, and the c_value, kernel, and coef0 hyperparameter inputs for the trial component are added to the search_space.

The following example highlights the hyperparameter tuning sweep_step.

$schema: https://azuremlschemas.azureedge.net/latest/pipelineJob.schema.json
type: pipeline
display_name: pipeline_with_hyperparameter_sweep
description: Tune hyperparameters using TF component
settings:
  default_compute: azureml:cpu-cluster
jobs:
  sweep_step:
    type: sweep
    inputs:
      data:
        type: uri_file
        path: wasbs://datasets@azuremlexamples.blob.core.windows.net/iris.csv
      degree: 3
      gamma: "scale"
      shrinking: False
      probability: False
      tol: 0.001
      cache_size: 1024
      verbose: False
      max_iter: -1
      decision_function_shape: "ovr"
      break_ties: False
      random_state: 42
    outputs:
      model_output:
      test_data:
    sampling_algorithm: random
    trial: ./train.yml
    search_space:
      c_value:
        type: uniform
        min_value: 0.5
        max_value: 0.9
      kernel:
        type: choice
        values: ["rbf", "linear", "poly"]
      coef0:
        type: uniform
        min_value: 0.1
        max_value: 1
    objective:
      goal: minimize
      primary_metric: training_f1_score
    limits:
      max_total_trials: 5
      max_concurrent_trials: 3
      timeout: 7200

  predict_step:
    type: command
    inputs:
      model: ${{parent.jobs.sweep_step.outputs.model_output}}
      test_data: ${{parent.jobs.sweep_step.outputs.test_data}}
    outputs:
      predict_result:
    component: ./predict.yml

For the full sweep job schema, see CLI (v2) sweep job YAML schema.
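To make the search space concrete, the following sketch draws trial configurations locally with Python's standard library. It's a conceptual illustration of random sampling over uniform and choice distributions, not the service's implementation. The dictionary mirrors the search_space and max_total_trials values from the pipeline file, and the `sample_one` and `sample_trials` helpers and the `seed` parameter are hypothetical names introduced here.

```python
import random

# Mirrors the search_space and limits from the sweep step in this article.
search_space = {
    "c_value": {"type": "uniform", "min_value": 0.5, "max_value": 0.9},
    "kernel": {"type": "choice", "values": ["rbf", "linear", "poly"]},
    "coef0": {"type": "uniform", "min_value": 0.1, "max_value": 1},
}
limits = {"max_total_trials": 5}


def sample_one(space, rng):
    """Draw one hyperparameter configuration from the search space."""
    config = {}
    for name, spec in space.items():
        if spec["type"] == "uniform":
            config[name] = rng.uniform(spec["min_value"], spec["max_value"])
        elif spec["type"] == "choice":
            config[name] = rng.choice(spec["values"])
        else:
            raise ValueError(f"unsupported distribution: {spec['type']}")
    return config


def sample_trials(space, max_total_trials, seed=0):
    """Draw one configuration per trial, up to the trial limit."""
    rng = random.Random(seed)  # seeded only to make the sketch repeatable
    return [sample_one(space, rng) for _ in range(max_total_trials)]


trials = sample_trials(search_space, limits["max_total_trials"])
for number, config in enumerate(trials, start=1):
    print(number, config)
```

Each printed configuration corresponds to the set of hyperparameter inputs that one trial of the train.yml component would receive. The inputs that aren't in the search space, such as degree or gamma, keep the fixed values set under inputs in the sweep step.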

Submit the hyperparameter tuning pipeline job

After you submit this pipeline job, Azure Machine Learning runs the trial component multiple times to sweep over hyperparameters, based on the search space and limits you defined in the sweep_step.
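As a rough sketch of the submission step with the Python SDK v2, the helper below loads a pipeline definition file and submits it to a workspace. It assumes that the azure-ai-ml and azure-identity packages are installed and that you're signed in to Azure. The `submit_pipeline` function and its parameter names are hypothetical, and the subscription, resource group, and workspace values are yours to supply.

```python
def submit_pipeline(pipeline_file, subscription_id, resource_group, workspace_name):
    """Submit a pipeline job defined in a YAML file and return its name and studio URL."""
    # Imported lazily so this module loads even without the Azure packages installed.
    from azure.ai.ml import MLClient, load_job
    from azure.identity import DefaultAzureCredential

    # Connect to the workspace with the default credential chain.
    ml_client = MLClient(
        credential=DefaultAzureCredential(),
        subscription_id=subscription_id,
        resource_group_name=resource_group,
        workspace_name=workspace_name,
    )

    # Load the pipeline definition and submit it as a job.
    pipeline_job = load_job(source=pipeline_file)
    submitted_job = ml_client.jobs.create_or_update(pipeline_job)

    # studio_url links to the job's pipeline graph in Azure Machine Learning studio.
    return submitted_job.name, submitted_job.studio_url
```

Call the helper with the path to your pipeline definition file, for example `submit_pipeline("<pipeline-file>", "<subscription-id>", "<resource-group>", "<workspace-name>")`. With the CLI, the corresponding command is `az ml job create --file <pipeline-file>`.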

View hyperparameter tuning results in studio

After you submit a pipeline job, the SDK or CLI widget gives you a link to the pipeline graph in Azure Machine Learning studio.

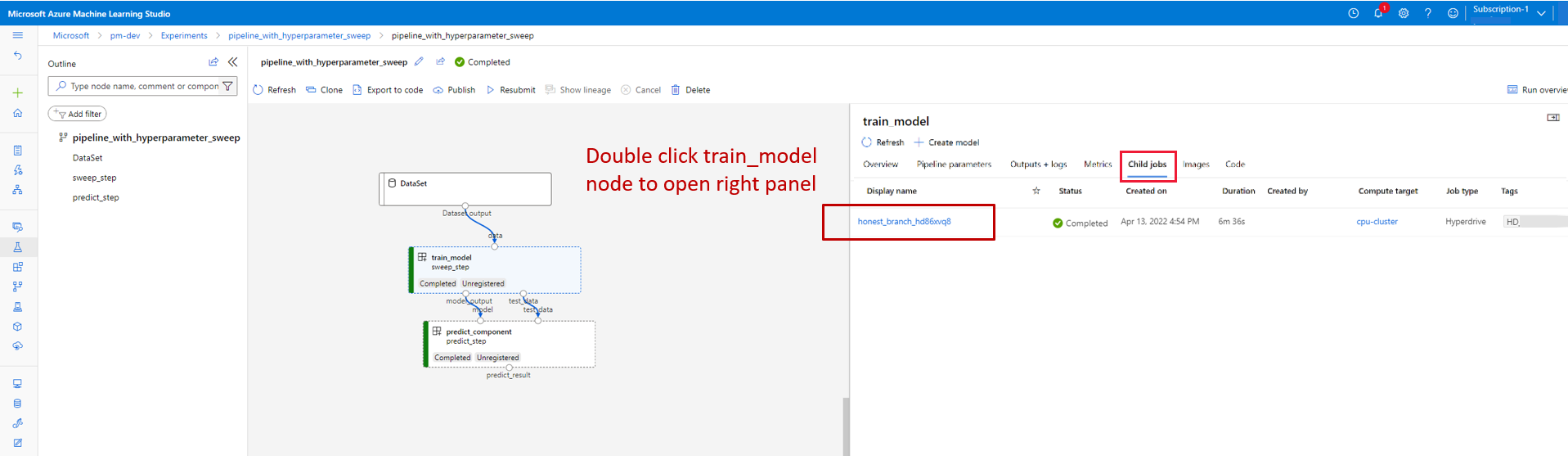

To view hyperparameter tuning results, double-click the sweep step in the pipeline graph. In the details area, select Child jobs, and then select the child job.

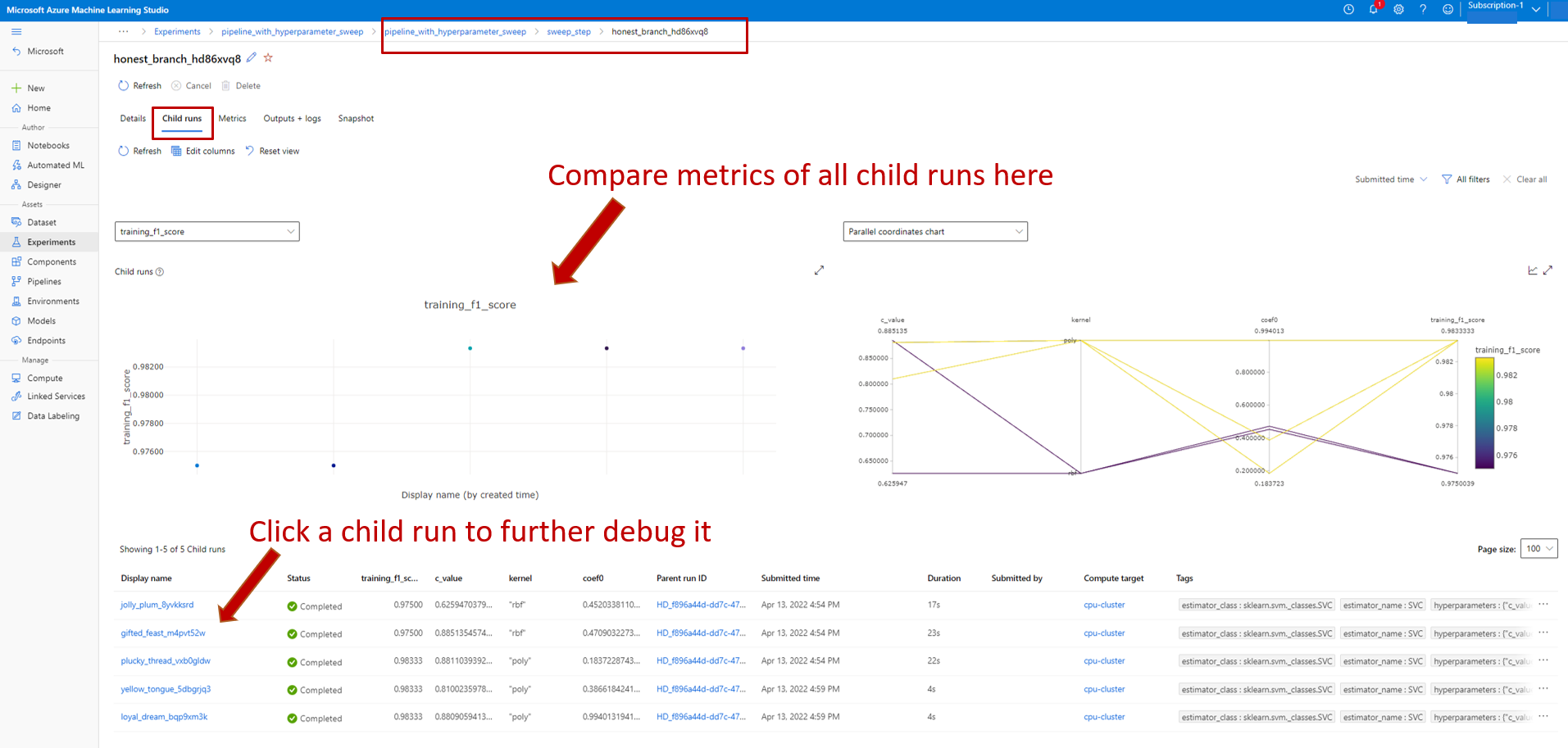

On the child job page, select Trials to see and compare metrics for the child runs. Select any child run to see the details for that run.

If a child run failed, you can select Outputs + logs on the child run page to see useful debug information.