
This article helps you troubleshoot issues with agentless dependency analysis, which can be performed on VMware VMs, Hyper-V VMs, and physical servers. Learn more about the types of dependency visualization supported in Azure Migrate.

Visualize dependencies for >1 hour with agentless dependency analysis

With agentless dependency analysis, you can visualize dependencies in a multi-server visualization or export them in a CSV file for a duration of up to 30 days.

Visualize dependencies for >10 servers with agentless dependency analysis

In the multi-server dependency visualization, you can now visualize dependencies across all servers where dependency analysis is auto-enabled and running without errors.

Unable to view all the connections in the new dependency analysis visualization and export

In the new dependency analysis capabilities, unresolved connections between discovered or undiscovered servers are omitted from the single-server and multi-server visualizations and from the exported CSV file. This reduces noise in the dependency data and makes it easier to analyze and interpret.

- Resolved connections: Network connections between servers discovered by Azure Migrate where application and process information was successfully gathered from both the source and destination servers.

- Unresolved connections: Network connections either between a discovered and an undiscovered server (one not discovered by Azure Migrate), or between servers discovered by Azure Migrate where application and process information couldn't be gathered from the source server, the destination server, or both (because dependency analysis either isn't enabled or is failing due to errors).

If you want to visualize or export all network connections (including unresolved connections), you can switch to the Classic experience through the footer note on the project's Overview page in the portal.
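The resolved/unresolved rule above can be expressed as a small predicate. This is a minimal Python sketch for illustration only: the `Endpoint` type and its field names are hypothetical and aren't part of any Azure Migrate API or export format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """One end of a network connection (hypothetical model, not an Azure Migrate type)."""
    server: str
    discovered: bool              # server was discovered by Azure Migrate
    process_info_collected: bool  # application and process data was gathered from it


def is_resolved(source: Endpoint, destination: Endpoint) -> bool:
    """Resolved only if both ends are discovered servers with application and process data."""
    return all(e.discovered and e.process_info_collected for e in (source, destination))


def shown_in_new_experience(connections):
    """Drop unresolved connections, as the new visualizations and CSV export do."""
    return [(src, dst) for src, dst in connections if is_resolved(src, dst)]


if __name__ == "__main__":
    web = Endpoint("web01", discovered=True, process_info_collected=True)
    db = Endpoint("db01", discovered=True, process_info_collected=True)
    batch = Endpoint("batch01", discovered=True, process_info_collected=False)  # analysis failing
    outside = Endpoint("10.1.2.3", discovered=False, process_info_collected=False)
    connections = [(web, db), (web, outside), (batch, db)]
    print([(s.server, d.server) for s, d in shown_in_new_experience(connections)])
    # prints [('web01', 'db01')]
```

Only the connection where both ends have process data survives; the Classic experience would still show the other two.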

Dependencies export CSV shows "Unknown process" with agentless dependency analysis

In agentless dependency analysis, the process names are captured on a best-effort basis. In certain scenarios, although the source and destination server names and the destination port are captured, it isn't feasible to determine the process names at both ends of the dependency. In such cases, the process is marked as "Unknown process."

Unable to export dependency data in a CSV due to the error "403: This request is not authorized to perform this operation"

If your Azure Migrate project has private endpoint connectivity, the request to export dependency data should be initiated from a client connected to the Azure virtual network over a private network. To resolve this error, open the Azure portal in your on-premises network or on your appliance server and try exporting again.

Export the dependency analysis errors

You can export all the errors and remediations from the Action Center in your project: filter the errors so that the affected feature is dependency mapping, and then select Export issues. The exported CSV file also contains additional information, such as the timestamp at which each error was encountered.

Common agentless dependency analysis errors

Azure Migrate supports agentless dependency analysis by using Azure Migrate: Discovery and assessment. Learn more about how to perform agentless dependency analysis.

For VMware VMs, agentless dependency analysis is performed by connecting to the servers via the vCenter Server using the VMware APIs. For Hyper-V VMs and physical servers, agentless dependency analysis is performed by directly connecting to Windows servers using PowerShell remoting on port 5985 (HTTP) and to Linux servers using SSH connectivity on port 22 (TCP).
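Before you dig into individual errors on Hyper-V VMs or physical servers, it can help to confirm from the appliance that the direct-connect ports are reachable. The following Python sketch attempts a plain TCP connection to WinRM port 5985 and SSH port 22. The script and its names are illustrative assumptions, and a successful connection only shows that the port is open, not that the credentials or the WinRM/SSH configuration are correct.

```python
import socket
import sys

# Direct-connect ports named in this article.
PORTS = {
    5985: "WinRM over HTTP (Windows servers)",
    22: "SSH (Linux servers)",
}


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name resolution failure
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    target = sys.argv[1]
    for port, purpose in PORTS.items():
        state = "reachable" if port_reachable(target, port) else "NOT reachable"
        print(f"{target}:{port} [{purpose}] {state}")
```

Run it on the appliance with the server's IP address as the only argument. Only one of the two ports needs to be reachable, depending on the server's OS.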

The table below summarizes all errors encountered when gathering dependency data through VMware APIs or by directly connecting to servers:

Note

The same errors can also be encountered with software inventory because it follows the same methodology as agentless dependency analysis to collect the required data.

| Error | Cause | Action |
|---|---|---|
| 60001:UnableToConnectToPhysicalServer | Either the prerequisites to connect to the server haven't been met or there are network issues in connecting to the server, for example, proxy settings. | - Ensure that the server meets the prerequisites and port access requirements. - Add the IP addresses of the remote machines (discovered servers) to the WinRM TrustedHosts list on the Azure Migrate appliance, and retry the operation. - Allow remote inbound connections on the servers: WinRM port 5985 (HTTP) for Windows and SSH port 22 (TCP) for Linux. - Ensure that you've chosen the correct authentication method on the appliance to connect to the server. - If the issue persists, submit an Azure support case, providing the appliance machine ID (available in the footer of the appliance configuration manager). |
| 60002:InvalidServerCredentials | Unable to connect to the server. Either you've provided incorrect credentials on the appliance or the credentials previously provided have expired. | - Ensure that you've provided the correct credentials for the server on the appliance. You can check them by trying to connect to the server with those credentials. - If the credentials added are incorrect or have expired, edit the credentials on the appliance and revalidate the added servers. If the validation succeeds, the issue is resolved. - If the issue persists, submit an Azure support case, providing the appliance machine ID (available in the footer of the appliance configuration manager). |
| 60005:SSHOperationTimeout | The operation took longer than expected, either because of network latency or because the latest updates aren't installed on the server. | - Ensure that the impacted server has the latest kernel and OS updates installed. - Ensure that there's no network latency between the appliance and the server. We recommend having the appliance and source server on the same domain to avoid latency issues. - Connect to the impacted server from the appliance and run the commands documented here to check if they return null or empty data. - If the issue persists, submit an Azure support case, providing the appliance machine ID (available in the footer of the appliance configuration manager). |
| 9000: VMware tools status on the server can't be detected. | VMware tools might not be installed on the server or the installed version is corrupted. | Ensure that VMware tools later than version 10.2.1 are installed and running on the server. |
| 9001: VMware tools aren't installed on the server. | VMware tools might not be installed on the server or the installed version is corrupted. | Ensure that VMware tools later than version 10.2.1 are installed and running on the server. |
| 9002: VMware tools aren't running on the server. | VMware tools might not be installed on the server or the installed version is corrupted. | Ensure that VMware tools later than version 10.2.0 are installed and running on the server. |
| 9003: Operation system type running on the server isn't supported. | The operating system running on the server isn't Windows or Linux. | Only Windows and Linux OS types are supported. If the server is indeed running Windows or Linux, check the operating system type specified in vCenter Server. |
| 9004: Server isn't in a running state. | The server is in a powered-off state. | Ensure that the server is in a running state. |
| 9005: Operation system type running on the server isn't supported. | The operating system running on the server isn't Windows or Linux. | Only Windows and Linux OS types are supported. The <FetchedParameter> operating system isn't supported currently. |
| 9006: The URL needed to download the discovery metadata file from the server is empty. | This issue could be transient because the discovery agent on the appliance isn't working as expected. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 9007: The process that runs the script to collect the metadata isn't found in the server. | This issue could be transient because the discovery agent on the appliance isn't working as expected. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 9008: The status of the process running on the server to collect the metadata can't be retrieved. | This issue could be transient because of an internal error. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 9009: Windows User Account Control (UAC) prevents the execution of discovery operations on the server. | Windows UAC settings restrict the discovery of installed applications from the server. | On the affected server, lower the level of the User Account Control settings in Control Panel. |
| 9010: The server is powered off. | The server is in a powered-off state. | Ensure that the server is in a powered-on state. |
| 9011: The file containing the discovered metadata can't be found on the server. | This issue could be transient because of an internal error. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 9012: The file containing the discovered metadata on the server is empty. | This issue could be transient because of an internal error. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 9013: A new temporary user profile is created on logging in to the server each time. | A new temporary user profile is created each time you log in to the server. | Submit an Azure support case to help troubleshoot this issue. |
| 9014: Unable to retrieve the file containing the discovered metadata because of an error encountered on the ESXi host. Error code: %ErrorCode; Details: %ErrorMessage | Encountered an error on the ESXi host <HostName>. Error code: %ErrorCode; Details: %ErrorMessage. | Ensure that port 443 is open on the ESXi host on which the server is running. Learn more on how to remediate the issue. |
| 9015: The vCenter Server user account provided for server discovery doesn't have guest operations privileges enabled. | The required guest operations privileges haven't been enabled on the vCenter Server user account. | Ensure that the vCenter Server user account has privileges enabled for Virtual Machines > Guest Operations to interact with the server and pull the required data. Learn more on how to set up the vCenter Server account with required privileges. |
| 9016: Unable to discover the metadata because the guest operations agent on the server is outdated. | Either VMware tools aren't installed on the server or the installed version isn't up to date. | Ensure that VMware tools are installed, running, and up to date on the server. The VMware Tools version must be 10.2.1 or later. |
| 9017: The file containing the discovered metadata can't be found on the server. | This could be a transient issue because of an internal error. | Submit an Azure support case to help troubleshoot this issue. |
| 9018: PowerShell isn't installed on the server. | PowerShell can't be found on the server. | Ensure that PowerShell version 2.0 or later is installed on the server. Learn more about how to remediate the issue. |
| 9019: Unable to discover the metadata because of guest operation failures on the server. | VMware guest operations failed on the server. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | Ensure that the server credentials on the appliance are valid and the username in the credentials is in the user principal name (UPN) format. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9020: Unable to create the file required to contain the discovered metadata on the server. | The role associated with the credentials provided on the appliance, or an on-premises group policy, is restricting the creation of the file in the required folder. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Check if the credentials provided on the appliance have create file permission on the folder <folder path/folder name> in the server. 2. If the credentials provided on the appliance don't have the required permissions, either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9021: Unable to create the file required to contain the discovered metadata at the right path on the server. | VMware tools are reporting an incorrect file path to create the file. | Ensure that VMware tools later than version 10.2.0 are installed and running on the server. |
| 9022: The access is denied to run the Get-WmiObject cmdlet on the server. | The role associated with the credentials provided on the appliance, or an on-premises group policy, is restricting access to the WMI object. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Check if the credentials provided on the appliance have administrator privileges and have WMI enabled. 2. If the credentials provided on the appliance don't have the required permissions, either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) Learn more on how to remediate the issue. |
| 9023: Unable to run PowerShell because the %SystemRoot% environment variable value is empty. | The value of the %SystemRoot% environment variable is empty for the server. | 1. Check if the environment variable is returning an empty value by running the echo %systemroot% command on the affected server. 2. If the issue persists, submit an Azure support case. |
| 9024: Unable to perform discovery as the %TEMP% environment variable value is empty. | The value of the %TEMP% environment variable is empty for the server. | 1. Check if the environment variable is returning an empty value by running the echo %temp% command on the affected server. 2. If the issue persists, submit an Azure support case. |
| 9025: Unable to perform discovery because PowerShell is corrupted on the server. | PowerShell is corrupted on the server. | Reinstall PowerShell and verify that it's running on the affected server. |
| 9026: Unable to run guest operations on the server. | The current state of the server doesn't allow the guest operations to run. | 1. Ensure that the affected server is up and running. 2. If the issue persists, submit an Azure support case. |
| 9027: Unable to discover the metadata because the guest operations agent isn't running on the server. | Unable to contact the guest operations agent on the server. | Ensure that VMware tools later than version 10.2.0 are installed and running on the server. |
| 9028: Unable to create the file required to contain the discovered metadata because of insufficient storage on the server. | There isn't enough storage space on the server disk. | Ensure that enough space is available on the disk storage of the affected server. |
| 9029: The credentials provided on the appliance don't have access permissions to run PowerShell. | The credentials on the appliance don't have access permissions to run PowerShell. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Ensure that the credentials on the appliance can access PowerShell on the server. 2. If the credentials on the appliance don't have the required access, either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9030: Unable to gather the discovered metadata because the ESXi host where the server is hosted is in a disconnected state. | The ESXi host on which the server resides is in a disconnected state. | Ensure that the ESXi host running the server is in a connected state. |
| 9031: Unable to gather the discovered metadata because the ESXi host where the server is hosted isn't responding. | The ESXi host on which the server resides is in an invalid state. | Ensure that the ESXi host running the server is in a running and connected state. |
| 9032: Unable to discover because of an internal error. | The issue encountered is because of an internal error. | Follow the steps on this website to remediate the issue. If the issue persists, open an Azure support case. |
| 9033: Unable to discover because the username of the credentials provided on the appliance for the server has invalid characters. | The credentials on the appliance contain invalid characters in the username. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | Ensure that the credentials on the appliance don't have any invalid characters in the username. You can go back to the appliance configuration manager to edit the credentials. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9034: Unable to discover because the username of the credentials provided on the appliance for the server isn't in the UPN format. | The credentials on the appliance don't have the username in the UPN format. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | Ensure that the credentials on the appliance have their username in the UPN format. You can go back to the appliance configuration manager to edit the credentials. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9035: Unable to discover because the PowerShell language mode isn't set correctly. | The PowerShell language mode isn't set to Full language. | Ensure that the PowerShell language mode is set to Full language. |
| 9036: Unable to discover because the username of the credentials provided on the appliance for the server isn't in the UPN format. | The credentials on the appliance don't have the username in the UPN format. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | Ensure that the credentials on the appliance have their username in the UPN format. You can go back to the appliance configuration manager to edit the credentials. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 9037: The metadata collection is temporarily paused because of high response time from the server. | The server is taking too long to respond. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 10000: The operation system type running on the server isn't supported. | The operating system running on the server isn't Windows or Linux. | Only Windows and Linux OS types are supported. The <GuestOSName> operating system isn't supported currently. |
| 10001: The script required to gather discovery metadata isn't found on the server. | The script required to perform discovery might have been deleted or removed from the expected location. | Submit an Azure support case to help troubleshoot this issue. |
| 10002: The discovery operations timed out on the server. | This issue could be transient because the discovery agent on the appliance isn't working as expected. | The issue should automatically resolve in the next cycle within 24 hours. If it isn't resolved, follow the steps on this website to remediate the issue. If the issue persists, open an Azure support case. |
| 10003: The process executing the discovery operations exited with an error. | The process executing the discovery operations exited abruptly because of an error. | The issue should automatically resolve in the next cycle within 24 hours. If the issue persists, submit an Azure support case. |
| 10004: Credentials aren't provided on the appliance for the server OS type. | The credentials for the server OS type weren't added on the appliance. | 1. Ensure that you add the credentials for the OS type of the affected server on the appliance. 2. You can now add multiple server credentials on the appliance. |
| 10005: Credentials provided on the appliance for the server are invalid. | The credentials provided on the appliance aren't valid. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Ensure that the credentials provided on the appliance are valid and the server is accessible by using the credentials. 2. You can now add multiple server credentials on the appliance. 3. Go back to the appliance configuration manager to either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) Learn more about how to remediate the issue. |
| 10006: The operation system type running on the server isn't supported. | The operating system running on the server isn't Windows or Linux. | Only Windows and Linux OS types are supported. The <GuestOSName> operating system isn't supported currently. |
| 10007: Unable to process the discovered metadata from the server. | An error occurred when parsing the contents of the file containing the discovered metadata. | Submit an Azure support case to help troubleshoot this issue. |
| 10008: Unable to create the file required to contain the discovered metadata on the server. | The role associated with the credentials provided on the appliance, or an on-premises group policy, is restricting the creation of the file in the required folder. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Check if the credentials provided on the appliance have create file permission on the folder <folder path/folder name> in the server. 2. If the credentials provided on the appliance don't have the required permissions, either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 10009: Unable to write the discovered metadata in the file on the server. | The role associated with the credentials provided on the appliance, or an on-premises group policy, is restricting writes to the file on the server. The issue was encountered when trying the following credentials on the server: <FriendlyNameOfCredentials>. | 1. Check if the credentials provided on the appliance have write file permission on the folder <folder path/folder name> in the server. 2. If the credentials provided on the appliance don't have the required permissions, either provide another set of credentials or edit an existing one. (Find the friendly name of the credentials tried by Azure Migrate in the possible causes.) |
| 10010: Unable to discover because the command- %CommandName; required to collect some metadata is missing on the server. | The package containing the command %CommandName; isn't installed on the server. | Ensure that the package that contains the command %CommandName; is installed on the server. |
| 10011: The credentials provided on the appliance were used to log in and log out for an interactive session. | The interactive login and logout forces the registry keys to be unloaded in the profile of the account being used. This condition makes the keys unavailable for future use. | Use the resolution methods documented on this website. |
| 10012: Credentials haven't been provided on the appliance for the server. | Either no credentials have been provided for the server or you've provided domain credentials with an incorrect domain name on the appliance. Learn more about the cause of this error. | 1. Ensure that the credentials are provided on the appliance for the server and the server is accessible by using the credentials. 2. You can now add multiple credentials on the appliance for servers. Go back to the appliance configuration manager to provide credentials for the server. |

Error 970: DependencyMapInsufficientPrivilegesException

Cause

The error usually appears for Linux servers when you haven't provided credentials with the required privileges on the appliance.

Remediation

You have two options:

- Ensure that you've provided a root user account.

- Ensure that the account has these capabilities set on the /bin/netstat and /bin/ls files:

  - CAP_DAC_READ_SEARCH

  - CAP_SYS_PTRACE

To check if the user account provided on the appliance has the required privileges:

1. Log in to the server where you encountered this error with the same user account as mentioned in the error message.

2. Run the following commands in a shell on the server. You'll get errors if you don't have the required privileges for agentless dependency analysis.

   ```bash
   ps -o pid,cmd | grep -v ']$'
   netstat -atnp | awk '{print $4,$5,$7}'
   ```

3. Set the required permissions on the /bin/netstat and /bin/ls files by running the following commands:

   ```bash
   sudo setcap CAP_DAC_READ_SEARCH,CAP_SYS_PTRACE=ep /bin/ls
   sudo setcap CAP_DAC_READ_SEARCH,CAP_SYS_PTRACE=ep /bin/netstat
   ```

4. Validate whether the preceding commands assigned the required permissions:

   ```bash
   getcap /bin/ls
   getcap /bin/netstat
   ```

5. Rerun the commands provided in step 2 to get a successful output.
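If you check many Linux servers, the getcap output can be verified programmatically. This Python sketch assumes the getcap utility is installed and on the PATH (on some distributions it lives in /sbin), and it accepts both the newer (`/bin/ls cap_dac_read_search,cap_sys_ptrace=ep`) and older (`/bin/ls = cap_dac_read_search,cap_sys_ptrace+ep`) output layouts. The function names are hypothetical.

```python
import re
import shutil
import subprocess

REQUIRED_CAPS = {"cap_dac_read_search", "cap_sys_ptrace"}
# One capability clause, e.g. "cap_a,cap_b=ep" (newer libcap) or "cap_a,cap_b+ep" (older libcap).
_CLAUSE = re.compile(r"^([a-z_,]+)[=+]([eip]+)$")


def effective_permitted_caps(getcap_line: str) -> set:
    """Return capabilities that carry both the effective (e) and permitted (p) flags."""
    caps = set()
    # Token 0 is the file path; a lone "=" (older layout) doesn't match _CLAUSE and is skipped.
    for clause in getcap_line.lower().split()[1:]:
        match = _CLAUSE.match(clause)
        if match and "e" in match.group(2) and "p" in match.group(2):
            caps.update(match.group(1).split(","))
    return caps


def missing_caps(paths=("/bin/ls", "/bin/netstat")) -> dict:
    """Run getcap on each file and map it to the required capabilities it still lacks."""
    if shutil.which("getcap") is None:
        raise RuntimeError("getcap not found; install the package that provides it")
    report = {}
    for path in paths:
        out = subprocess.run(["getcap", path], capture_output=True, text=True).stdout.strip()
        report[path] = REQUIRED_CAPS - effective_permitted_caps(out)
    return report


if __name__ == "__main__" and shutil.which("getcap"):
    for path, missing in missing_caps().items():
        print(f"{path}: " + ("OK" if not missing else "missing " + ", ".join(sorted(missing))))
```

A file with no capabilities produces empty getcap output, so it's reported as missing both capabilities.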

Error 9014: HTTPGetRequestToRetrieveFileFailed

Cause

This issue occurs when the VMware discovery agent on the appliance fails to download the output file containing dependency data from the server's file system through the ESXi host on which the server is hosted.

Remediation

You can test TCP connectivity to the ESXi host (name provided in the error message) on port 443 (required to be open on ESXi hosts to pull dependency data) from the appliance. Open PowerShell on the appliance server and run the following command:

```powershell
Test-NetConnection -ComputerName <IP address of the ESXi host> -Port 443
```

If the command returns successful connectivity, go to the Azure Migrate project > Discovery and assessment > Overview > Manage > Appliances, select the appliance name, and select Refresh services.

Error 9018: PowerShellNotFound

Cause

The error usually appears for servers running Windows Server 2008 or earlier.

Remediation

Install Windows PowerShell 5.1 at this location on the server. Follow the instructions in Install and Configure WMF 5.1 to install PowerShell in Windows Server.

After you install the required PowerShell version, verify if the error was resolved by following the steps on this website.

Error 9022: GetWMIObjectAccessDenied

Remediation

Make sure that the user account provided on the appliance has access to the WMI namespace and subnamespaces. To set the access:

1. Go to the server that's reporting this error.

2. Search for and select Run from the Start menu. In the Run dialog, enter wmimgmt.msc in the Open text box, and press Enter.

3. The wmimgmt console opens, where you can find WMI Control (Local) in the left pane. Right-click it, and select Properties from the menu.

4. In the WMI Control (Local) Properties dialog, select the Security tab.

5. On the Security tab, select Security to open the Security for ROOT dialog.

6. Select Advanced to open the Advanced Security Settings for Root dialog.

7. Select Add to open the Permission Entry for Root dialog.

8. Select Select a principal to open the Select Users, Computers, Service Accounts, or Groups dialog.

9. Select the usernames or groups you want to grant access to the WMI, and select OK.

10. Ensure that you grant execute permissions, and select This namespace and subnamespaces in the Applies to dropdown list.

11. Select Apply to save the settings and close all dialogs.

After you get the required access, verify if the error was resolved by following the steps on this website.

Error 9032: InvalidRequest

Cause

There can be multiple reasons for this issue. One reason is when the username provided (server credentials) on the appliance configuration manager has invalid XML characters. Invalid characters cause an error in parsing the SOAP request.

Remediation

- Make sure the username of the server credentials doesn't contain invalid XML characters and is in the username@domain.com format, known as the user principal name (UPN) format.

- After you edit the credentials on the appliance, verify if the error was resolved by following the steps on this website.
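A quick pre-check of a username before you edit it on the appliance can catch both problems named above. This Python sketch flags characters outside the XML 1.0 character range and usernames that don't look like username@domain.com. It's an approximation: the exact validation Azure Migrate applies isn't documented in this article, and the function name is hypothetical.

```python
import re

# Characters outside the XML 1.0 "Char" production can't appear in a SOAP (XML) request.
_NOT_XML_CHAR = re.compile(r"[^\x09\x0A\x0D\x20-\uD7FF\uE000-\uFFFD\U00010000-\U0010FFFF]")
# Loose username@domain.com shape (an approximation, not Azure Migrate's own rule).
_UPN_SHAPE = re.compile(r"^[^@\s]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+$")


def username_problems(username: str) -> list:
    """Return human-readable problems; an empty list means no issue was found."""
    problems = []
    bad = sorted({f"U+{ord(ch):04X}" for ch in _NOT_XML_CHAR.findall(username)})
    if bad:
        problems.append("characters not allowed in XML: " + ", ".join(bad))
    if not _UPN_SHAPE.match(username):
        problems.append("not in UPN format (username@domain.com)")
    return problems


if __name__ == "__main__":
    for name in ("svc-migrate@contoso.com", "CONTOSO\\svc-migrate", "svc\x01@contoso.com"):
        print(repr(name), username_problems(name) or "OK")
```

Passing this check doesn't guarantee the credentials are valid; revalidate them on the appliance afterward.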

Error 10002: ScriptExecutionTimedOutOnVm

Cause

- This error occurs when the server is slow or unresponsive and the script executed to pull the dependency data starts timing out.

- After the discovery agent encounters this error on the server, the appliance doesn't attempt agentless dependency analysis on the server thereafter to avoid overloading the unresponsive server.

- You'll continue to see the error until you resolve the issue on the server and restart the discovery service.

Remediation

1. Log in to the server that's encountering this error.

2. Run the following commands in PowerShell:

   ```powershell
   Get-WMIObject win32_operatingsystem;
   Get-WindowsFeature | Where-Object {$_.InstallState -eq 'Installed' -or ($_.InstallState -eq $null -and $_.Installed -eq 'True')};
   Get-WmiObject Win32_Process;
   netstat -ano -p tcp | select -Skip 4;
   ```

3. If the commands output the result in a few seconds, go to the Azure Migrate project > Discovery and assessment > Overview > Manage > Appliances, select the appliance name, and select Refresh services to restart the discovery service.

4. If the commands are timing out without giving any output, you need to:

   - Figure out which processes are consuming high CPU or memory on the server.

   - Try to provide more cores or memory to that server and run the commands again.
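To judge whether the commands return "in a few seconds" or time out, you can time them individually. This is a minimal Python sketch, assuming Python is available on the affected Windows server; the 30-second limit and the `CHECKS` names are arbitrary choices for illustration, not Azure Migrate thresholds.

```python
import subprocess
import sys
import time

# A subset of the commands from step 2, run one at a time.
CHECKS = {
    "operating system": ["powershell.exe", "-NoProfile", "-Command", "Get-WmiObject win32_operatingsystem"],
    "processes": ["powershell.exe", "-NoProfile", "-Command", "Get-WmiObject Win32_Process"],
    "TCP connections": ["netstat", "-ano", "-p", "tcp"],
}


def time_command(args, timeout_s: float = 30.0):
    """Run a command and return (elapsed_seconds, finished); finished is False on timeout."""
    start = time.monotonic()
    try:
        subprocess.run(args, capture_output=True, timeout=timeout_s, check=False)
        return time.monotonic() - start, True
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before raising TimeoutExpired.
        return time.monotonic() - start, False


if __name__ == "__main__" and sys.platform == "win32":
    for name, args in CHECKS.items():
        elapsed, finished = time_command(args)
        status = "finished" if finished else "TIMED OUT"
        print(f"{name}: {status} after {elapsed:.1f} s")
```

Timing each command separately shows which data source (WMI or netstat) is slow, which helps decide where to look for the CPU or memory pressure.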

Error 10005: GuestCredentialNotValid

Remediation

- Ensure the validity of credentials (friendly name provided in the error) by selecting Revalidate credentials on the appliance configuration manager.

- Ensure that you can log in to the affected server by using the same credential provided in the appliance.

- You can try using another user account (for the same domain, in case the server is domain joined) for that server instead of the administrator account.

- The issue can happen when Global Catalog <-> Domain Controller communication is broken. Check for this problem by creating a new user account in the domain controller and providing it on the appliance. You might also need to restart the domain controller.

- After you take the remediation steps, verify if the error was resolved by following the steps on this website.

Error 10012: CredentialNotProvided

Cause

This error occurs when you've provided a domain credential with the wrong domain name on the appliance configuration manager. For example, if you've provided domain credentials with the username user@abc.com but provided the domain name as def.com, those credentials won't be attempted if the server is connected to def.com and you'll get this error message.

Remediation

- Go to the appliance configuration manager to add a server credential or edit an existing one as explained in the cause.

- After you take the remediation steps, verify if the error was resolved by following the steps on this website.

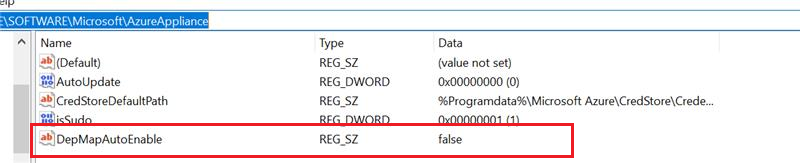

Error 9014: HTTPGetRequestToRetrieveFileFailed / 975: MaxLimitExceededForDepMap / 976: AutoenableDisabledForDepMap

Remediation

On the server running the appliance, open the Registry Editor.

Navigate to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\AzureAppliance (Use the folder without space).

Add a registry key

DepMapAutoEnablewith a type of "String" and value as "false".Ensure that you have manually enabled dependency analysis for one or more discovered servers in your project.

Restart the appliance server. Wait for an hour and check if the issues have been resolved.
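If you prefer to script the registry change, here's a minimal Python sketch. It uses the standard-library winreg module to create the DepMapAutoEnable string value (REG_SZ) with the data false under the key named in the steps. The function name is hypothetical. The sketch assumes it runs on the Windows appliance server from an elevated (administrator) session. It doesn't replace the restart step.

```python
import sys

# Key path, value name, and data taken from the steps above.
KEY_PATH = r"SOFTWARE\Microsoft\AzureAppliance"
VALUE_NAME = "DepMapAutoEnable"
VALUE_DATA = "false"


def disable_dep_map_auto_enable() -> None:
    """Hypothetical helper: create DepMapAutoEnable (REG_SZ) = 'false' under HKLM."""
    if sys.platform != "win32":
        raise OSError("This sketch only runs on the Windows appliance server")
    import winreg  # Windows-only standard-library module

    # KEY_WOW64_64KEY targets the 64-bit registry view, even from a 32-bit Python.
    access = winreg.KEY_SET_VALUE | winreg.KEY_WOW64_64KEY
    # CreateKeyEx opens the key, or creates it if it's missing.
    with winreg.CreateKeyEx(winreg.HKEY_LOCAL_MACHINE, KEY_PATH, 0, access) as key:
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_SZ, VALUE_DATA)
```

Call disable_dep_map_auto_enable() once, and then restart the appliance server as described in the last step.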

Mitigation verification

After you apply the mitigation steps for the preceding errors, verify whether the mitigation worked by running a few commands from the appliance server. If the commands succeed, the issue is resolved. Otherwise, review and follow the remediation steps again.

For VMware VMs (using VMware pipe)

Run the following commands to set up PowerCLI on the appliance server:

Install-Module -Name VMware.PowerCLI -AllowClobber
Set-PowerCLIConfiguration -InvalidCertificateAction Ignore

Connect to the vCenter server from the appliance. Provide the vCenter server IP address in the command and the credentials in the prompt:

Connect-VIServer -Server <IPAddress of vCenter Server>

Connect to the target server from the appliance. Provide the server name and the server credentials, as provided on the appliance:

$vm = Get-VM <VMName>
$credential = Get-Credential

For agentless dependency analysis, run the following commands and check that they return output successfully.

For Windows servers:

Invoke-VMScript -VM $vm -ScriptText "powershell.exe 'Get-WmiObject Win32_Process'" -GuestCredential $credential
Invoke-VMScript -VM $vm -ScriptText "powershell.exe 'netstat -ano -p tcp'" -GuestCredential $credential

For Linux servers:

Invoke-VMScript -VM $vm -ScriptText "ps -o pid,cmd | grep -v ]$" -GuestCredential $credential
Invoke-VMScript -VM $vm -ScriptText "netstat -atnp | awk '{print `$4,`$5,`$7}'" -GuestCredential $credential

The backticks stop PowerShell from expanding $4, $5, and $7 as PowerShell variables inside the double-quoted string, so awk receives the field references intact.
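On Linux, ps -o pid,cmd prints one PID and command line per process. grep -v ]$ drops lines that end with ], which is how kernel threads such as [kthreadd] are displayed. If you capture that output for comparison, the following Python sketch applies the same filter. The function name is hypothetical, and it also drops the PID CMD header row, which grep keeps.

```python
from typing import List, Tuple


def parse_ps_output(text: str) -> List[Tuple[int, str]]:
    """Parse `ps -o pid,cmd` output into (pid, command) pairs.

    Mirrors `grep -v ]$`: lines ending in ']' (bracketed kernel threads) are skipped.
    """
    processes = []
    for raw_line in text.splitlines():
        line = raw_line.strip()  # PIDs are right-aligned, so strip the padding
        if not line or line.endswith("]"):
            continue
        pid_text, _, cmd = line.partition(" ")
        if not pid_text.isdigit():  # skips the "PID CMD" header row
            continue
        processes.append((int(pid_text), cmd.strip()))
    return processes
```

A non-empty result indicates that the guest returned process data. An empty result, or a script error from Invoke-VMScript, points back to the credential and permission checks above.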

For Hyper-V VMs and physical servers (using direct connect pipe)

For Windows servers:

Connect to Windows server by running the command:

$Server = New-PSSession -ComputerName <IPAddress of Server> -Credential <user_name>

Enter the server credentials when prompted.

Run the following commands to validate agentless dependency analysis, and check that they return output successfully:

Invoke-Command -Session $Server -ScriptBlock {Get-WmiObject Win32_Process}
Invoke-Command -Session $Server -ScriptBlock {netstat -ano -p tcp}
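Both Windows commands should print data. Get-WmiObject Win32_Process lists processes with their ProcessId. netstat -ano -p tcp lists TCP connections and puts the owning PID in the last column, which is how you can tie a connection back to a process in the first command's output. If you save the netstat output to compare runs, the following Python sketch turns it into records. The names are hypothetical, and the sketch assumes the English-language column layout (Proto, Local Address, Foreign Address, State, PID).

```python
from typing import List, NamedTuple


class TcpConnection(NamedTuple):
    local: str
    remote: str
    state: str
    pid: int


def parse_windows_netstat(text: str) -> List[TcpConnection]:
    """Parse `netstat -ano -p tcp` output into connection records."""
    connections = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows look like: TCP <local> <foreign> <state> <pid>.
        # The title line, blank lines, and the column header don't match this shape.
        if len(fields) == 5 and fields[0] == "TCP" and fields[4].isdigit():
            connections.append(TcpConnection(fields[1], fields[2], fields[3], int(fields[4])))
    return connections
```

To focus on live traffic, filter the result, for example [c for c in conns if c.state == "ESTABLISHED"].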

For Linux servers:

Install the OpenSSH client:

Add-WindowsCapability -Online -Name OpenSSH.Client~~~~0.0.1.0

Install the OpenSSH server:

Add-WindowsCapability -Online -Name OpenSSH.Server~~~~0.0.1.0

Start and configure the OpenSSH server:

Start-Service sshd
Set-Service -Name sshd -StartupType 'Automatic'

Connect to the Linux server over SSH:

ssh username@servername

Run the following commands to validate agentless dependency analysis, and check that they return output successfully:

ps -o pid,cmd | grep -v ]$
netstat -atnp | awk '{print $4,$5,$7}'
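The awk filter keeps fields 4, 5, and 7 of each netstat -atnp line: the local address, the foreign address, and PID/Program name. Here's a hypothetical Python parser for that trimmed output. It skips the header lines, which awk also cuts down. It reports a missing PID when netstat prints -, which happens when the command runs without enough privileges to see another user's process.

```python
from typing import List, NamedTuple, Optional


class LinuxConnection(NamedTuple):
    local: str
    remote: str
    pid: Optional[int]
    program: Optional[str]


def parse_linux_netstat(text: str) -> List[LinuxConnection]:
    """Parse `netstat -atnp | awk '{print $4,$5,$7}'` output."""
    connections = []
    for line in text.splitlines():
        fields = line.split()
        # Real rows start with a host:port address; trimmed header rows don't.
        if len(fields) < 2 or ":" not in fields[0]:
            continue
        pid, program = None, None
        if len(fields) >= 3 and "/" in fields[2]:
            pid_text, _, program = fields[2].partition("/")
            pid = int(pid_text) if pid_text.isdigit() else None
        connections.append(LinuxConnection(fields[0], fields[1], pid, program))
    return connections
```

If most rows come back with pid set to None, the account that runs the command probably lacks the privileges needed to see process owners. That's worth checking against the credential you provided on the appliance.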

After you verify that the mitigation worked, go to the Azure Migrate project > Discovery and assessment > Overview > Manage > Appliances, select the appliance name, and select Refresh services to start a fresh discovery cycle.

Agent-based dependency visualization in Azure operated by 21Vianet

Agent-based dependency analysis isn't supported in Azure operated by 21Vianet. You can use agentless dependency analysis instead.

Capture network traffic

To collect network traffic logs:

Sign in to the Azure portal.

Select F12 to start Developer Tools. If needed, clear the Clear entries on navigation setting.

Select the Network tab, and start capturing network traffic:

- In Chrome, select Preserve log. The recording should start automatically. A red circle indicates that traffic is being captured. If the red circle doesn't appear, select the black circle to start.

- In Microsoft Edge and Internet Explorer, the recording should start automatically. If it doesn't, select the green play button.

Try to reproduce the error.

After you encounter the error while recording, stop recording, and save a copy of the recorded activity:

- In Chrome, right-click and select Save as HAR with content. This action compresses and exports the logs as an HTTP Archive (HAR) file.

- In Microsoft Edge or Internet Explorer, select the Export captured traffic option. This action compresses and exports the log.

Select the Console tab to check for any warnings or errors. To save the console log:

- In Chrome, right-click anywhere in the console log. Select Save as to export, and zip the log.

- In Microsoft Edge or Internet Explorer, right-click the errors and select Copy all.

Close Developer Tools.
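A HAR file is JSON. Each request is recorded under log.entries, with the request method and URL under request and the HTTP status under response.status. Before you share the file, you can quickly list the requests that failed while you reproduced the error. The following Python sketch does that. The function name is hypothetical. It treats a status of 0 as a failure because browsers record that status for requests that never completed, such as blocked or aborted calls.

```python
import json
from typing import List, Tuple


def failed_requests(har_text: str, min_status: int = 400) -> List[Tuple[int, str, str]]:
    """Return (status, method, url) for HAR entries that failed or never completed."""
    har = json.loads(har_text)
    failures = []
    for entry in har.get("log", {}).get("entries", []):
        status = entry.get("response", {}).get("status", 0)
        # Status 0: the request didn't get a response (blocked, aborted, and so on).
        if status == 0 or status >= min_status:
            request = entry.get("request", {})
            failures.append((status, request.get("method", ""), request.get("url", "")))
    return failures
```

For example, failed_requests(open("capture.har", encoding="utf-8").read()) lists the failing calls in the order they were recorded. The file name capture.har is only an illustration.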