Azure Synapse Analytics offers various analytics engines to help you ingest, transform, model, analyze, and distribute your data. An Apache Spark pool provides open-source big data compute capabilities. After you create an Apache Spark pool in your Synapse workspace, data can be loaded, modeled, processed, and distributed for faster analytic insight.

In this quickstart, you learn how to use the Azure portal to create an Apache Spark pool in a Synapse workspace.

Important

Billing for Spark instances is prorated per minute, whether you are using them or not. Be sure to shut down your Spark instance after you have finished using it, or set a short timeout. For more information, see the Clean up resources section of this article.

If you don't have an Azure subscription, create a trial account before you begin.

Prerequisites

- You'll need an Azure subscription. If needed, create a trial Azure account.

- You'll be using the Synapse workspace.

Sign in to the Azure portal

Sign in to the Azure portal

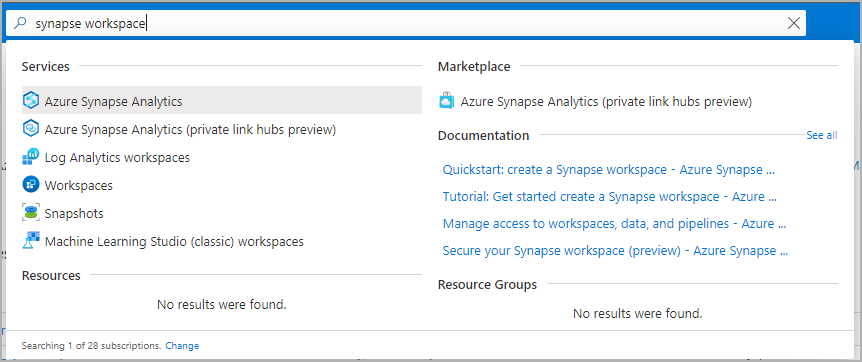

Navigate to the Synapse workspace

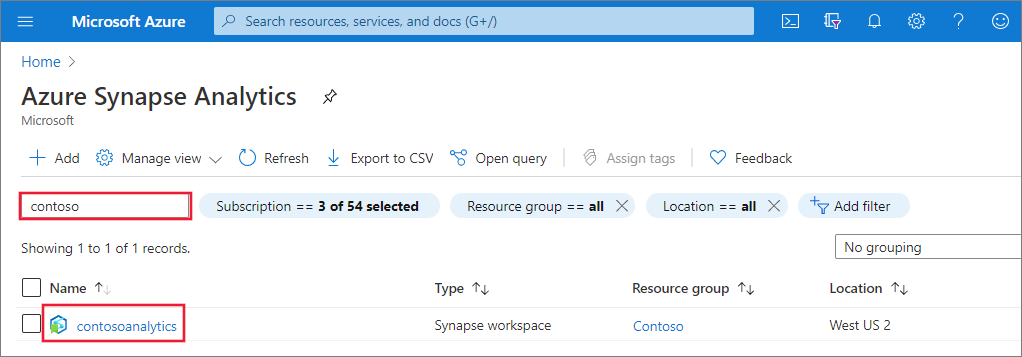

Navigate to the Synapse workspace where the Apache Spark pool will be created by typing the service name (or resource name directly) into the search bar.

From the list of workspaces, type the name (or part of the name) of the workspace to open. For this example, we use a workspace named contosoanalytics.

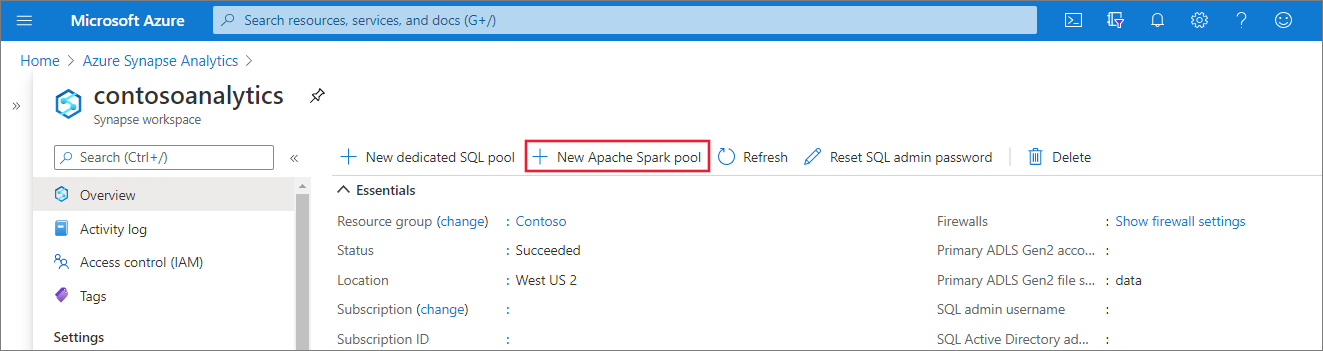

Create new Apache Spark pool

In the Synapse workspace where you want to create the Apache Spark pool, select New Apache Spark pool.

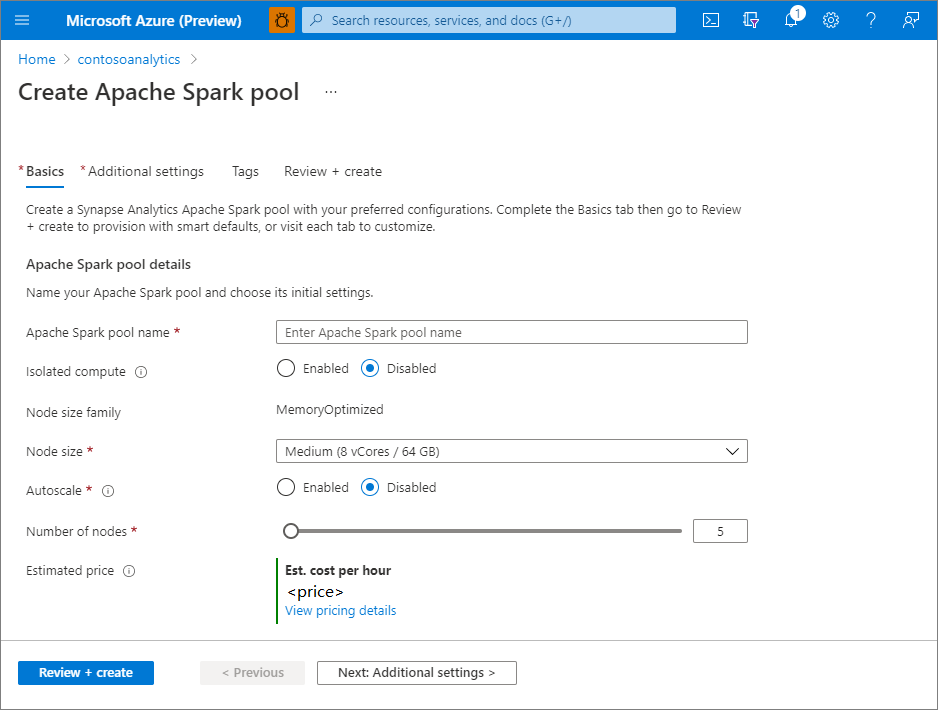

Enter the following details in the Basics tab:

| Setting | Suggested value | Description |
| --- | --- | --- |
| Apache Spark pool name | A valid pool name, like contosospark | This is the name that the Apache Spark pool will have. |
| Node size | Small (4 vCPU / 32 GB) | Set this to the smallest size to reduce costs for this quickstart. |
| Autoscale | Disabled | We don't need autoscale for this quickstart. |
| Number of nodes | 5 | Use a small size to limit costs for this quickstart. |

Important

There are specific limitations for the names that Apache Spark pools can use. Names must contain letters or numbers only, must be 15 or fewer characters, must start with a letter, must not contain reserved words, and must be unique in the workspace.
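The naming rules above can be checked before you open the portal. The following is a minimal sketch, not an official validator: the function name `validate_spark_pool_name` is hypothetical, "letters" is assumed to mean ASCII letters, the reserved-word check is assumed to be a case-insensitive substring match, and uniqueness is assumed to be case-insensitive. The list of reserved words isn't given in this article, so the caller supplies it.

```python
import re


def validate_spark_pool_name(name, existing_names=(), reserved_words=()):
    """Return a list of rule violations; an empty list means the name passes.

    existing_names and reserved_words are supplied by the caller. This article
    does not list the reserved words, so none are built in.
    """
    problems = []
    # Rule: letters or numbers only (assumed to mean ASCII).
    if not re.fullmatch(r"[A-Za-z0-9]+", name):
        problems.append("must contain letters or numbers only")
    # Rule: 15 or fewer characters.
    if len(name) > 15:
        problems.append("must be 15 or fewer characters")
    # Rule: must start with a letter.
    if not re.match(r"[A-Za-z]", name):
        problems.append("must start with a letter")
    # Rule: must not contain reserved words (assumed: substring, any case).
    lowered = name.lower()
    if any(word.lower() in lowered for word in reserved_words):
        problems.append("must not contain reserved words")
    # Rule: unique in the workspace (assumed: compared without regard to case).
    if lowered in {existing.lower() for existing in existing_names}:
        problems.append("must be unique in the workspace")
    return problems


if __name__ == "__main__":
    for candidate in ("contosospark", "my-pool", "1pool"):
        print(candidate, validate_spark_pool_name(candidate))
```

An empty list means the candidate passes every check in this sketch. The portal remains the authority on whether a name is accepted.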

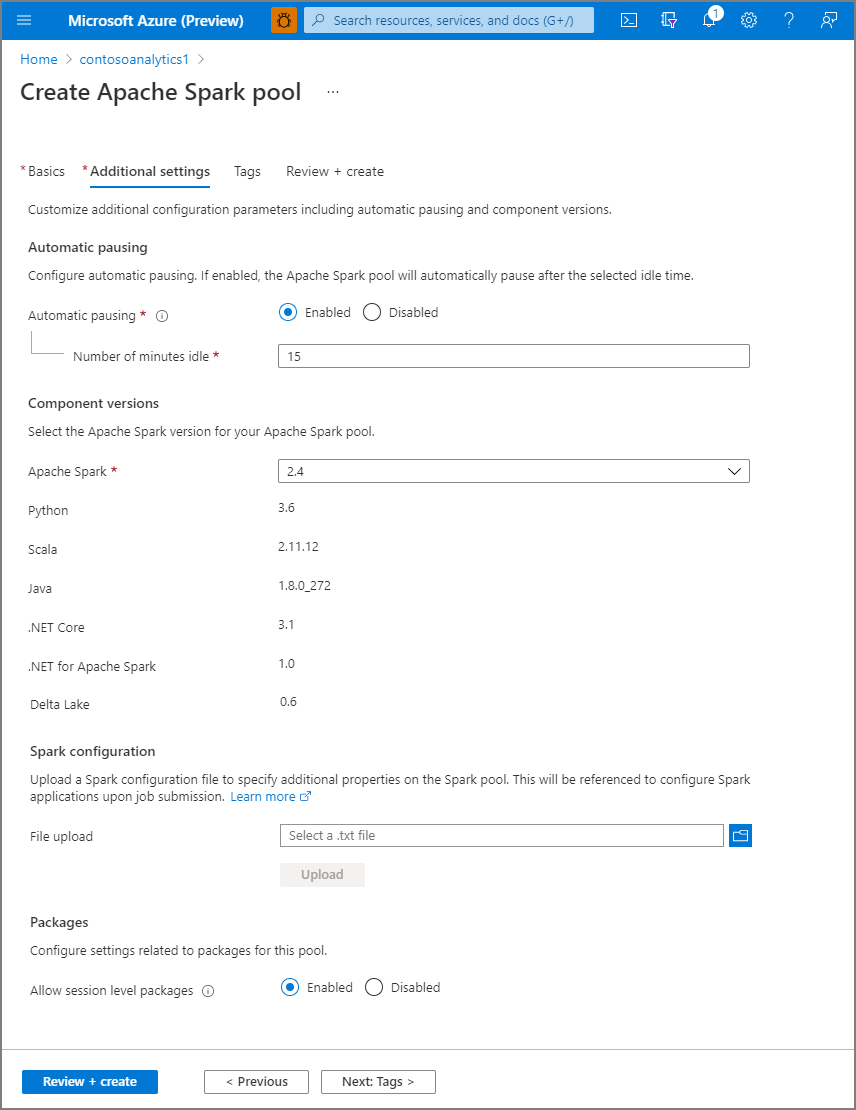

Select Next: additional settings and review the default settings. Don't modify any default settings.

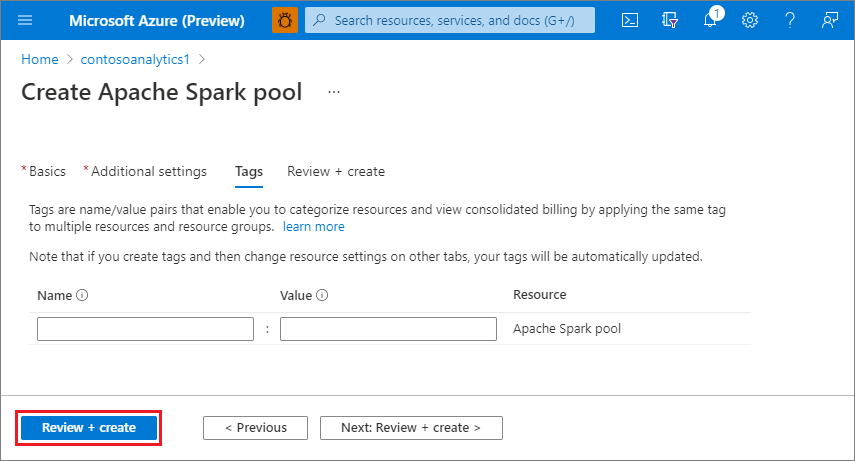

Select Next: tags. Consider using Azure tags. For example, use the "Owner" or "CreatedBy" tag to identify who created the resource, and the "Environment" tag to identify whether this resource is in Production, Development, and so on. For more information, see Develop your naming and tagging strategy for Azure resources.

Select Review + create.

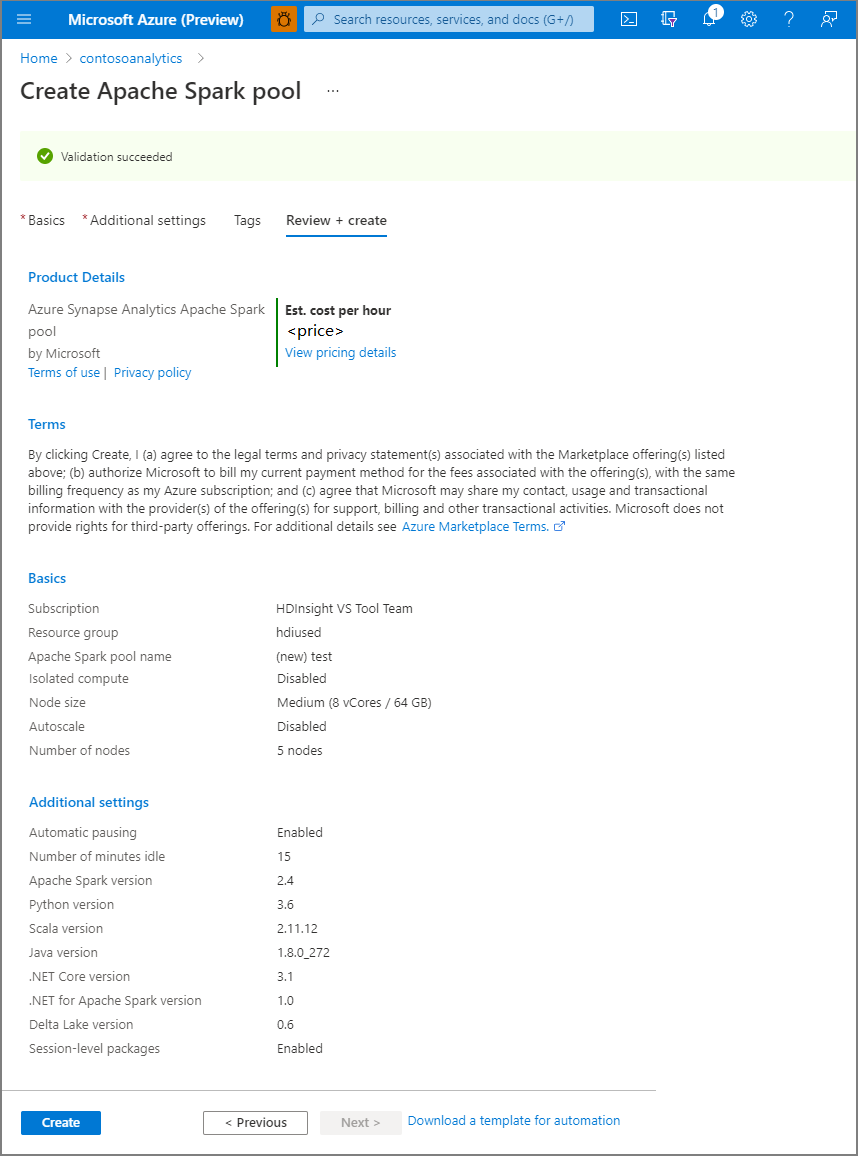

Make sure that the details look correct based on what was previously entered, and select Create.

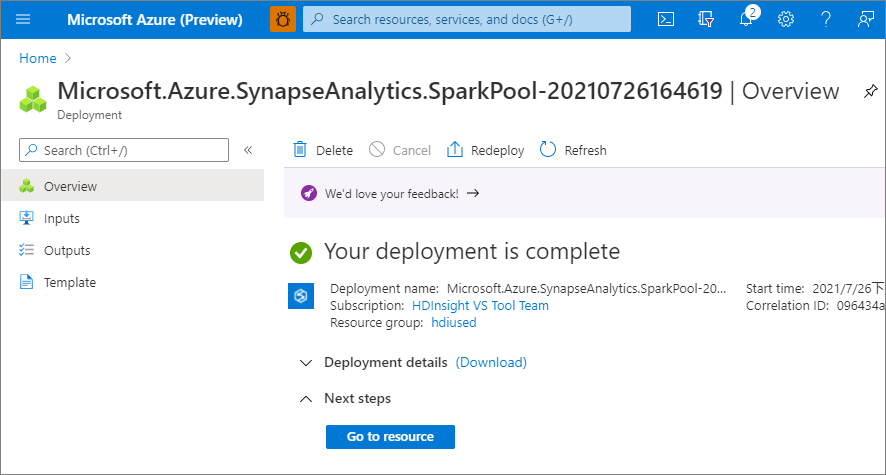

At this point, the resource provisioning flow starts. The portal indicates when provisioning is complete.

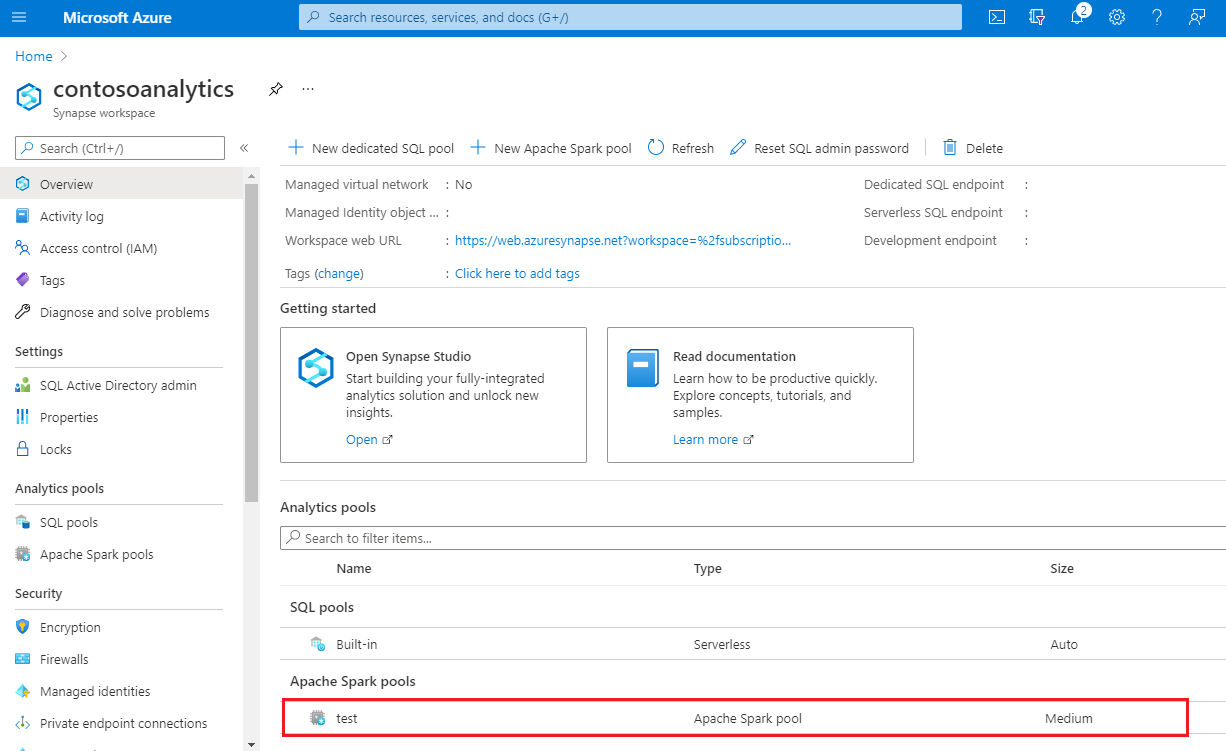

After provisioning completes, navigate back to the workspace to see a new entry for the Apache Spark pool you created.

At this point, no resources are running and there are no charges for Spark. You have only created metadata about the Spark instances you want to create.
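The portal steps in this section can also be approximated from code. The following is a hedged sketch that assumes the `azure-identity` and `azure-mgmt-synapse` Python packages are installed and that you're already signed in to Azure. The names `SparkPoolSettings` and `create_spark_pool` are hypothetical helpers, not part of any SDK. The subscription ID, resource group, location, and Spark version aren't specified in this article, so they're left as parameters, and the `MemoryOptimized` node size family is an assumption. The remaining values mirror the Basics tab settings in this quickstart.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class SparkPoolSettings:
    """Basics tab values suggested in this quickstart (hypothetical helper)."""
    name: str = "contosospark"
    node_size: str = "Small"
    node_count: int = 5
    autoscale_enabled: bool = False


def create_spark_pool(subscription_id, resource_group, workspace_name,
                      location, spark_version, settings, tags=None):
    """Create the pool and wait for provisioning to finish.

    Every argument except settings is a placeholder you supply; none of these
    values come from this article.
    """
    # Imported here so the settings can be inspected without the Azure SDK.
    from azure.identity import DefaultAzureCredential
    from azure.mgmt.synapse import SynapseManagementClient
    from azure.mgmt.synapse.models import (
        AutoScaleProperties,
        BigDataPoolResourceInfo,
    )

    client = SynapseManagementClient(DefaultAzureCredential(), subscription_id)
    pool = BigDataPoolResourceInfo(
        location=location,
        tags=tags,  # for example {"Owner": "...", "Environment": "..."}
        node_size=settings.node_size,
        node_size_family="MemoryOptimized",  # assumption, not from this article
        node_count=settings.node_count,
        auto_scale=AutoScaleProperties(enabled=settings.autoscale_enabled),
        spark_version=spark_version,
    )
    poller = client.big_data_pools.begin_create_or_update(
        resource_group, workspace_name, settings.name, pool
    )
    return poller.result()  # blocks until the provisioning flow completes


if __name__ == "__main__":
    # Only prints the settings; calling create_spark_pool needs real Azure values.
    print(asdict(SparkPoolSettings()))
```

Running the file as-is only prints the settings. Calling `create_spark_pool` requires real values for your own subscription and the contosoanalytics workspace, and the result should match the pool entry that the portal shows after provisioning.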

Clean up resources

The following steps delete the Apache Spark pool from the workspace.

Warning

Deleting an Apache Spark pool will remove the analytics engine from the workspace. It will no longer be possible to connect to the pool, and all queries, pipelines, and notebooks that use this Apache Spark pool will no longer work.

If you want to delete the Apache Spark pool, complete the following steps:

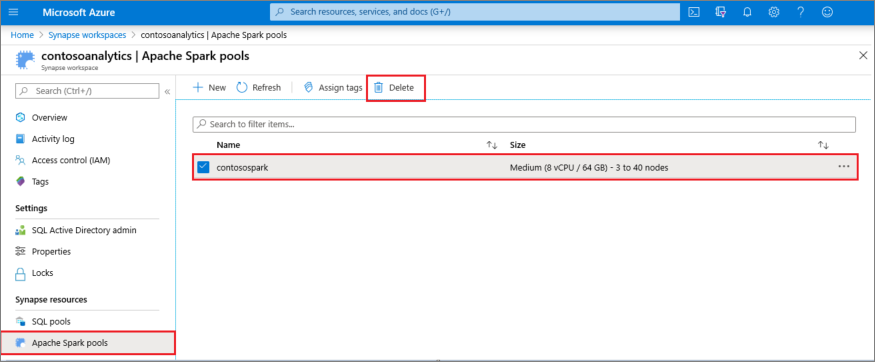

- Navigate to the Apache Spark pools pane in the workspace.

- Select the Apache Spark pool to be deleted (in this case, contosospark).

- Select Delete.

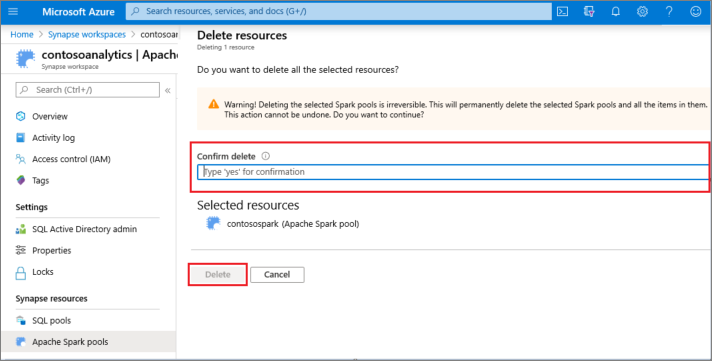

- Confirm the deletion, and select the Delete button.

- When the process completes successfully, the Apache Spark pool will no longer be listed in the workspace resources.