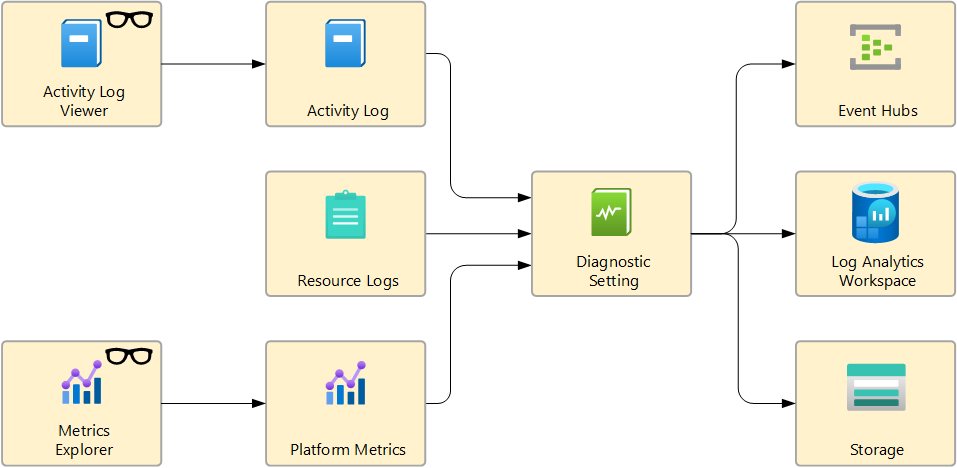

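Azure resource logs provide insight into operations that were performed within an Azure resource. The content of resource logs varies by resource type. They can include information about the operations performed on the resource, the status of those operations, and other details that can help you understand the health and performance of the resource.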

Send resource logs to a Log Analytics workspace to use the log analysis features of Azure Monitor Logs.

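Collection mode

The table that resource logs use in a Log Analytics workspace depends on the resource type and on the collection mode that the resource uses. Resource logs have two collection modes: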

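Resource-specific

For logs that use resource-specific mode, a separate table is created in the selected workspace for each log category selected in the diagnostic setting.

Resource-specific logs have the following advantages over Azure diagnostics logs: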

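- Makes it easier to work with the data in log queries.

- Provides better discoverability of schemas and their structure.

- Improves performance for both ingestion latency and query times.

- Provides the ability to grant Azure role-based access control (RBAC) rights on a specific table.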

For a description of resource-specific logs and tables, see Supported categories for Azure Monitor resource logs.

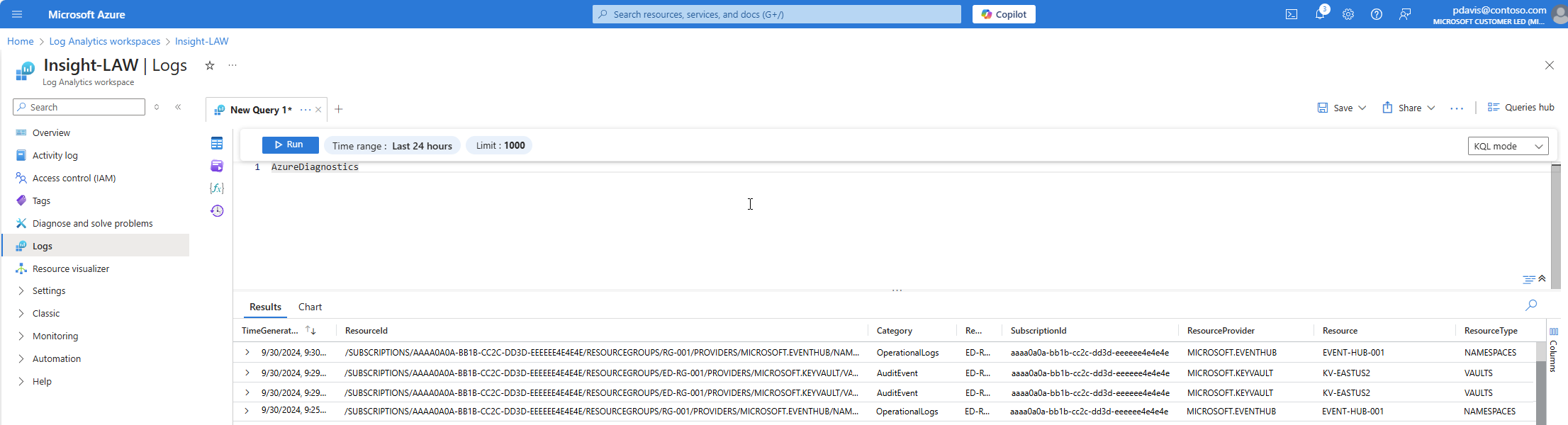

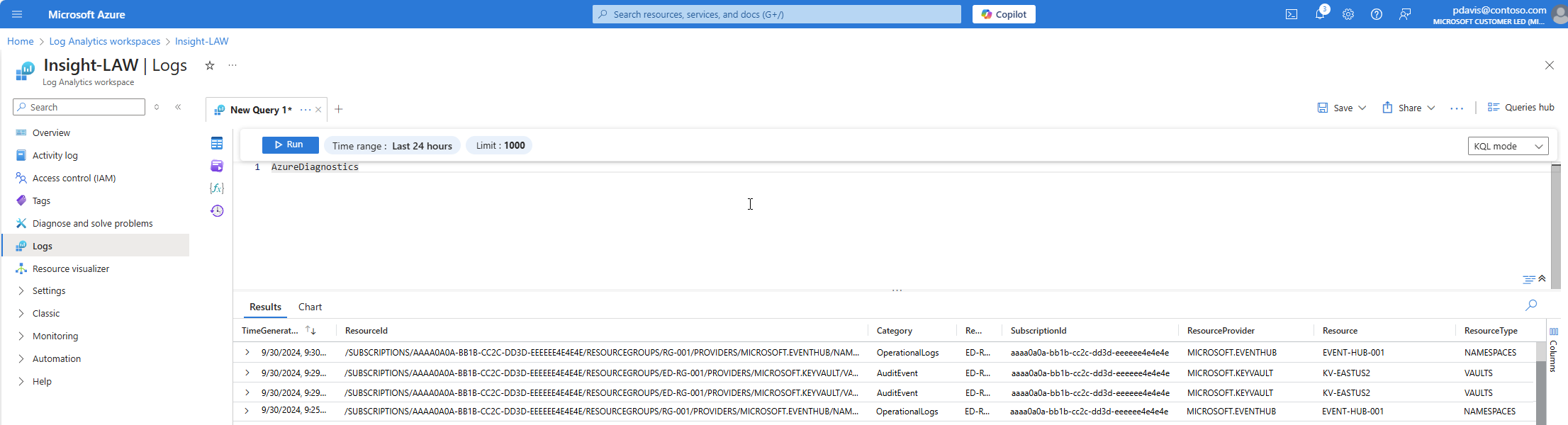

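Azure diagnostics mode

In Azure diagnostics mode, all data from any diagnostic setting is collected in the AzureDiagnostics table. This legacy method is currently used by a minority of Azure services. Because multiple resource types send data to the same table, its schema is the superset of the schemas of all the different data types being collected. For details on the structure of this table and how it handles a potentially large number of columns, see the AzureDiagnostics reference.

The AzureDiagnostics table contains the resourceId of the resource that generated the log, the log category, the time the log was generated, and resource-specific properties.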
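To make the "superset schema" idea concrete, the following Python sketch models rows from two different resource types as dictionaries and computes the column set of a shared table. It is an in-memory illustration only, not how Log Analytics stores data; apart from resourceId, category, and time, every column name and value here is a hypothetical placeholder.

```python
def combined_columns(rows: list[dict]) -> list[str]:
    """Return the sorted union of keys across all rows (the table's superset schema)."""
    columns: set[str] = set()
    for row in rows:
        columns.update(row.keys())
    return sorted(columns)


def pad_row(row: dict, columns: list[str]) -> dict:
    """Return the row with every table column present; columns it lacks become None."""
    return {column: row.get(column) for column in columns}


# Hypothetical rows from two resource types that share one table.
rows = [
    {"resourceId": "/SUBSCRIPTIONS/SUB1/RESOURCEGROUPS/RG1/PROVIDERS/PROVIDER.A/TYPEA/RES1",
     "category": "CategoryA", "time": "2024-01-01T00:00:00Z", "fieldOnlyInA": "x"},
    {"resourceId": "/SUBSCRIPTIONS/SUB1/RESOURCEGROUPS/RG1/PROVIDERS/PROVIDER.B/TYPEB/RES2",
     "category": "CategoryB", "time": "2024-01-01T00:05:00Z", "fieldOnlyInB": 42},
]

columns = combined_columns(rows)
print(columns)  # ['category', 'fieldOnlyInA', 'fieldOnlyInB', 'resourceId', 'time']
for row in rows:
    print(pad_row(row, columns))
```

Each additional resource type adds its own columns to the shared schema, and every row leaves the other types' columns empty, which is one reason the table can grow very wide.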

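Select the collection mode

Most Azure resources write data to the workspace in either Azure diagnostics mode or resource-specific mode without giving you a choice. For more information, see Common and service-specific schemas for Azure resource logs.

All Azure services will eventually use resource-specific mode. As part of this transition, some resources allow you to select a mode in the diagnostic setting. Specify resource-specific mode for any new diagnostic settings because this mode makes the data easier to manage. It also might help you avoid complex migrations later.

You can modify an existing diagnostic setting to use resource-specific mode. In this case, data that was already collected remains in the AzureDiagnostics table until it's removed according to the workspace's retention setting. New data is collected in the dedicated table. Use the union operator to query data across both tables.

Watch the Azure Updates blog for announcements about Azure services that support resource-specific mode.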
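The effect of switching an existing setting can be pictured with a small in-memory Python sketch: older rows stay in AzureDiagnostics, newer rows land in a dedicated table, and reading both together, which is what the union operator does in a Log Analytics query, yields one continuous history. The table name MyServiceLogs and all row fields are hypothetical, and this only mimics the idea; in a workspace you would write the union in KQL.

```python
from datetime import datetime

# Hypothetical rows collected before the switch (legacy shared table).
azure_diagnostics = [
    {"TimeGenerated": datetime(2024, 1, 1, 9, 0), "OperationName": "Read"},
    {"TimeGenerated": datetime(2024, 1, 1, 10, 0), "OperationName": "Write"},
]

# Hypothetical rows collected after the switch (dedicated resource-specific table).
my_service_logs = [
    {"TimeGenerated": datetime(2024, 1, 1, 11, 0), "OperationName": "Delete"},
]


def union_tables(**tables: list[dict]) -> list[dict]:
    """Concatenate rows from several tables, tagging each row with its source table.

    Rows are sorted by time here only for readability; this is not a claim
    about the ordering of KQL union results.
    """
    combined = [
        {**row, "SourceTable": name}
        for name, rows in tables.items()
        for row in rows
    ]
    return sorted(combined, key=lambda row: row["TimeGenerated"])


for row in union_tables(AzureDiagnostics=azure_diagnostics, MyServiceLogs=my_service_logs):
    print(row["TimeGenerated"].isoformat(), row["SourceTable"], row["OperationName"])
```

Querying only the dedicated table after the switch would miss the rows collected before it, which is why the union is needed until the old rows age out of retention.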

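Send resource logs to an event hub to send them outside of Azure. For example, resource logs might be sent to a third-party SIEM or another log analytics solution.

Resource logs from event hubs are consumed in JSON format, with a records element that contains the records in each payload. The schema depends on the resource type, as described in Common and service-specific schemas for Azure resource logs.

The following sample output data is from Azure Event Hubs for a resource log: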

```json
{
  "records": [
    {
      "time": "2019-07-15T18:00:22.6235064Z",
      "workflowId": "/SUBSCRIPTIONS/AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E/RESOURCEGROUPS/JOHNKEMTEST/PROVIDERS/MICROSOFT.LOGIC/WORKFLOWS/JOHNKEMTESTLA",
      "resourceId": "/SUBSCRIPTIONS/AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E/RESOURCEGROUPS/JOHNKEMTEST/PROVIDERS/MICROSOFT.LOGIC/WORKFLOWS/JOHNKEMTESTLA/RUNS/08587330013509921957/ACTIONS/SEND_EMAIL",
      "category": "WorkflowRuntime",
      "level": "Error",
      "operationName": "Microsoft.Logic/workflows/workflowActionCompleted",
      "properties": {
        "$schema": "2016-04-01-preview",
        "startTime": "2016-07-15T17:58:55.048482Z",
        "endTime": "2016-07-15T18:00:22.4109204Z",
        "status": "Failed",
        "code": "BadGateway",
        "resource": {
          "subscriptionId": "AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E",
          "resourceGroupName": "JohnKemTest",
          "workflowId": "2222cccc-33dd-eeee-ff44-aaaaaa555555",
          "workflowName": "JohnKemTestLA",
          "runId": "08587330013509921957",
          "location": "chinanorth",
          "actionName": "Send_email"
        },
        "correlation": {
          "actionTrackingId": "3333dddd-44ee-ffff-aa55-bbbbbbbb6666",
          "clientTrackingId": "08587330013509921958"
        }
      }
    },
    {
      "time": "2019-07-15T18:01:15.7532989Z",
      "workflowId": "/SUBSCRIPTIONS/AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E/RESOURCEGROUPS/JOHNKEMTEST/PROVIDERS/MICROSOFT.LOGIC/WORKFLOWS/JOHNKEMTESTLA",
      "resourceId": "/SUBSCRIPTIONS/AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E/RESOURCEGROUPS/JOHNKEMTEST/PROVIDERS/MICROSOFT.LOGIC/WORKFLOWS/JOHNKEMTESTLA/RUNS/08587330012106702630/ACTIONS/SEND_EMAIL",
      "category": "WorkflowRuntime",
      "level": "Information",
      "operationName": "Microsoft.Logic/workflows/workflowActionStarted",
      "properties": {
        "$schema": "2016-04-01-preview",
        "startTime": "2016-07-15T18:01:15.5828115Z",
        "status": "Running",
        "resource": {
          "subscriptionId": "AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E",
          "resourceGroupName": "JohnKemTest",
          "workflowId": "dddd3333-ee44-5555-66ff-777777aaaaaa",
          "workflowName": "JohnKemTestLA",
          "runId": "08587330012106702630",
          "location": "chinanorth",
          "actionName": "Send_email"
        },
        "correlation": {
          "actionTrackingId": "ffff5555-aa66-7777-88bb-999999cccccc",
          "clientTrackingId": "08587330012106702632"
        }
      }
    }
  ]
}
```
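A consumer that receives these messages usually unwraps the records array before processing individual entries. The following Python sketch, using only the standard json module, does that for a trimmed copy of the sample above and picks out failed actions. It assumes the message body has already been read from the event hub as a string (no Event Hubs SDK calls are shown), the function names are illustrative, and the shape of properties is specific to this Logic Apps sample.

```python
import json
from typing import Iterator

# Trimmed copy of the sample payload above (only the fields used here).
PAYLOAD = """
{
  "records": [
    {"time": "2019-07-15T18:00:22.6235064Z", "category": "WorkflowRuntime", "level": "Error",
     "properties": {"status": "Failed", "code": "BadGateway",
                    "resource": {"workflowName": "JohnKemTestLA", "actionName": "Send_email"}}},
    {"time": "2019-07-15T18:01:15.7532989Z", "category": "WorkflowRuntime", "level": "Information",
     "properties": {"status": "Running",
                    "resource": {"workflowName": "JohnKemTestLA", "actionName": "Send_email"}}}
  ]
}
"""


def iter_records(body: str) -> Iterator[dict]:
    """Yield each resource log record from one Event Hubs message body."""
    yield from json.loads(body).get("records", [])


def failed_actions(body: str) -> list[tuple[str, str, str]]:
    """Return (time, actionName, code) for records whose status is Failed."""
    result = []
    for record in iter_records(body):
        props = record.get("properties", {})  # schema varies by resource type
        if props.get("status") == "Failed":
            action = props.get("resource", {}).get("actionName", "")
            result.append((record["time"], action, props.get("code", "")))
    return result


print(failed_actions(PAYLOAD))
# [('2019-07-15T18:00:22.6235064Z', 'Send_email', 'BadGateway')]
```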

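Send resource logs to Azure Storage to retain them for archiving. After you create the diagnostic setting, a storage container is created in the storage account as soon as an event occurs in one of the enabled log categories.

Note

As an alternative to archiving, you can send resource logs to a table with low-cost, long-term retention in a Log Analytics workspace.

The blobs within the container use the following naming convention: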

insights-logs-{log category name}/resourceId=/SUBSCRIPTIONS/{subscription ID}/RESOURCEGROUPS/{resource group name}/PROVIDERS/{resource provider name}/{resource type}/{resource name}/y={four-digit numeric year}/m={two-digit numeric month}/d={two-digit numeric day}/h={two-digit 24-hour clock hour}/m=00/PT1H.json
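The naming convention is regular enough to assemble in code. The following Python sketch builds a blob name from its parts; the function and parameter names are hypothetical, the category is lowercased to match the network security group example, other segments are used exactly as given, and the timestamp is assumed to be UTC. It does not call any Azure Storage API.

```python
from datetime import datetime


def resource_log_blob_name(
    category: str,
    subscription_id: str,
    resource_group: str,
    provider: str,
    resource_type: str,
    resource_name: str,
    hour: datetime,
) -> str:
    """Build the PT1H.json blob path for one hour of one log category."""
    # The minute segment is always m=00 because each blob covers a whole hour,
    # so the minutes and seconds of `hour` are ignored.
    return (
        f"insights-logs-{category.lower()}"
        f"/resourceId=/SUBSCRIPTIONS/{subscription_id}"
        f"/RESOURCEGROUPS/{resource_group}"
        f"/PROVIDERS/{provider}/{resource_type}/{resource_name}"
        f"/y={hour:%Y}/m={hour:%m}/d={hour:%d}/h={hour:%H}/m=00/PT1H.json"
    )


name = resource_log_blob_name(
    "NetworkSecurityGroupRuleCounter",
    "aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e",
    "TESTRESOURCEGROUP",
    "MICROSOFT.NETWORK",
    "NETWORKSECURITYGROUP",
    "TESTNSG",
    datetime(2016, 8, 22, 18, 30),
)
print(name)
```

With the values from the network security group example, the sketch reproduces that example's blob name; the 30 minutes in the timestamp do not appear in the result.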

The blob for a network security group might have a name similar to the following example:

insights-logs-networksecuritygrouprulecounter/resourceId=/SUBSCRIPTIONS/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/RESOURCEGROUPS/TESTRESOURCEGROUP/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUP/TESTNSG/y=2016/m=08/d=22/h=18/m=00/PT1H.json

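Each PT1H.json blob contains a JSON object with events from log files that were received during the hour specified in the blob URL. During the present hour, events are appended to the PT1H.json file as they're received, regardless of when they were generated. The minute value in the URL, m=00, is always 00 because blobs are created once per hour.

Within the PT1H.json file, each event is stored in the following format. It uses a common top-level schema, but its properties are unique to each Azure service, as described in Resource log schema.

Note

Logs are written to blobs based on the time the log was received, regardless of the time it was generated. This means that a given blob can contain log data from outside the hour specified in the blob URL. If a data source such as Application Insights supports uploading stale telemetry, a blob can contain data from the previous 48 hours.

At the start of a new hour, existing logs might still be written to the previous hour's blob while new logs are written to the new hour's blob.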

```json
{"time": "2016-07-01T00:00:37.2040000Z","systemId": "a0a0a0a0-bbbb-cccc-dddd-e1e1e1e1e1e1","category": "NetworkSecurityGroupRuleCounter","resourceId": "/SUBSCRIPTIONS/AAAA0A0A-BB1B-CC2C-DD3D-EEEEEE4E4E4E/RESOURCEGROUPS/TESTRESOURCEGROUP/PROVIDERS/MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/TESTNSG","operationName": "NetworkSecurityGroupCounters","properties": {"vnetResourceGuid": "{aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e}","subnetPrefix": "10.3.0.0/24","macAddress": "000123456789","ruleName": "/subscriptions/aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/resourceGroups/testresourcegroup/providers/Microsoft.Network/networkSecurityGroups/testnsg/securityRules/default-allow-rdp","direction": "In","type": "allow","matchedConnections": 1988}}
```
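Because each event in the sample is a complete JSON object on a single line, a downloaded PT1H.json blob can be processed line by line. The following Python sketch assumes the blob content is already available as text with one event per line (no Azure Storage SDK calls are shown), reads the hour from the y=/m=/d=/h= segments of the blob name, and reports events whose time falls outside that hour, which the note about receive time says can happen. Function names and the second, earlier event are hypothetical.

```python
import json
import re
from datetime import datetime, timedelta, timezone

# Matches the y=/m=/d=/h= segments at the end of the documented blob naming convention.
_HOUR_PATTERN = re.compile(r"/y=(\d{4})/m=(\d{2})/d=(\d{2})/h=(\d{2})/m=00/PT1H\.json$")


def blob_hour(blob_name: str) -> datetime:
    """Return the start of the hour encoded in a PT1H.json blob name (assumed UTC)."""
    match = _HOUR_PATTERN.search(blob_name)
    if match is None:
        raise ValueError(f"not a PT1H.json blob name: {blob_name}")
    year, month, day, hour = (int(part) for part in match.groups())
    return datetime(year, month, day, hour, tzinfo=timezone.utc)


def parse_event_time(value: str) -> datetime:
    """Parse UTC times such as 2016-07-01T00:00:37.2040000Z (seven fractional digits)."""
    # Older datetime.fromisoformat versions reject seven fractional digits, so the
    # fraction is truncated to microseconds and the trailing Z is handled manually.
    date_part, _, fraction = value.rstrip("Z").partition(".")
    parsed = datetime.strptime(date_part, "%Y-%m-%dT%H:%M:%S")
    micros = int((fraction + "000000")[:6]) if fraction else 0
    return parsed.replace(microsecond=micros, tzinfo=timezone.utc)


def events_outside_hour(blob_name: str, content: str) -> list[dict]:
    """Return events from a PT1H.json blob whose time is outside the blob's hour."""
    start = blob_hour(blob_name)
    end = start + timedelta(hours=1)
    outside = []
    for line in content.splitlines():
        if not line.strip():
            continue  # skip blank lines
        event = json.loads(line)
        if not start <= parse_event_time(event["time"]) < end:
            outside.append(event)
    return outside


BLOB = ("insights-logs-networksecuritygrouprulecounter/resourceId=/SUBSCRIPTIONS/"
        "aaaa0a0a-bb1b-cc2c-dd3d-eeeeee4e4e4e/RESOURCEGROUPS/TESTRESOURCEGROUP/PROVIDERS/"
        "MICROSOFT.NETWORK/NETWORKSECURITYGROUP/TESTNSG/y=2016/m=07/d=01/h=00/m=00/PT1H.json")
CONTENT = "\n".join([
    '{"time": "2016-07-01T00:00:37.2040000Z", "category": "NetworkSecurityGroupRuleCounter"}',
    '{"time": "2016-06-30T23:59:58.0000000Z", "category": "NetworkSecurityGroupRuleCounter"}',
])

print([event["time"] for event in events_outside_hour(BLOB, CONTENT)])
# ['2016-06-30T23:59:58.0000000Z']
```

Comparing event times against the blob's hour, rather than trusting the blob name alone, is what lets a consumer notice late-arriving data.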