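Host network requirements for Azure Stack HCI

Applies to: Azure Stack HCI, version 22H2

This topic discusses host networking considerations and requirements for Azure Stack HCI. For information on datacenter architectures and the physical connections between servers, see Physical network requirements.

For information on how to simplify host networking using Network ATC, see Simplify host networking with Network ATC.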

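Network traffic types

Azure Stack HCI network traffic can be classified by its intended purpose:

- Management traffic: Traffic to or from outside the local cluster. Examples include Storage Replica traffic and traffic that administrators use to manage the cluster, such as Remote Desktop, Windows Admin Center, and Active Directory.

- Compute traffic: Traffic originating from or destined to a virtual machine (VM).

- Storage traffic: Traffic that uses Server Message Block (SMB), for example Storage Spaces Direct or SMB-based live migration. This traffic is layer-2 traffic and is not routable.

Important

Storage Replica uses non-RDMA based SMB traffic. This, together with the directional nature of the traffic (North-South), aligns it closely with the "management" traffic listed above, similar to a traditional file share.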

选择网络适配器

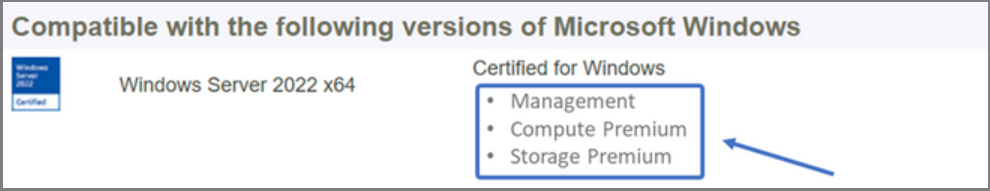

网络适配器由支持与它们一起使用的网络流量类型(见上文)确定是否符合资格。 查看 Windows Server 目录时,Windows Server 2022 认证现在会指示以下一个或多个角色。 在为 Azure Stack HCI 购买服务器之前,必须至少拥有一个符合管理、计算和存储资格的适配器,因为 Azure Stack HCI 上同时需要这三种流量类型。 然后,可以使用网络 ATC 针对相应的流量类型配置适配器。

若要详细了解这项基于角色的 NIC 资格,请参阅此 Windows Server 博客文章。

重要

不支持将适配器用于其符合资格的流量类型范围之外。

| Level | 管理角色 | 计算角色 | 存储角色 |

|---|---|---|---|

| 区别(基于角色) | 管理 | 计算标准 | 存储标准 |

| 最高奖金 | 不适用 | 计算高级 | 存储高级 |

注意

生态系统中任何适配器的最高资格将包含“管理”、“计算高级”和“存储高级”资格。

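Driver requirements

Inbox drivers are not supported for use with Azure Stack HCI. To identify whether your adapter is using an inbox driver, run the following cmdlet. An adapter is using an inbox driver if the DriverProvider property is Microsoft.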

Get-NetAdapter -Name <AdapterName> | Select *Driver*

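Overview of key network adapter capabilities

Important network adapter capabilities used by Azure Stack HCI include:

- Dynamic Virtual Machine Multi-Queue (Dynamic VMMQ or d.VMMQ)

- Remote Direct Memory Access (RDMA)

- Guest RDMA

- Switch Embedded Teaming (SET)

Dynamic VMMQ

All network adapters with the Compute (Premium) qualification support Dynamic VMMQ. Dynamic VMMQ requires the use of Switch Embedded Teaming.

Applicable traffic types: compute

Certifications required: Compute (Premium)

Dynamic VMMQ is an intelligent, receive-side technology. It builds on its predecessors, Virtual Machine Queue (VMQ), Virtual Receive Side Scaling (vRSS), and VMMQ, to provide three primary improvements:

- Optimizes host efficiency by using fewer CPU cores.

- Automatically tunes the processing of network traffic across CPU cores, which enables VMs to meet and maintain their expected throughput.

- Enables "bursty" workloads to receive the expected amount of traffic.

For more information on Dynamic VMMQ, see the blog post Synthetic Accelerations.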

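RDMA

RDMA is a network stack offload to the network adapter. It allows SMB storage traffic to bypass the operating system for processing.

RDMA enables high-throughput, low-latency networking while using minimal host CPU resources. These host CPU resources can then be used to run additional VMs or containers.

Applicable traffic types: host storage

Certifications required: Storage (Standard)

All adapters with the Storage (Standard) or Storage (Premium) qualification support host-side RDMA. For more information on using RDMA with guest workloads, see the "Guest RDMA" section later in this article.

Azure Stack HCI supports RDMA with either the Internet Wide Area RDMA Protocol (iWARP) or RDMA over Converged Ethernet (RoCE) protocol implementations.

Important

RDMA adapters only work with other RDMA adapters that implement the same RDMA protocol (iWARP or RoCE).

Not all network adapters from vendors support RDMA. The following table lists, in alphabetical order, vendors that offer certified RDMA adapters. Hardware vendors that are not included in this list may also support RDMA. See the Windows Server Catalog to find adapters with the Storage (Standard) or Storage (Premium) qualification, which require RDMA support.

Note

InfiniBand (IB) is not supported with Azure Stack HCI.

| NIC vendor | iWARP | RoCE |
|---|---|---|
| Broadcom | No | Yes |
| Intel | Yes | Yes (some models) |
| Marvell (Qlogic) | Yes | Yes |
| Nvidia | No | Yes |

To deploy RDMA for the host, we highly recommend that you use Network ATC. For information on manual deployment, see the SDN GitHub repo.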

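iWARP

iWARP uses Transmission Control Protocol (TCP), and can be optionally enhanced with Priority-based Flow Control (PFC) and Enhanced Transmission Selection (ETS).

Use iWARP if:

- You don't have experience managing RDMA networks.

- You don't manage, or are uncomfortable managing, your top-of-rack (ToR) switches.

- You won't be managing the solution after deployment.

- You already have deployments that use iWARP.

- You're unsure which option to choose.

RoCE

RoCE uses User Datagram Protocol (UDP), and requires PFC and ETS to provide reliability.

Use RoCE if:

- You already have deployments with RoCE in your datacenter.

- You're comfortable managing the DCB network requirements.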

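Guest RDMA

Guest RDMA enables SMB workloads for VMs to gain the same benefits as using RDMA on hosts.

Applicable traffic types: guest-based storage

Certifications required: Compute (Premium)

The primary benefits of using Guest RDMA are:

- CPU offload to the NIC for network traffic processing.

- Extremely low latency.

- High throughput.

For more information, download the document from the SDN GitHub repo.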

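Switch Embedded Teaming (SET)

SET is a software-based teaming technology that has been included in the Windows Server operating system since Windows Server 2016. SET is the only teaming technology supported by Azure Stack HCI. SET works well with compute, storage, and management traffic, and is supported with up to eight adapters in the same team.

Applicable traffic types: compute, storage, and management

Certifications required: Compute (Standard) or Compute (Premium)

Important

Azure Stack HCI doesn't support NIC teaming with the older Load Balancing/Failover (LBFO). For more information on LBFO in Azure Stack HCI, see the blog post Teaming in Azure Stack HCI.

SET is important for Azure Stack HCI because it's the only teaming technology that enables:

- Teaming of RDMA adapters (if needed).

- Guest RDMA.

- Dynamic VMMQ.

- Other key Azure Stack HCI features (see Teaming in Azure Stack HCI).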

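SET requires the use of symmetric (identical) adapters. Symmetric network adapters are those that have the same:

- Make (vendor)

- Model (version)

- Speed (throughput)

- Configuration

In version 22H2, Network ATC automatically detects and informs you if the adapters you've chosen are asymmetric. The easiest way to manually identify whether adapters are symmetric is to check that the speeds and interface descriptions match exactly. They can deviate only in the numeral listed in the description. Use the Get-NetAdapterAdvancedProperty cmdlet to ensure that the reported configuration lists the same property values.

See the following table for an example of interface descriptions that deviate only by numeral (#):

| Name | Interface description | Link speed |
|---|---|---|
| NIC1 | Network Adapter #1 | 25 Gbps |
| NIC2 | Network Adapter #2 | 25 Gbps |
| NIC3 | Network Adapter #3 | 25 Gbps |
| NIC4 | Network Adapter #4 | 25 Gbps |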
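The manual symmetry check described above can be sketched in a few lines. The following Python sketch is illustrative only: the `Adapter` record and the `is_symmetric` and `normalize_description` helpers are hypothetical names, and the input mirrors the columns of the table rather than the output of any real cmdlet. It treats adapters as symmetric when their link speeds match and their interface descriptions differ only in the trailing `#N` numeral.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Adapter:
    """Hypothetical record mirroring the columns of the table above."""
    name: str
    interface_description: str
    link_speed_gbps: int


def normalize_description(description: str) -> str:
    """Drop a trailing '#<number>' so only the model text is compared."""
    return re.sub(r"\s*#\d+\s*$", "", description).strip()


def is_symmetric(adapters: list[Adapter]) -> bool:
    """True if all adapters share one speed and one description,
    ignoring the numeral at the end of the description."""
    if not adapters:
        return False
    speeds = {a.link_speed_gbps for a in adapters}
    models = {normalize_description(a.interface_description) for a in adapters}
    return len(speeds) == 1 and len(models) == 1


team = [
    Adapter("NIC1", "Network Adapter #1", 25),
    Adapter("NIC2", "Network Adapter #2", 25),
    Adapter("NIC3", "Network Adapter #3", 25),
    Adapter("NIC4", "Network Adapter #4", 25),
]
print(is_symmetric(team))  # True
```

This covers only the quick check on speed and description. It does not compare the advanced property values that Get-NetAdapterAdvancedProperty reports.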

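Note

SET supports only switch-independent configuration, using either the Dynamic or the Hyper-V Port load-balancing algorithm. For best performance, Hyper-V Port is recommended for all NICs that operate at or above 10 Gbps. Network ATC makes all the required configurations for SET.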

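RDMA traffic considerations

If you implement DCB, you must ensure that the PFC and ETS configuration is implemented properly across every network port, including network switches. DCB is required for RoCE and optional for iWARP.

For detailed information on how to deploy RDMA, download the document from the SDN GitHub repo.

RoCE-based Azure Stack HCI implementations require the configuration of three PFC traffic classes, including the default traffic class, across the fabric and all hosts.

Cluster traffic class

This traffic class ensures that enough bandwidth is reserved for cluster heartbeats:

- Required: Yes

- PFC-enabled: No

- Recommended traffic priority: Priority 7

- Recommended bandwidth reservation:

  - 10 GbE or lower RDMA networks = 2 percent

  - 25 GbE or higher RDMA networks = 1 percent

RDMA traffic class

This traffic class ensures that enough bandwidth is reserved for lossless RDMA communication using SMB Direct:

- Required: Yes

- PFC-enabled: Yes

- Recommended traffic priority: Priority 3 or 4

- Recommended bandwidth reservation: 50 percent

Default traffic class

This traffic class carries all other traffic not defined in the cluster or RDMA traffic classes, including VM traffic and management traffic:

- Required: By default (no configuration necessary on the host)

- Flow control (PFC)-enabled: No

- Recommended traffic class: By default (Priority 0)

- Recommended bandwidth reservation: By default (no host configuration required)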
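The three traffic classes above can be summarized as a small data model. The sketch below is a hypothetical illustration in Python, not a configuration tool: the `TrafficClass` type and the `recommended_classes` and `cluster_heartbeat_percent` functions are invented names, and the default RDMA priority of 3 is simply one of the two recommended values. The article does not say what to reserve for link speeds between 10 GbE and 25 GbE, so the sketch rejects them rather than guess.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TrafficClass:
    name: str
    priority: int
    pfc_enabled: bool
    bandwidth_percent: Optional[int]  # None means "left at the default"


def cluster_heartbeat_percent(link_speed_gbe: int) -> int:
    """Recommended cluster reservation: 2% at 10 GbE or lower, 1% at 25 GbE or higher."""
    if link_speed_gbe <= 10:
        return 2
    if link_speed_gbe >= 25:
        return 1
    raise ValueError("no recommendation is given between 10 GbE and 25 GbE")


def recommended_classes(link_speed_gbe: int, rdma_priority: int = 3) -> list[TrafficClass]:
    """Build the cluster, RDMA, and default classes for one link speed."""
    if rdma_priority not in (3, 4):
        raise ValueError("the recommended RDMA priority is 3 or 4")
    return [
        TrafficClass("Cluster", 7, False, cluster_heartbeat_percent(link_speed_gbe)),
        TrafficClass("RDMA", rdma_priority, True, 50),
        TrafficClass("Default", 0, False, None),
    ]


for traffic_class in recommended_classes(25):
    print(traffic_class)
```

Only the RDMA class has PFC enabled, which matches the requirement that lossless behavior applies to SMB Direct traffic and not to heartbeats or default traffic.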

存储流量模型

作为 Azure Stack HCI 的存储协议,SMB 能够提供诸多优势,包括 SMB 多通道。 SMB 多通道不在本文涵盖范围内,但我们必须了解,流量是通过 SMB 多通道可使用的每条可能链路来多路传送的。

注意

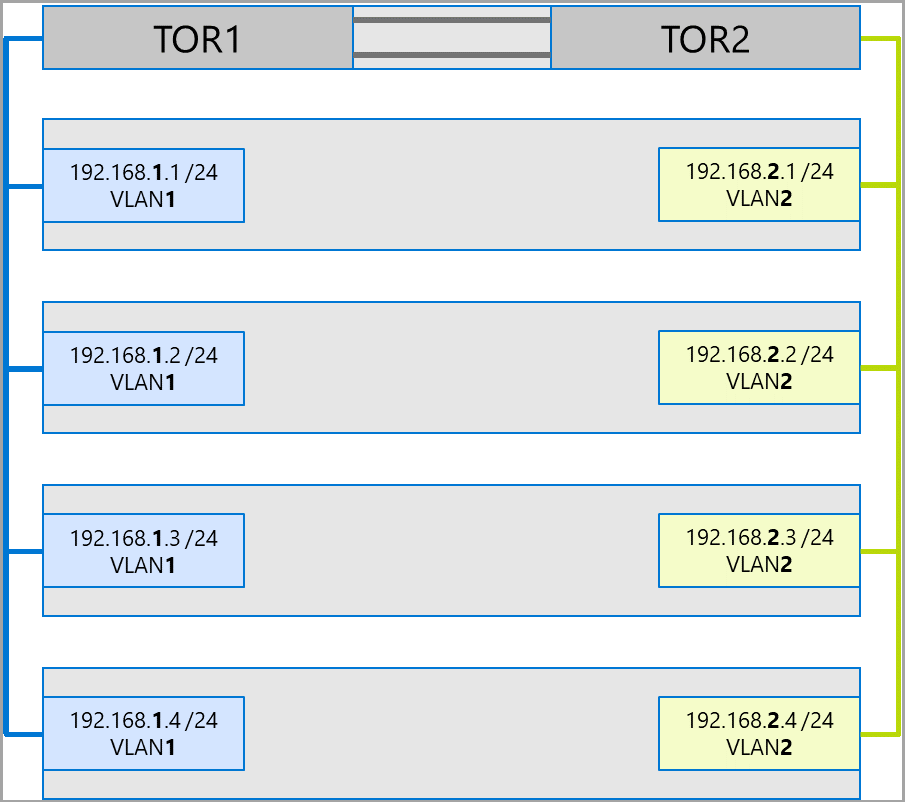

我们建议使用多个子网和 VLAN 在 Azure Stack HCI 中隔离存储流量。

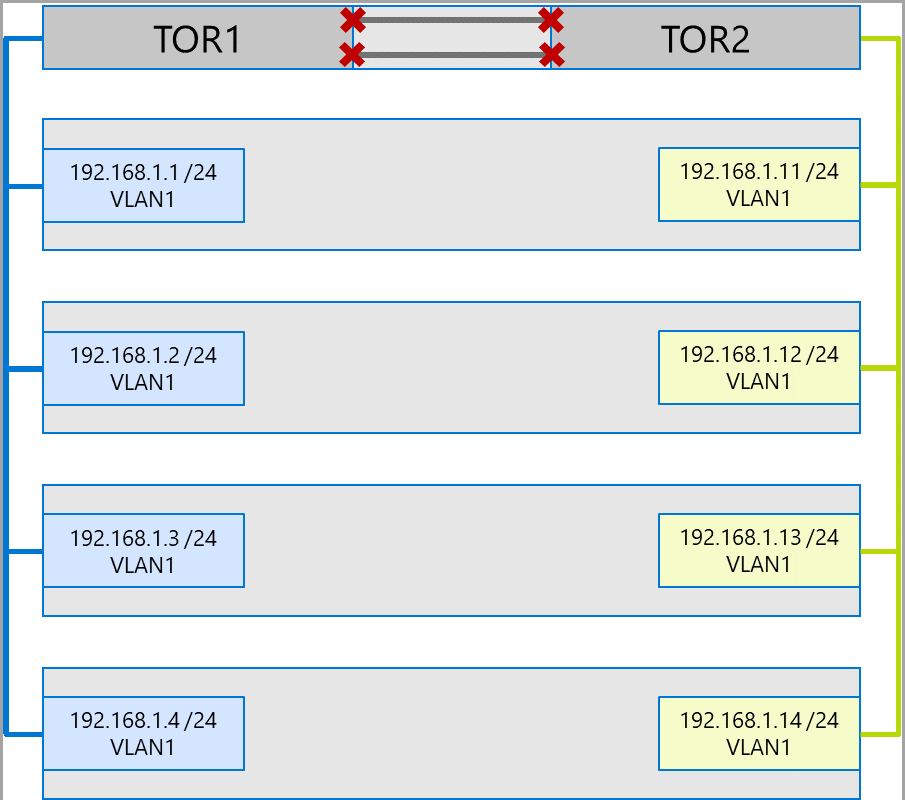

考虑以下四节点群集示例。 每台服务器有两个存储端口(左侧和右侧)。 由于每个适配器位于同一子网和 VLAN 中,SMB 多通道会将连接分散到所有可用链路。 因此,第一台服务器上的左侧端口 (192.168.1.1) 将连接到第二台服务器上的左侧端口 (192.168.1.2)。 第一台服务器上的右侧端口 (192.168.1.12) 将连接到第二台服务器上的右侧端口。 为第三和第四台服务器建立了类似的连接。

但是,这会建立不必要的连接,导致连接 ToR 交换机的互连链路(多机箱链路聚合组 (MC-LAG))发生流量拥塞(标有 X)。 参阅下图:

建议的方法是对每组适配器使用不同的子网和 VLAN。 在下图中,右侧端口现在使用子网 192.168.2.x /24 和 VLAN2。 这样,左侧端口上的流量便可以保留在 TOR1 上,右侧端口上的流量可以保留在 TOR2 上。
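To make the effect of the two layouts concrete, the following Python sketch lists which storage port pairs between two servers share a subnet and could therefore be used by SMB Multichannel. It is a simplified model under stated assumptions: reachability is approximated as "same subnet", the helper name `same_subnet_pairs` is hypothetical, and the addresses 192.168.1.13, 192.168.2.12, and 192.168.2.13 are invented for illustration (the article gives only 192.168.1.1, 192.168.1.2, and 192.168.1.12).

```python
from ipaddress import ip_interface
from itertools import product


def same_subnet_pairs(server_a: dict[str, str], server_b: dict[str, str]) -> list[tuple[str, str]]:
    """Return (port on A, port on B) pairs whose addresses share a subnet."""
    pairs = []
    for (port_a, addr_a), (port_b, addr_b) in product(server_a.items(), server_b.items()):
        if ip_interface(addr_a).network == ip_interface(addr_b).network:
            pairs.append((port_a, port_b))
    return pairs


# Layout 1: every storage port in one subnet (addresses partly assumed).
single_subnet_a = {"left": "192.168.1.1/24", "right": "192.168.1.12/24"}
single_subnet_b = {"left": "192.168.1.2/24", "right": "192.168.1.13/24"}

# Layout 2: one subnet per side (right-side addresses are assumed).
split_a = {"left": "192.168.1.1/24", "right": "192.168.2.12/24"}
split_b = {"left": "192.168.1.2/24", "right": "192.168.2.13/24"}

print(same_subnet_pairs(single_subnet_a, single_subnet_b))
# [('left', 'left'), ('left', 'right'), ('right', 'left'), ('right', 'right')]
print(same_subnet_pairs(split_a, split_b))
# [('left', 'left'), ('right', 'right')]
```

In the single-subnet layout, the left-to-right and right-to-left pairs are the ones that would have to cross the interlink between the ToR switches. With one subnet per side, those pairs are no longer candidates.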

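Traffic bandwidth allocation

The following table shows example bandwidth allocations for various traffic types, using common adapter speeds, in Azure Stack HCI. Note that this is an example of a converged solution, where all traffic types (compute, storage, and management) run over the same physical adapters and are teamed by using SET.

Because this use case poses the most constraints, it represents a good baseline. However, considering the permutations for the number of adapters and speeds, treat this as an example and not as a support requirement.

The following assumptions are made for this example:

- There are two adapters per team.

- Storage Bus Layer (SBL), Cluster Shared Volume (CSV), and Hyper-V (Live Migration) traffic:

  - Use the same physical adapters.

  - Use SMB.

- SMB is given a 50 percent bandwidth allocation by using DCB.

  - SBL/CSV is the highest-priority traffic and receives 70 percent of the SMB bandwidth reservation.

  - Live Migration (LM) is limited by using the Set-SMBBandwidthLimit cmdlet and receives 29 percent of the remaining bandwidth.

    - If the available bandwidth for Live Migration is >= 5 Gbps and the network adapters are capable, use RDMA. Use the following cmdlet to do so:

      Set-VMHost -VirtualMachineMigrationPerformanceOption SMB

    - If the available bandwidth for Live Migration is < 5 Gbps, use compression to reduce blackout times. Use the following cmdlet to do so:

      Set-VMHost -VirtualMachineMigrationPerformanceOption Compression

- If you're using RDMA for Live Migration traffic, use an SMB bandwidth limit to ensure that Live Migration traffic can't consume the entire bandwidth allocated to the RDMA traffic class. Be careful, because this cmdlet takes its input in bytes per second (Bps), whereas network adapter speeds are listed in bits per second (bps). For example, use the following cmdlet to set a bandwidth limit of 6 Gbps:

  Set-SMBBandwidthLimit -Category LiveMigration -BytesPerSecond 750MB

Note

750 MBps in this example equates to 6 Gbps.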
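Because the mismatch between bits and bytes is easy to get wrong, here is a small Python sketch of the conversion. The function name `gbps_to_bytes_per_second` is hypothetical, and the sketch assumes decimal units (1 Gbps = 1,000,000,000 bits per second), which is the convention under which 6 Gbps equals 750 MBps.

```python
def gbps_to_bytes_per_second(gbps: int) -> int:
    """Convert a link rate in gigabits per second to bytes per second.

    Assumes decimal prefixes: 1 Gbps = 1_000_000_000 bits per second.
    """
    bits_per_second = gbps * 1_000_000_000
    return bits_per_second // 8


limit = gbps_to_bytes_per_second(6)
print(limit)               # 750000000
print(limit // 1_000_000)  # 750 (decimal megabytes per second)
```

One caveat worth checking on your own system: the PowerShell numeric suffix `MB` denotes 1,048,576 bytes, so the literal `750MB` evaluates to 786,432,000 bytes per second, slightly more than the decimal figure computed here.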

Here is the example bandwidth allocation table:

| NIC speed | Teamed bandwidth | SMB bandwidth reservation** | SBL/CSV % | SBL/CSV bandwidth | Live Migration % | Max Live Migration bandwidth | Heartbeat % | Heartbeat bandwidth |
|---|---|---|---|---|---|---|---|---|
| 10 Gbps | 20 Gbps | 10 Gbps | 70% | 7 Gbps | * | * | 2% | 200 Mbps |
| 25 Gbps | 50 Gbps | 25 Gbps | 70% | 17.5 Gbps | 29% | 7.25 Gbps | 1% | 250 Mbps |
| 40 Gbps | 80 Gbps | 40 Gbps | 70% | 28 Gbps | 29% | 11.6 Gbps | 1% | 400 Mbps |
| 50 Gbps | 100 Gbps | 50 Gbps | 70% | 35 Gbps | 29% | 14.5 Gbps | 1% | 500 Mbps |
| 100 Gbps | 200 Gbps | 100 Gbps | 70% | 70 Gbps | 29% | 29 Gbps | 1% | 1 Gbps |
| 200 Gbps | 400 Gbps | 200 Gbps | 70% | 140 Gbps | 29% | 58 Gbps | 1% | 2 Gbps |

\* Use compression rather than RDMA, because the bandwidth allocation for Live Migration traffic is < 5 Gbps.

\*\* 50 percent is an example bandwidth reservation.
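The rows of the table follow from a few multiplications, which the Python sketch below reproduces for the rows that list explicit percentages. It is an illustration under the example's assumptions (two adapters per team, a 50 percent SMB reservation, and a 70/29/1 percent split of that reservation). The `allocate` function and its field names are hypothetical, and the 5 Gbps threshold is applied only to suggest a Live Migration performance option. Values are kept in Mbps so the arithmetic stays in integers.

```python
def allocate(nic_gbps: int, adapters_per_team: int = 2, smb_percent: int = 50,
             sbl_csv_percent: int = 70, live_migration_percent: int = 29,
             heartbeat_percent: int = 1) -> dict[str, object]:
    """Return example bandwidth allocations in Mbps for one NIC speed."""
    team_mbps = nic_gbps * 1000 * adapters_per_team
    smb_mbps = team_mbps * smb_percent // 100
    live_migration_mbps = smb_mbps * live_migration_percent // 100
    return {
        "team_mbps": team_mbps,
        "smb_mbps": smb_mbps,
        "sbl_csv_mbps": smb_mbps * sbl_csv_percent // 100,
        "live_migration_mbps": live_migration_mbps,
        "heartbeat_mbps": smb_mbps * heartbeat_percent // 100,
        # At or above 5 Gbps the example uses SMB (RDMA); below it, compression.
        "migration_option": "SMB" if live_migration_mbps >= 5000 else "Compression",
    }


for speed in (25, 40, 50, 100, 200):
    print(speed, allocate(speed))
```

For a 25 Gbps NIC this yields 50,000 Mbps teamed, 25,000 Mbps for SMB, 17,500 Mbps for SBL/CSV, 7,250 Mbps for Live Migration, and 250 Mbps for heartbeats, matching the corresponding table row.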

延伸群集

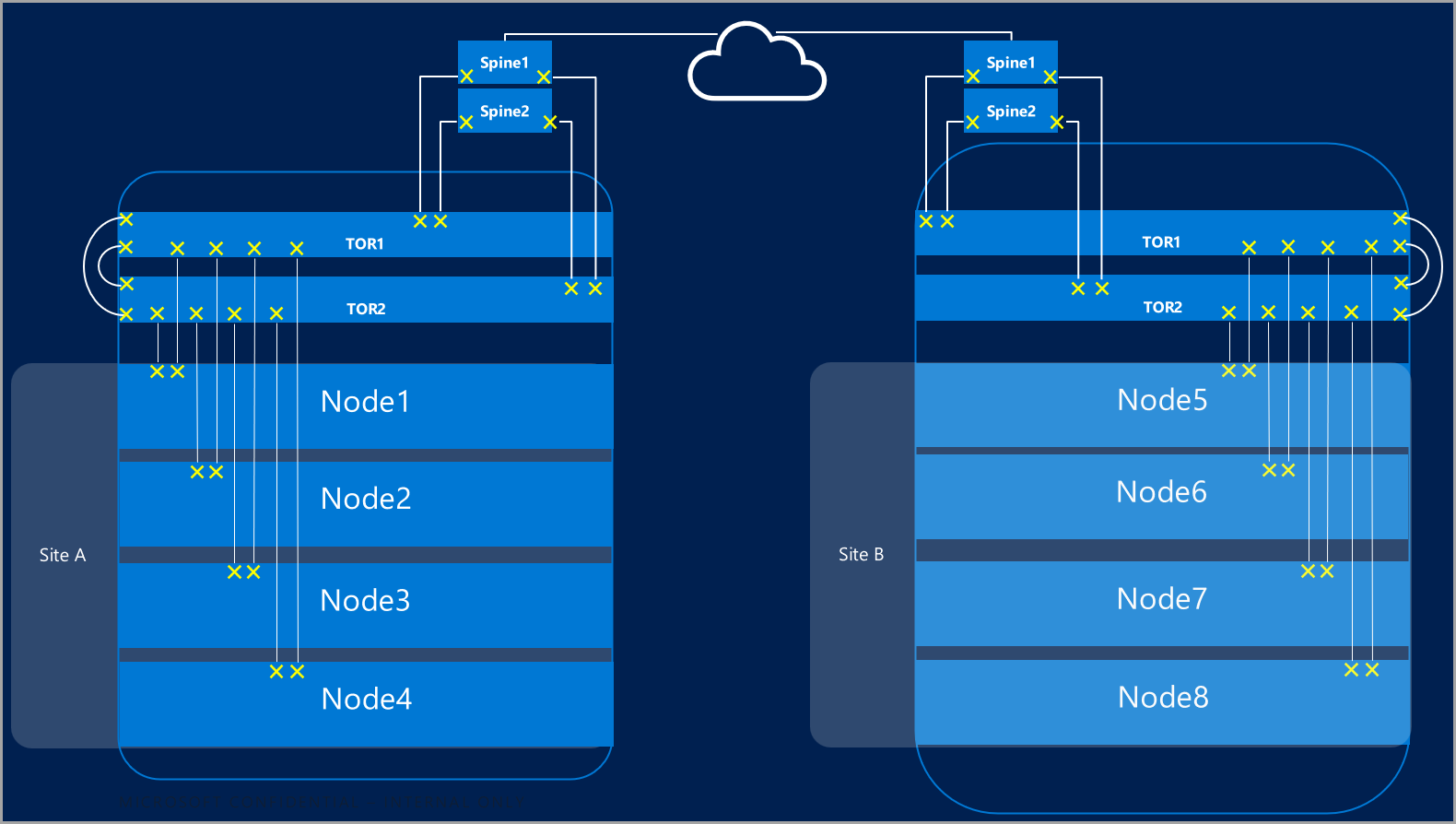

拉伸群集提供跨多个数据中心的灾难恢复。 最简单形式的拉伸 Azure Stack HCI 群集网络如下所示:

拉伸群集要求

重要

拉伸群集功能仅在 Azure Stack HCI 版本 22H2 中可用。

拉伸群集具有以下要求和特征:

RDMA 限制为单个站点,不支持在不同的站点或子网中使用。

同一站点中的服务器必须位于同一机架和第 2 层边界中。

站点之间的热通信必须跨第 3 层边界;不支持拉伸的第 2 层拓扑。

有足够的带宽来运行其他站点的工作负载。 发生故障转移时,备用站点需要运行所有流量。 建议为站点预配可用网络容量的 50%。 但是,如果能够容忍故障转移期间性能降低的问题,则这不是要求。

用于站点间通信的适配器:

可以是物理或虚拟适配器(主机 vNIC)。 如果是虚拟适配器,则必须在该适配器自身的子网和 VLAN 中,为每个物理 NIC 预配一个 vNIC。

必须位于其自身的、可以在站点之间路由的子网和 VLAN 中。

必须使用

Disable-NetAdapterRDMAcmdlet 禁用 RDMA。 建议通过Set-SRNetworkConstraintcmdlet 显式要求存储副本使用特定的接口。必须满足存储副本的任何其他要求。

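Next steps

- Learn about network switch and physical network requirements. See Physical network requirements.

- Learn how to simplify host networking using Network ATC. See Simplify host networking with Network ATC.

- Brush up on failover clustering networking basics.

- For deployment, see Create a cluster using Windows Admin Center.

- For deployment, see Create a cluster using Windows PowerShell.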