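The virtual machine (VM) sizes supported on Azure Stack Hub are a subset of those supported on Azure. Azure imposes resource limits along many dimensions to avoid overconsumption of resources (both server-local and service-level). Without some limits on tenant consumption, a tenant's experience suffers when other tenants overconsume resources. For network egress from a VM, Azure Stack Hub applies bandwidth caps that match the Azure limits. For storage resources on Azure Stack Hub, storage IOPS limits prevent tenants from overconsuming resources for storage access.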

Important

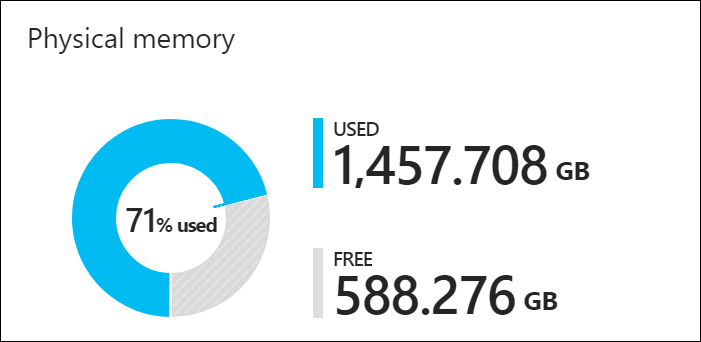

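The Azure Stack Hub Capacity Planner doesn't consider or guarantee IOPS performance. The administrator portal shows a warning alert when total system memory consumption reaches 85%. You can remediate this alert by adding more capacity or by removing virtual machines that are no longer required.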

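VM placement

The Azure Stack Hub placement engine places tenant VMs across the available hosts.

Azure Stack Hub weighs two considerations when placing VMs. First, is there enough memory on the host for that VM type? Second, are the VMs part of an availability set, or are they part of a virtual machine scale set?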

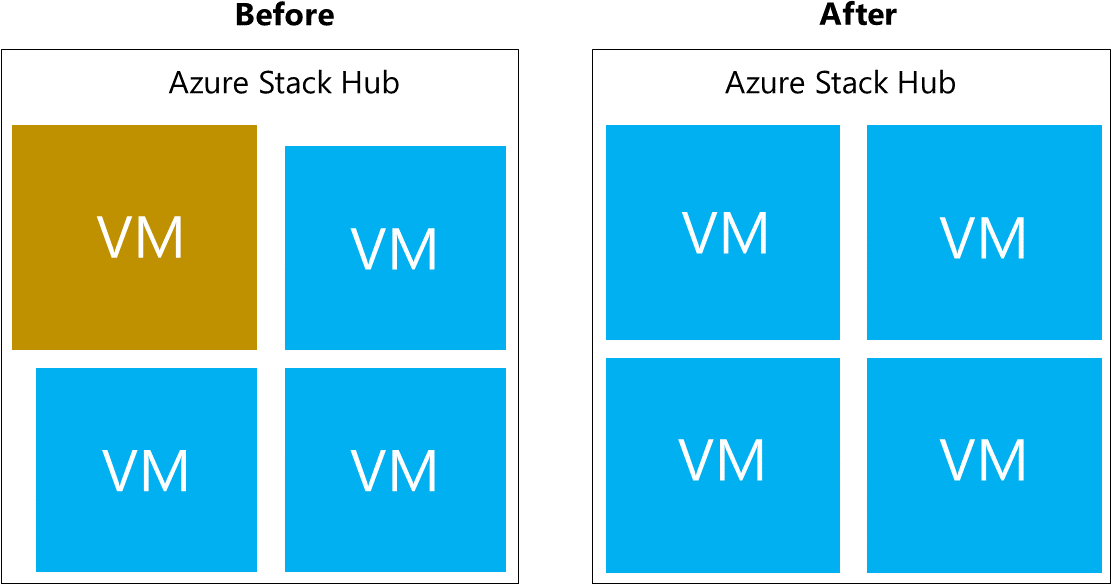

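To achieve high availability for a multi-VM production workload in Azure Stack Hub, virtual machines (VMs) are placed in an availability set that spreads them across multiple fault domains. A fault domain in an availability set is defined as a single node in the scale unit. To be consistent with Azure, Azure Stack Hub supports availability sets with a maximum of three fault domains. VMs placed in an availability set are physically isolated from each other by spreading them as evenly as possible over multiple fault domains (Azure Stack Hub nodes). If there's a hardware failure, VMs from the failed fault domain are restarted in other fault domains. If possible, they're kept in fault domains separate from the other VMs in the same availability set. When the host comes back online, VMs are rebalanced to maintain high availability.

Virtual machine scale sets use availability sets on the back end and make sure each scale set instance is placed in a different fault domain. This means they use separate Azure Stack Hub infrastructure nodes. For example, in a four-node Azure Stack Hub system, a scale set of three instances can fail at creation because there isn't enough capacity to place the three instances on three separate Azure Stack Hub nodes. In addition, Azure Stack Hub nodes can be filled to varying levels before placement is attempted.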
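The scale set failure described above can be illustrated with a small feasibility check. This is only a sketch under simplifying assumptions: it looks at free memory per node and nothing else, and the function name and the sample figures are hypothetical rather than part of any Azure Stack Hub API.

```python
def can_place_scale_set(free_memory_gb_per_node, instance_memory_gb, instance_count):
    """Return True if every instance can land on its own node.

    Assumption: a node is eligible when its free memory covers one instance,
    and each instance must use a different node (fault domain).
    """
    eligible_nodes = [
        free for free in free_memory_gb_per_node if free >= instance_memory_gb
    ]
    return len(eligible_nodes) >= instance_count


# Hypothetical four-node system filled to varying levels.
free_memory = [120, 40, 200, 16]

# Only two nodes can hold a 112 GB instance, so three instances cannot be spread.
print(can_place_scale_set(free_memory, 112, 3))  # False

# All four nodes can hold a 16 GB instance.
print(can_place_scale_set(free_memory, 16, 3))   # True
```

The point of the sketch is that total free memory is not the deciding factor: the first call fails even though 376 GB is free across the system.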

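Azure Stack Hub doesn't overcommit memory. However, overcommitting the number of physical cores is allowed.

Because the placement algorithm doesn't treat the existing virtual-to-physical core overprovisioning ratio as a factor, each host can have a different ratio. As with Azure, we don't provide guidance on the physical-to-virtual core ratio because workloads and service level requirements vary.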

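Considerations for the total number of VMs

There's a limit on the total number of VMs that can be created. The maximum number of VMs on Azure Stack Hub is 700, with at most 60 per scale unit node. For example, the VM limit for an eight-server Azure Stack Hub is 480 (8 * 60). For an Azure Stack Hub solution with 12 to 16 servers, the limit is 700 VMs. This limit was set with all compute capacity considerations in mind, such as the resiliency reserve and the CPU virtual-to-physical ratio that an operator wants to maintain on the stamp.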
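The two limits combine into a single rule: the effective cap is the smaller of 700 and 60 times the node count. The following sketch expresses that rule; the function and constant names are illustrative only.

```python
MAX_VMS_PER_STAMP = 700  # overall maximum stated above
MAX_VMS_PER_NODE = 60    # per scale unit node maximum stated above


def vm_limit(node_count):
    """Return the effective VM limit for a scale unit with node_count nodes."""
    return min(MAX_VMS_PER_STAMP, MAX_VMS_PER_NODE * node_count)


for nodes in (4, 8, 12, 16):
    print(nodes, vm_limit(nodes))
# 4 240
# 8 480
# 12 700
# 16 700
```

With 12 nodes the per-node rule alone would allow 720 VMs, so the overall cap of 700 takes over from that point on.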

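If the VM scale limit is reached, the following error codes are returned: VMsPerScaleUnitLimitExceeded, VMsPerScaleUnitNodeLimitExceeded.

Note

A portion of the 700 VM maximum is reserved for Azure Stack Hub infrastructure VMs. For more information, see the Azure Stack Hub Capacity Planner.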

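Considerations for batch deployment of VMs

In releases up to and including 2002, deploying 2 to 5 VMs per batch with a 5-minute gap between batches reliably reached a scale of 700 VMs. Starting with the 2005 release of Azure Stack Hub, VMs can be reliably provisioned in batches of 40 with a 5-minute gap between batch deployments. Start, stop-deallocate, and update operations should be performed in batches of 30, leaving 5 minutes between batches.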
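To get a feel for what these batch sizes mean in practice, the sketch below counts the batches and the minimum waiting time between them for a given number of VMs. It is an estimate under one assumption: only the 5-minute gaps are counted, not the time each batch itself takes. The function name is hypothetical.

```python
import math


def batch_plan(total_vms, batch_size=40, gap_minutes=5):
    """Return (batch count, minutes spent waiting between batches)."""
    batches = math.ceil(total_vms / batch_size)
    # There is one gap fewer than there are batches.
    wait_minutes = max(batches - 1, 0) * gap_minutes
    return batches, wait_minutes


print(batch_plan(700))                 # (18, 85)   deployment, 2005 and later
print(batch_plan(700, batch_size=5))   # (140, 695) deployment, 2002 and earlier
print(batch_plan(700, batch_size=30))  # (24, 115)  start, stop-deallocate, update
```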

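Considerations for GPU VMs

Azure Stack Hub reserves memory so that infrastructure and tenant VMs can fail over. Unlike other VMs, GPU VMs run in non-HA (high availability) mode and therefore don't fail over. As a result, the reserved memory for a stamp that hosts only GPU VMs is just what the infrastructure needs to fail over, without also accounting for HA tenant VM memory.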

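Azure Stack Hub memory

Azure Stack Hub is designed to keep successfully provisioned VMs running. For example, if a host goes offline because of a hardware failure, Azure Stack Hub attempts to restart its VMs on another host. A second example is patch and update of the Azure Stack Hub software. If a physical host needs to be rebooted, an attempt is made to move the VMs running on that host to another available host in the solution.

This VM management or movement is possible only if there's reserved memory capacity to allow the restart or migration. A portion of the total host memory is reserved and is therefore unavailable for tenant VM placement.

You can review a pie chart in the administrator portal that shows the free and used memory in Azure Stack Hub. The following diagram shows the physical memory capacity on an Azure Stack Hub scale unit: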

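Used memory is made up of several components. The following components consume the used section of the pie chart:

- Host OS usage or reserve: The memory used by the operating system (OS) on the host, virtual memory page tables, processes running on the host OS, and the Storage Spaces Direct memory cache. Because this value depends on the memory used by the different Hyper-V processes running on the host, it can fluctuate.

- Infrastructure services: The infrastructure VMs that make up Azure Stack Hub. As previously discussed, these VMs are part of the 700 VM maximum. The memory utilization of the infrastructure services component can change as we work on making infrastructure services more scalable and resilient. For more information, see the Azure Stack Hub Capacity Planner.

- Resiliency reserve: Azure Stack Hub reserves a portion of memory to maintain tenant availability during a single host failure, and to allow successful live migration of VMs during patch and update.

- Tenant VMs: The tenant VMs created by Azure Stack Hub users. In addition to running VMs, any VM that has landed on the fabric consumes memory. This means that VMs in the "Creating" or "Failed" state, and VMs shut down from within the guest, consume memory. However, VMs that have been deallocated using the stop-deallocate option in the portal, PowerShell, or CLI don't consume memory from Azure Stack Hub.

- Value-add resource providers (RPs): VMs deployed for value-add RPs such as SQL, MySQL, and App Service.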

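The best way to understand memory consumption on the portal is to use the Azure Stack Hub Capacity Planner to see the impact of various workloads. The following calculation is the same one used by the planner.

This calculation yields the total available memory that can be used for tenant VM placement. This memory capacity applies to the entire Azure Stack Hub scale unit.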

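Available memory for VM placement = Total host memory - Resiliency reserve - Memory used by running tenant VMs - Azure Stack Hub infrastructure overhead ¹

- Total host memory = Sum of memory from all nodes

- Resiliency reserve = H + R * ((N-1) * H) + V * (N-2)

- Memory used by tenant VMs = Actual memory consumed by the tenant workload; doesn't depend on HA configuration

- Azure Stack Hub infrastructure overhead = 268 GB + (4 GB x N)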

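Where:

- H = Size of a single server's memory

- N = Size of the scale unit (number of servers)

- R = The operating system reserve for OS overhead, which is .15 in this formula ²

- V = Largest HA VM in the scale unit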

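¹ Azure Stack Hub infrastructure overhead = 268 GB + (4 GB x number of nodes). Approximately 31 VMs host the Azure Stack Hub infrastructure and, in total, consume about 268 GB + (4 GB x number of nodes) of memory and 146 virtual cores. This number of VMs provides the service separation needed to meet security, scalability, servicing, and patching requirements. This internal service structure allows new infrastructure services to be introduced in the future as they're developed.

² Operating system reserve for overhead = 15% (.15) of node memory. The operating system reserve value is an estimate and varies with the physical memory capacity of the server and general operating system overhead.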

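The value V, the largest HA VM in the scale unit, is determined dynamically from the largest tenant VM memory size. For example, the largest HA VM value is a minimum of 12 GB (accounting for the infrastructure VMs), or 112 GB, or any other supported VM memory size in the Azure Stack Hub solution. Changing the largest HA VM on the Azure Stack Hub fabric increases the resiliency reserve in addition to increasing the memory of the VM itself. Remember that GPU VMs run in non-HA mode.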

Sample calculation

We have a small four-node Azure Stack Hub deployment with 768 GB of RAM on each node. We plan to place a VM for SQL Server with 128 GB of RAM (Standard_E16_v3). How much memory is available for VM placement?

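- Total host memory = Sum of memory from all nodes = 4 * 768 GB = 3072 GB

- Resiliency reserve = H + R * ((N-1) * H) + V * (N-2) = 768 + 0.15 * ((4 - 1) * 768) + 128 * (4 - 2) ≈ 1370 GB

- Memory used by tenant VMs = Actual memory consumed by the tenant workload; doesn't depend on HA configuration = 0 GB

- Azure Stack Hub infrastructure overhead = 268 GB + (4 GB x N) = 268 + (4 * 4) = 284 GB

Available memory for VM placement = Total host memory - Resiliency reserve - Memory used by running tenant VMs - Azure Stack Hub infrastructure overhead

Available memory for VM placement = 3072 - 1370 - 0 - 284 = 1418 GB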
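The calculation above is easy to script when you want to try other node counts or VM sizes. The following is a minimal sketch of the same formula; the function names are illustrative and are not part of the Capacity Planner or any Azure Stack Hub tool.

```python
def resiliency_reserve(h, n, v, r=0.15):
    """H + R * ((N-1) * H) + V * (N-2), all memory values in GB."""
    return h + r * ((n - 1) * h) + v * (n - 2)


def infrastructure_overhead(n):
    """268 GB + (4 GB x N)."""
    return 268 + 4 * n


def available_memory(h, n, v, tenant_used=0.0, r=0.15):
    """Memory left for tenant VM placement across the whole scale unit."""
    total_host_memory = h * n
    return (
        total_host_memory
        - resiliency_reserve(h, n, v, r)
        - tenant_used
        - infrastructure_overhead(n)
    )


# Four nodes, 768 GB each, largest HA VM of 128 GB, no tenant VMs yet.
print(round(resiliency_reserve(768, 4, 128), 1))  # 1369.6
print(infrastructure_overhead(4))                 # 284
print(round(available_memory(768, 4, 128), 1))    # 1418.4
```

The unrounded result is 1418.4 GB. The worked example rounds the reserve up to 1370 GB before subtracting, which is why it arrives at 1418 GB.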

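Considerations for deallocation

When a VM is in the deallocated state, it doesn't use memory resources. This allows other VMs to be placed in the system.

If the deallocated VM is started again, its memory usage or allocation is treated like that of a new VM placed into the system, and it consumes available memory. If there's no available memory, the VM won't start.

Currently deployed large VMs show 112 GB of allocated memory, but the memory demand of these VMs is only about 2-3 GB.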

| Name | Memory assigned (GB) | Memory demand (GB) | Computer name |
|---|---|---|---|
| ca7ec2ea-40fd-4d41-9d9b-b11e7838d508 | 112 | 2.2392578125 | LISSA01P-NODE01 |
| 10cd7b0f-68f4-40ee-9d98-b9637438ebf4 | 112 | 2.2392578125 | LISSA01P-NODE01 |
| 2e403868-ff81-4abb-b087-d9625ca01d84 | 112 | 2.2392578125 | LISSA01P-NODE04 |

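Based on the formula Resiliency reserve = H + R * ((N-1) * H) + V * (N-2), there are three ways to free up memory for VM placement:

- Reduce the size of the largest VM

- Increase the memory of a node

- Add a node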

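Reduce the size of the largest VM

Reducing the largest VM to the next-smallest VM size in the stamp (24 GB) reduces the size of the resiliency reserve.

Resiliency reserve = 384 + 172.8 + 48 = 604.8 GB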

| Total memory | Infra GB | Tenant GB | Resiliency reserve | Total memory reserved | Total GB available for placement |
|---|---|---|---|---|---|
| 1536 GB | 258 GB | 329.25 GB | 604.8 GB | 258 + 329.25 + 604.8 = 1192.05 GB | ~ 344 GB |

Add a node

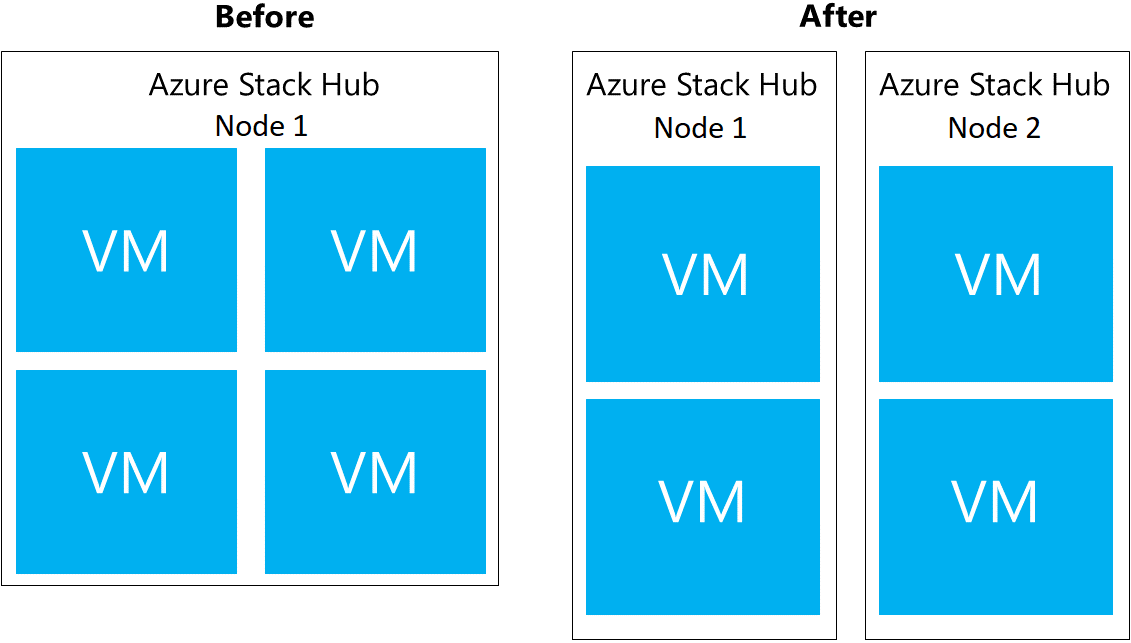

Adding an Azure Stack Hub node frees up memory by distributing memory evenly across the nodes.

Resiliency reserve = 384 + 0.15 * ((5 - 1) * 384) + 112 * (5 - 2) = 384 + 230.4 + 336 = 950.4 GB

| Total memory | Infra GB | Tenant GB | Resiliency reserve | Total memory reserved | Total GB available for placement |
|---|---|---|---|---|---|
| 1536 GB | 258 GB | 329.25 GB | 604.8 GB | 258 + 329.25 + 604.8 = 1192.05 GB | ~ 344 GB |

Increase memory on each node to 512 GB

Increasing the memory of each node increases the total available memory.

Resiliency reserve = 512 + 230.4 + 224 = 966.4 GB

| Total memory | Infra GB | Tenant GB | Resiliency reserve | Total memory reserved | Total GB available for placement |
|---|---|---|---|---|---|
| 2048 (4 * 512) GB | 258 GB | 505.75 GB | 966.4 GB | 1730.15 GB | ~ 318 GB |

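Frequently asked questions

Q: My tenant deployed a new VM. How long does it take for the capacity chart on the administrator portal to show the remaining capacity?

A: The capacity blade refreshes every 15 minutes, so take that into consideration.

Q: How can I see the available cores and the assigned cores?

A: In PowerShell, run test-azurestack -include AzsVmPlacement -debug, which generates output like this: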

Starting Test-AzureStack

Launching AzsVmPlacement

Azure Stack Scale Unit VM Placement Summary Results

Cluster Node VM Count VMs Running Physical Core Total Virtual Co Physical Memory Total Virtual Mem

------------ -------- ----------- ------------- ---------------- --------------- -----------------

LNV2-Node02 20 20 28 66 256 119.5

LNV2-Node03 17 16 28 62 256 110

LNV2-Node01 11 11 28 47 256 111

LNV2-Node04 10 10 28 49 256 101

PASS : Azure Stack Scale Unit VM Placement Summary

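Q: The number of deployed VMs on my Azure Stack Hub hasn't changed, but my capacity is fluctuating. Why?

A: The available memory for VM placement has multiple dependencies, one of which is the host OS reserve. This value depends on the memory used by the different Hyper-V processes running on the host, which isn't constant.

Q: What state do tenant VMs have to be in to consume memory?

A: In addition to running VMs, any VM that has landed on the fabric consumes memory. This means that VMs in a "Creating" or "Failed" state consume memory. VMs that were shut down from within the guest, rather than stop-deallocated from the portal, PowerShell, or CLI, also consume memory.

Q: I have a four-host Azure Stack Hub. My tenant has 3 VMs that consume 56 GB of RAM (D5_v2) each. One of the VMs was resized to 112 GB of RAM (D14_v2), and the available memory reporting on the dashboard showed a spike of 168 GB in usage on the capacity blade. Subsequently resizing the other two D5_v2 VMs to D14_v2 resulted in an increase of only 56 GB of RAM each. Why is this so?

A: Available memory is a function of the resiliency reserve maintained by Azure Stack Hub. The resiliency reserve is a function of the largest VM size on the Azure Stack Hub stamp. At first, the largest VM on the stamp had 56 GB of memory. When the VM was resized, the largest VM on the stamp became 112 GB, which increased not only the memory used by that tenant VM but also the resiliency reserve. This change resulted in an increase of 56 GB of tenant VM memory (from 56 GB to 112 GB) plus an increase of 112 GB in resiliency reserve memory. When the subsequent VMs were resized, the largest VM size remained 112 GB, so the resiliency reserve didn't increase. The increase in memory consumption was only the tenant VM memory increase (56 GB).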
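The numbers in this answer follow from the V * (N-2) term of the resiliency reserve formula. The sketch below reproduces them, assuming that V is the largest tenant VM on the stamp and that it is above the 12 GB infrastructure minimum; the function name is hypothetical.

```python
def consumption_increase(sizes_before, sizes_after, node_count):
    """Return the growth in used memory (GB) after tenant VMs are resized.

    Only the V * (N-2) term of the resiliency reserve depends on VM size,
    so the reserve grows by (new largest VM - old largest VM) * (N-2).
    """
    tenant_delta = sum(sizes_after) - sum(sizes_before)
    reserve_delta = (max(sizes_after) - max(sizes_before)) * (node_count - 2)
    return tenant_delta + reserve_delta


# First resize: the largest VM grows from 56 GB to 112 GB on a four-node stamp.
print(consumption_increase([56, 56, 56], [112, 56, 56], 4))       # 168

# Later resizes: the largest VM is already 112 GB, so only tenant memory grows.
print(consumption_increase([112, 56, 56], [112, 112, 56], 4))     # 56
print(consumption_increase([112, 112, 56], [112, 112, 112], 4))   # 56
```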

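Note

The capacity planning requirements for networking are minimal, because only the size of the public VIP pool is configurable. For information about how to add more public IP addresses to Azure Stack Hub, see Add public IP addresses.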