Problem

One of the following errors occurs when you use pip to install the pyodbc library.

java.lang.RuntimeException: Installation failed with message: Collecting pyodbc

"Library installation is failing due to missing dependencies. sasl and thrift_sasl are optional dependencies for SASL or Kerberos support"

Cause

Although sasl and thrift_sasl are optional dependencies for SASL or Kerberos support, they need to be present for pyodbc installation to succeed.
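
To see which of these Python packages are visible to the current interpreter before retrying the installation, a check along the following lines may help. This is a minimal sketch: the helper names `module_available` and `report` are hypothetical, and it assumes the packages import under the names `sasl`, `thrift_sasl`, and `pyodbc`.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if the named top-level module can be located by the import system."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        # find_spec can raise for unusual entries in sys.modules; treat as missing.
        return False


def report(names=("sasl", "thrift_sasl", "pyodbc")):
    """Map each module name to whether it is currently importable."""
    return {name: module_available(name) for name in names}


# Print one line per module, for example in a %python notebook cell.
for name, present in report().items():
    print(f"{name}: {'found' if present else 'missing'}")
```

A module reported as missing only means that the interpreter running the cell cannot find it; it does not by itself explain why an installation failed.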

Solution

Cluster-scoped init script method

You can put the required installation commands into a single init script and attach it to the cluster. This ensures that the dependent libraries for pyodbc are installed every time the cluster starts.

- Create the base directory to store the init script in, if it does not already exist. This example uses dbfs:/databricks/<directory>.

%python
dbutils.fs.mkdirs("dbfs:/databricks/<directory>/")

- Create the script and save it to a file.

%python
dbutils.fs.put("dbfs:/databricks/<directory>/tornado.sh","""#!/bin/bash
pip list | egrep 'thrift-sasl|sasl'
pip install --upgrade thrift
dpkg -l | egrep 'thrift_sasl|libsasl2-dev|gcc|python-dev'
sudo apt-get -y install unixodbc-dev libsasl2-dev gcc python-dev
""",True)

- Check that the script exists.

%python
display(dbutils.fs.ls("dbfs:/databricks/<directory>/tornado.sh"))

- On the cluster configuration page, click the Advanced Options toggle.

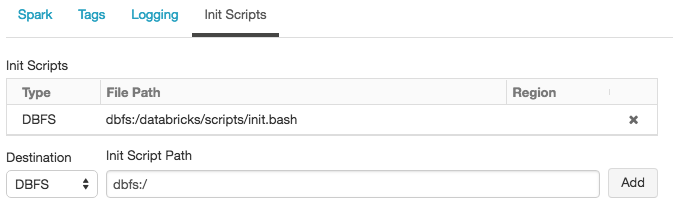

- At the bottom of the page, click the Init Scripts tab.

- In the Destination drop-down, select DBFS, provide the file path to the script, and click Add.

- Restart the cluster.

For more details about cluster-scoped init scripts, see Cluster-scoped init scripts (AWS | Azure | GCP).
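
The script text can also be assembled in Python before it is written to DBFS, which makes it easy to confirm that the shebang is the first line. The following is a sketch only: `build_init_script` and `APT_PACKAGES` are hypothetical names, and the final `dbutils.fs.put` call is left as a comment because `dbutils` is only available inside a Databricks notebook.

```python
# System packages taken from the init script in this article.
APT_PACKAGES = ["unixodbc-dev", "libsasl2-dev", "gcc", "python-dev"]


def build_init_script(apt_packages):
    """Return the init script as a single string, with the shebang on line 1."""
    lines = [
        "#!/bin/bash",
        "pip install --upgrade thrift",
        "sudo apt-get -y install " + " ".join(apt_packages),
    ]
    return "\n".join(lines) + "\n"


script = build_init_script(APT_PACKAGES)
print(script)

# In a Databricks notebook, the text could then be saved with:
# dbutils.fs.put("dbfs:/databricks/<directory>/tornado.sh", script, True)
```

Building the script from a list keeps the package names in one place, so the same list can be reused for checks elsewhere in a notebook.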

Notebook method

- In a notebook, check whether the sasl packages are installed, then upgrade thrift to the latest version.

%sh
pip list | egrep 'thrift-sasl|sasl'
pip install --upgrade thrift

- Ensure that dependent packages are installed.

%sh dpkg -l | egrep 'thrift_sasl|libsasl2-dev|gcc|python-dev'

- Install unixodbc before installing pyodbc.

%sh sudo apt-get -y install unixodbc-dev libsasl2-dev gcc python-dev
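
After the steps above, the system packages can be checked from a %python cell as well. This is a rough sketch, assuming a Debian or Ubuntu based image where `dpkg-query` is on the PATH; `is_installed` and `missing_packages` are hypothetical helper names.

```python
import shutil
import subprocess

# System packages installed in the step above.
REQUIRED = ["unixodbc-dev", "libsasl2-dev", "gcc", "python-dev"]


def is_installed(status: str) -> bool:
    """True when a dpkg status string such as 'install ok installed' ends in 'installed'."""
    parts = status.split()
    return bool(parts) and parts[-1] == "installed"


def missing_packages(packages):
    """Return the packages that dpkg-query does not report as installed."""
    if shutil.which("dpkg-query") is None:
        raise RuntimeError("dpkg-query not found; this check assumes a Debian/Ubuntu image")
    missing = []
    for name in packages:
        result = subprocess.run(
            ["dpkg-query", "-W", "-f=${Status}", name],
            capture_output=True,
            text=True,
        )
        # A non-zero exit code means dpkg does not know the package at all.
        if result.returncode != 0 or not is_installed(result.stdout):
            missing.append(name)
    return missing
```

Calling `missing_packages(REQUIRED)` returns an empty list when every package is installed. Once nothing is reported missing, `pip install pyodbc` can be retried.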