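Secrets

A secret is a key-value pair that stores secret material, with a key name that is unique within a secret scope. Each scope is limited to 1000 secrets. The maximum allowed secret value size is 128 KB.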
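The limits above can be checked on the client before a secret is written. The following is a minimal sketch, not part of any Databricks library: the function names are hypothetical, and it assumes that "128 KB" means 128 × 1024 bytes measured on the UTF-8 encoding of the value.

```python
# Limits taken from the text above. Interpreting 128 KB as 131,072 bytes
# of UTF-8 is an assumption of this sketch.
MAX_SECRET_BYTES = 128 * 1024
MAX_SECRETS_PER_SCOPE = 1000


def check_secret_value(value: str) -> int:
    """Return the UTF-8 size of `value`, or raise ValueError if it is too large."""
    size = len(value.encode("utf-8"))
    if size > MAX_SECRET_BYTES:
        raise ValueError(
            f"secret value is {size} bytes; the limit is {MAX_SECRET_BYTES} bytes"
        )
    return size


def scope_has_room(existing_secret_count: int) -> bool:
    """Return True if a scope holding this many secrets can accept one more."""
    return existing_secret_count < MAX_SECRETS_PER_SCOPE
```

Measuring the encoded bytes rather than `len(value)` matters for non-ASCII values, where one character can occupy several bytes.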

See also the Secrets API.

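Create a secret

Secret names are case-insensitive.

The method for creating a secret depends on whether you are using an Azure Key Vault-backed scope or a Databricks-backed scope.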

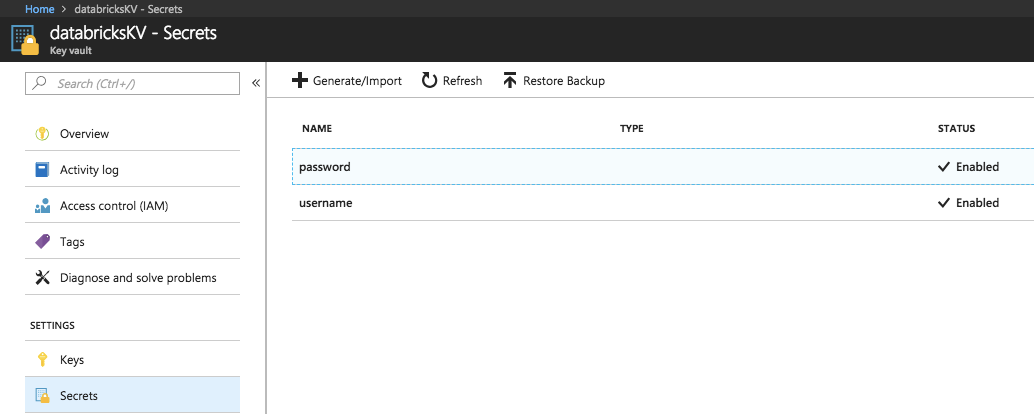

Create a secret in an Azure Key Vault-backed scope

To create a secret in Azure Key Vault, use the Azure Set Secret REST API or the Azure portal UI.

Create a secret in a Databricks-backed scope

To create a secret in a Databricks-backed scope using the Databricks CLI (version 0.205 and above):

databricks secrets put-secret --json '{
  "scope": "<scope-name>",
  "key": "<key-name>",
  "string_value": "<secret>"
}'
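When the secret value contains quotes or other special characters, hand-writing the JSON payload is error-prone. The following is a minimal sketch of building the same `put-secret` invocation from Python; the helper name and the sample scope, key, and value are hypothetical, and the sketch only constructs the command without running it.

```python
import json
import shlex


def build_put_secret_command(scope: str, key: str, value: str) -> list[str]:
    """Build the argument list for `databricks secrets put-secret --json ...`."""
    # json.dumps escapes quotes, backslashes, and newlines in the value.
    payload = json.dumps({"scope": scope, "key": key, "string_value": value})
    return ["databricks", "secrets", "put-secret", "--json", payload]


# Hypothetical scope, key, and value, for illustration only.
cmd = build_put_secret_command("my-scope", "db-password", 'pa"ss\'word')
# shlex.join shows how the command would need to be quoted in a POSIX shell.
print(shlex.join(cmd))
```

Passing the list to `subprocess.run(cmd, check=True)` would avoid shell quoting entirely, because no shell is involved.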

If you are creating a multi-line secret, you can pass the secret using standard input. For example:

(cat << EOF
this
is
a
multi
line
secret
EOF
) | databricks secrets put-secret <secret_scope> <secret_key>

You can also provide a secret from a file. For more information about writing secrets, see the article What is the Databricks CLI?

List secrets

To list the secrets in a given scope:

databricks secrets list-secrets <scope-name>

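The response displays metadata about the secrets, such as each secret's key name. You can also list this metadata from a notebook or job with the Secrets utility (dbutils.secrets). For example: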

dbutils.secrets.list('my-scope')

Read a secret

You create secrets using the REST API or the CLI, but you must use the Secrets utility (dbutils.secrets) in a notebook or job to read a secret.
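A minimal sketch of reading a secret in a notebook follows. It relies on `dbutils.secrets.list` and `dbutils.secrets.get`; the wrapper function and the scope and key names in the usage comment are hypothetical. The wrapper takes the utility as a parameter so the logic can be exercised outside a notebook, and it compares key names case-insensitively because secret names are case-insensitive.

```python
def read_secret(secrets, scope: str, key: str) -> str:
    """Return the value of `key` in `scope`, or raise KeyError if it is not listed.

    `secrets` is expected to be `dbutils.secrets` when run in a notebook or job.
    """
    # list() returns metadata objects that expose the key name as `.key`.
    available = {meta.key.lower() for meta in secrets.list(scope)}
    if key.lower() not in available:
        raise KeyError(f"no secret named {key!r} in scope {scope!r}")
    return secrets.get(scope=scope, key=key)


# In a Databricks notebook (hypothetical scope and key names):
# password = read_secret(dbutils.secrets, "my-scope", "my-key")
```

Checking the listing first gives a clearer error message than a failed `get`, at the cost of one extra call.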

Delete a secret

To delete a secret from a scope with the Databricks CLI:

databricks secrets delete-secret <scope-name> <key-name>

You can also use the Secrets API.

To delete a secret from a scope backed by Azure Key Vault, use the Azure Key Vault Delete Secret REST API or the Azure portal UI.

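Use a secret in a Spark configuration property or environment variable

Important

This feature is in Public Preview.

Note

Available in Databricks Runtime 6.4 Extended Support and above.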

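You can reference a secret in a Spark configuration property or environment variable. Retrieved secrets are redacted from notebook output and from Spark driver and executor logs.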

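Important

Keep the following security implications in mind when referencing secrets in a Spark configuration property or environment variable: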

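- If table access control is not enabled on a cluster, any user with CAN ATTACH TO permission on the cluster or CAN RUN permission on a notebook can read Spark configuration properties from within the notebook. This includes users who do not have direct permission to read a secret. Databricks recommends enabling table access control on all clusters or managing access to secrets using secret scopes.

- Even when table access control is enabled, users with CAN ATTACH TO permission on a cluster or CAN RUN permission on a notebook can read cluster environment variables from within the notebook. Databricks does not recommend storing secrets in cluster environment variables if they must not be available to all users on the cluster.

- Secrets are not redacted from the Spark driver log stdout and stderr streams. To protect sensitive data, by default Spark driver logs are viewable only by users with CAN MANAGE permission on job, single user access mode, and shared access mode clusters. To allow users with CAN ATTACH TO or CAN RESTART permission to view the logs on these clusters, set the following Spark configuration property in the cluster configuration: spark.databricks.acl.needAdminPermissionToViewLogs false. On No Isolation Shared access mode clusters, the Spark driver logs can be viewed by users with CAN ATTACH TO or CAN MANAGE permission. To limit who can read the logs to only users with CAN MANAGE permission, set spark.databricks.acl.needAdminPermissionToViewLogs to true.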

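Requirements and limitations

The following requirements and limitations apply to referencing secrets in Spark configuration properties and environment variables: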

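- Cluster owners must have CAN READ permission on the secret scope.

- Only cluster owners can add a reference to a secret in a Spark configuration property or environment variable, or edit the existing scope and name. Owners change a secret using the Secrets API. You must restart the cluster to fetch the secret again.

- Users with CAN MANAGE permission on the cluster can delete secret Spark configuration properties and environment variables.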

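Syntax for referencing secrets in a Spark configuration property or environment variable

You can refer to a secret using any valid variable name or Spark configuration property. Azure Databricks enables special behavior for variables that reference secrets based on the syntax of the value being set, not on the variable name.

The value of the Spark configuration property or environment variable must have the form {{secrets/<scope-name>/<secret-name>}}. The value must start with {{secrets/ and end with }}.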

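The variable portions of the Spark configuration property or environment variable are:

- <scope-name>: The name of the scope that the secret is associated with.

- <secret-name>: The unique name of the secret in the scope.

For example, {{secrets/scope1/key1}}.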

Note

- There should be no spaces between the curly brackets. If there are spaces, they are treated as part of the scope or secret name.
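The syntax rules above can be illustrated with a small parser. This is a sketch for checking configuration values on the client, not the logic Azure Databricks itself uses; the names `SecretRef` and `parse_secret_ref` are hypothetical, and the sketch assumes that scope and secret names contain no `/` or curly brackets.

```python
import re
from typing import NamedTuple, Optional

# The value must start with "{{secrets/" and end with "}}".
# Assumption: neither name contains "/" or curly brackets.
_SECRET_REF = re.compile(r"\{\{secrets/([^/{}]+)/([^/{}]+)\}\}")


class SecretRef(NamedTuple):
    scope: str
    name: str


def parse_secret_ref(value: str) -> Optional[SecretRef]:
    """Return the scope and secret name if `value` is a secret reference, else None."""
    match = _SECRET_REF.fullmatch(value)
    if match is None:
        return None
    # Spaces are deliberately not stripped: they count as part of the names.
    return SecretRef(scope=match.group(1), name=match.group(2))
```

For example, `parse_secret_ref("{{secrets/scope1/key1}}")` yields scope `scope1` and name `key1`, while `"{{secrets/scope1/key1 }}"` yields the name `key1 ` with a trailing space, which mirrors the note about spaces.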

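Reference a secret with a Spark configuration property

Specify a reference to a secret in a Spark configuration property in the following format: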

spark.<property-name> {{secrets/<scope-name>/<secret-name>}}

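Any Spark configuration <property-name> can reference a secret. Each Spark configuration property can reference only one secret, but you can configure multiple Spark properties to reference secrets.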

Example

Set a Spark configuration to reference a secret:

spark.password {{secrets/scope1/key1}}

To fetch the secret in a notebook and use it, run the following:

Python

spark.conf.get("spark.password")

SQL

SELECT ${spark.password};

Reference a secret in an environment variable

Specify a secret path in an environment variable in the following format:

<variable-name>={{secrets/<scope-name>/<secret-name>}}

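You can use any valid variable name when you reference a secret. Access to secrets referenced in environment variables is determined by the permissions of the user who configured the cluster. Secrets stored in environment variables are accessible to all users of the cluster, but they are redacted from plaintext display, like secrets referenced elsewhere.

Environment variables that reference secrets are accessible from a cluster-scoped init script. See Set and use environment variables with init scripts.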

Example

Set an environment variable to reference a secret:

SPARKPASSWORD={{secrets/scope1/key1}}

To fetch the secret in an init script, access $SPARKPASSWORD using the following pattern:

if [ -n "$SPARKPASSWORD" ]; then
  # code to use ${SPARKPASSWORD}
fi
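The same guard can be written in Python for code that reads the environment variable on the cluster. This is a minimal sketch; the helper name is hypothetical, and `SPARKPASSWORD` is the variable name from the example above.

```python
import os


def require_env_secret(name: str = "SPARKPASSWORD") -> str:
    """Return the value of the environment variable, or raise if it is unset or empty."""
    value = os.environ.get(name)
    if not value:
        # Mirrors the `[ -n "$SPARKPASSWORD" ]` test in the shell pattern.
        raise RuntimeError(f"environment variable {name} is not set")
    return value


# Usage: password = require_env_secret()
# Avoid printing or logging the returned value.
```

Failing fast with an explicit error is a design choice here; a caller that can proceed without the secret could catch `RuntimeError` instead.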

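Manage secret permissions

This section describes how to manage secret access control using the Databricks CLI (version 0.205 and above). You can also use the Secrets API or the Databricks Terraform provider. For secret permission levels, see Secret ACLs.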

Create a secret ACL

To create a secret ACL for a given secret scope using the Databricks CLI:

databricks secrets put-acl <scope-name> <principal> <permission>

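Making a put request for a principal that already has an applied permission overwrites the existing permission level.

The principal field specifies an existing Azure Databricks principal. A user is specified by email address, a service principal by its applicationId value, and a group by its group name.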
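As a sketch of how the `put-acl` call might be scripted, the helper below validates the permission level before building the command. The function name and the sample scope and principal are hypothetical, and the permission levels READ, WRITE, and MANAGE come from the Secret ACLs documentation rather than from this section, so treat them as an assumption here. The command is only constructed, not run.

```python
# Assumption: the secret ACL permission levels are READ, WRITE, and MANAGE.
VALID_PERMISSIONS = ("READ", "WRITE", "MANAGE")


def build_put_acl_command(scope: str, principal: str, permission: str) -> list[str]:
    """Build the argument list for `databricks secrets put-acl`."""
    level = permission.upper()
    if level not in VALID_PERMISSIONS:
        raise ValueError(
            f"unknown permission {permission!r}; expected one of {VALID_PERMISSIONS}"
        )
    # Positional order follows the command shown above:
    # <scope-name> <principal> <permission>
    return ["databricks", "secrets", "put-acl", scope, principal, level]


# Hypothetical scope and principal, for illustration only.
cmd = build_put_acl_command("my-scope", "someone@example.com", "read")
```

Because a put request overwrites any existing permission level for the principal, running the resulting command twice with different levels leaves only the last one in effect.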

View secret ACLs

To view all secret ACLs for a given secret scope:

databricks secrets list-acls <scope-name>

To get the secret ACL applied to a principal for a given secret scope:

databricks secrets get-acl <scope-name> <principal>

If no ACL exists for the given principal and scope, this request fails.

Delete a secret ACL

To delete a secret ACL applied to a principal for a given secret scope:

databricks secrets delete-acl <scope-name> <principal>