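In this article, you learn how to use an Automated ML (AutoML) Open Neural Network Exchange (ONNX) model to make predictions in a C# console application with ML.NET.

ML.NET is an open-source, cross-platform machine learning framework for the .NET ecosystem. It lets you train and consume custom machine learning models using a code-first approach in C# or F#, or through low-code tooling such as Model Builder and the ML.NET CLI. The framework is extensible, so you can also use other popular machine learning frameworks such as TensorFlow and ONNX.

ONNX is an open-source format for AI models that supports interoperability between frameworks. You can train a model in one of the many popular machine learning frameworks, such as PyTorch, convert it to ONNX format, and consume the ONNX model in a different framework such as ML.NET. For more information, see the ONNX website.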

Prerequisites

- .NET 6 SDK or later

- A text editor or IDE (such as Visual Studio or Visual Studio Code)

- An ONNX model. To learn how to train an AutoML ONNX model, see the bank marketing classification notebook.

- Netron (optional)

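Create a C# console application

This sample uses the .NET CLI to build the application, but you can do the same tasks in Visual Studio. To learn more, see the .NET CLI documentation.

Open a terminal and create a new C# .NET console application. In this example, the application is named AutoMLONNXConsoleApp. A directory with the same name is created to hold the application's contents.

dotnet new console -o AutoMLONNXConsoleApp

In the terminal, navigate to the AutoMLONNXConsoleApp directory.

cd AutoMLONNXConsoleApp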

Add software packages

Use the .NET CLI to install the Microsoft.ML, Microsoft.ML.OnnxRuntime, and Microsoft.ML.OnnxTransformer NuGet packages.

dotnet add package Microsoft.ML
dotnet add package Microsoft.ML.OnnxRuntime
dotnet add package Microsoft.ML.OnnxTransformer

These packages contain the dependencies required to use an ONNX model in a .NET application. ML.NET provides an API that uses the ONNX runtime for predictions.

Open the Program.cs file and add the following using directives at the top:

using System;
using System.Linq;
using Microsoft.ML;
using Microsoft.ML.Data;
using Microsoft.ML.Transforms.Onnx;

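Add a reference to the ONNX model

One way for the console application to access the ONNX model is to add it to the build output directory. If you don't already have a model, follow this notebook to create an example model.

Add a reference to your ONNX model file in your application:

Copy your ONNX model to your application's AutoMLONNXConsoleApp root directory.

Open the AutoMLONNXConsoleApp.csproj file and add the following content inside the Project node.

<ItemGroup>
    <None Include="automl-model.onnx">
        <CopyToOutputDirectory>PreserveNewest</CopyToOutputDirectory>
    </None>
</ItemGroup>

In this case, the ONNX model file is named automl-model.onnx.

(To learn more about MSBuild common items, see the MSBuild guide.)

Open the Program.cs file and add the following line inside the Program class.

static string ONNX_MODEL_PATH = "automl-model.onnx";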
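The relative path above resolves against the process's working directory, which is not always the build output directory that the project file copies the model to. The following is a minimal sketch, assuming you want a path that doesn't depend on the working directory and an early, readable message when the file is missing. The variable names are illustrative and not part of the original sample.

```csharp
using System;
using System.IO;

// Hypothetical names for this sketch: build an absolute path to the model
// from the directory that contains the compiled application, which is where
// the CopyToOutputDirectory setting places automl-model.onnx.
string modelFileName = "automl-model.onnx";
string modelPath = Path.Combine(AppContext.BaseDirectory, modelFileName);

// Report a missing model up front instead of failing later inside the pipeline.
if (File.Exists(modelPath))
{
    Console.WriteLine($"Found ONNX model at {modelPath}");
}
else
{
    Console.WriteLine($"ONNX model not found at {modelPath}; check the CopyToOutputDirectory setting.");
}
```

If you adopt this approach, you could pass the resulting absolute path to the pipeline in place of the relative ONNX_MODEL_PATH value.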

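Initialize MLContext

Inside the Main method of your Program class, create a new instance of MLContext.

MLContext mlContext = new MLContext();

The MLContext class is the starting point for all ML.NET operations, and initializing mlContext creates a new ML.NET environment that can be shared across the model lifecycle. Conceptually, it's similar to DbContext in Entity Framework.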

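Define the model data schema

A model expects its input and output data in a specific format. ML.NET lets you define the format of your data through classes. Sometimes you already know what that format looks like. When you don't, you can use a tool such as Netron to inspect the ONNX model.

The model used in this sample uses data from the NYC TLC Taxi Trip dataset. The following table shows a sample of the data: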

| vendor_id | rate_code | passenger_count | trip_time_in_secs | trip_distance | payment_type | fare_amount |
|---|---|---|---|---|---|---|
| VTS | 1 | 1 | 1140 | 3.75 | CRD | 15.5 |
| VTS | 1 | 1 | 480 | 2.72 | CRD | 10.0 |
| VTS | 1 | 1 | 1680 | 7.8 | CSH | 26.5 |

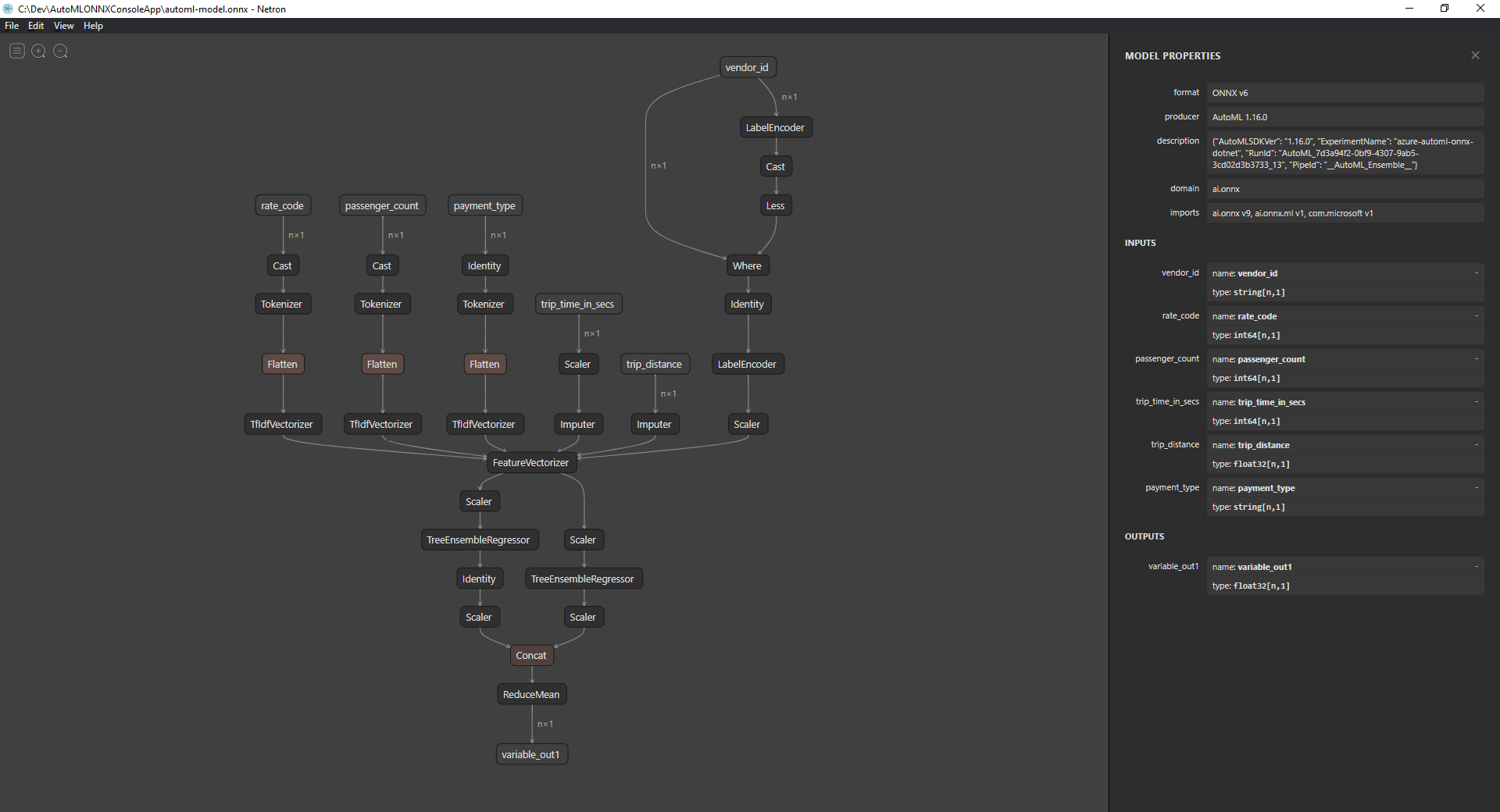

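Inspect the ONNX model (optional)

Use a tool such as Netron to inspect your model's inputs and outputs.

Open Netron.

In the top menu bar, select File > Open and use the file browser to select your model.

This opens your model. For example, the structure of the automl-model.onnx model is displayed as a graph.

Select the last node at the bottom of the graph (variable_out1 in this case) to display the model's metadata. The inputs and outputs on the sidebar show the model's expected inputs, outputs, and data types. Use this information to define your model's input and output schema.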

Define the model input schema

Create a new class called OnnxInput with the following properties inside the Program.cs file.

public class OnnxInput
{
    [ColumnName("vendor_id")]
    public string VendorId { get; set; }

    [ColumnName("rate_code"), OnnxMapType(typeof(Int64), typeof(Single))]
    public Int64 RateCode { get; set; }

    [ColumnName("passenger_count"), OnnxMapType(typeof(Int64), typeof(Single))]
    public Int64 PassengerCount { get; set; }

    [ColumnName("trip_time_in_secs"), OnnxMapType(typeof(Int64), typeof(Single))]
    public Int64 TripTimeInSecs { get; set; }

    [ColumnName("trip_distance")]
    public float TripDistance { get; set; }

    [ColumnName("payment_type")]
    public string PaymentType { get; set; }
}

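Each property maps to a column in the dataset. The properties are further annotated with attributes.

The ColumnName attribute lets you specify how ML.NET should reference the column when operating on the data. For example, although the TripDistance property follows standard .NET naming conventions, the model only knows of a column or feature named trip_distance. To address this naming discrepancy, the ColumnName attribute maps the TripDistance property to the column or feature named trip_distance.

For numerical values, ML.NET operates only on Single value types. However, the original data type of some of the columns is integer. The OnnxMapType attribute maps types between ONNX and ML.NET.

To learn more about data attributes, see the ML.NET load data guide.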
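To see the effect of the ColumnName attribute, you can load a row into an IDataView and list the resulting schema. The following is a minimal sketch, assuming the Microsoft.ML package is installed. TripRow is a hypothetical one-column class used only for this illustration, not part of the sample application.

```csharp
using System;
using Microsoft.ML;
using Microsoft.ML.Data;

var mlContext = new MLContext();

// Load a single in-memory row so that ML.NET builds a schema from the class.
var rows = new[] { new TripRow { TripDistance = 3.75f } };
IDataView dataView = mlContext.Data.LoadFromEnumerable(rows);

// Each schema column carries the name given in ColumnName,
// not the name of the C# property.
foreach (var column in dataView.Schema)
{
    Console.WriteLine($"{column.Name}: {column.Type}");
}

// Hypothetical class for this sketch only.
public class TripRow
{
    [ColumnName("trip_distance")]
    public float TripDistance { get; set; }
}
```

The listed column name should be trip_distance rather than TripDistance, which is the name the ONNX model expects.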

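Define the model output schema

After the model processes the data, it produces output in a specific format. Define your data output schema. Create a new class called OnnxOutput with the following properties inside the Program.cs file.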

public class OnnxOutput
{
    [ColumnName("variable_out1")]
    public float[] PredictedFare { get; set; }
}

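Similar to OnnxInput, use the ColumnName attribute to map the variable_out1 output to a more descriptive name, PredictedFare.

Define the prediction pipeline

A pipeline in ML.NET is typically a series of chained transformations that operate on the input data to produce an output. To learn more about data transformations, see the ML.NET data transformation guide.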

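Create a new method called GetPredictionPipeline inside the Program class.

static ITransformer GetPredictionPipeline(MLContext mlContext)
{

}

Define the names of the input and output columns. Add the following code inside the GetPredictionPipeline method.

var inputColumns = new string[]
{
    "vendor_id", "rate_code", "passenger_count", "trip_time_in_secs", "trip_distance", "payment_type"
};

var outputColumns = new string[] { "variable_out1" };

Define your pipeline. An IEstimator provides a blueprint of the operations and of the input and output schemas of your pipeline.

var onnxPredictionPipeline =
    mlContext
        .Transforms
        .ApplyOnnxModel(
            outputColumnNames: outputColumns,
            inputColumnNames: inputColumns,
            ONNX_MODEL_PATH);

In this case, ApplyOnnxModel is the only transform in the pipeline. It takes the names of the input and output columns as well as the path to the ONNX model file.

An IEstimator only defines the set of operations to apply to your data. What operates on your data is known as an ITransformer. Use the Fit method to create one from your onnxPredictionPipeline.

var emptyDv = mlContext.Data.LoadFromEnumerable(new OnnxInput[] {});

return onnxPredictionPipeline.Fit(emptyDv);

The Fit method expects an IDataView as input to perform the operations on. An IDataView is a way to represent data in ML.NET using a tabular format. Because the pipeline in this case is only used for predictions, you can provide an empty IDataView to give the ITransformer the necessary input and output schema information. The fitted ITransformer is then returned for further use in your application.

Tip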

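In this sample, the pipeline is defined and used within the same application. However, it's recommended that you use separate applications to define the pipeline and to use it for predictions. In ML.NET, your pipelines can be serialized and saved for further use in other .NET end-user applications. ML.NET supports various deployment targets, such as desktop applications, web services, WebAssembly applications, and more. To learn more about saving pipelines, see the ML.NET save and load trained models guide.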
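The save-and-load round trip mentioned in the tip can be sketched as follows. This is an illustration under stated assumptions, not the sample's own code: a simple CopyColumns transform stands in for ApplyOnnxModel so the sketch runs without an ONNX file, and SampleRow and the prediction-pipeline.zip file name are hypothetical.

```csharp
using System;
using Microsoft.ML;
using Microsoft.ML.Data;

var mlContext = new MLContext();

// Stand-in pipeline (an assumption for this sketch): a column copy replaces
// ApplyOnnxModel so that the example does not need a model file.
var emptyData = mlContext.Data.LoadFromEnumerable(new SampleRow[] { });
ITransformer fitted = mlContext.Transforms
    .CopyColumns(outputColumnName: "trip_distance_copy", inputColumnName: "trip_distance")
    .Fit(emptyData);

// Serialize the fitted transformer together with its input schema.
string pipelinePath = "prediction-pipeline.zip"; // hypothetical file name
mlContext.Model.Save(fitted, emptyData.Schema, pipelinePath);

// A separate application could later restore the pipeline like this.
ITransformer restored = mlContext.Model.Load(pipelinePath, out DataViewSchema inputSchema);
Console.WriteLine($"Restored pipeline expects {inputSchema.Count} input column(s).");

// Hypothetical input class for this sketch only.
public class SampleRow
{
    [ColumnName("trip_distance")]
    public float TripDistance { get; set; }
}
```

In an application built on this article's pipeline, the transformer returned by GetPredictionPipeline would take the place of the stand-in.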

Inside the Main method, call the GetPredictionPipeline method with the required parameters.

var onnxPredictionPipeline = GetPredictionPipeline(mlContext);

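Use the model to make predictions

Now that you have a pipeline, you can use it to make predictions. ML.NET provides a convenience API called PredictionEngine for making predictions on a single data instance.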

Inside the Main method, create a PredictionEngine by using the CreatePredictionEngine method.

var onnxPredictionEngine = mlContext.Model.CreatePredictionEngine<OnnxInput, OnnxOutput>(onnxPredictionPipeline);

Create a test data input.

var testInput = new OnnxInput
{
    VendorId = "CMT",
    RateCode = 1,
    PassengerCount = 1,
    TripTimeInSecs = 1271,
    TripDistance = 3.8f,
    PaymentType = "CRD"
};

Use the Predict method of onnxPredictionEngine to make a prediction based on the new testInput data.

var prediction = onnxPredictionEngine.Predict(testInput);

Output the result of your prediction to the console.

Console.WriteLine($"Predicted Fare: {prediction.PredictedFare.First()}");

Use the .NET CLI to run your application.

dotnet run

The result should look similar to the following output:

Predicted Fare: 15.621523

To learn more about making predictions in ML.NET, see "Use a model to make predictions".