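A VPN gateway connection lets you establish secure, cross-premises connectivity between your virtual network in Azure and your on-premises IT infrastructure.

This article shows how to validate network throughput from on-premises resources to an Azure virtual machine (VM).

Note

This article is intended to help diagnose and fix common issues. If you can't solve the issue by using the following information, contact support.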

概述

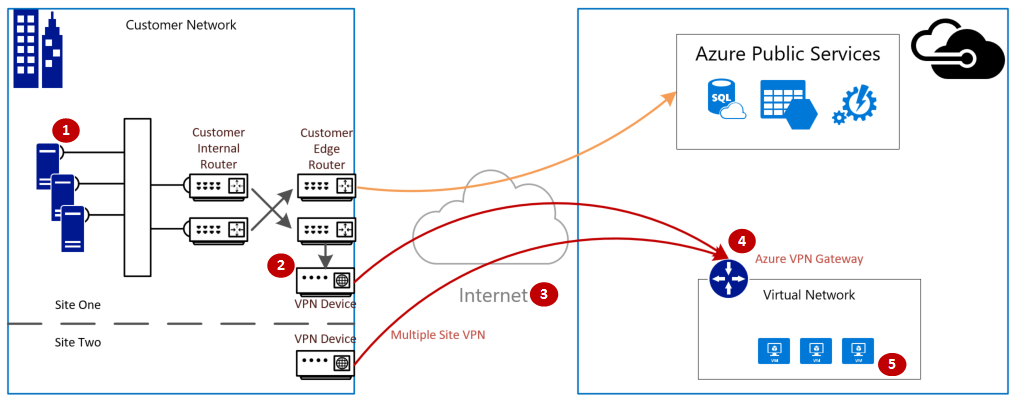

VPN 网关连接涉及以下组件:

- 本地 VPN 设备(请查看已验证 VPN 设备的列表。)

- 公共 Internet

- Azure VPN 网关

- Azure VM

下图显示的是通过 VPN 建立的从本地网络至 Azure 虚拟网络的逻辑连接。

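Calculate the maximum expected ingress/egress

- Determine your application's baseline throughput requirements.

- Determine the throughput limits of your Azure VPN gateway. For help, see the "Gateway SKUs" section of About VPN Gateway.

- Determine the Azure VM throughput guidance for your VM size.

- Determine your internet service provider (ISP) bandwidth.

- Calculate your expected throughput by taking the lowest bandwidth of the VM, the VPN gateway, or the ISP. This bandwidth is measured in megabits per second (Mbps); divide it by eight (8) to get megabytes per second (MB/s).

If your calculated throughput doesn't meet your application's baseline throughput requirements, you must increase the bandwidth of the resource that you identified as the bottleneck. To resize an Azure VPN gateway, see Changing a gateway SKU. To resize a virtual machine, see Resize a VM. If the internet bandwidth is lower than expected, you can also contact your ISP.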
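The calculation above can be sketched in a few lines of Python. This is a minimal illustration only: the function name and the bandwidth figures are hypothetical and aren't taken from any gateway SKU or VM size table.

```python
def expected_throughput(limits_mbps):
    """Return (bottleneck name, expected throughput in MB/s).

    limits_mbps maps a component name to its bandwidth in megabits
    per second (Mbps). The lowest value is the bottleneck, and
    dividing it by 8 converts megabits to megabytes.
    """
    bottleneck = min(limits_mbps, key=limits_mbps.get)
    return bottleneck, limits_mbps[bottleneck] / 8


# Hypothetical example figures, for illustration only.
limits = {"vm": 1000, "vpn_gateway": 650, "isp": 500}
name, mbytes_per_sec = expected_throughput(limits)
print(f"Bottleneck: {name}, expected throughput: {mbytes_per_sec} MB/s")
```

If the result is below the application's baseline requirement, the component reported as the bottleneck is the one to resize first.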

Note

VPN gateway throughput is an aggregate of all Site-to-Site, VNet-to-VNet, and Point-to-Site connections.

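Validate network throughput by using performance tools

Perform this validation during non-peak hours, because VPN tunnel throughput saturation during testing doesn't give accurate results.

This test uses the iPerf tool, which works on both Windows and Linux and has both client and server modes. It is limited to 3 Gbps for Windows VMs.

This tool doesn't perform any read/write operations to disk. It only produces self-generated TCP traffic from one end to the other. The statistics it generates are based on experiments that measure the bandwidth available between the client and server nodes. When testing between two nodes, one node acts as the server and the other as the client. After this test is completed, we recommend that you reverse the roles of the nodes to test both upload and download throughput on each of them.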

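Download iPerf

Note

The third-party products that this article discusses are manufactured by companies that are independent of Microsoft. Microsoft makes no warranty, implied or otherwise, about the performance or reliability of these products.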

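Run iPerf (iperf3.exe)

Enable an NSG/ACL rule that allows the traffic (for public IP address testing on an Azure VM).

On both nodes, enable a firewall exception for port 5001.

Windows: Run the following command as an administrator:

netsh advfirewall firewall add rule name="Open Port 5001" dir=in action=allow protocol=TCP localport=5001

To remove the rule when testing is complete, run this command:

netsh advfirewall firewall delete rule name="Open Port 5001" protocol=TCP localport=5001

Azure Linux: Azure Linux images have permissive firewalls. If an application is listening on a port, the traffic is allowed through. Custom images that are secured may need ports opened explicitly. Common Linux OS-layer firewalls include iptables, ufw, and firewalld.

On the server node, change to the directory where iperf3.exe is extracted. Then run iPerf in server mode and set it to listen on port 5001, as in the following commands:

cd c:\iperf-3.1.2-win64

iperf3.exe -s -p 5001

Note

You can customize port 5001 to account for particular firewall restrictions in your environment.

On the client node, change to the directory where the iPerf tool is extracted, and then run the following command:

iperf3.exe -c <IP of the iperf Server> -t 30 -p 5001 -P 32

The client directs 30 seconds of traffic on port 5001 to the server. The -P flag indicates that 32 simultaneous connections are made to the server node.

The following screen shows the output from this example:

(Optional) To preserve the testing results, run this command:

iperf3.exe -c IPofTheServerToReach -t 30 -p 5001 -P 32 >> output.txt

After completing the previous steps, run the same steps with the roles reversed, so that the server node becomes the client node and vice versa.

Note

iPerf is not the only tool. NTTTCP is an alternative solution for testing.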
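When comparing runs in both directions, it can help to reduce each iPerf result to a single figure. The following Python sketch assumes the client was run with iperf3's JSON output option (-J) rather than the plain-text redirect shown earlier, and that the report contains the usual end.sum_sent and end.sum_received entries with a bits_per_second field. The helper name and the sample numbers are hypothetical.

```python
import json


def summarize_iperf3(json_text):
    """Summarize an iperf3 JSON report (as produced with -J).

    Returns the sent and received totals in Mbps and MB/s.
    """
    end = json.loads(json_text)["end"]
    summary = {}
    for direction in ("sum_sent", "sum_received"):
        bits_per_second = end[direction]["bits_per_second"]
        summary[direction] = {
            "mbps": bits_per_second / 1e6,            # megabits per second
            "mbytes_per_sec": bits_per_second / 8e6,  # megabytes per second
        }
    return summary


# Trimmed, made-up report containing only the fields this sketch reads.
sample_report = json.dumps({
    "end": {
        "sum_sent": {"bits_per_second": 800e6},
        "sum_received": {"bits_per_second": 792e6},
    }
})

for direction, values in summarize_iperf3(sample_report).items():
    print(direction, values)
```

Running the same summary on the reports from both role assignments gives upload and download figures that can be compared directly with the expected throughput.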

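Test VMs running Windows

Load Latte.exe onto the VMs

Download the latest version of Latte.exe.

Consider putting Latte.exe in a separate folder, such as c:\tools.

Allow Latte.exe through the Windows firewall

On the receiver, create an Allow rule in the Windows firewall to allow the Latte.exe traffic to arrive. It's easiest to allow the entire Latte.exe program by name rather than to allow specific TCP ports inbound.

Allow Latte.exe through the Windows firewall like this:

netsh advfirewall firewall add rule program=<PATH>\latte.exe name="Latte" protocol=any dir=in action=allow enable=yes profile=ANY

For example, if you copied Latte.exe to the "c:\tools" folder, this would be the command:

netsh advfirewall firewall add rule program=c:\tools\latte.exe name="Latte" protocol=any dir=in action=allow enable=yes profile=ANY

Run latency tests

Start latte.exe on the receiver (run from CMD, not from PowerShell):

latte -a <Receiver IP address>:<port> -i <iterations>

Around 65,000 iterations is long enough to return representative results.

Any available port number is fine.

If the VM has an IP address of 10.0.0.4, the command looks like this:

latte -a 10.0.0.4:5005 -i 65100

Start latte.exe on the sender (run from CMD, not from PowerShell):

latte -c -a <Receiver IP address>:<port> -i <iterations>

The resulting command is the same as on the receiver, except with the addition of "-c" to indicate that this is the "client" or sender:

latte -c -a 10.0.0.4:5005 -i 65100

Wait for the results. Depending on how far apart the VMs are, the command could take a few minutes to complete. Consider starting with fewer iterations to confirm that the test works before running longer tests.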

Test VMs running Linux

Use SockPerf to test the VMs.

Install SockPerf on the VMs

On the Linux VMs (both sender and receiver), run these commands to prepare SockPerf on the VMs:

RHEL: install Git and other helpful tools

sudo yum install gcc -y -q

sudo yum install git -y -q

sudo yum install gcc-c++ -y

sudo yum install ncurses-devel -y

sudo yum install -y automake

Ubuntu: install Git and other helpful tools

sudo apt-get install build-essential -y

sudo apt-get install git -y -q

sudo apt-get install -y autotools-dev

sudo apt-get install -y automake

Bash: all distros

From the bash command line (assumes Git is installed):

git clone https://github.com/mellanox/sockperf

cd sockperf/

./autogen.sh

./configure --prefix=

The make step is slower and may take several minutes:

make

The make install step goes quickly:

sudo make install

Run SockPerf on the VMs

Sample commands after installation. Server/receiver, assuming the server's IP address is 10.0.0.4:

sudo sockperf sr --tcp -i 10.0.0.4 -p 12345 --full-rtt

Client, assuming the server's IP address is 10.0.0.4:

sockperf ping-pong -i 10.0.0.4 --tcp -m 1400 -t 101 -p 12345 --full-rtt

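Note

Make sure there are no intermediate hops (for example, a virtual appliance) during the throughput testing between the VM and the gateway. If the iPerf/NTTTCP tests above return poor results in terms of overall throughput, refer to the following article to understand the key factors behind the possible root causes of the problem:

In particular, packet capture traces (Wireshark/Network Monitor) collected in parallel from the client and the server during those tests help in assessing poor performance. These traces can reveal packet loss, high latency, MTU size issues, fragmentation, TCP zero window, out-of-order fragments, and so on.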

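Address slow file copy issues

Even if the overall throughput assessed with the previous steps (iPerf/NTTTCP/etc.) was good, you may experience slow file copying when using Windows Explorer or when dragging and dropping through an RDP session. This problem is normally due to one or both of the following factors: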

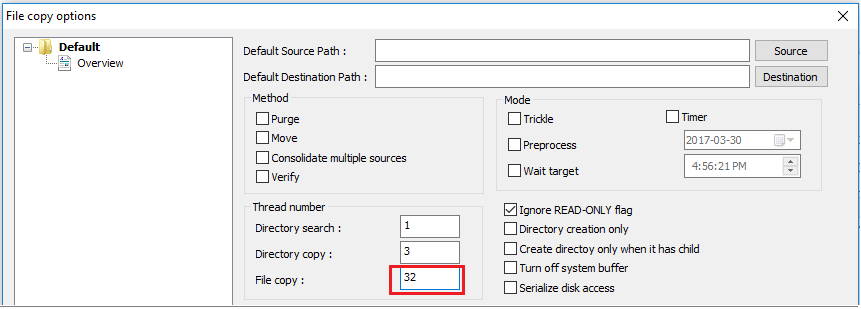

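- File copy applications, such as Windows Explorer and RDP, don't use multiple threads when copying files. For better performance, use a multi-threaded file copy application such as Richcopy to copy files by using 16 or 32 threads. To change the thread number for file copy in Richcopy, click Action > Copy options > File copy.

Note

Not all applications work the same way, and not all applications or processes use all the threads. If you run the test, you may see that some threads are empty and don't provide accurate throughput results. To check your application's file transfer performance, use multiple threads and increase or decrease the thread count step by step to find the optimal throughput for the application or file transfer.

- Insufficient VM disk read/write speed. For more information, see Azure Storage troubleshooting.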

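On-premises device external-facing interface

On the local network gateway, specify the subnets of the on-premises ranges that you want Azure to reach via VPN. At the same time, define the Azure VNet address space on the on-premises device.

- Route-based gateway: The policy or traffic selector for route-based VPNs is configured as any-to-any (or wildcards).

- Policy-based gateway: Policy-based VPNs encrypt and direct packets through IPsec tunnels based on the combinations of address prefixes between your on-premises network and the Azure VNet. The policy (or traffic selector) is usually defined as an access list in the VPN configuration.

- UsePolicyBasedTrafficSelectors connections: Setting "UsePolicyBasedTrafficSelectors" to $True on a connection configures the Azure VPN gateway to connect to an on-premises policy-based VPN firewall. If you enable PolicyBasedTrafficSelectors, make sure that your VPN device has matching traffic selectors defined for all combinations of your on-premises network (local network gateway) prefixes to and from the Azure virtual network prefixes, instead of any-to-any.

Inappropriate configuration may lead to frequent disconnects within the tunnel, packet drops, poor throughput, and latency.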
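To make "all combinations" concrete, the following Python sketch enumerates the prefix pairs that a policy-based configuration would need traffic selectors for. It is only an illustration: the function name and the address prefixes are hypothetical, and the output is a checklist to compare against the device configuration, not a device configuration format.

```python
from ipaddress import ip_network
from itertools import product


def traffic_selector_pairs(on_premises_prefixes, azure_prefixes):
    """List every (on-premises prefix, Azure prefix) combination.

    ip_network() validates each prefix and raises ValueError for a
    malformed one or one with host bits set.
    """
    local = [ip_network(prefix) for prefix in on_premises_prefixes]
    remote = [ip_network(prefix) for prefix in azure_prefixes]
    return [(str(a), str(b)) for a, b in product(local, remote)]


# Hypothetical prefixes, for illustration only.
on_premises = ["10.1.0.0/16", "10.2.0.0/16"]
azure_vnet = ["192.168.0.0/16", "172.16.0.0/16"]

for local_prefix, azure_prefix in traffic_selector_pairs(on_premises, azure_vnet):
    print(f"{local_prefix} <-> {azure_prefix}")
```

Two on-premises prefixes and two Azure prefixes yield four pairs, so the number of selectors grows as the product of the two lists.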

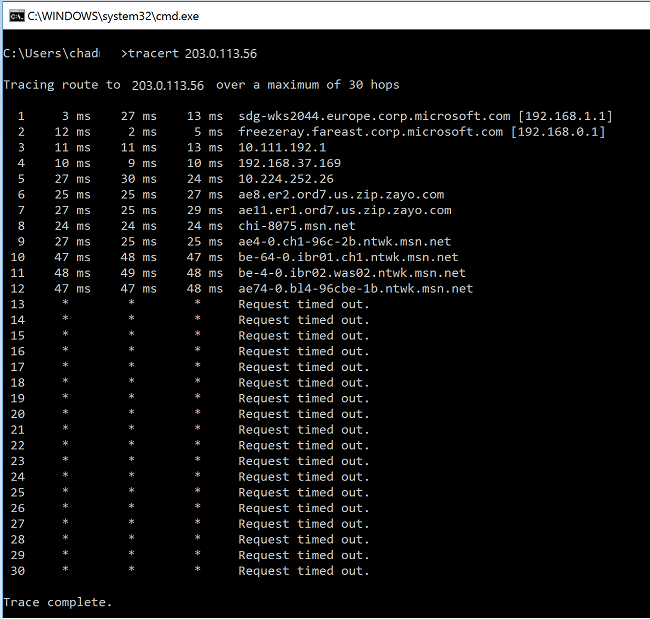

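Check latency

You can check latency by using the following tools:

- WinMTR

- TCPTraceroute

- ping and psping (these tools can provide a good estimate of RTT, but they can't be used in all cases)

If you notice a high latency spike at any of the hops before entering the Microsoft network backbone, you may want to investigate further with your internet service provider.

If you notice an unusually high latency spike at hops within "msn.net", contact Microsoft support for further investigation.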
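The triage rule above can be expressed as a small Python sketch. Everything here is an assumption made for illustration: the hop data is made up, the host names are hypothetical, and a "spike" is defined as a jump of more than 50 ms over the previous hop, which is an arbitrary threshold rather than official guidance.

```python
def triage_latency_spikes(hops, spike_ms=50.0):
    """Return (hostname, who to contact) for each hop showing a spike.

    hops is a list of (hostname, rtt_ms) tuples in path order, for
    example transcribed from a traceroute-style tool. A spike is a
    rise of more than spike_ms over the previous hop (assumption).
    """
    findings = []
    previous_rtt = 0.0
    for hostname, rtt_ms in hops:
        if rtt_ms - previous_rtt > spike_ms:
            inside_backbone = hostname.endswith("msn.net")
            contact = "Microsoft support" if inside_backbone else "ISP"
            findings.append((hostname, contact))
        previous_rtt = rtt_ms
    return findings


# Made-up path with hypothetical host names.
path = [
    ("gw.example-isp.net", 5.0),
    ("core.example-isp.net", 80.0),
    ("ae1.ntwk.msn.net", 85.0),
    ("10.0.0.4", 88.0),
]
print(triage_latency_spikes(path))
```

In this made-up path the jump happens at the second hop, before the "msn.net" hops, so the sketch points to the ISP.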

Next steps

For more information or help, check out the following link: