
Orchestration workflow is one of the features offered by Azure Language. This cloud-based API service uses machine learning to help you build orchestration models that connect Conversational Language Understanding (CLU) and custom question answering projects. Developers can use an orchestration workflow to iteratively tag utterances, train models, and evaluate model performance before deployment.

To simplify building and customizing your model, the service offers a custom playground that you can access through Language Studio. To get started with the service, follow the steps in the quickstart.

This documentation contains the following article types:

- Quickstarts are getting-started instructions to guide you through making requests to the service.

- Concepts provide explanations of the service functionality and features.

- How-to guides contain instructions for using the service in more specific or customized ways.

Example usage scenarios

Orchestration workflow can be used in many scenarios across various industries. For example:

Enterprise chat bot

In a large corporation, an enterprise chat bot might handle various employee affairs. For example, it might answer frequently asked questions from a custom question answering knowledge base, manage calendar operations through a conversational language understanding project, and process interview feedback. To support these different functions, the bot needs to route each incoming request to the correct service. Orchestration workflow lets you connect those skills to a single project that routes incoming requests to the right skill, powering the enterprise bot.
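The routing described above can be sketched in a few lines of Python. This is a minimal illustration, not the service's SDK: it assumes a runtime response in which `topIntent` names the winning orchestration intent and each entry under `intents` carries a `targetProjectKind` plus the connected project's own `result`. The intent names (`FAQ`, `Calendar`), the handler functions, and the sample response are hypothetical and made up for the example.

```python
from typing import Callable, Dict

# Hypothetical skill handlers; a real bot would call its own services here.
def answer_faq(intent_result: dict) -> str:
    # For a question answering target, the nested result holds ranked answers.
    answers = intent_result.get("result", {}).get("answers", [])
    return answers[0]["answer"] if answers else "No answer found."

def manage_calendar(intent_result: dict) -> str:
    # For a CLU target, the nested result carries that project's own prediction.
    clu = intent_result.get("result", {}).get("prediction", {})
    return f"Calendar action: {clu.get('topIntent')}"

def fallback(intent_result: dict) -> str:
    # Used when the top intent isn't connected to a skill the bot knows about.
    return "Sorry, I can't help with that yet."

# Hypothetical orchestration intent names, each connected to one project.
ROUTES: Dict[str, Callable[[dict], str]] = {
    "FAQ": answer_faq,
    "Calendar": manage_calendar,
}

def route(response: dict) -> str:
    """Send a request to the skill connected to the orchestration's top intent."""
    prediction = response["result"]["prediction"]
    top_intent = prediction["topIntent"]
    intent_result = prediction["intents"].get(top_intent, {})
    return ROUTES.get(top_intent, fallback)(intent_result)

# Made-up sample response, trimmed to the fields used above.
sample = {
    "kind": "ConversationResult",
    "result": {
        "query": "How many vacation days do I get?",
        "prediction": {
            "projectKind": "Orchestration",
            "topIntent": "FAQ",
            "intents": {
                "FAQ": {
                    "confidenceScore": 0.92,
                    "targetProjectKind": "QuestionAnswering",
                    "result": {"answers": [{"answer": "You get 20 days per year."}]},
                },
            },
        },
    },
}

print(route(sample))  # prints "You get 20 days per year."
```

Keying the routes on the orchestration intent, rather than on `targetProjectKind`, keeps the dispatch correct when several connected projects share the same kind.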

Project development lifecycle

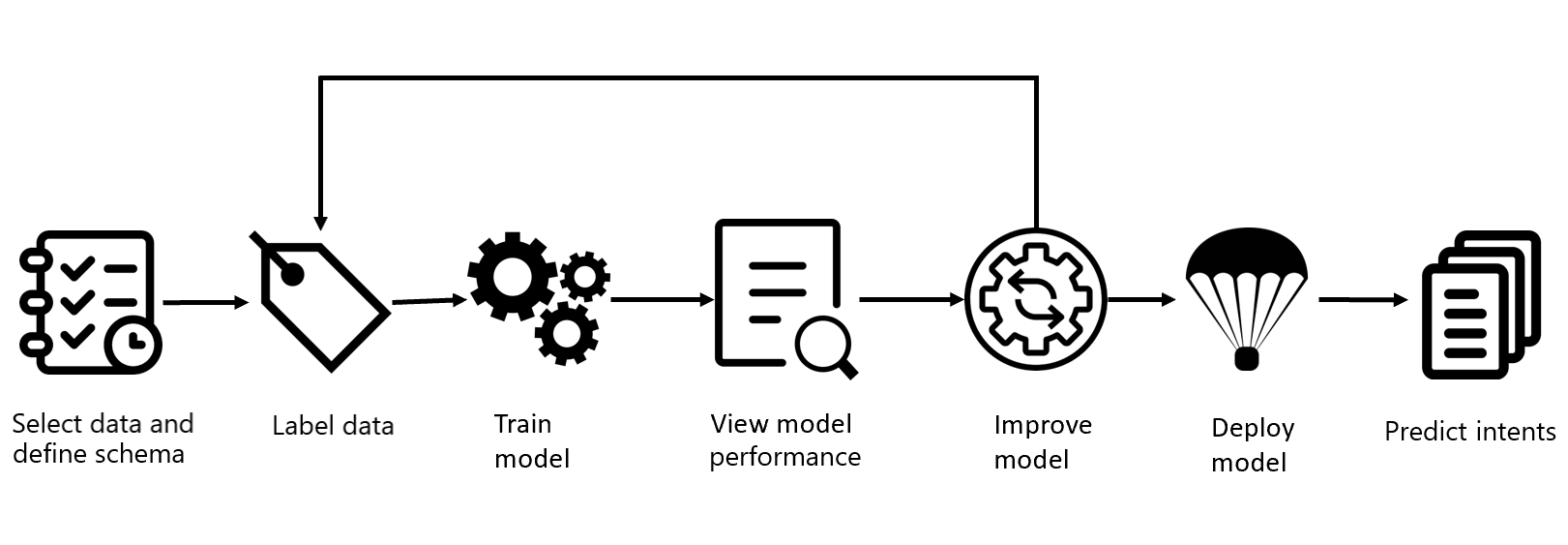

Creating an orchestration workflow project typically involves several different steps.

Follow these steps to get the most out of your model:

1. Define your schema: Know your data and define the actions and relevant information that need to be recognized from users' input utterances. Create the intents that you want to assign to users' utterances and the projects you want to connect to your orchestration project.

2. Label your data: The quality of data tagging is a key factor in determining model performance.

3. Train a model: Your model starts learning from your tagged data.

4. View the model's performance: View the evaluation details for your model to determine how well it performs when introduced to new data.

5. Improve the model: After reviewing the model's performance, learn how you can improve it.

6. Deploy the model: Deploying a model makes it available for use via the prediction API.

7. Predict intents: Use your custom model to predict intents from users' utterances.
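As a rough sketch of the last two steps, the following Python builds a prediction request for a deployed orchestration model using only the standard library. It assumes the runtime route `POST {endpoint}/language/:analyze-conversations` with API version `2023-04-01` and key authentication through the `Ocp-Apim-Subscription-Key` header; check the runtime REST API documentation for the version you target. The endpoint, key, project name, and deployment name are placeholders, and `build_prediction_request` is a hypothetical helper.

```python
import json
import urllib.request

API_VERSION = "2023-04-01"  # Assumed runtime API version; adjust to the one you use.

def build_prediction_request(endpoint: str, key: str, project: str,
                             deployment: str, text: str) -> urllib.request.Request:
    """Build (but don't send) a request asking a deployment to predict an intent."""
    body = {
        "kind": "Conversation",
        "analysisInput": {
            # A single utterance from a single participant.
            "conversationItem": {"id": "1", "participantId": "1", "text": text}
        },
        "parameters": {"projectName": project, "deploymentName": deployment},
    }
    url = f"{endpoint.rstrip('/')}/language/:analyze-conversations?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )

req = build_prediction_request(
    "https://<your-resource>.cognitiveservices.azure.com",  # placeholder endpoint
    "<your-key>", "<project-name>", "<deployment-name>",
    "Schedule a meeting with the design team tomorrow",
)
# Sending it would look like:
# with urllib.request.urlopen(req) as resp:
#     top_intent = json.load(resp)["result"]["prediction"]["topIntent"]
```

Building the request separately from sending it keeps the payload easy to inspect and test before any network call is made.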

Reference documentation and code samples

As you use orchestration workflow, see the following reference documentation and samples for Azure Language:

| Development option / language | Reference documentation | Samples |
|---|---|---|
| REST APIs (Authoring) | REST API documentation | |
| REST APIs (Runtime) | REST API documentation | |
| C# (Runtime) | C# documentation | C# samples |
| Python (Runtime) | Python documentation | Python samples |
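On the authoring side, deploying a trained model is a single REST call. The sketch below assumes the authoring route `PUT {endpoint}/language/authoring/analyze-conversations/projects/{projectName}/deployments/{deploymentName}` with a JSON body naming the `trainedModelLabel`, and API version `2023-04-01`; the service is assumed to accept it as an asynchronous job whose status you poll separately. The helper `build_deploy_request` and the example names (`EnterpriseBot`, `production`, `v1`, the `example` endpoint) are hypothetical.

```python
import json
import urllib.parse
import urllib.request

API_VERSION = "2023-04-01"  # Assumed authoring API version; adjust as needed.

def build_deploy_request(endpoint: str, key: str, project: str,
                         deployment: str, model_label: str) -> urllib.request.Request:
    """Build (but don't send) a request that deploys a trained model."""
    # Percent-encode the names so they are safe to place in the URL path.
    path = (
        "/language/authoring/analyze-conversations/projects/"
        f"{urllib.parse.quote(project)}/deployments/{urllib.parse.quote(deployment)}"
    )
    return urllib.request.Request(
        f"{endpoint.rstrip('/')}{path}?api-version={API_VERSION}",
        data=json.dumps({"trainedModelLabel": model_label}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="PUT",
    )

deploy_req = build_deploy_request(
    "https://example.cognitiveservices.azure.com",  # hypothetical endpoint
    "<your-key>", "EnterpriseBot", "production", "v1",
)
```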

Next steps

Use the quickstart article to start using orchestration workflow.

As you go through the project development lifecycle, review the glossary to learn more about the terms used throughout the documentation for this feature.

Remember to view the service limits for information such as regional availability.