In this article, you learn how to deploy an endpoint for a custom speech model. Except for batch transcription, you must deploy a custom endpoint to use a custom speech model.

Tip

The Batch transcription API doesn't require a hosted deployment endpoint for custom speech. You can conserve resources by only using the custom speech model for batch transcription. For more information, see Speech service pricing.

You can deploy an endpoint for a base or custom model, and then update the endpoint later to use a better-trained model.

Note

Endpoints used by F0 Speech resources are deleted after seven days.

Add a deployment endpoint

To create a custom endpoint, follow these steps:

Sign in to the Speech Studio.

Select Custom speech > Your project name > Deploy models.

If this is your first endpoint, notice that the table has no endpoints listed. After you create an endpoint, use this page to track each deployed endpoint.

Select Deploy model to start the new endpoint wizard.

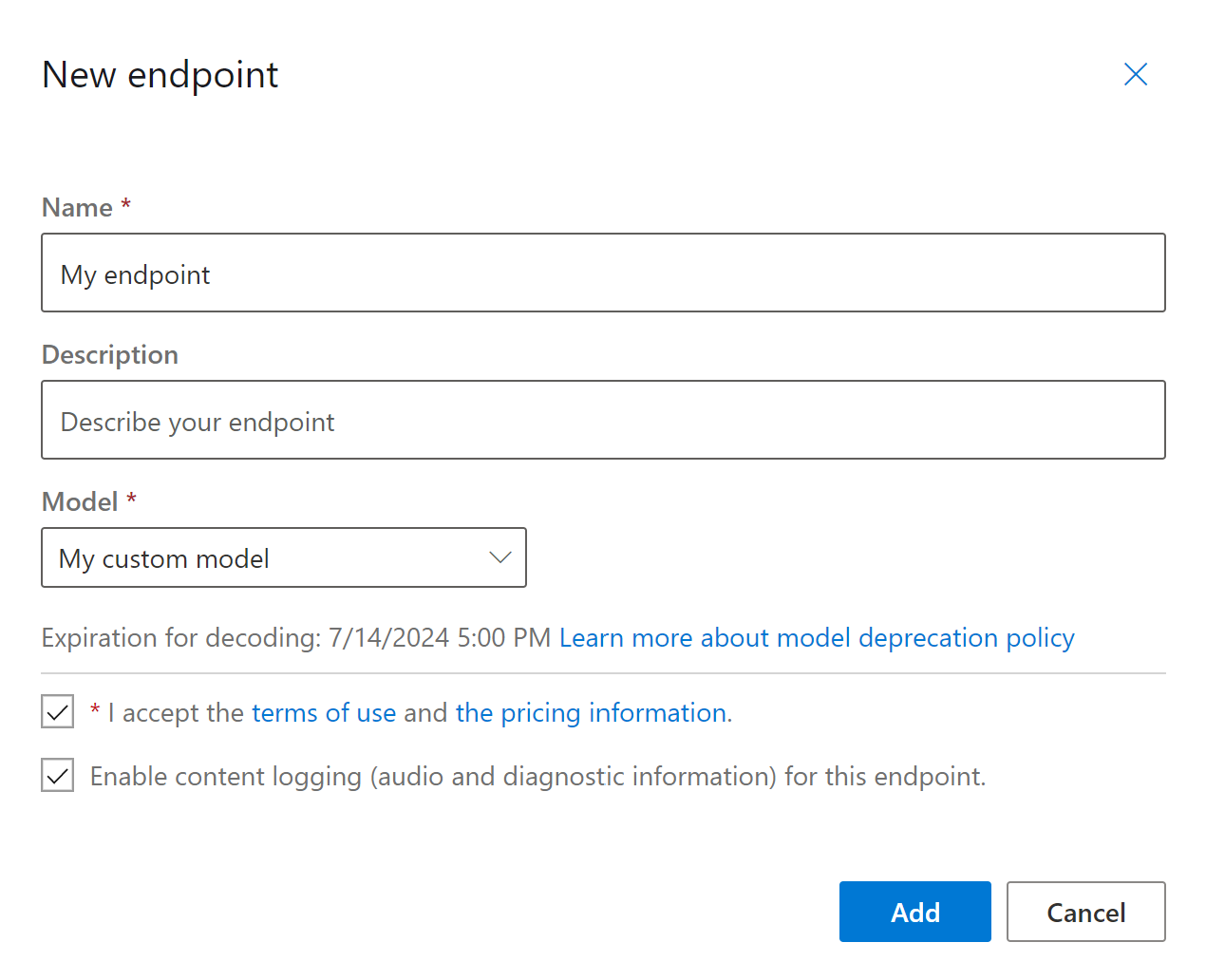

On the New endpoint page, enter a name and description for your custom endpoint.

Select the custom model that you want to associate with the endpoint.

Optionally, check the box to enable audio and diagnostic logging of the endpoint's traffic.

Select Add to save and deploy the endpoint.

On the main Deploy models page, details about the new endpoint are displayed in a table, such as name, description, status, and expiration date. It can take up to 30 minutes to instantiate a new endpoint that uses your custom models. When the status of the deployment changes to Succeeded, the endpoint is ready to use.

Important

Take note of the model expiration date. This date is the last day that you can use your custom model for speech recognition. For more information, see Model and endpoint lifecycle.

Select the endpoint link to view information specific to it, such as the endpoint key, endpoint URL, and sample code.

Before proceeding, make sure that you have the Speech CLI installed and configured.

To create an endpoint and deploy a model, use the spx csr endpoint create command. Construct the request parameters according to the following instructions:

- Set the project property to the ID of an existing project. Use the project property so you can manage fine-tuning for custom speech in the Speech Studio. To get the project ID, see Get the project ID for the REST API.

- Set the required model property to the ID of the model that you want deployed to the endpoint.

- Set the required language property. The endpoint locale must match the locale of the model. You can't change the locale later. The Speech CLI language property corresponds to the locale property in the JSON request and response.

- Set the required name property. This name appears in the Speech Studio. The Speech CLI name property corresponds to the displayName property in the JSON request and response.

- Optionally, set the logging property. Set this property to enabled to turn on audio and diagnostic logging of the endpoint's traffic. The default is false.

Here's an example Speech CLI command to create an endpoint and deploy a model:

spx csr endpoint create --api-version v3.2 --project YourProjectId --model YourModelId --name "My Endpoint" --description "My Endpoint Description" --language "en-US"

Important

You must set --api-version v3.2. The Speech CLI uses the REST API but doesn't yet support versions later than v3.2.
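If you script deployments, the same command can be assembled programmatically. The following Python sketch is illustrative only: the function name build_endpoint_create_command is hypothetical, and it uses only the flags that appear in the example command above.

```python
import shlex


def build_endpoint_create_command(project_id, model_id, name, language, description=None):
    """Assemble the argument list for 'spx csr endpoint create'.

    Only flags shown in the example command are used; v3.2 is pinned
    because the Speech CLI doesn't support later API versions.
    """
    args = [
        "spx", "csr", "endpoint", "create",
        "--api-version", "v3.2",
        "--project", project_id,
        "--model", model_id,
        "--name", name,
        "--language", language,
    ]
    if description:
        args += ["--description", description]
    return args


cmd = build_endpoint_create_command(
    "YourProjectId", "YourModelId", "My Endpoint", "en-US", "My Endpoint Description"
)
# shlex.join quotes arguments that contain spaces so the line can be pasted into a shell.
print(shlex.join(cmd))
```

Passing the list to subprocess.run(cmd, check=True) would run the command, assuming spx is installed and on the PATH.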

You receive a response body in the following format:

{

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

},

"links": {

"logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs",

"restInteractive": "https://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restConversation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restDictation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketInteractive": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketConversation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketDictation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee"

},

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"lastActionDateTime": "2024-07-15T16:29:36Z",

"status": "NotStarted",

"createdDateTime": "2024-07-15T16:29:36Z",

"locale": "en-US",

"displayName": "My Endpoint",

"description": "My Endpoint Description"

}

The top-level self property in the response body is the endpoint's URI. Use this URI to get details about the endpoint's project, model, and logs. You also use this URI to update the endpoint.
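As a rough illustration of how these fields might be consumed, the following Python sketch pulls the endpoint ID out of the self URI and collects a few other values from a response shaped like the one above. The helper names are hypothetical, and the sample dictionary is an abbreviated copy of the example response.

```python
from urllib.parse import urlparse


def endpoint_id_from_self(self_uri):
    """Return the last path segment of the endpoint URI, which is the endpoint ID."""
    return urlparse(self_uri).path.rstrip("/").rsplit("/", 1)[-1]


def summarize_endpoint(response):
    """Collect the fields most often needed after creating an endpoint."""
    return {
        "endpoint_id": endpoint_id_from_self(response["self"]),
        "status": response["status"],
        "model_uri": response["model"]["self"],
        "logs_uri": response["links"]["logs"],
    }


# Abbreviated copy of the example response body.
sample = {
    "self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",
    "model": {
        "self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"
    },
    "links": {
        "logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs"
    },
    "status": "NotStarted",
}

summary = summarize_endpoint(sample)
print(summary["endpoint_id"], summary["status"])
```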

For Speech CLI help with endpoints, run the following command:

spx help csr endpoint

To create an endpoint and deploy a model, use the Endpoints_Create operation of the Speech to text REST API. Construct the request body according to the following instructions:

- Set the project property to the URI of an existing project. Set this property so you can view and manage the endpoint in the Speech Studio. To get the project ID, see Get the project ID for the REST API.

- Set the required model property to the URI of the model that you want deployed to the endpoint.

- Set the required locale property. The endpoint locale must match the locale of the model. You can't change the locale later.

- Set the required displayName property. This name appears in the Speech Studio.

- Optionally, set the loggingEnabled property within properties. Set this property to true to turn on audio and diagnostic logging of the endpoint's traffic. The default is false.

Make an HTTP POST request using the URI as shown in the following Endpoints_Create example. Replace YourSpeechResourceKey with your Speech resource key, replace YourServiceRegion with your Speech resource region, and set the request body properties as previously described.

curl -v -X POST -H "Ocp-Apim-Subscription-Key: YourSpeechResourceKey" -H "Content-Type: application/json" -d '{

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"displayName": "My Endpoint",

"description": "My Endpoint Description",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/base/ddddeeee-3333-ffff-4444-aaaa5555bbbb"

},

"locale": "en-US"

}' "https://YourServiceRegion.api.cognitive.azure.cn/speechtotext/v3.2/endpoints"
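For readers who prefer to issue the request from code, here is a minimal Python sketch of the same POST built with the standard library. The function name build_create_endpoint_request and its parameters are hypothetical; the URL pattern, header names, and body properties are taken from the curl example. The sketch only constructs the request and doesn't send it.

```python
import json
import urllib.request


def build_create_endpoint_request(region, key, project_uri, model_uri, locale,
                                  display_name, description="", logging_enabled=False):
    """Build (but don't send) an Endpoints_Create request."""
    body = {
        "project": {"self": project_uri},
        "model": {"self": model_uri},
        "locale": locale,  # must match the locale of the model
        "displayName": display_name,
        "description": description,
        "properties": {"loggingEnabled": logging_enabled},
    }
    return urllib.request.Request(
        url=f"https://{region}.api.cognitive.azure.cn/speechtotext/v3.2/endpoints",
        data=json.dumps(body).encode("utf-8"),  # json.dumps never emits trailing commas
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_endpoint_request(
    region="YourServiceRegion",
    key="YourSpeechResourceKey",
    project_uri="https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa",
    model_uri="https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/base/ddddeeee-3333-ffff-4444-aaaa5555bbbb",
    locale="en-US",
    display_name="My Endpoint",
    description="My Endpoint Description",
    logging_enabled=True,
)
print(request.get_method(), request.full_url)
```

To send the request, pass it to urllib.request.urlopen and decode the JSON response body.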

You receive a response body in the following format:

{

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

},

"links": {

"logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs",

"restInteractive": "https://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restConversation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restDictation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketInteractive": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketConversation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketDictation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee"

},

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"lastActionDateTime": "2024-07-15T16:29:36Z",

"status": "NotStarted",

"createdDateTime": "2024-07-15T16:29:36Z",

"locale": "en-US",

"displayName": "My Endpoint",

"description": "My Endpoint Description"

}

The top-level self property in the response body is the endpoint's URI. Use this URI to get details about the endpoint's project, model, and logs. You also use this URI to update or delete the endpoint.
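Because a new endpoint starts in the NotStarted state and can take up to 30 minutes to become ready, a client usually has to poll before using it. The following Python sketch shows one way that loop might look. The function wait_until_deployed is hypothetical, the caller supplies fetch_endpoint (any function that returns the endpoint's JSON as a dictionary), and treating a status of Failed as terminal is an assumption, because this article shows only NotStarted and Succeeded.

```python
import time


def wait_until_deployed(fetch_endpoint, interval_seconds=30.0,
                        timeout_seconds=1800.0, sleep=time.sleep):
    """Poll until the endpoint status is Succeeded, then return the endpoint.

    fetch_endpoint: callable returning the endpoint JSON as a dict.
    timeout_seconds defaults to 30 minutes, the stated upper bound for deployment.
    """
    waited = 0.0
    while True:
        endpoint = fetch_endpoint()
        status = endpoint.get("status")
        if status == "Succeeded":
            return endpoint
        if status == "Failed":  # assumed terminal status, not shown in this article
            raise RuntimeError("Endpoint deployment failed")
        if waited >= timeout_seconds:
            raise TimeoutError(f"Endpoint still {status!r} after {waited:.0f} seconds")
        sleep(interval_seconds)
        waited += interval_seconds


# Demonstration with canned responses instead of real HTTP calls.
canned = iter([{"status": "NotStarted"}, {"status": "NotStarted"}, {"status": "Succeeded"}])
ready = wait_until_deployed(lambda: next(canned), interval_seconds=1, sleep=lambda s: None)
print(ready["status"])
```

Injecting the fetch and sleep functions keeps the loop independent of any particular HTTP client and makes it easy to exercise without waiting.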

Change model and redeploy endpoint

You can update an endpoint to use another model created by the same Speech resource. As previously mentioned, you must update the endpoint's model before the model expires.

To use a new model and redeploy the custom endpoint:

- Sign in to the Speech Studio.

- Select Custom speech > Your project name > Deploy models.

- Select the link to an endpoint by name, and then select Change model.

- Select the new model that you want the endpoint to use.

- Select Done to save and redeploy the endpoint.

Before proceeding, make sure that you have the Speech CLI installed and configured.

To redeploy the custom endpoint with a new model, use the spx csr endpoint update command. Construct the request parameters according to the following instructions:

- Set the required endpoint property to the ID of the endpoint that you want deployed.

- Set the required model property to the ID of the model that you want deployed to the endpoint.

Here's an example Speech CLI command that redeploys the custom endpoint with a new model:

spx csr endpoint update --api-version v3.2 --endpoint YourEndpointId --model YourModelId

Important

You must set --api-version v3.2. The Speech CLI uses the REST API but doesn't yet support versions later than v3.2.

You receive a response body in the following format:

{

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

},

"links": {

"logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs",

"restInteractive": "https://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restConversation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restDictation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketInteractive": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketConversation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketDictation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee"

},

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"lastActionDateTime": "2024-07-15T16:30:12Z",

"status": "Succeeded",

"createdDateTime": "2024-07-15T16:29:36Z",

"locale": "en-US",

"displayName": "My Endpoint",

"description": "My Endpoint Description"

}

For Speech CLI help with endpoints, run the following command:

spx help csr endpoint

To redeploy the custom endpoint with a new model, use the Endpoints_Update operation of the Speech to text REST API. Construct the request body according to the following instructions:

- Set the model property to the URI of the model that you want deployed to the endpoint.

Make an HTTP PATCH request using the URI as shown in the following example. Replace YourSpeechResourceKey with your Speech resource key, replace YourServiceRegion with your Speech resource region, replace YourEndpointId with your endpoint ID, and set the request body properties as previously described.

curl -v -X PATCH -H "Ocp-Apim-Subscription-Key: YourSpeechResourceKey" -H "Content-Type: application/json" -d '{

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

}

}' "https://YourServiceRegion.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/YourEndpointId"
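The same PATCH can be sketched in Python with the standard library. As before, this is an illustration under stated assumptions: build_update_model_request is a hypothetical name, and the URL pattern and headers mirror the curl example. The request is constructed but not sent.

```python
import json
import urllib.request


def build_update_model_request(region, endpoint_id, key, model_uri):
    """Build (but don't send) an Endpoints_Update request that swaps the model."""
    body = {"model": {"self": model_uri}}
    return urllib.request.Request(
        url=f"https://{region}.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/{endpoint_id}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


patch_request = build_update_model_request(
    region="YourServiceRegion",
    endpoint_id="YourEndpointId",
    key="YourSpeechResourceKey",
    model_uri="https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff",
)
print(patch_request.get_method(), patch_request.full_url)
```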

You receive a response body in the following format:

{

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

},

"links": {

"logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs",

"restInteractive": "https://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restConversation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restDictation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketInteractive": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketConversation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketDictation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee"

},

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"lastActionDateTime": "2024-07-15T16:30:12Z",

"status": "Succeeded",

"createdDateTime": "2024-07-15T16:29:36Z",

"locale": "en-US",

"displayName": "My Endpoint",

"description": "My Endpoint Description"

}

The redeployment takes several minutes to complete. In the meantime, your endpoint uses the previous model without interruption of service.

View logging data

You can export logging data if you enabled logging when you created the endpoint.

To download the endpoint logs:

- Sign in to the Speech Studio.

- Select Custom speech > Your project name > Deploy models.

- Select the link by endpoint name.

- Under Content logging, select Download log.

Before proceeding, make sure that you have the Speech CLI installed and configured.

To get logs for an endpoint, use the spx csr endpoint list command. Construct the request parameters according to the following instructions:

- Set the required endpoint property to the ID of the endpoint whose logs you want to get.

Here's an example Speech CLI command that gets logs for an endpoint:

spx csr endpoint list --api-version v3.2 --endpoint YourEndpointId

Important

You must set --api-version v3.2. The Speech CLI uses the REST API but doesn't yet support versions later than v3.2.

The response body lists the location of each log file, along with more details.

To get logs for an endpoint, start by using the Endpoints_Get operation of the Speech to text REST API.

Make an HTTP GET request using the URI as shown in the following example. Replace YourEndpointId with your endpoint ID, replace YourSpeechResourceKey with your Speech resource key, and replace YourServiceRegion with your Speech resource region.

curl -v -X GET "https://YourServiceRegion.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/YourEndpointId" -H "Ocp-Apim-Subscription-Key: YourSpeechResourceKey"

You receive a response body in the following format:

{

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",

"model": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/models/bbbbcccc-1111-dddd-2222-eeee3333ffff"

},

"links": {

"logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs",

"restInteractive": "https://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restConversation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"restDictation": "https://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketInteractive": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/interactive/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketConversation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/conversation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee",

"webSocketDictation": "wss://chinanorth.stt.speech.azure.cn/speech/recognition/dictation/cognitiveservices/v1?cid=aaaabbbb-0000-cccc-1111-dddd2222eeee"

},

"project": {

"self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/projects/ccccdddd-2222-eeee-3333-ffff4444aaaa"

},

"properties": {

"loggingEnabled": true

},

"lastActionDateTime": "2024-07-15T16:30:12Z",

"status": "Succeeded",

"createdDateTime": "2024-07-15T16:29:36Z",

"locale": "en-US",

"displayName": "My Endpoint",

"description": "My Endpoint Description"

}

Make an HTTP GET request using the "logs" URI from the previous response body. Replace YourEndpointId with your endpoint ID, replace YourSpeechResourceKey with your Speech resource key, and replace YourServiceRegion with your Speech resource region.

curl -v -X GET "https://YourServiceRegion.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/YourEndpointId/files/logs" -H "Ocp-Apim-Subscription-Key: YourSpeechResourceKey"

The response body lists the location of each log file, along with more details.
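The two-step flow (get the endpoint, then follow its "logs" link) might be sketched in Python as follows. The helper names are hypothetical, and the sample dictionary is an abbreviated copy of the Endpoints_Get response shown earlier. The sketch stops at building the second request, because this article doesn't show the shape of the logs response.

```python
import urllib.request


def build_get_request(uri, key):
    """Build (but don't send) an authenticated GET request."""
    return urllib.request.Request(
        uri,
        headers={"Ocp-Apim-Subscription-Key": key},
        method="GET",
    )


def build_logs_request(endpoint_response, key):
    """Follow the 'logs' link from an Endpoints_Get response body."""
    return build_get_request(endpoint_response["links"]["logs"], key)


# Abbreviated copy of the Endpoints_Get example response.
endpoint_response = {
    "self": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/aaaabbbb-0000-cccc-1111-dddd2222eeee",
    "links": {
        "logs": "https://chinanorth2.api.cognitive.azure.cn/speechtotext/v3.2/endpoints/a07164e8-22d1-4eb7-aa31-bf6bb1097f37/files/logs"
    },
    "properties": {"loggingEnabled": True},
}

logs_request = build_logs_request(endpoint_response, "YourSpeechResourceKey")
print(logs_request.get_method(), logs_request.full_url)
```

Reading the link from the response, rather than rebuilding the URL by hand, keeps the client correct even if the service returns a logs URI with a different host or ID than expected.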

Microsoft-owned storage keeps logging data for 30 days, and then it removes the data. If you link your own storage account to the Azure AI services subscription, the logging data isn't automatically deleted.