
An ingress controller is a piece of software that provides reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services. Kubernetes ingress resources are used to configure the ingress rules and routes for individual Kubernetes services. Using an ingress controller and ingress rules, a single IP address can be used to route traffic to multiple services in a Kubernetes cluster.

This article shows you how to deploy the NGINX ingress controller in an Azure Kubernetes Service (AKS) cluster. The ingress controller is configured with a static public IP address. The cert-manager project is used to automatically generate and configure Let's Encrypt certificates. Finally, two applications are run in the AKS cluster, each of which is accessible over a single IP address.

You can also:

- Create a basic ingress controller with external network connectivity

- Enable the HTTP application routing add-on

- Create an ingress controller that uses your own TLS certificates

- Create an ingress controller that uses Let's Encrypt to automatically generate TLS certificates with a dynamic public IP address

Before you begin

This article assumes that you have an existing AKS cluster. If you need an AKS cluster, see the AKS quickstart using the Azure CLI, using Azure PowerShell, or using the Azure portal.

This article uses Helm 3 to install the NGINX ingress controller on a supported version of Kubernetes. Make sure that you are using the latest release of Helm and have access to the ingress-nginx and jetstack Helm repositories. The steps outlined in this article may not be compatible with previous versions of the Helm chart, NGINX ingress controller, or Kubernetes.

For more information on configuring and using Helm, see Install applications with Helm in Azure Kubernetes Service (AKS). For upgrade instructions, see the Helm install docs.

In addition, this article assumes you have an existing AKS cluster with an integrated ACR. For more details on creating an AKS cluster with an integrated ACR, see Authenticate with Azure Container Registry from Azure Kubernetes Service.

This article also requires that you are running the Azure CLI version 2.0.64 or later. Run az --version to find the version. If you need to install or upgrade, see Install Azure CLI.
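If you script these prerequisites, a small version comparison saves you from reading the output of az --version by eye. The following POSIX shell sketch compares dotted version strings numerically. version_ge is a hypothetical helper name; you pass it the azure-cli version that az --version prints.

```shell
# Hypothetical helper: succeed if dotted version $1 is >= version $2.
# Components are compared numerically, so 2.10.0 is newer than 2.9.1,
# and missing components count as 0 (2.1 equals 2.1.0).
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      xi = (i <= na) ? x[i] + 0 : 0
      yi = (i <= nb) ? y[i] + 0 : 0
      if (xi > yi) exit 0
      if (xi < yi) exit 1
    }
    exit 0
  }'
}
```

For example, version_ge 2.40.0 2.0.64 && echo "Azure CLI is new enough" prints the message, while a 2.0.63 installation makes the function return a non-zero status.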

Import the images used by the Helm chart into your ACR

This article uses the NGINX ingress controller Helm chart, which relies on three container images, and the cert-manager Helm chart, which relies on three more.

Use az acr import to import those six images into your ACR.

REGISTRY_NAME=<REGISTRY_NAME>

SOURCE_REGISTRY=k8sgcr.azk8s.cn

CONTROLLER_IMAGE=ingress-nginx/controller

CONTROLLER_TAG=v1.0.4

PATCH_IMAGE=ingress-nginx/kube-webhook-certgen

PATCH_TAG=v1.1.1

DEFAULTBACKEND_IMAGE=defaultbackend-amd64

DEFAULTBACKEND_TAG=1.5

CERT_MANAGER_REGISTRY=quay.azk8s.cn

CERT_MANAGER_TAG=v1.5.4

CERT_MANAGER_IMAGE_CONTROLLER=jetstack/cert-manager-controller

CERT_MANAGER_IMAGE_WEBHOOK=jetstack/cert-manager-webhook

CERT_MANAGER_IMAGE_CAINJECTOR=jetstack/cert-manager-cainjector

az acr import --name $REGISTRY_NAME --source $SOURCE_REGISTRY/$CONTROLLER_IMAGE:$CONTROLLER_TAG --image $CONTROLLER_IMAGE:$CONTROLLER_TAG

az acr import --name $REGISTRY_NAME --source $SOURCE_REGISTRY/$PATCH_IMAGE:$PATCH_TAG --image $PATCH_IMAGE:$PATCH_TAG

az acr import --name $REGISTRY_NAME --source $SOURCE_REGISTRY/$DEFAULTBACKEND_IMAGE:$DEFAULTBACKEND_TAG --image $DEFAULTBACKEND_IMAGE:$DEFAULTBACKEND_TAG

az acr import --name $REGISTRY_NAME --source $CERT_MANAGER_REGISTRY/$CERT_MANAGER_IMAGE_CONTROLLER:$CERT_MANAGER_TAG --image $CERT_MANAGER_IMAGE_CONTROLLER:$CERT_MANAGER_TAG

az acr import --name $REGISTRY_NAME --source $CERT_MANAGER_REGISTRY/$CERT_MANAGER_IMAGE_WEBHOOK:$CERT_MANAGER_TAG --image $CERT_MANAGER_IMAGE_WEBHOOK:$CERT_MANAGER_TAG

az acr import --name $REGISTRY_NAME --source $CERT_MANAGER_REGISTRY/$CERT_MANAGER_IMAGE_CAINJECTOR:$CERT_MANAGER_TAG --image $CERT_MANAGER_IMAGE_CAINJECTOR:$CERT_MANAGER_TAG
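The six az acr import commands differ only in their source registry and image reference. The following sketch loops over them instead, assuming the variables above are set in the same shell session. The function names acr_import and import_all and the DRY_RUN switch are hypothetical; with DRY_RUN=1 (the default) the commands are only printed so you can review them first.

```shell
# Hypothetical helper: print (DRY_RUN=1, the default) or run one az acr import.
# $1 is the source registry, $2 is "repository:tag"; the image keeps the same
# repository and tag inside your ACR.
acr_import() {
  src_registry=$1
  image_ref=$2
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "az acr import --name $REGISTRY_NAME --source $src_registry/$image_ref --image $image_ref"
  else
    az acr import --name "$REGISTRY_NAME" --source "$src_registry/$image_ref" --image "$image_ref"
  fi
}

# Import the three ingress-nginx images, then the three cert-manager images.
import_all() {
  for ref in "$CONTROLLER_IMAGE:$CONTROLLER_TAG" \
             "$PATCH_IMAGE:$PATCH_TAG" \
             "$DEFAULTBACKEND_IMAGE:$DEFAULTBACKEND_TAG"; do
    acr_import "$SOURCE_REGISTRY" "$ref" || return 1
  done
  for img in "$CERT_MANAGER_IMAGE_CONTROLLER" \
             "$CERT_MANAGER_IMAGE_WEBHOOK" \
             "$CERT_MANAGER_IMAGE_CAINJECTOR"; do
    acr_import "$CERT_MANAGER_REGISTRY" "$img:$CERT_MANAGER_TAG" || return 1
  done
}
```

Run import_all once to review the output, then DRY_RUN=0 and import_all again to perform the imports. Stopping at the first failure keeps a typo in one tag from being hidden by the later imports.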

Note

In addition to importing container images into your ACR, you can also import Helm charts into your ACR. For more information, see Push and pull Helm charts to an Azure container registry.

Create an ingress controller

By default, an NGINX ingress controller is created with a new public IP address assignment. This public IP address is static only for the lifespan of the ingress controller, and it's lost if the controller is deleted and re-created. A common configuration requirement is to provide the NGINX ingress controller with an existing static public IP address, which remains if the ingress controller is deleted. This approach lets you use existing DNS records and network configurations consistently throughout the lifecycle of your applications.

If you need to create a static public IP address, first get the name of the node resource group of the AKS cluster with the az aks show command:

az aks show --resource-group myResourceGroup --name myAKSCluster --query nodeResourceGroup -o tsv

Next, create a public IP address with the static allocation method using the az network public-ip create command. The following example creates a public IP address named myAKSPublicIP in the node resource group obtained in the previous step:

az network public-ip create --resource-group MC_myResourceGroup_myAKSCluster_chinaeast2 --name myAKSPublicIP --sku Standard --allocation-method static --query publicIp.ipAddress -o tsv

The above commands create an IP address that will be deleted if you delete your AKS cluster.
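Later steps need both the node resource group name and the new IP address, so it can help to capture them in shell variables rather than copy them by hand. The following sketch wraps the two commands above; create_static_ip and default_node_rg are hypothetical helper names, while myAKSPublicIP and the az parameters are the ones this article uses.

```shell
# Sketch: capture the node resource group and the new static IP address in
# NODE_RG and STATIC_IP so later commands can reuse them.
# $1 is the cluster's resource group, $2 is the cluster name.
create_static_ip() {
  rg=$1
  cluster=$2
  NODE_RG=$(az aks show --resource-group "$rg" --name "$cluster" \
    --query nodeResourceGroup -o tsv) || return 1
  STATIC_IP=$(az network public-ip create --resource-group "$NODE_RG" \
    --name myAKSPublicIP --sku Standard --allocation-method static \
    --query publicIp.ipAddress -o tsv) || return 1
  echo "$STATIC_IP"
}

# For reference only: AKS names the node resource group
# MC_<resource-group>_<cluster-name>_<region> by default. A cluster created
# with a custom node resource group differs, so prefer the az aks show value.
default_node_rg() {
  printf 'MC_%s_%s_%s\n' "$1" "$2" "$3"
}
```

Call create_static_ip myResourceGroup myAKSCluster directly rather than inside $(...); a command substitution runs in a subshell, so NODE_RG and STATIC_IP would not survive it.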

Alternatively, you can create an IP address in a different resource group that can be managed separately from your AKS cluster. If you create an IP address in a different resource group, ensure the following:

- The cluster identity used by the AKS cluster has delegated permissions to the resource group, such as Network Contributor.

- Add the --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-resource-group"="<RESOURCE_GROUP>" parameter to the Helm install command. Replace <RESOURCE_GROUP> with the name of the resource group where the IP address resides.

For more information, see Use a static public IP address and DNS label with the AKS load balancer.
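Annotation names such as service.beta.kubernetes.io/azure-load-balancer-resource-group contain dots, which Helm's --set syntax would otherwise read as separators between nested keys. The backslashes escape those dots, and the surrounding double quotes keep the shell from stripping the backslashes before Helm sees them. The following sketch builds the same value programmatically. annotation_set_arg is a hypothetical helper, and it prints the string Helm should receive after the shell has removed its own quoting.

```shell
# Hypothetical helper: print the --set value for a controller service
# annotation. Every dot in the annotation name ($1) is escaped with a
# backslash so Helm treats the whole name as a single key.
annotation_set_arg() {
  escaped=$(printf '%s' "$1" | sed 's/\./\\./g')
  printf 'controller.service.annotations.%s=%s\n' "$escaped" "$2"
}
```

For example, --set "$(annotation_set_arg service.beta.kubernetes.io/azure-load-balancer-resource-group "$RESOURCE_GROUP")" passes the same parameter as the hand-written form, where RESOURCE_GROUP is a hypothetical variable holding your resource group name. Quoting the command substitution matters for the same reason as before: it keeps the backslashes intact.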

Now deploy the ingress-nginx chart with Helm. For added redundancy, two replicas of the NGINX ingress controller are deployed with the --set controller.replicaCount parameter. To fully benefit from running replicas of the ingress controller, make sure there's more than one node in your AKS cluster.

IP and DNS label

You must pass two additional parameters to the Helm release so that the ingress controller is aware of both the static IP address of the load balancer to allocate to the ingress controller service and the DNS name label to apply to the public IP address resource. For the HTTPS certificates to work correctly, the DNS name label is used to configure an FQDN for the ingress controller IP address.

- Add the --set controller.service.loadBalancerIP parameter. Specify your own public IP address that was created in the previous step.

- Add the --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-dns-label-name" parameter. Specify a DNS name label to be applied to the public IP address that was created in the previous step. This label will create a DNS name of the form <LABEL>.<AZURE REGION NAME>.cloudapp.chinacloudapi.cn.
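Before you install the chart, you can check a candidate DNS name label and preview the FQDN it produces. In the following sketch, dns_label_fqdn is a hypothetical helper, and the validation pattern is an assumption based on Azure's published rule for public IP domain name labels (a lowercase letter first, 3 to 63 characters of a-z, 0-9, or hyphen, and no trailing hyphen). Azure remains the final authority, and this check can't tell whether the label is already taken in the region.

```shell
# Sketch: validate a DNS name label ($1) and print the FQDN it would get in
# region $2. The regular expression is an assumed copy of Azure's rule.
dns_label_fqdn() {
  label=$1
  region=$2
  if ! printf '%s\n' "$label" | grep -Eq '^[a-z][a-z0-9-]{1,61}[a-z0-9]$'; then
    echo "invalid DNS label: $label" >&2
    return 1
  fi
  printf '%s.%s.cloudapp.chinacloudapi.cn\n' "$label" "$region"
}
```

For example, dns_label_fqdn demo-aks-ingress chinaeast2 prints demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn, the host name used in the ingress examples later in this article.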

The ingress controller also needs to be scheduled on a Linux node. Windows Server nodes shouldn't run the ingress controller. A node selector is specified using the --set controller.nodeSelector parameter to tell the Kubernetes scheduler to run the NGINX ingress controller on a Linux-based node.

Tip

The following example creates a Kubernetes namespace for the ingress resources named ingress-basic and is intended to work within that namespace. Specify a namespace for your own environment as needed. If your AKS cluster is not Kubernetes RBAC enabled, add --set rbac.create=false to the Helm commands.

Tip

If you would like to enable client source IP preservation for requests to containers in your cluster, add --set controller.service.externalTrafficPolicy=Local to the Helm install command. The client source IP is stored in the request header under X-Forwarded-For. When using an ingress controller with client source IP preservation enabled, TLS pass-through will not work.
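If you apply the two tips above in a script, one way to keep the main helm install command unchanged is to generate the optional flags separately. In the following sketch, optional_helm_flags is a hypothetical helper, and NO_RBAC and PRESERVE_CLIENT_IP are illustrative variable names, not chart settings; only the --set values themselves come from the tips.

```shell
# Hypothetical helper: print the optional Helm flags from the tips, one word
# per line. Set NO_RBAC=1 for clusters without Kubernetes RBAC, and
# PRESERVE_CLIENT_IP=1 to keep the client source IP (this disables TLS
# pass-through, as the tip notes).
optional_helm_flags() {
  if [ "${NO_RBAC:-0}" = "1" ]; then
    printf '%s\n' '--set' 'rbac.create=false'
  fi
  if [ "${PRESERVE_CLIENT_IP:-0}" = "1" ]; then
    printf '%s\n' '--set' 'controller.service.externalTrafficPolicy=Local'
  fi
}
```

You could then append $(optional_helm_flags) to the end of the helm install command. It's left unquoted on purpose so that each printed word becomes a separate argument, which is safe here because none of the values contain spaces.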

Update the following script with your own values. Replace <REGISTRY_URL> with the login server of your ACR, <STATIC_IP> with the static IP address for your ingress controller, and <DNS_LABEL> with a unique name to use as the FQDN prefix. The DNS_LABEL must be unique within the Azure region.

The script also reuses the image variables set in the import step, so run it in the same shell session.

# Add the ingress-nginx repository
helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

# Set variables for the ACR location used for pulling images, the static IP address, and the DNS name label
ACR_URL=<REGISTRY_URL>
STATIC_IP=<STATIC_IP>
DNS_LABEL=<DNS_LABEL>

# Use Helm to deploy an NGINX ingress controller
helm install nginx-ingress ingress-nginx/ingress-nginx \
    --version 4.0.13 \
    --namespace ingress-basic --create-namespace \
    --set controller.replicaCount=2 \
    --set controller.nodeSelector."kubernetes\.io/os"=linux \
    --set controller.image.registry=$ACR_URL \
    --set controller.image.image=$CONTROLLER_IMAGE \
    --set controller.image.tag=$CONTROLLER_TAG \
    --set controller.image.digest="" \
    --set controller.image.repository=$SOURCE_REGISTRY/$CONTROLLER_IMAGE \
    --set controller.admissionWebhooks.patch.nodeSelector."kubernetes\.io/os"=linux \
    --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-load-balancer-health-probe-request-path"=/healthz \
    --set controller.admissionWebhooks.patch.image.registry=$ACR_URL \
    --set controller.admissionWebhooks.patch.image.image=$PATCH_IMAGE \
    --set controller.admissionWebhooks.patch.image.tag=$PATCH_TAG \
    --set controller.admissionWebhooks.patch.image.digest="" \
    --set defaultBackend.nodeSelector."kubernetes\.io/os"=linux \
    --set defaultBackend.image.registry=$ACR_URL \
    --set defaultBackend.image.image=$DEFAULTBACKEND_IMAGE \
    --set defaultBackend.image.tag=$DEFAULTBACKEND_TAG \
    --set defaultBackend.image.repository=$SOURCE_REGISTRY/$DEFAULTBACKEND_IMAGE \
    --set defaultBackend.image.digest="" \
    --set controller.service.loadBalancerIP=$STATIC_IP \
    --set controller.service.annotations."service\.beta\.kubernetes\.io/azure-dns-label-name"=$DNS_LABEL
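Because the script mixes placeholders with variables from the earlier import step, a forgotten value can surface later as a confusing Helm or image-pull error. The following sketch checks the inputs first. require_vars is a hypothetical helper that takes variable names, not values, and fails if any of them is empty or still looks like a <PLACEHOLDER>.

```shell
# Sketch: fail fast if a named variable is unset, empty, or still holds a
# <PLACEHOLDER> value. Reports every problem before returning non-zero.
require_vars() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name-}"
    case $value in
      ''|'<'*'>')
        echo "set $name before running helm install (current value: '$value')" >&2
        missing=1 ;;
    esac
  done
  return "$missing"
}
```

For example: require_vars ACR_URL STATIC_IP DNS_LABEL SOURCE_REGISTRY CONTROLLER_IMAGE CONTROLLER_TAG PATCH_IMAGE PATCH_TAG DEFAULTBACKEND_IMAGE DEFAULTBACKEND_TAG && helm install ... runs the installation only when every value is filled in.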

When the Kubernetes load balancer service is created for the NGINX ingress controller, your static IP address is assigned, as shown in the following example output:

$ kubectl --namespace ingress-basic get services -o wide -w nginx-ingress-ingress-nginx-controller

NAME                                     TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE   SELECTOR
nginx-ingress-ingress-nginx-controller   LoadBalancer   10.0.74.133   EXTERNAL_IP   80:32486/TCP,443:30953/TCP   44s   app.kubernetes.io/component=controller,app.kubernetes.io/instance=nginx-ingress,app.kubernetes.io/name=ingress-nginx

No ingress rules have been created yet, so the NGINX ingress controller's default 404 page is displayed if you browse to the public IP address. Ingress rules are configured in the following steps.
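Instead of watching the service, you can compare its external IP with STATIC_IP directly. The following sketch queries the service name shown in the example output above with a kubectl JSONPath expression; check_external_ip is a hypothetical helper name.

```shell
# Sketch: confirm that the controller's load balancer got the static IP.
# The service name comes from the nginx-ingress release name.
check_external_ip() {
  actual=$(kubectl get service nginx-ingress-ingress-nginx-controller \
    --namespace ingress-basic \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}') || return 1
  if [ "$actual" = "$STATIC_IP" ]; then
    echo "OK: service uses $STATIC_IP"
  else
    echo "service EXTERNAL-IP is '${actual:-<pending>}', expected '$STATIC_IP'" >&2
    return 1
  fi
}
```

An empty result usually means the load balancer is still being provisioned, so retry after a short wait before you investigate the IP address or resource group permissions.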

You can verify that the DNS name label has been applied by querying the FQDN on the public IP address as follows:

az network public-ip list --resource-group MC_myResourceGroup_myAKSCluster_chinaeast2 --query "[?name=='myAKSPublicIP'].[dnsSettings.fqdn]" -o tsv

The ingress controller is now accessible through the IP address or the FQDN.

Install cert-manager

The NGINX ingress controller supports TLS termination. There are several ways to retrieve and configure certificates for HTTPS. This article demonstrates using cert-manager, which provides automatic Let's Encrypt certificate generation and management functionality.

Note

This article uses the staging environment for Let's Encrypt. In production deployments, use letsencrypt-prod and https://acme-v02.api.letsencrypt.org/directory in the resource definitions and when installing the Helm chart.
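The switch that the note above describes comes down to two text substitutions: the issuer name and the ACME directory URL. Once you have created cluster-issuer.yaml and hello-world-ingress.yaml later in this article, a sketch such as the following can derive production copies of them. to_prod is a hypothetical helper; review its output before applying it.

```shell
# Sketch: read a staging manifest on stdin and write the production variant
# on stdout. Renames letsencrypt-staging to letsencrypt-prod everywhere
# (issuer name, account key secret, ingress annotation) and points the
# ACME server at the production directory.
to_prod() {
  sed -e 's/letsencrypt-staging/letsencrypt-prod/g' \
      -e 's#acme-staging-v02#acme-v02#g'
}
```

For example, to_prod < cluster-issuer.yaml > cluster-issuer-prod.yaml produces an issuer named letsencrypt-prod that uses https://acme-v02.api.letsencrypt.org/directory.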

To install the cert-manager controller in a Kubernetes RBAC-enabled cluster, use the following helm install command:

# Label the ingress-basic namespace, where cert-manager is installed, to disable cert-manager resource validation
kubectl label namespace ingress-basic cert-manager.io/disable-validation=true

# Add the Jetstack Helm repository
helm repo add jetstack https://charts.jetstack.io

# Update your local Helm chart repository cache
helm repo update

# Install the cert-manager Helm chart
helm install cert-manager jetstack/cert-manager \
    --namespace ingress-basic \
    --version $CERT_MANAGER_TAG \
    --set installCRDs=true \
    --set nodeSelector."kubernetes\.io/os"=linux \
    --set image.repository=$ACR_URL/$CERT_MANAGER_IMAGE_CONTROLLER \
    --set image.tag=$CERT_MANAGER_TAG \
    --set webhook.image.repository=$ACR_URL/$CERT_MANAGER_IMAGE_WEBHOOK \
    --set webhook.image.tag=$CERT_MANAGER_TAG \
    --set cainjector.image.repository=$ACR_URL/$CERT_MANAGER_IMAGE_CAINJECTOR \
    --set cainjector.image.tag=$CERT_MANAGER_TAG
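If you apply the cluster issuer immediately after installing the chart, the request can be rejected while the cert-manager webhook is still starting. The following sketch waits until every Deployment in the namespace reports Available; wait_for_deployments is a hypothetical helper name, and the 180-second default timeout is an arbitrary choice.

```shell
# Sketch: block until all Deployments in namespace $1 (default ingress-basic),
# including the cert-manager webhook, report the Available condition.
wait_for_deployments() {
  kubectl wait --for=condition=Available deployment --all \
    --namespace "${1:-ingress-basic}" --timeout="${2:-180s}"
}
```

Run wait_for_deployments after helm install returns; kubectl wait exits with a non-zero status if the timeout expires first.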

For more information on cert-manager configuration, see the cert-manager project.

Create a CA cluster issuer

Before certificates can be issued, cert-manager requires an Issuer or ClusterIssuer resource. These Kubernetes resources are identical in functionality; however, an Issuer works in a single namespace, and a ClusterIssuer works across all namespaces. For more information, see the cert-manager issuer documentation.

Create a file named cluster-issuer.yaml using the following example manifest. Update the email address with a valid address from your organization:

apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: user@contoso.com
    privateKeySecretRef:
      name: letsencrypt-staging
    solvers:
    - http01:
        ingress:
          class: nginx
          podTemplate:
            spec:
              nodeSelector:
                "kubernetes.io/os": linux

To create the issuer, use the kubectl apply command.

kubectl apply -f cluster-issuer.yaml --namespace ingress-basic

The output should be similar to this example:

clusterissuer.cert-manager.io/letsencrypt-staging created
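A created ClusterIssuer isn't necessarily usable yet: for an ACME issuer, cert-manager sets the Ready condition once it has registered an account with the Let's Encrypt server. The following sketch reads that condition with a kubectl JSONPath filter; issuer_ready is a hypothetical helper name.

```shell
# Sketch: succeed only if the ClusterIssuer ($1, default letsencrypt-staging)
# reports Ready=True.
issuer_ready() {
  status=$(kubectl get clusterissuer "${1:-letsencrypt-staging}" \
    -o jsonpath='{.status.conditions[?(@.type=="Ready")].status}') || return 1
  [ "$status" = "True" ]
}
```

If issuer_ready fails, kubectl describe clusterissuer letsencrypt-staging shows the condition message, which typically points at problems such as an invalid email address.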

Run demo applications

An ingress controller and a certificate management solution are now configured. To see the ingress controller in action, run two demo applications in your AKS cluster. In this example, you use kubectl apply to deploy two instances of a simple Hello world application.

Create an aks-helloworld-one.yaml file and copy in the following example YAML:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld-one
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld-one
  template:
    metadata:
      labels:
        app: aks-helloworld-one
    spec:
      containers:
      - name: aks-helloworld-one
        image: mcr.azk8s.cn/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "Welcome to Azure Kubernetes Service (AKS)"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld-one
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: aks-helloworld-one

Create an aks-helloworld-two.yaml file and copy in the following example YAML:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: aks-helloworld-two
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aks-helloworld-two
  template:
    metadata:
      labels:
        app: aks-helloworld-two
    spec:
      containers:
      - name: aks-helloworld-two
        image: mcr.azk8s.cn/azuredocs/aks-helloworld:v1
        ports:
        - containerPort: 80
        env:
        - name: TITLE
          value: "AKS Ingress Demo"
---
apiVersion: v1
kind: Service
metadata:
  name: aks-helloworld-two
spec:
  type: ClusterIP
  ports:
  - port: 80
  selector:
    app: aks-helloworld-two

Run the two demo applications using kubectl apply:

kubectl apply -f aks-helloworld-one.yaml --namespace ingress-basic

kubectl apply -f aks-helloworld-two.yaml --namespace ingress-basic
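kubectl apply returns as soon as the objects are accepted, not when the pods are running. The following sketch waits for both demo Deployments to finish rolling out before you create the ingress route; wait_for_demo_apps is a hypothetical helper name, and the 120-second timeout is an arbitrary choice.

```shell
# Sketch: wait for each demo Deployment to finish its rollout, stopping at
# the first one that fails or times out.
wait_for_demo_apps() {
  for app in aks-helloworld-one aks-helloworld-two; do
    kubectl rollout status "deployment/$app" --namespace ingress-basic \
      --timeout=120s || return 1
  done
}
```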

Create an ingress route

Both applications are now running on your Kubernetes cluster. However, they're configured with a service of type ClusterIP, so they aren't accessible from the internet. To make them publicly available, create a Kubernetes ingress resource. The ingress resource configures the rules that route traffic to one of the two applications.

In the following example, traffic to the address https://demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn/ (and to the /hello-world-one path) is routed to the service named aks-helloworld-one. Traffic to the address https://demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn/hello-world-two is routed to the aks-helloworld-two service. Update the tls hosts entry and the rules host to the DNS name you created in a previous step.

Create a file named hello-world-ingress.yaml and copy in the following example YAML.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-staging
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn
    secretName: tls-secret
  rules:
  - host: demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn
    http:
      paths:
      - path: /hello-world-one(/|$)(.*)
        pathType: Prefix
        backend:
          service:
            name: aks-helloworld-one
            port:
              number: 80
      - path: /hello-world-two(/|$)(.*)
        pathType: Prefix
        backend:
          service:
            name: aks-helloworld-two
            port:
              number: 80
      - path: /(.*)
        pathType: Prefix
        backend:
          service:
            name: aks-helloworld-one
            port:
              number: 80
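The path rules in this manifest are regular expressions (use-regex: "true"), and rewrite-target: /$2 replaces the request path with the second capture group, which strips the /hello-world-one or /hello-world-two prefix before the request reaches the service. The following sketch applies the same expressions with sed to show which service receives a given path and what path it sees. It's an illustration under our reading of the annotations, not how NGINX evaluates them; route is a hypothetical helper, and the specific rules are checked before the catch-all.

```shell
# Illustration only: map a request path ($1) to "<service> <rewritten path>"
# using the ingress regular expressions and the /$2 rewrite.
route() {
  path=$1
  for rule in 'aks-helloworld-two ^/hello-world-two(/|$)(.*)' \
              'aks-helloworld-one ^/hello-world-one(/|$)(.*)'; do
    svc=${rule%% *}   # text before the first space
    re=${rule#* }     # text after the first space
    if printf '%s\n' "$path" | grep -Eq "$re"; then
      printf '%s /%s\n' "$svc" "$(printf '%s\n' "$path" | sed -E "s@$re@\\2@")"
      return 0
    fi
  done
  # The catch-all /(.*) has no second capture group, so /$2 becomes "/".
  printf '%s /\n' aks-helloworld-one
}
```

For example, route /hello-world-two/static/app.css prints aks-helloworld-two /static/app.css, and route /hello-world-two prints aks-helloworld-two /. A consequence of the shared annotation is that any path matched only by the catch-all rule is rewritten to / as well.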

Create the ingress resource using the kubectl apply command.

kubectl apply -f hello-world-ingress.yaml --namespace ingress-basic

The output should be similar to this example:

ingress.networking.k8s.io/hello-world-ingress created

Verify certificate object

Next, a certificate resource is needed. The certificate resource defines the desired X.509 certificate. For more information, see cert-manager certificates.

Cert-manager has likely automatically created a certificate object for you using ingress-shim, which is automatically deployed with cert-manager since v0.2.2. For more information, see the ingress-shim documentation.

To verify that the certificate was created successfully, use the kubectl describe certificate tls-secret --namespace ingress-basic command.

If the certificate was issued, you will see output similar to the following:

Type    Reason          Age   From          Message
----    ------          ----  ----          -------
Normal  CreateOrder     11m   cert-manager  Created new ACME order, attempting validation...
Normal  DomainVerified  10m   cert-manager  Domain "demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn" verified with "http-01" validation
Normal  IssueCert       10m   cert-manager  Issuing certificate...
Normal  CertObtained    10m   cert-manager  Obtained certificate from ACME server
Normal  CertIssued      10m   cert-manager  Certificate issued successfully
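Issuance takes a little while because the HTTP-01 challenge has to complete first. Rather than re-running kubectl describe, you can block until cert-manager marks the certificate Ready. In the following sketch, wait_for_certificate is a hypothetical helper name and the 300-second default timeout is an arbitrary choice.

```shell
# Sketch: wait for the Certificate ($1, default tls-secret) in ingress-basic
# to report the Ready condition.
wait_for_certificate() {
  kubectl wait --for=condition=Ready "certificate/${1:-tls-secret}" \
    --namespace ingress-basic --timeout="${2:-300s}"
}
```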

Test the ingress configuration

Open a web browser to the FQDN of your Kubernetes ingress controller, such as https://demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn.

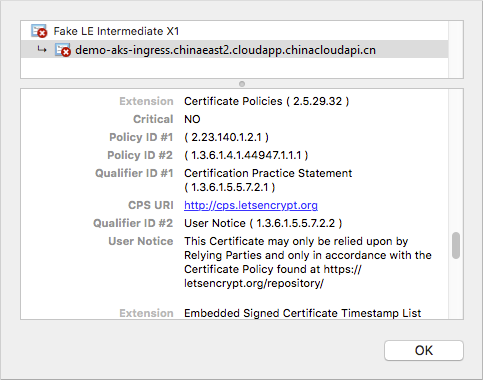

As these examples use letsencrypt-staging, the issued TLS/SSL certificate is not trusted by the browser. Accept the warning prompt to continue to your application. The certificate information shows this Fake LE Intermediate X1 certificate is issued by Let's Encrypt. This fake certificate indicates cert-manager processed the request correctly and received a certificate from the provider:

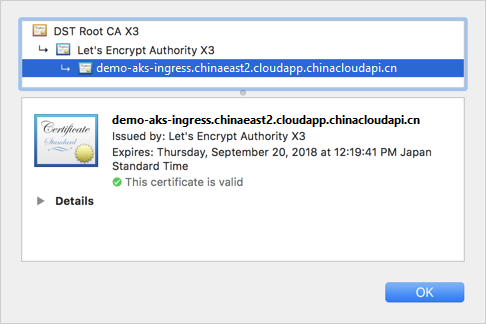

When you change Let's Encrypt to use prod rather than staging, a trusted certificate issued by Let's Encrypt is used.

The demo application is shown in the web browser.

Now add the /hello-world-two path to the FQDN, such as https://demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn/hello-world-two. The second demo application with the custom title is shown.
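You can run the same checks from the command line. The following sketch fetches a URL and looks for an expected string in the response. check_route is a hypothetical helper, and it assumes the demo page contains the TITLE value set in each Deployment. The -k (--insecure) option is needed only because the staging certificate isn't trusted; drop it once you switch to letsencrypt-prod.

```shell
# Sketch: fetch URL $1 and succeed if the body contains the text $2.
check_route() {
  url=$1
  expected=$2
  if curl -sk "$url" | grep -qF "$expected"; then
    echo "OK: $url"
  else
    echo "FAIL: '$expected' not found at $url" >&2
    return 1
  fi
}
```

For example, with FQDN=demo-aks-ingress.chinaeast2.cloudapp.chinacloudapi.cn, run check_route "https://$FQDN/" "Welcome to Azure Kubernetes Service (AKS)" and check_route "https://$FQDN/hello-world-two" "AKS Ingress Demo".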

Clean up resources

This article used Helm to install the ingress components and cert-manager, and kubectl to create the cluster issuer, sample apps, and ingress route. When you deploy a Helm chart, a number of Kubernetes resources are created. These resources include pods, deployments, and services. To clean up these resources, you can either delete the entire sample namespace or the individual resources.

Delete the sample namespace and all resources

To delete the entire sample namespace, use the kubectl delete command and specify your namespace name. All the resources in the namespace are deleted.

kubectl delete namespace ingress-basic

Delete resources individually

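Alternatively, a more granular approach is to delete the individual resources created. First, remove the ingress route and the certificate resources. In this article, ingress-shim created the certificate from the ingress rather than from a manifest file, so delete the ingress first so that the certificate isn't re-created, then delete the certificate by name and the cluster issuer:

kubectl delete -f hello-world-ingress.yaml --namespace ingress-basic

kubectl delete certificate tls-secret --namespace ingress-basic --ignore-not-found

kubectl delete -f cluster-issuer.yaml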

Now list the Helm releases with the helm list command. Look for charts named nginx-ingress and cert-manager as shown in the following example output:

$ helm list --all-namespaces

NAME            NAMESPACE       REVISION   UPDATED                         STATUS     CHART                  APP VERSION
nginx-ingress   ingress-basic   1          2020-01-11 14:51:03.454165006   deployed   nginx-ingress-1.28.2   0.26.2
cert-manager    ingress-basic   1          2020-01-06 21:19:03.866212286   deployed   cert-manager-v0.13.0   v0.13.0

Uninstall the releases with the helm uninstall command. The following example uninstalls the NGINX ingress deployment and certificate manager deployments.

$ helm uninstall nginx-ingress cert-manager -n ingress-basic

release "nginx-ingress" deleted

release "cert-manager" deleted

Next, remove the two sample applications:

kubectl delete -f aks-helloworld-one.yaml --namespace ingress-basic

kubectl delete -f aks-helloworld-two.yaml --namespace ingress-basic

Delete the namespace itself. Use the kubectl delete command and specify your namespace name:

kubectl delete namespace ingress-basic

Finally, remove the static public IP address created for the ingress controller. Provide your MC_ cluster resource group name obtained in the first step of this article, such as MC_myResourceGroup_myAKSCluster_chinaeast2:

az network public-ip delete --resource-group MC_myResourceGroup_myAKSCluster_chinaeast2 --name myAKSPublicIP
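If you clean up often, the individual steps can be collected into one script that prints them for review by default. In the following sketch, run_step, cleanup_individually, DRY_RUN, and NODE_RG are hypothetical names; NODE_RG must hold your MC_ node resource group name, and the function stops if it's unset. Deleting the namespace also removes the namespaced resources that remain in it, such as the ingress route and its certificate.

```shell
# Hypothetical helper: print the command when DRY_RUN is 1 (the default),
# otherwise run it.
run_step() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Sketch of the individual cleanup steps from this section, in order.
cleanup_individually() {
  run_step kubectl delete -f cluster-issuer.yaml --ignore-not-found
  run_step helm uninstall nginx-ingress cert-manager --namespace ingress-basic
  run_step kubectl delete -f aks-helloworld-one.yaml --namespace ingress-basic --ignore-not-found
  run_step kubectl delete -f aks-helloworld-two.yaml --namespace ingress-basic --ignore-not-found
  run_step kubectl delete namespace ingress-basic --ignore-not-found
  run_step az network public-ip delete --resource-group "${NODE_RG:?set NODE_RG first}" --name myAKSPublicIP
}
```

Run cleanup_individually once to review the commands, then set DRY_RUN=0 and run it again to delete the resources.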

Next steps

This article included some components that are external to AKS, such as Helm, cert-manager, and the NGINX ingress controller. To learn more about these components, see their project pages.

You can also:

- Create a basic ingress controller with external network connectivity

- Enable the HTTP application routing add-on

- Create an ingress controller that uses an internal, private network and IP address

- Create an ingress controller that uses your own TLS certificates

- Create an ingress controller with a dynamic public IP and configure Let's Encrypt to automatically generate TLS certificates