Azure Container Apps run in the context of an environment, with its own virtual network (VNet). This VNet creates a secure boundary around your Azure Container Apps environment.

Ingress configuration in Azure Container Apps determines how external network traffic reaches your applications. Configuring ingress enables you to control traffic routing, improve application performance, and implement advanced deployment strategies. This article guides you through the ingress configuration options available in Azure Container Apps and helps you choose the right settings for your workloads.

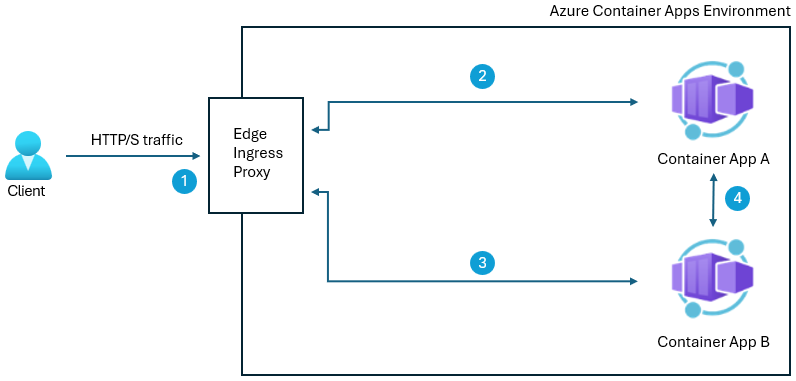

An Azure Container Apps environment includes a scalable edge ingress proxy responsible for the following features:

Transport Layer Security (TLS) termination, which decrypts TLS traffic as it enters the environment. This operation shifts the work of decryption away from your container apps, reducing their resource consumption and improving their performance.

Session affinity, which helps you build stateful applications that require a consistent connection to the same container app replica.

The following diagram shows an example environment with the ingress proxy routing traffic to two container apps.

By default, Azure Container Apps creates your Container Apps environment with the default ingress mode. If your application needs to operate at high scale, you can set the ingress mode to premium.

Default ingress mode

With the default ingress mode, your Container Apps environment has two ingress proxy instances. Container Apps creates more instances as needed, up to a maximum of 10. Each instance is allocated up to 1 vCPU core and 2 GB of memory.

In the default ingress mode, no billing is applied for scaling the ingress proxy or for the vCPU cores and allocated memory.

Premium ingress mode

The default ingress mode can become a bottleneck in high-scale environments. As an alternative, the premium ingress mode includes advanced features that help your ingress keep up with traffic demands.

These features include:

- Workload profile support: Ingress proxy instances run in a workload profile of your choice. You have control over the number of vCPU cores and memory resources available to the proxy.

- Configurable scale range rules: Proxy scale range rules are configurable so you can make sure you have as many instances as your application requires.

- Advanced settings: You can configure advanced settings such as idle timeouts for ingress proxy instances.

To decide between default and premium ingress mode, evaluate the resources that the proxy instances consume relative to the requests they serve. Start by looking at the vCPU cores and memory consumed by the proxy instances. If your environment stays at the maximum ingress proxy count (10 in default ingress mode) for an extended period, consider switching to premium ingress mode. For more information, see metrics.
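The check described above can be sketched in a few lines of Python. This is only an illustration: the function name `sustained_at_max`, the sample list, and the `window` parameter are hypothetical, and the article doesn't define how long an "extended period" is, so the window length is an assumption you would tune to your own metrics sampling interval.

```python
from typing import Sequence

# Ceiling for default ingress mode, as stated in this article.
DEFAULT_MAX_PROXY_INSTANCES = 10


def sustained_at_max(
    instance_counts: Sequence[int],
    window: int,
    max_instances: int = DEFAULT_MAX_PROXY_INSTANCES,
) -> bool:
    """Return True if `window` consecutive samples sit at the maximum.

    `instance_counts` is a hypothetical series of ingress proxy instance
    counts sampled at a fixed interval (for example, exported from metrics).
    `window` is an assumed stand-in for "an extended period".
    """
    if window <= 0:
        raise ValueError("window must be a positive number of samples")

    run = 0  # length of the current streak of samples at the maximum
    for count in instance_counts:
        run = run + 1 if count >= max_instances else 0
        if run >= window:
            return True
    return False


# Three consecutive samples at the ceiling: worth evaluating premium mode.
print(sustained_at_max([2, 10, 10, 10, 4], window=3))
# The streak is interrupted, so no sustained saturation is reported.
print(sustained_at_max([10, 10, 3, 10, 10], window=3))
```

A streak-based check is used here instead of an average so that a short burst to the maximum doesn't trigger the recommendation by itself.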

Workload profile

You can select a workload profile to provide dedicated nodes for your ingress proxy instances that scale to your needs. The D4-D32 workload profile types are recommended. Each ingress proxy instance is allocated 1 vCPU core. For more information, see Workload profiles in Azure Container Apps.

The workload profile:

- Must not be the Consumption workload profile.

- Must not be shared with container apps or jobs.

- Must not be deleted while you're using it for your ingress proxy.

Running your ingress proxy in a workload profile is billed at the rate for that workload profile. For more information, see billing.

You can also configure the number of workload profile nodes. A workload profile is a scalable pool of nodes. Each node contains multiple ingress proxy instances. The number of nodes scales based on vCPU and memory utilization. The minimum number of node instances is two.

Scaling

The ingress proxy scales independently from your container app scaling.

When your ingress proxy reaches high vCPU or memory utilization, Container Apps creates more ingress proxy instances. When utilization decreases, the extra ingress proxy instances are removed.

Your minimum and maximum ingress proxy instances are determined as follows:

- Minimum: There is a minimum of two node instances.

- Maximum: Your maximum node instances multiplied by your vCPU cores. For example, if you have 50 maximum node instances and 4 vCPU cores, you have a maximum of 200 ingress proxy instances.

The ingress proxy instances are spread among the available workload profile nodes.
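As a quick way to check the arithmetic for your own configuration, the maximum can be computed as shown in the following sketch. The function and parameter names are hypothetical; the formula and the minimum of two node instances come from this article.

```python
# Minimum number of workload profile node instances, per this article.
MIN_NODE_INSTANCES = 2


def max_ingress_proxy_instances(max_node_instances: int, vcpu_cores_per_node: int) -> int:
    """Maximum proxy instances = maximum node instances x vCPU cores per node.

    Each ingress proxy instance is allocated 1 vCPU core, so a node can host
    as many proxy instances as it has vCPU cores.
    """
    if max_node_instances < MIN_NODE_INSTANCES:
        raise ValueError(f"maximum node instances must be at least {MIN_NODE_INSTANCES}")
    if vcpu_cores_per_node < 1:
        raise ValueError("a node needs at least 1 vCPU core")
    return max_node_instances * vcpu_cores_per_node


# The example from the text: 50 maximum node instances with 4 vCPU cores each.
print(max_ingress_proxy_instances(50, 4))  # 200
```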

Advanced ingress settings

With the premium ingress mode enabled, you can also configure the following settings:

| Setting | Description | Minimum | Maximum | Default |
|---|---|---|---|---|
| Termination grace period | The amount of time (in seconds) for the container app to finish processing requests before they're canceled during shutdown. | 0 | 3,600 | 500 |
| Idle request timeout | Idle request timeouts in minutes. | 4 | 30 | 4 |
| Request header count | Increase this setting if you have clients that send a large number of request headers. | 1 | N/A | 100 |

You should only increase these settings as needed, because raising them could lead to your ingress proxy instances consuming more resources for longer periods of time, becoming more vulnerable to resource exhaustion and denial of service attacks.
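If you manage these values in scripts or templates, it can help to check them against the documented ranges before you apply them. The following Python sketch does that under stated assumptions: the setting keys (`termination_grace_period_seconds`, `idle_request_timeout_minutes`, `request_header_count`) and the `validate_settings` helper are hypothetical names chosen for illustration, not Azure API property names. Only the minimum, maximum, and default values are taken from the table.

```python
from typing import NamedTuple, Optional


class Limit(NamedTuple):
    minimum: int
    maximum: Optional[int]  # None means the table lists no maximum (N/A)
    default: int


# Ranges and defaults copied from the table; the key names are hypothetical.
LIMITS = {
    "termination_grace_period_seconds": Limit(0, 3600, 500),
    "idle_request_timeout_minutes": Limit(4, 30, 4),
    "request_header_count": Limit(1, None, 100),
}


def validate_settings(settings: dict) -> dict:
    """Fill in defaults and reject values outside the documented ranges."""
    unknown = set(settings) - set(LIMITS)
    if unknown:
        raise KeyError(f"unknown settings: {sorted(unknown)}")

    resolved = {}
    for name, limit in LIMITS.items():
        value = settings.get(name, limit.default)
        too_low = value < limit.minimum
        too_high = limit.maximum is not None and value > limit.maximum
        if too_low or too_high:
            raise ValueError(f"{name}={value} is outside the allowed range")
        resolved[name] = value
    return resolved


# Only the idle timeout is raised; the other settings keep their defaults.
print(validate_settings({"idle_request_timeout_minutes": 10}))
```

Leaving unspecified settings at their defaults matches the guidance to raise a value only when a workload needs it.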

Configure ingress

You can configure the ingress for your environment after you create it.

1. Browse to your environment in the Azure portal.

2. Select Networking.

3. Select Ingress settings.

4. Configure your ingress settings as follows.

   | Setting | Value |
   |---|---|
   | Ingress Mode | Select Default or Premium. |
   | Workload profile size | Select a size from D4 to D32. |
   | Minimum node instances | Enter the minimum workload profile node instances. |
   | Maximum node instances | Enter the maximum workload profile node instances. |
   | Termination grace period | Enter the termination grace period in minutes. |
   | Idle request timeout | Enter the idle request timeout in minutes. |
   | Request header count | Enter the request header count. |

5. Select Apply.