
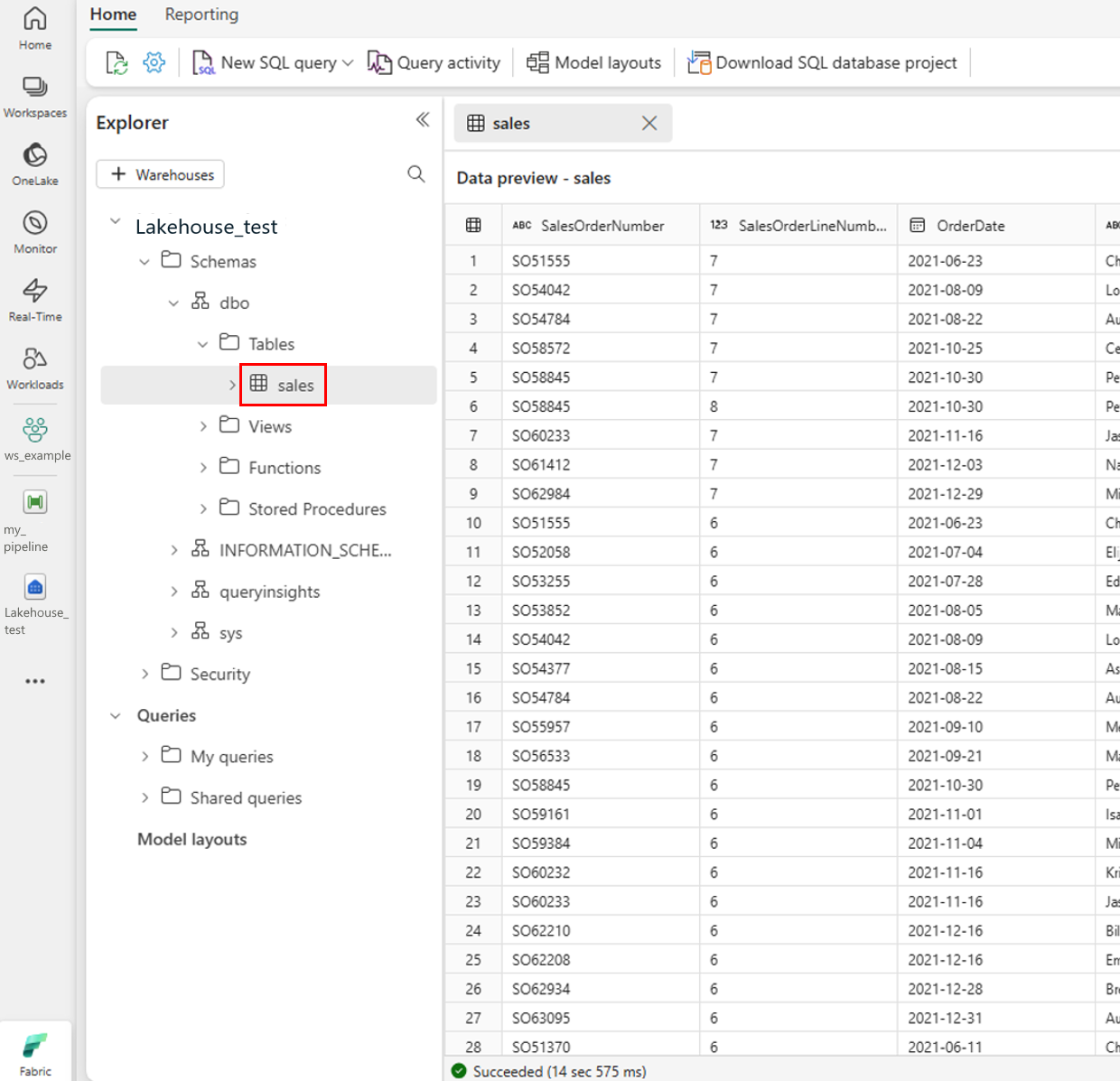

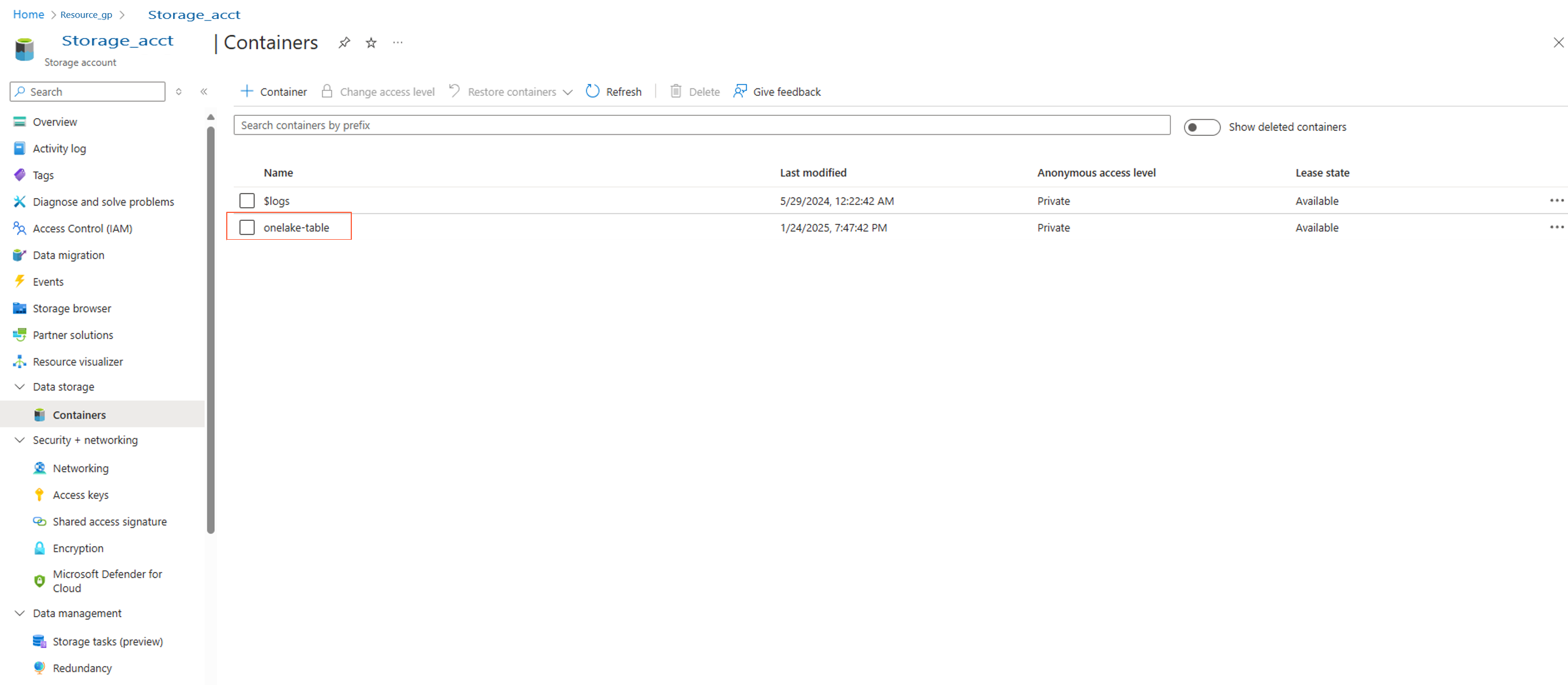

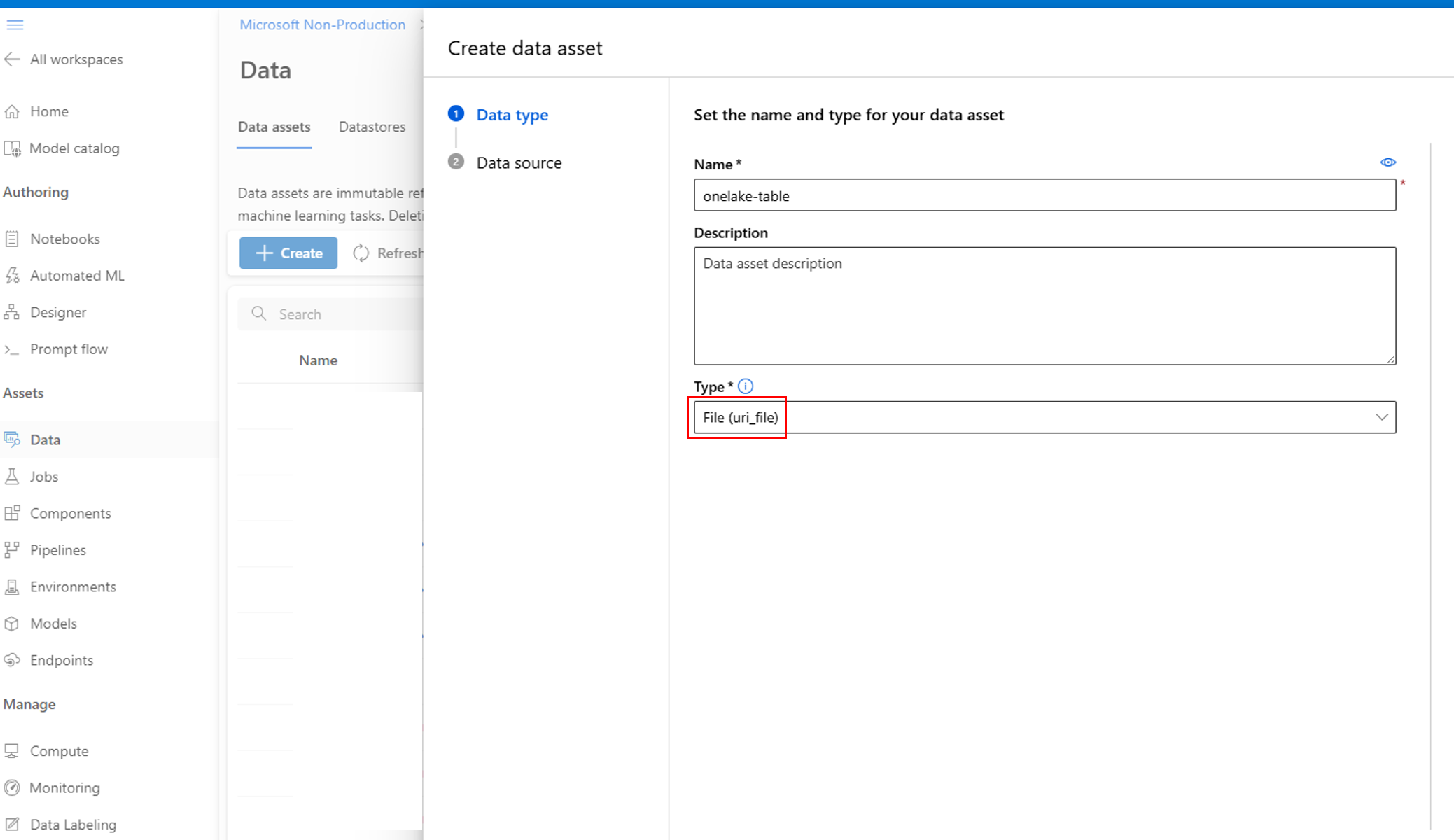

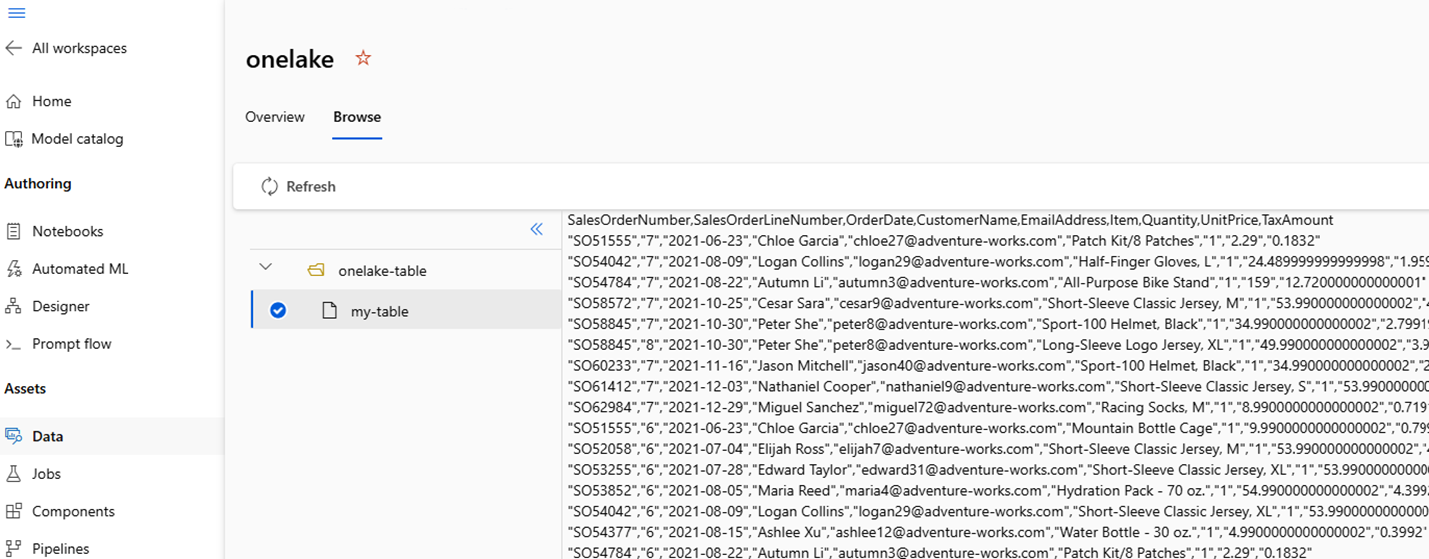

Existing solutions can link an Azure Machine Learning resource to OneLake, extract the data, and create a datastore in Azure Machine Learning. However, those solutions only support OneLake data of type "Files." They don't work for OneLake table-type data, as shown in the following screenshot:

Additionally, some customers might prefer to build the link through the UI instead of with code. In this article, you learn how to link OneLake tables to Azure Machine Learning studio resources through the UI.

Prerequisites

- An Azure subscription. If you don't have an Azure subscription, create a free account before you begin.

- An Azure Machine Learning workspace. Visit Create workspace resources.

- An Azure Data Lake Storage (ADLS) storage account. Visit Create an Azure Data Lake Storage (ADLS) storage account.

- Familiarity with assigning roles in an Azure storage account.

Solution structure

This solution has three parts. First, create and set up a Data Lake Storage account in the Azure portal. Next, use a Fabric data pipeline to copy the data from OneLake to Azure Data Lake Storage. Finally, bring the data into Azure Machine Learning by creating a datastore that links to the Data Lake Storage container. The following image shows the overall flow of the solution:

Set up the Data Lake storage account in the Azure portal

Assign the Storage Blob Data Contributor and Storage File Data Privileged Contributor roles to the user identity or service principal. These roles grant the permissions needed for account key access and for creating containers. To assign the appropriate roles and configure the storage account:

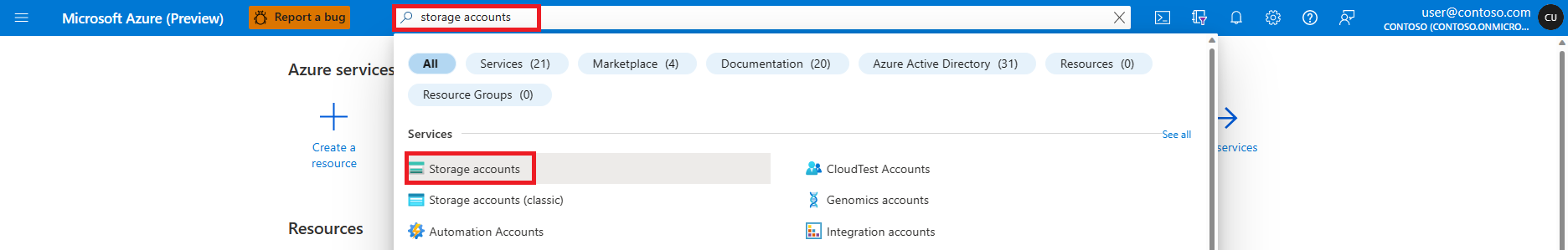

Open the Azure portal.

Select the Storage accounts service.

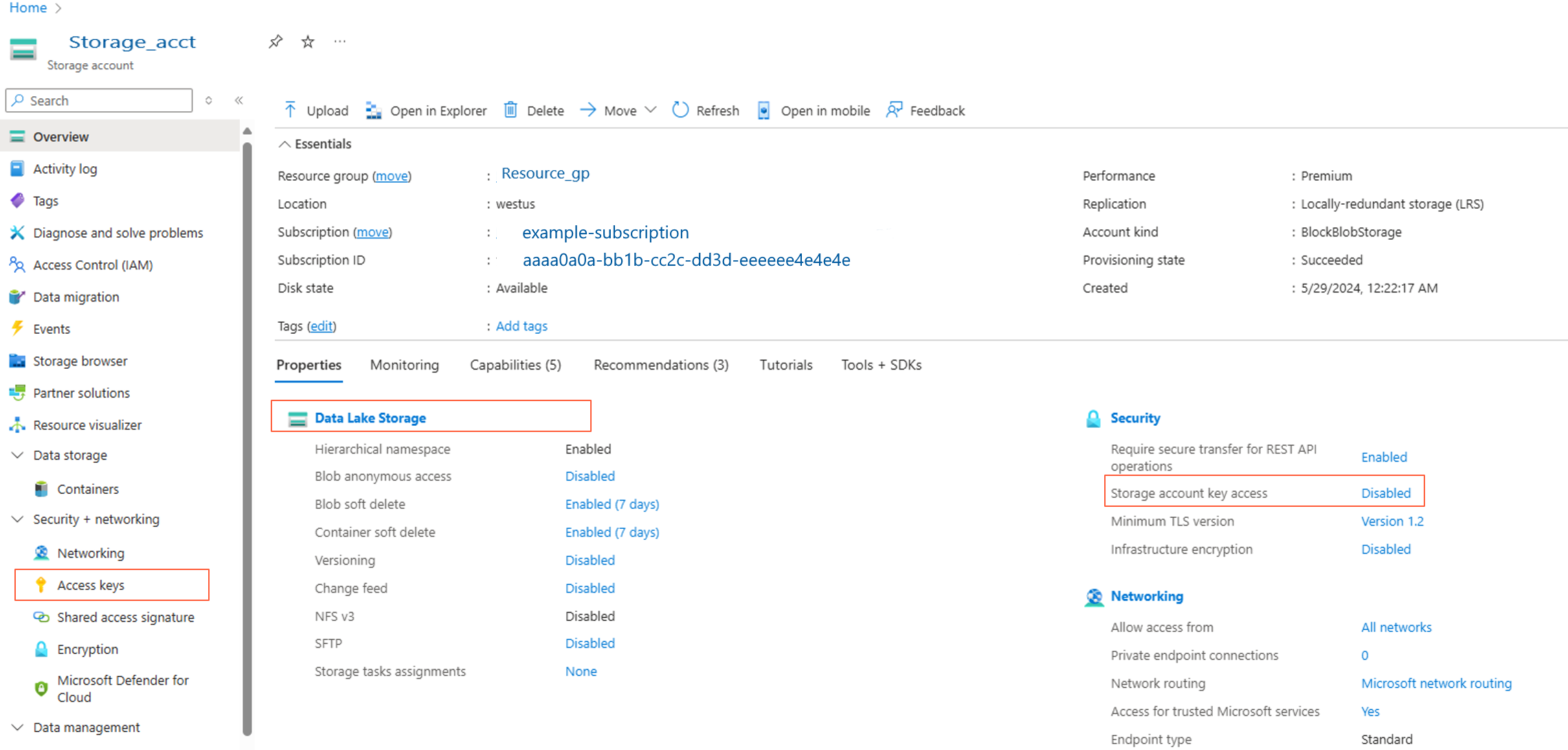

On the Storage accounts page, select the Data Lake Storage account you created in the prerequisite step. A page showing the storage account properties opens.

Select Access keys from the left panel and record the key value. You need this value in a later step.

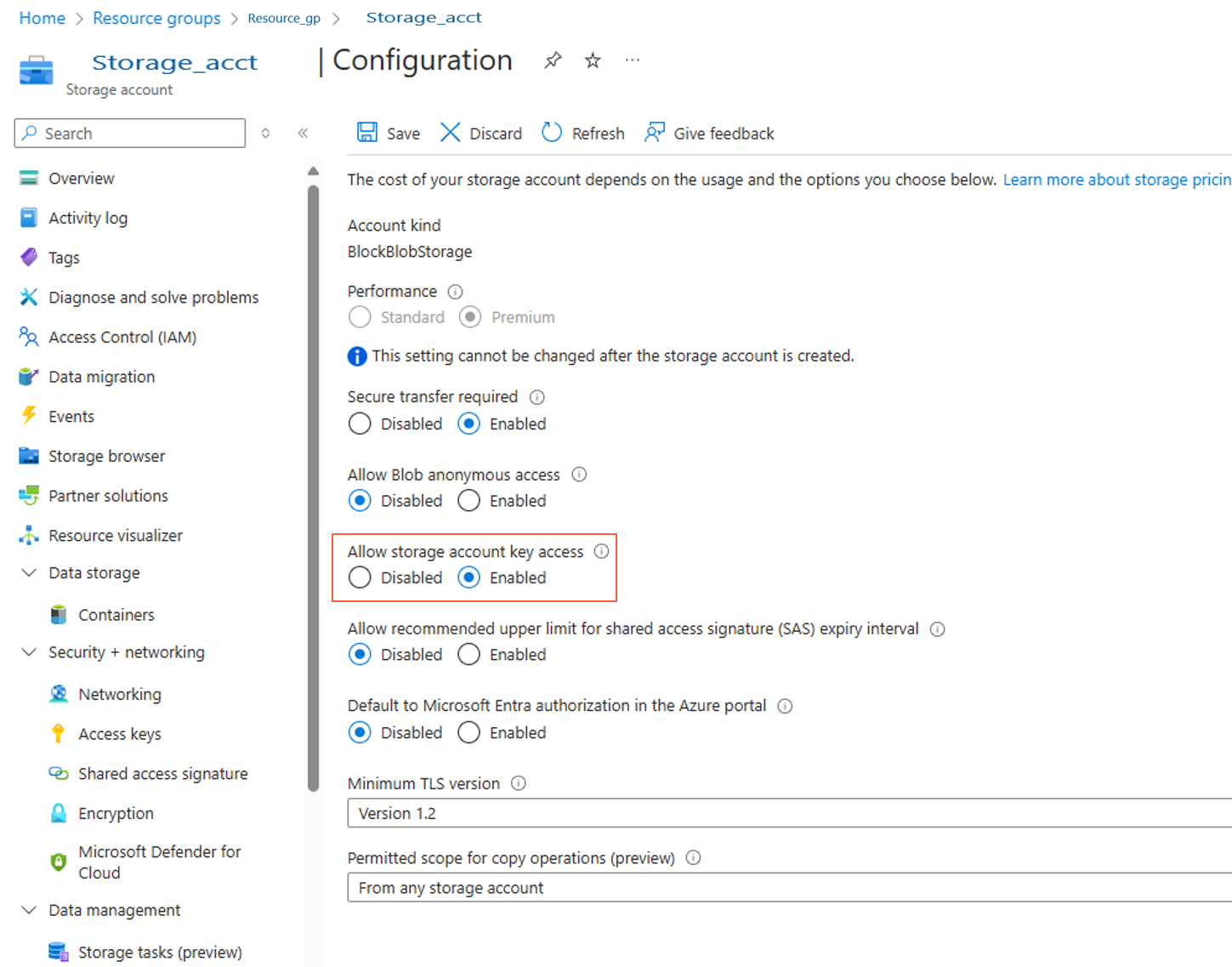

Enable Allow storage account key access, as shown in the following screenshot:

Select Access Control (IAM) from the left panel, and assign the Storage Blob Data Contributor and Storage File Data Privileged Contributor roles to the user identity or service principal.
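If you'd rather script the role assignments than click through the portal, the Azure CLI `az role assignment create` command takes `--assignee`, `--role`, and `--scope` arguments. The following Python sketch only builds those command strings for the two roles named above, scoped to the storage account's resource ID; it doesn't run them. The subscription ID, resource group, account name, and assignee are hypothetical placeholders.

```python
import shlex

# The two built-in roles this article assigns on the storage account.
ROLES = (
    "Storage Blob Data Contributor",
    "Storage File Data Privileged Contributor",
)


def storage_account_scope(subscription_id: str, resource_group: str, account: str) -> str:
    """Return the Azure Resource Manager ID of a storage account (the role-assignment scope)."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )


def role_assignment_commands(assignee: str, scope: str) -> list[str]:
    """Build one shell-quoted `az role assignment create` command per role (not executed)."""
    return [
        shlex.join([
            "az", "role", "assignment", "create",
            "--assignee", assignee,
            "--role", role,
            "--scope", scope,
        ])
        for role in ROLES
    ]


if __name__ == "__main__":
    # Hypothetical placeholder values; replace them with your own.
    scope = storage_account_scope(
        "00000000-0000-0000-0000-000000000000", "my-resource-group", "mydatalakeaccount"
    )
    for cmd in role_assignment_commands("<service-principal-app-id>", scope):
        print(cmd)
```

Scoping the assignment to the storage account, rather than to the resource group or subscription, keeps the grant as narrow as this scenario needs.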

Create a container in the storage account. Name it onelake-table.
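Blob container names must follow Azure Storage naming rules: 3 to 63 characters, only lowercase letters, digits, and hyphens, starting with a letter or digit, and with every hyphen placed between two letters or digits (no consecutive or trailing hyphens). The name `onelake-table` satisfies these rules. If you script the setup, a pre-check like the following hypothetical helper catches an invalid name before the service rejects it; it's a sketch of those rules, not an official validator.

```python
import re

# One or more lowercase alphanumeric runs joined by single hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")


def is_valid_container_name(name: str) -> bool:
    """Return True if `name` meets the blob container naming rules described above."""
    return 3 <= len(name) <= 63 and _CONTAINER_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("onelake-table", "OneLake-Table", "one--lake", "onelake-"):
        print(candidate, is_valid_container_name(candidate))
```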

Use a Fabric data pipeline to copy data to an Azure Data Lake Storage account

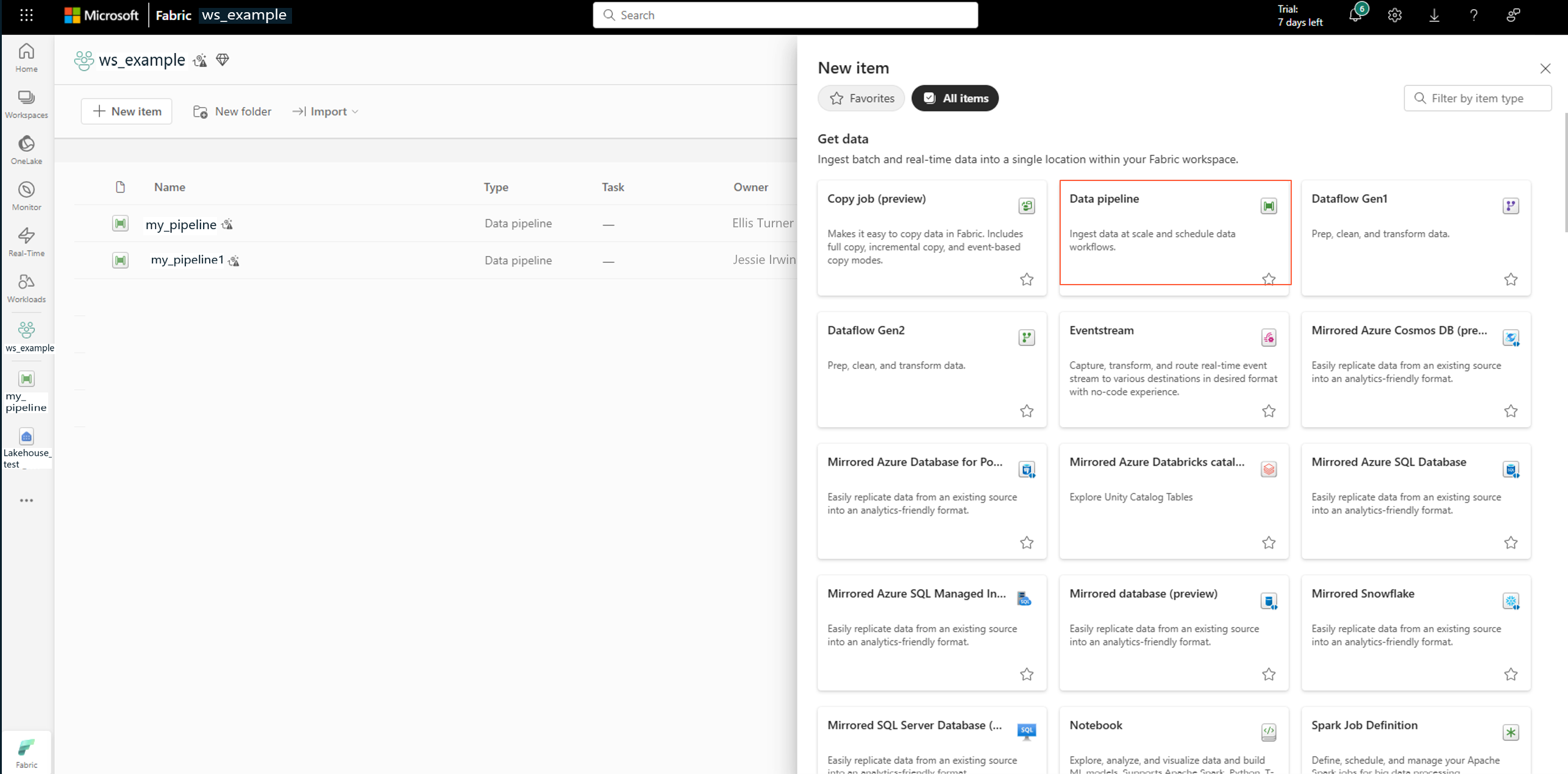

In the Fabric portal, select Data pipeline on the New item page.

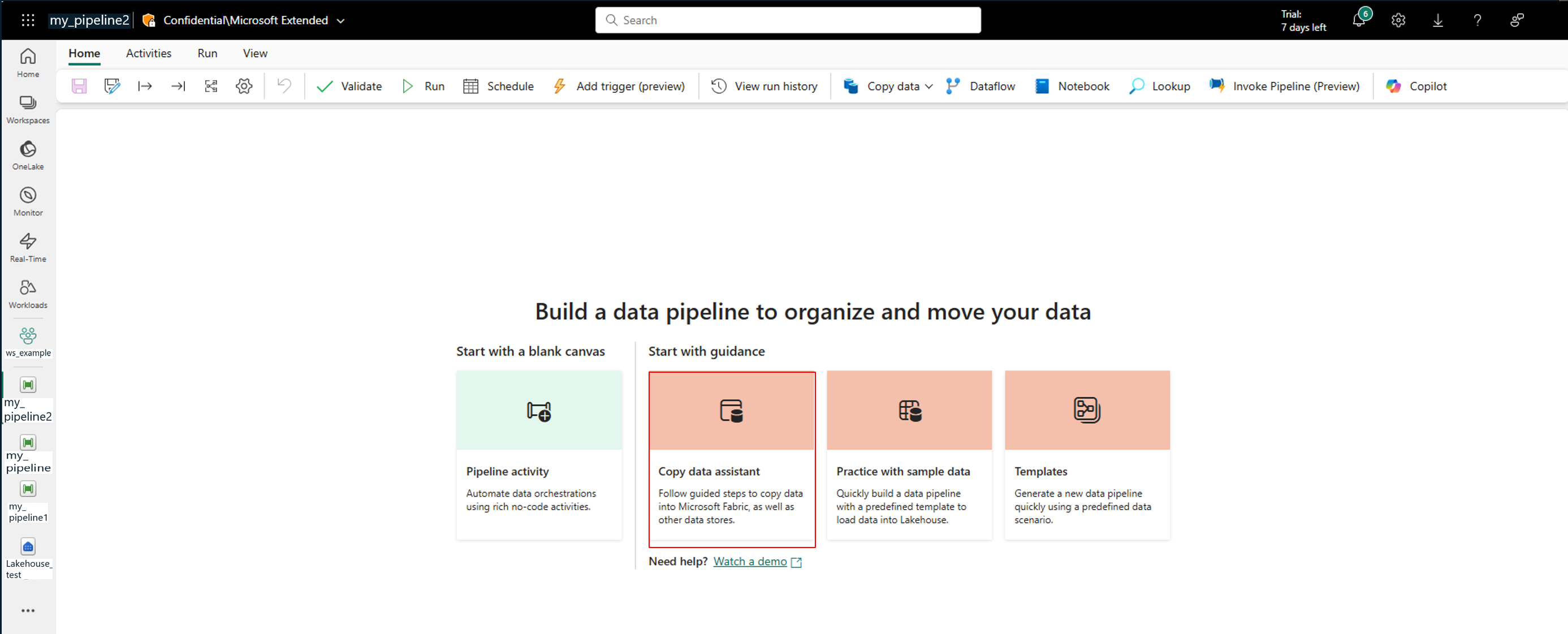

Select Copy data assistant.

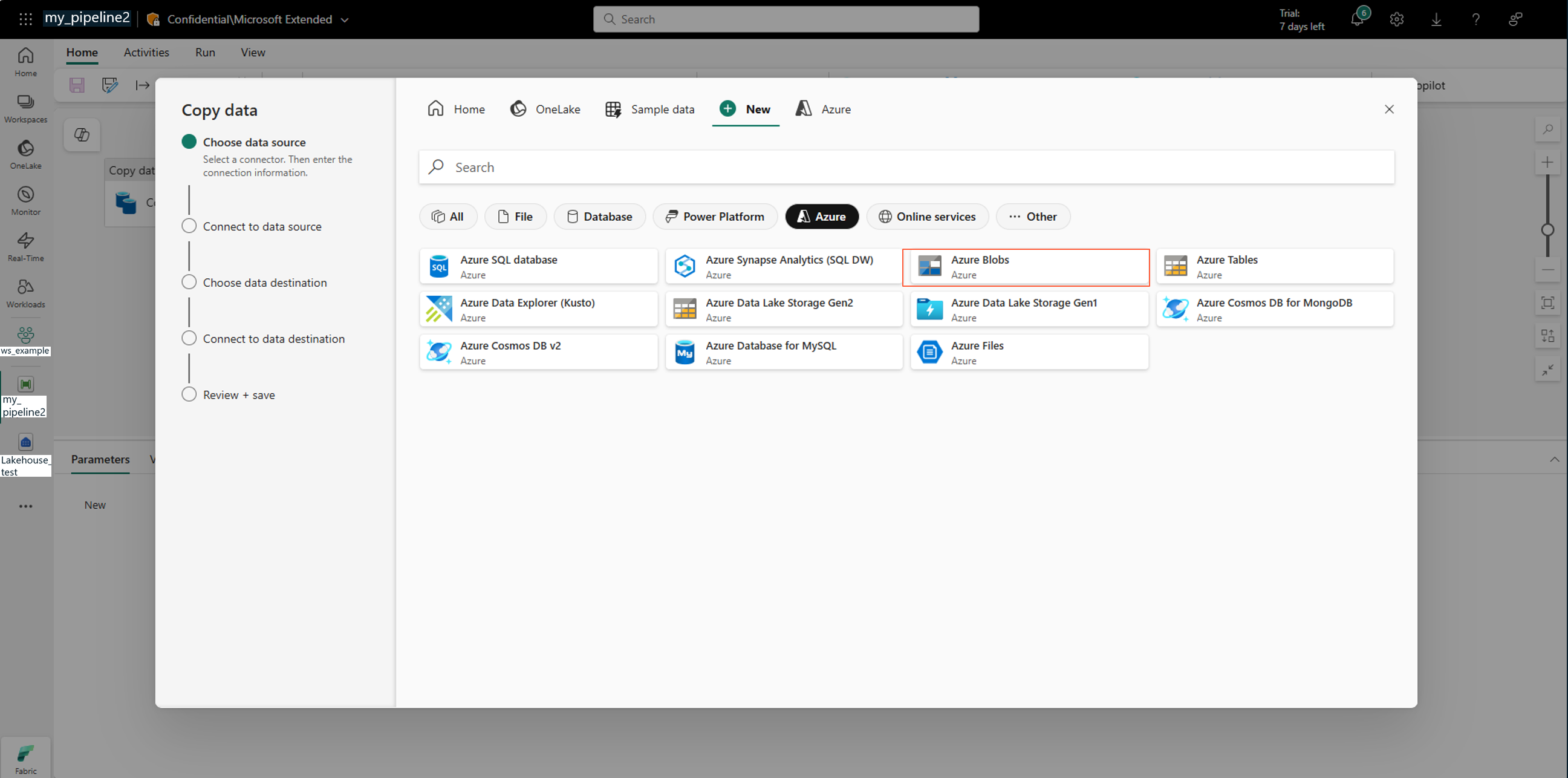

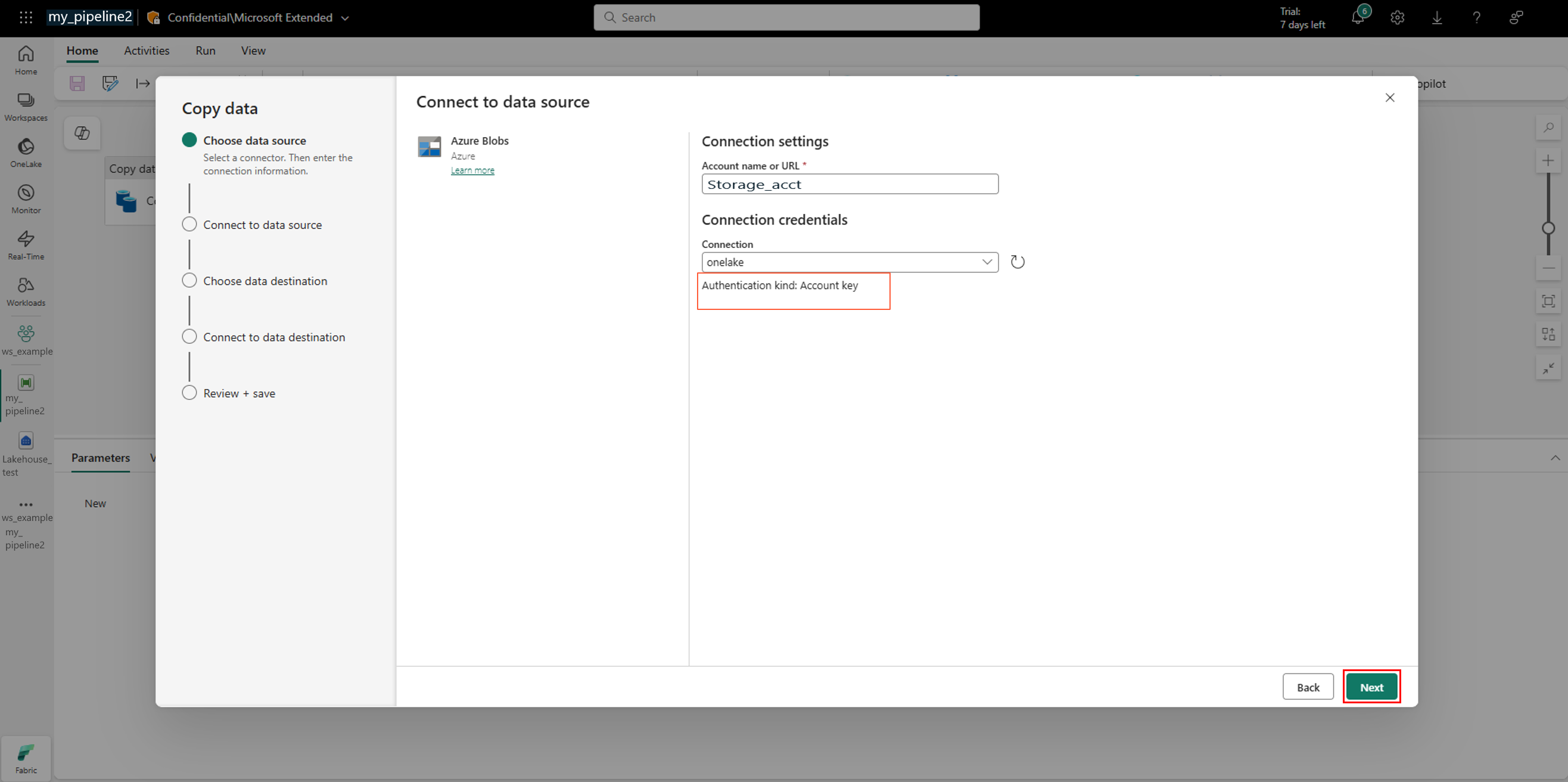

In Copy data assistant, select Azure Blobs:

To create a connection to the Azure Data Lake Storage account, set Authentication kind to Account key, and then select Next:

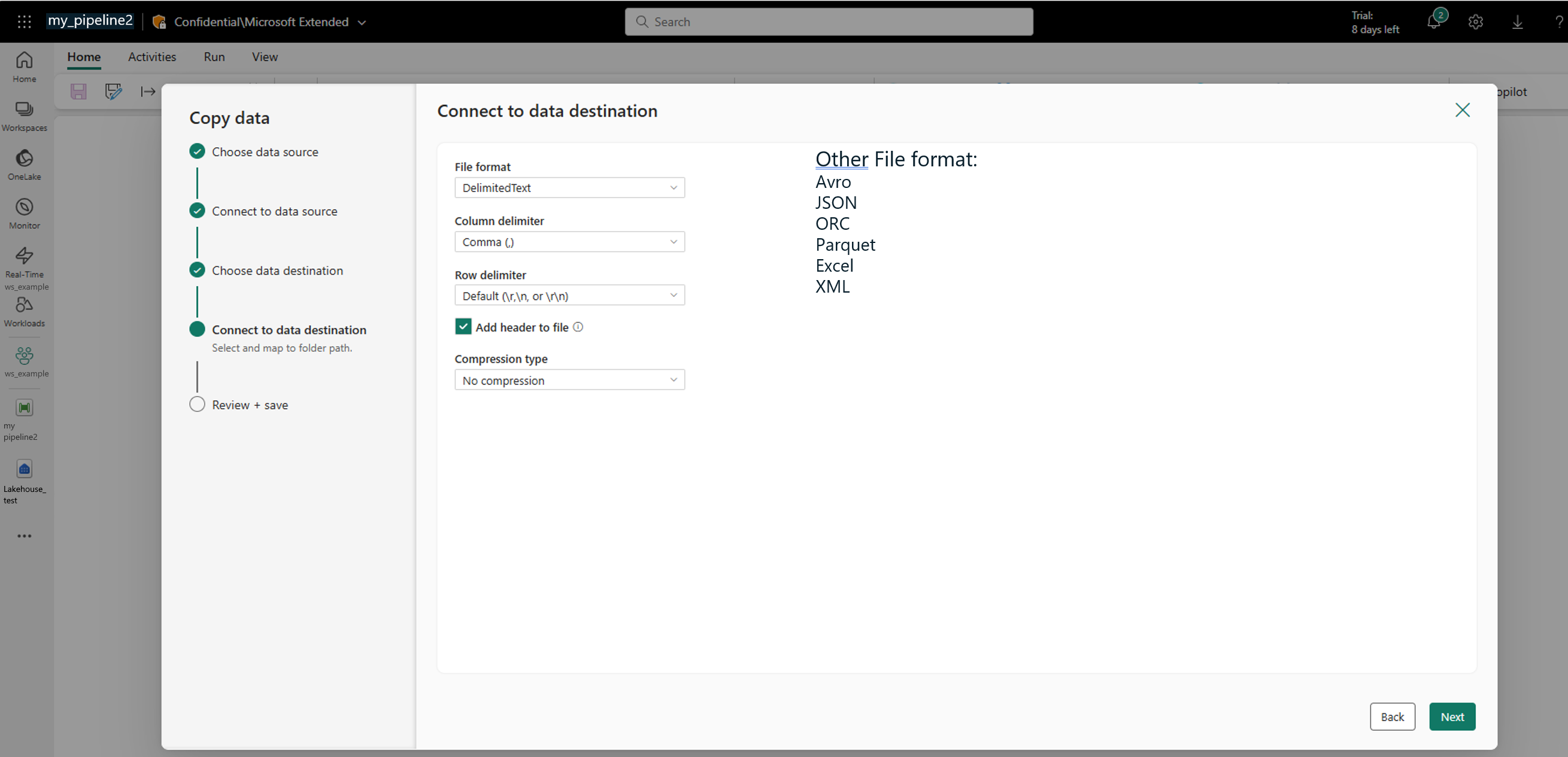

Select the data destination, and select Next:

Connect to the data destination, and select Next:

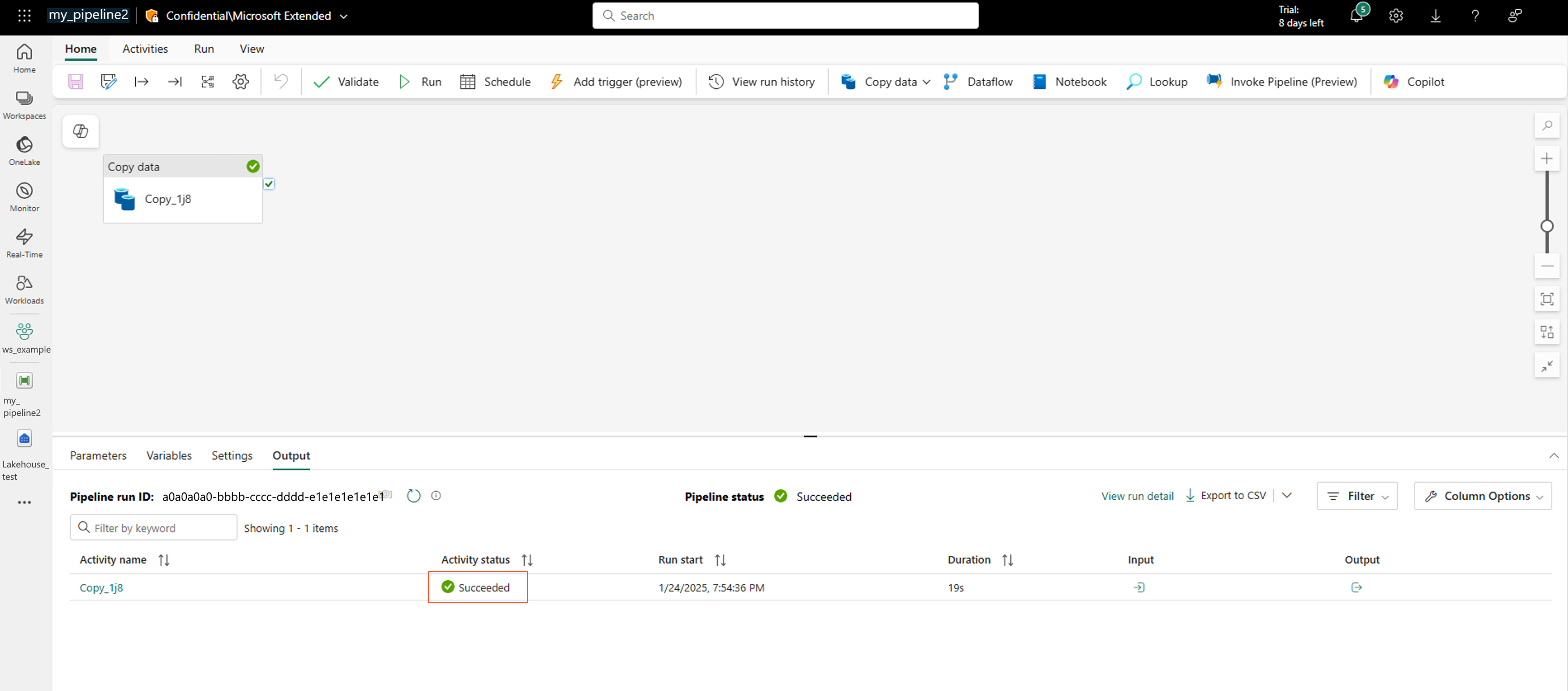

That step automatically starts the data copy job:

The copy job might take a while. When it finishes, continue to the next step.

Check that the data copy job finished successfully:

Create a datastore in Azure Machine Learning that links to the Azure Data Lake Storage container

Now that your data is in the Azure Data Lake Storage account, you can create an Azure Machine Learning datastore.

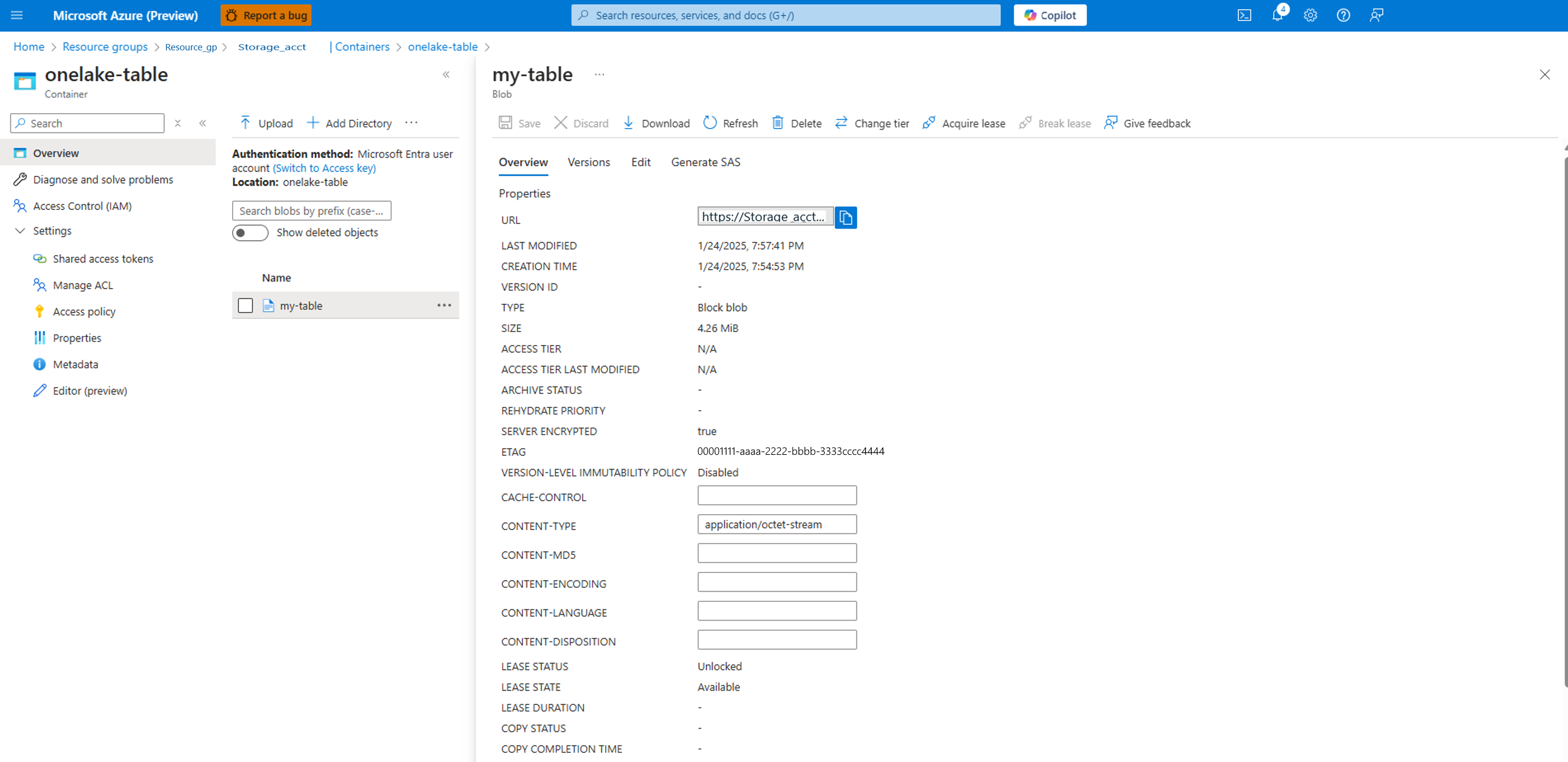

In the Azure storage account, confirm that the container (shown on the left) holds the copied data (shown on the right):

In Azure Machine Learning studio, create a data asset and select the File (uri_file) type:

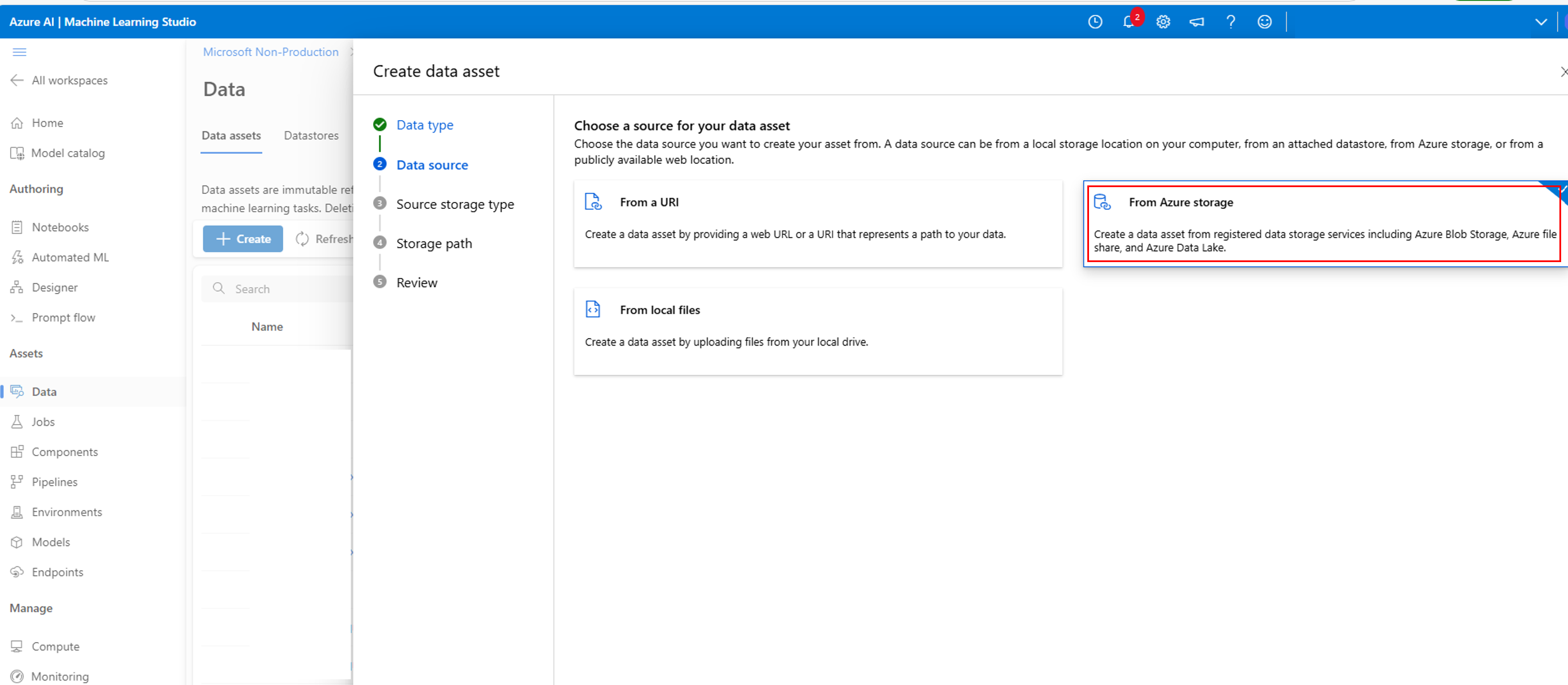

Select From Azure storage:

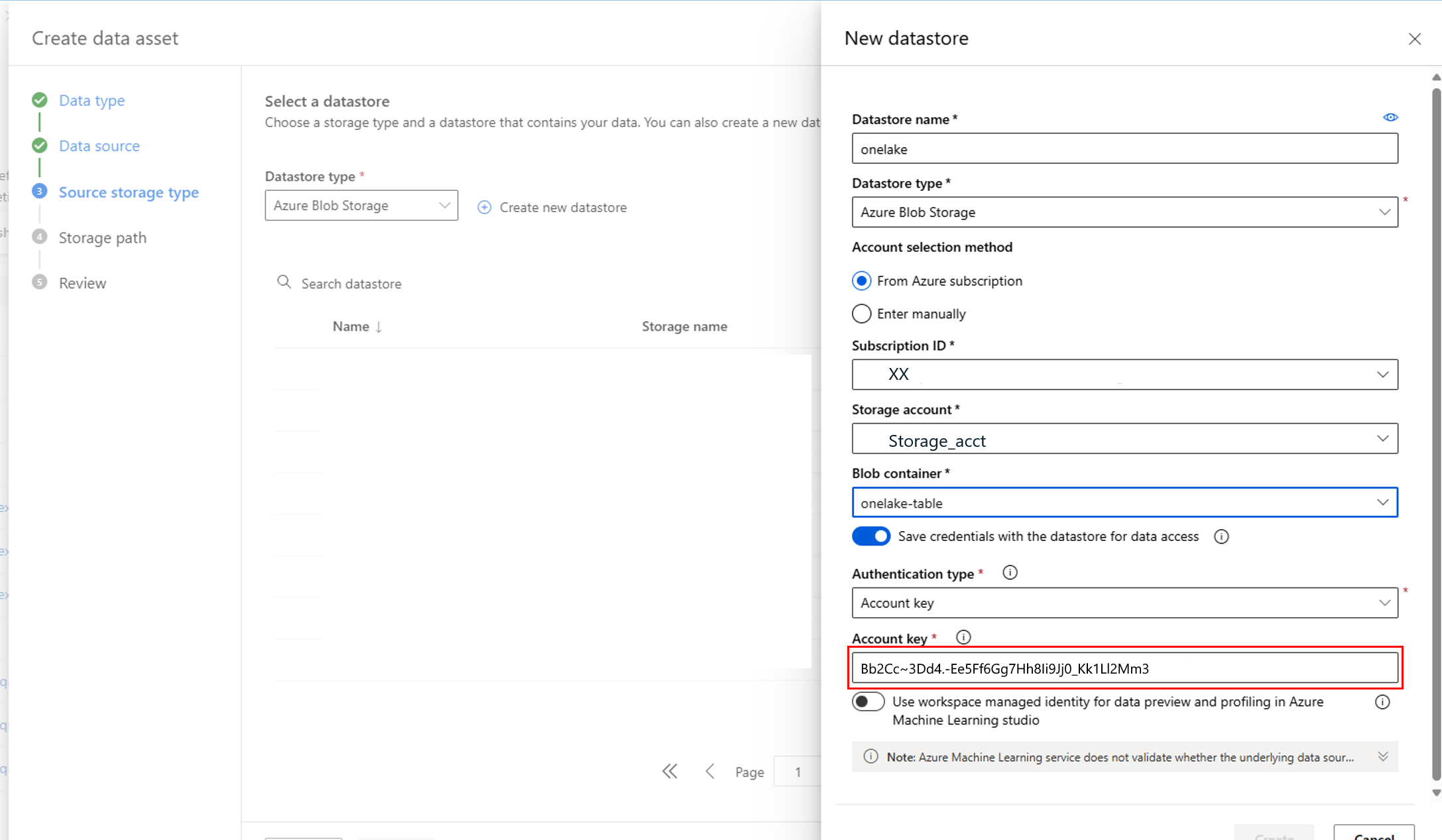

Using the account key value you recorded from the storage account's Access keys page (the same key you used to create the Copy data assistant connection), create a New datastore:

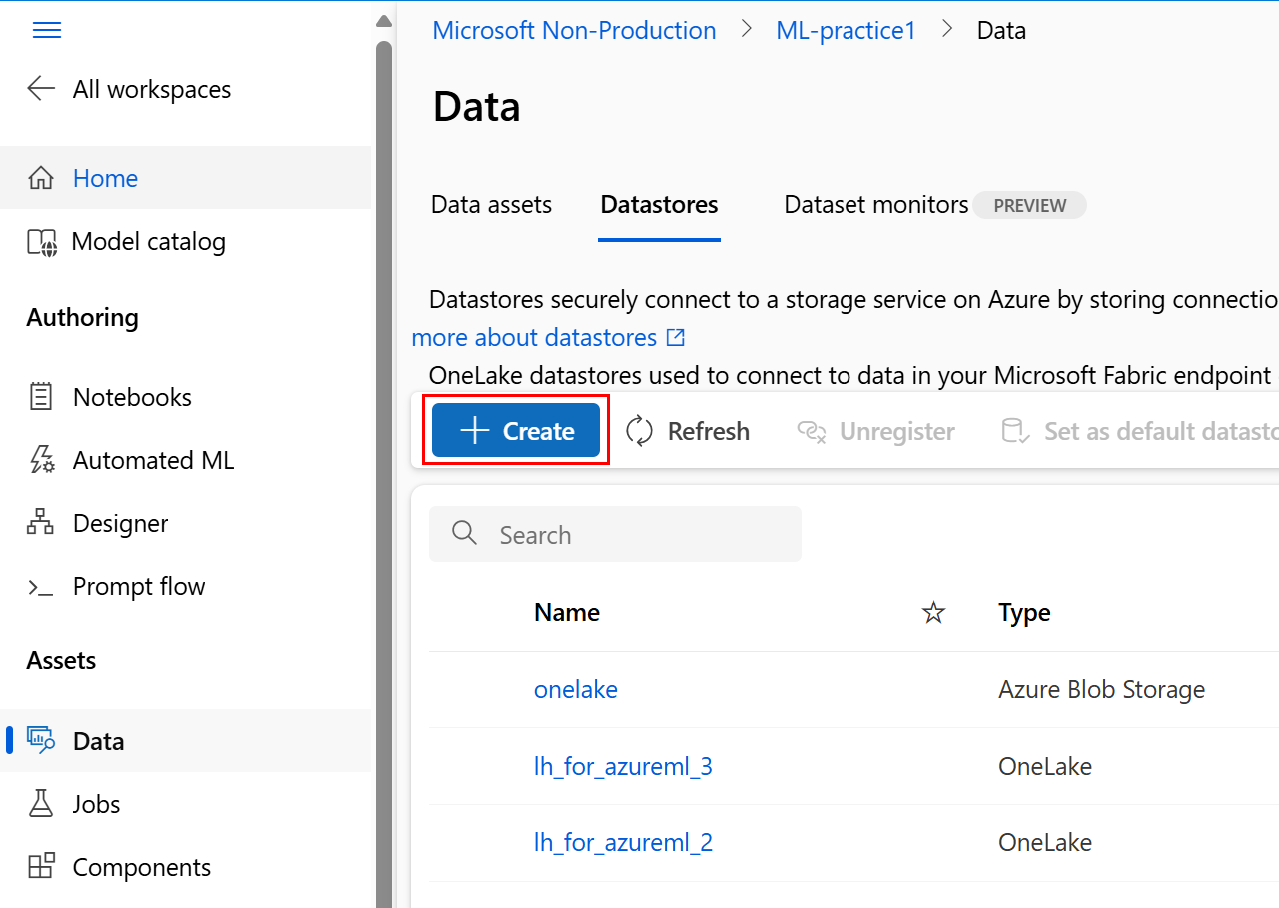

You can also create a datastore directly in Azure Machine Learning studio:

You can review details of the datastore you created:

Review the data in the datastore

Now that you've successfully created the datastore in Azure Machine Learning, you can use its data in your machine learning workloads.
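To reference the copied data from code, Azure Machine Learning accepts a datastore URI of the form `azureml://datastores/<datastore_name>/paths/<path>`, and it also accepts a direct ADLS Gen2 URI of the form `abfss://<container>@<account>.dfs.core.windows.net/<path>`. The following Python sketch only assembles those two strings; the datastore name, account name, and file path in the example are hypothetical, because the actual file names depend on how your copy job wrote the table data.

```python
def datastore_uri(datastore_name: str, path: str) -> str:
    """Build an azureml:// URI that points at a path inside a registered datastore."""
    return f"azureml://datastores/{datastore_name}/paths/{path.lstrip('/')}"


def abfss_uri(account: str, container: str, path: str) -> str:
    """Build the equivalent direct ADLS Gen2 URI for the same file."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical names: use your own datastore, account, and copied file path.
    print(datastore_uri("onelake_table_ds", "sales/part-0000.parquet"))
    print(abfss_uri("mydatalakeaccount", "onelake-table", "sales/part-0000.parquet"))
```

With the `azure-ai-ml` SDK, a URI like the first one can be passed as the `path` of an `Input(type=AssetTypes.URI_FILE, ...)` for a job, which matches the File (uri_file) data asset type selected in the studio.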