
APPLIES TO: Azure Machine Learning SDK v1 for Python

Important

This article provides information on using the Azure Machine Learning SDK v1. SDK v1 is deprecated as of March 31, 2025. Support for it will end on June 30, 2026. You can install and use SDK v1 until that date. Your existing workflows using SDK v1 will continue to operate after the end-of-support date. However, they could be exposed to security risks or breaking changes in the event of architectural changes in the product.

We recommend that you transition to the SDK v2 before June 30, 2026. For more information on SDK v2, see What is Azure Machine Learning CLI and Python SDK v2? and the SDK v2 reference.

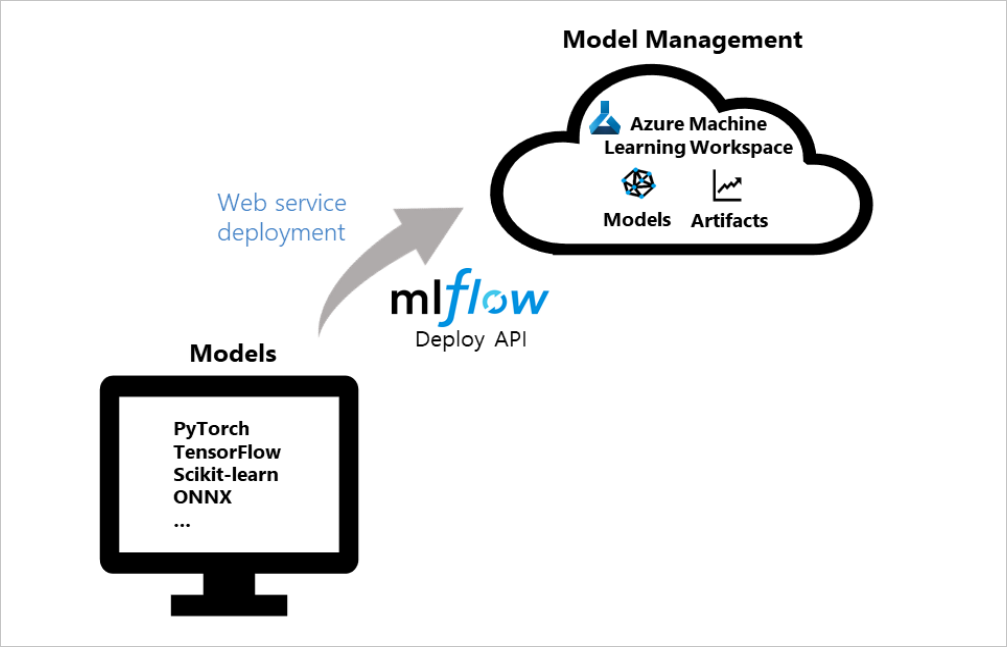

MLflow is an open-source library for managing the life cycle of machine learning experiments. MLflow integration with Azure Machine Learning lets you extend the management capabilities beyond model training to the deployment phase of production models. In this article, you deploy an MLflow model as an Azure web service and apply Azure Machine Learning model management and data drift detection features to your production models.

The following diagram shows how the MLflow deploy API integrates with Azure Machine Learning to deploy models. You can deploy models built with popular frameworks like PyTorch, TensorFlow, or scikit-learn as Azure web services and manage those services in your workspace.

Tip

This article supports data scientists and developers who want to deploy an MLflow model to an Azure Machine Learning web service endpoint. If you're an admin who wants to monitor resource usage and events from Azure Machine Learning, such as quotas, completed training runs, or completed model deployments, see Monitoring Azure Machine Learning.

Prerequisites

- Train a machine learning model. If you don't have a trained model, download the notebook that best fits your compute scenario from the Azure Machine Learning Notebooks repository on GitHub. Follow the instructions in the notebook and run the cells to prepare the model.

- Set up the MLflow Tracking URI to connect to Azure Machine Learning.

- Install the azureml-mlflow package. This package automatically loads the azureml-core definitions in the Azure Machine Learning Python SDK, which provides the connectivity for MLflow to access your workspace.

- Confirm that you have the required access permissions for MLflow operations with your workspace.
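Before you deploy, you can confirm that MLflow actually points at your workspace. The following minimal sketch assumes that, with SDK v1, the tracking URI returned by `Workspace.get_mlflow_tracking_uri()` uses the `azureml://` scheme. The helper `check_azureml_tracking_uri` is hypothetical and isn't part of either SDK.

```python
from urllib.parse import urlparse


def check_azureml_tracking_uri(uri: str) -> str:
    """Return the URI unchanged if it uses the azureml:// scheme, otherwise raise.

    Hypothetical helper: assumes SDK v1 workspace tracking URIs start with azureml://.
    """
    scheme = urlparse(uri).scheme
    if scheme != "azureml":
        raise ValueError(f"Expected an azureml:// tracking URI, got scheme {scheme!r}")
    return uri


# Typical notebook usage (requires azureml-core, azureml-mlflow, and a workspace config.json):
# import mlflow
# from azureml.core import Workspace
# ws = Workspace.from_config()
# mlflow.set_tracking_uri(check_azureml_tracking_uri(ws.get_mlflow_tracking_uri()))
```

A local URI such as `http://localhost:5000` or `file:///tmp/mlruns` fails this check. That failure is the point: a deployment client created from it would not reach Azure Machine Learning.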

Deployment options

Azure Machine Learning offers the following deployment configuration options:

- Azure Container Instances: Suitable for quick dev-test deployment.

- Azure Kubernetes Service (AKS): Recommended for scalable production deployment.

Note

Azure Machine Learning endpoints (v2) provide an improved, simpler deployment experience. Endpoints support both real-time and batch inference scenarios and provide a unified interface to invoke and manage model deployments across compute types. For more information, see What are Azure Machine Learning endpoints?

For more MLflow and Azure Machine Learning functionality integrations, see MLflow and Azure Machine Learning (v2), which uses the v2 SDK.

Deploy to Azure Container Instances

To deploy your MLflow model to an Azure Machine Learning web service, MLflow must be configured with a tracking URI that connects to your Azure Machine Learning workspace.

For a deployment to Azure Container Instances, you don't need to define a deployment configuration. When no configuration is provided, the service defaults to Azure Container Instances. You can register and deploy the model in one step with the create_deployment() method of the MLflow deployment client for Azure Machine Learning.

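```python
import mlflow
from mlflow.deployments import get_deploy_client

# Create a deployment client that targets the workspace set as the MLflow tracking URI
client = get_deploy_client(mlflow.get_tracking_uri())

# Artifact path that the model was logged under in the training run
model_path = "model"

# `run` is the training run that logged the model (run.id on an azureml.core Run;
# use run.info.run_id instead for an MLflow Run object).
# The model is registered automatically, and its name is generated from the `name`
# parameter, which is also used as the service name.
client.create_deployment(name="mlflow-test-aci", model_uri=f"runs:/{run.id}/{model_path}")
```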
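The `model_uri` argument uses MLflow's run-relative URI form, `runs:/<run_id>/<artifact_path>`. If you build these URIs in several places, a small helper keeps them consistent. The sketch below is a hypothetical helper (`runs_model_uri` isn't part of MLflow) and assumes the model was logged under the `model` artifact path by default.

```python
def runs_model_uri(run_id: str, artifact_path: str = "model") -> str:
    """Build an MLflow model URI of the form runs:/<run_id>/<artifact_path>."""
    if not run_id:
        raise ValueError("run_id must be a non-empty string")
    # Drop leading slashes so the URI never contains a double slash after the run ID
    return f"runs:/{run_id}/{artifact_path.lstrip('/')}"


# Usage with the deployment client from the previous step:
# client.create_deployment(name="mlflow-test-aci", model_uri=runs_model_uri(run.id))
```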

Customize the deployment configuration JSON file

If you prefer not to use the defaults, you can configure your deployment with a deployment configuration JSON file. Use the parameters of the deploy_configuration() method as a reference.

Define deployment configuration parameters

In your deployment configuration JSON file, define each parameter as a key-value pair in a dictionary, as in the following example. For more information about what the file can contain, see the Azure Container Instances deployment configuration schema in the Azure Machine Learning Azure CLI reference.

```json
{
  "computeType": "aci",
  "containerResourceRequirements": {"cpu": 1, "memoryInGB": 1},
  "location": "chinanorth2"
}
```

You can then use the JSON file to create your deployment:

```python
# deployment_config.json is assumed to contain the ACI configuration shown above
deploy_path = "deployment_config.json"
test_config = {"deploy-config-file": deploy_path}

# Register and deploy the model by using the custom configuration
client.create_deployment(
    name="mlflow-test-aci",
    model_uri=f"runs:/{run.id}/{model_path}",
    config=test_config,
)
```
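If you'd rather keep the configuration in code than edit the JSON file by hand, you can write the file from a dictionary and build the `config` argument in the same step. This is a sketch only: `write_deploy_config` is a hypothetical helper, and the dictionary simply mirrors the ACI example above.

```python
import json
from pathlib import Path


def write_deploy_config(path, config: dict) -> dict:
    """Write `config` as JSON to `path` and return the config dict for create_deployment()."""
    Path(path).write_text(json.dumps(config, indent=2))
    # The deployment client reads the file named by the 'deploy-config-file' key
    return {"deploy-config-file": str(path)}


# Mirrors the ACI deployment configuration example above
aci_config = {
    "computeType": "aci",
    "containerResourceRequirements": {"cpu": 1, "memoryInGB": 1},
    "location": "chinanorth2",
}

# Usage:
# test_config = write_deploy_config("deployment_config.json", aci_config)
# client.create_deployment(name="mlflow-test-aci", model_uri=..., config=test_config)
```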

Deploy to Azure Kubernetes Service (AKS)

To deploy your MLflow model to an Azure Machine Learning web service, MLflow must be configured with a tracking URI that connects to your Azure Machine Learning workspace.

For deployment to AKS, you first create an AKS cluster by using the ComputeTarget.create() method. Creating a new cluster can take 20 to 25 minutes.

```python
from azureml.core import Workspace
from azureml.core.compute import AksCompute, ComputeTarget

# Load the workspace from a config.json file in the current directory or a parent directory
ws = Workspace.from_config()

# Use the default provisioning configuration (you can also pass parameters to customize it)
prov_config = AksCompute.provisioning_configuration()

aks_name = "aks-mlflow"

# Create the cluster and block until provisioning finishes
aks_target = ComputeTarget.create(workspace=ws, name=aks_name, provisioning_configuration=prov_config)
aks_target.wait_for_completion(show_output=True)

print(aks_target.provisioning_state)
print(aks_target.provisioning_errors)
```
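The print statements only report the outcome. In a script, you might prefer to stop before deploying to a cluster that failed to provision. The following sketch assumes that a successfully provisioned AksCompute target reports the state string "Succeeded". The helper `ensure_provisioned` is hypothetical.

```python
def ensure_provisioned(target) -> None:
    """Raise if a compute target did not finish provisioning successfully.

    `target` is expected to expose provisioning_state and provisioning_errors,
    as AksCompute does after wait_for_completion(). The "Succeeded" value is an assumption.
    """
    state = target.provisioning_state
    if state != "Succeeded":
        raise RuntimeError(f"Provisioning ended in state {state!r}: {target.provisioning_errors}")


# Usage after wait_for_completion():
# ensure_provisioned(aks_target)
```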

Create a deployment configuration JSON file, again using the deploy_configuration() method parameters as a reference. Define each parameter as a key-value pair in a dictionary, as in the following example, where computeTargetName matches the name of the AKS cluster you created:

```json
{"computeType": "aks", "computeTargetName": "aks-mlflow"}
```
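Because computeTargetName must match the cluster name passed to ComputeTarget.create(), it can help to generate the configuration from the same `aks_name` variable. This is a sketch with a hypothetical helper (`aks_deploy_config`). Any extra keys you pass must come from the deployment configuration schema, and this sketch assumes none.

```python
import json


def aks_deploy_config(compute_target_name: str, **extra) -> dict:
    """Build an AKS deployment configuration dict that names an existing AKS compute target."""
    if not compute_target_name:
        raise ValueError("compute_target_name is required for AKS deployments")
    return {"computeType": "aks", "computeTargetName": compute_target_name, **extra}


aks_name = "aks-mlflow"  # must match the name used with ComputeTarget.create()
print(json.dumps(aks_deploy_config(aks_name)))
```

The printed JSON matches the example above, so you can write it to deployment_config.json instead of typing the cluster name twice.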

Then, register and deploy the model in a single step with the MLflow deployment client:

```python
import mlflow
from mlflow.deployments import get_deploy_client

# Create a deployment client that targets the workspace set as the MLflow tracking URI
client = get_deploy_client(mlflow.get_tracking_uri())

# Artifact path that the model was logged under in the training run
model_path = "model"

# deployment_config.json is assumed to contain the AKS configuration shown above
deploy_path = "deployment_config.json"
test_config = {"deploy-config-file": deploy_path}

# The model is registered automatically, and its name is generated from the `name`
# parameter, which is also used as the service name
client.create_deployment(
    name="mlflow-test-aks",
    model_uri=f"runs:/{run.id}/{model_path}",
    config=test_config,
)
```

The service deployment can take several minutes.
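A deployment can run for several minutes before it fails, so you can check the service name up front. The following sketch encodes the web service naming rule that SDK v1 is understood to enforce: 3 to 32 characters, only lowercase letters, digits, and dashes, starting with a letter and ending with a letter or digit. Verify the rule against current documentation before you rely on it. `is_valid_service_name` is a hypothetical helper.

```python
import re

# Assumed naming rule: starts with a lowercase letter, contains only lowercase
# letters, digits, and dashes, and ends with a letter or digit
_SERVICE_NAME = re.compile(r"[a-z](?:[a-z0-9-]*[a-z0-9])?")


def is_valid_service_name(name: str) -> bool:
    """Return True if `name` satisfies the assumed web service naming rule."""
    return 3 <= len(name) <= 32 and _SERVICE_NAME.fullmatch(name) is not None
```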

Clean up resources

If you don't plan to use your deployed web service, delete it from your notebook with the service.delete() method, where service is the Webservice object for your deployment. For more information, see the delete() method of the Webservice class in the Python SDK documentation.
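Because the services in this article were created through the MLflow deployment client, you can also remove them through that client. The sketch below assumes the client returned by get_deploy_client() supports MLflow's standard list_deployments() and delete_deployment(name) methods, with each listed deployment being a dict that has a "name" key. The helper `delete_deployments_with_prefix` and the "mlflow-test-" prefix are hypothetical.

```python
def delete_deployments_with_prefix(client, prefix: str) -> list:
    """Delete every deployment whose name starts with `prefix` and return the deleted names.

    `client` is an MLflow deployment client, such as the one from get_deploy_client().
    """
    deleted = []
    for deployment in client.list_deployments():
        # Each entry returned by list_deployments() is a dict with a "name" key
        name = deployment["name"]
        if name.startswith(prefix):
            client.delete_deployment(name)
            deleted.append(name)
    return deleted


# Usage:
# delete_deployments_with_prefix(client, "mlflow-test-")
```

Matching on a prefix lets you clean up only the test services and leave other deployments in the workspace untouched.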

Explore example notebooks

The MLflow with Azure Machine Learning notebooks demonstrate and expand upon concepts presented in this article.

Note

For a community-driven repository of examples that use MLflow, see the Azure Machine Learning examples repository on GitHub.