APPLIES TO:

Azure CLI ml extension v2 (current)


Python SDK azure-ai-ml v2 (current)


This article describes how to use NVIDIA Triton Inference Server in Azure Machine Learning with online endpoints.

Triton is multi-framework open-source software that's optimized for inference. It supports popular machine learning frameworks like TensorFlow, ONNX Runtime, PyTorch, and NVIDIA TensorRT. You can use it for CPU or GPU workloads.

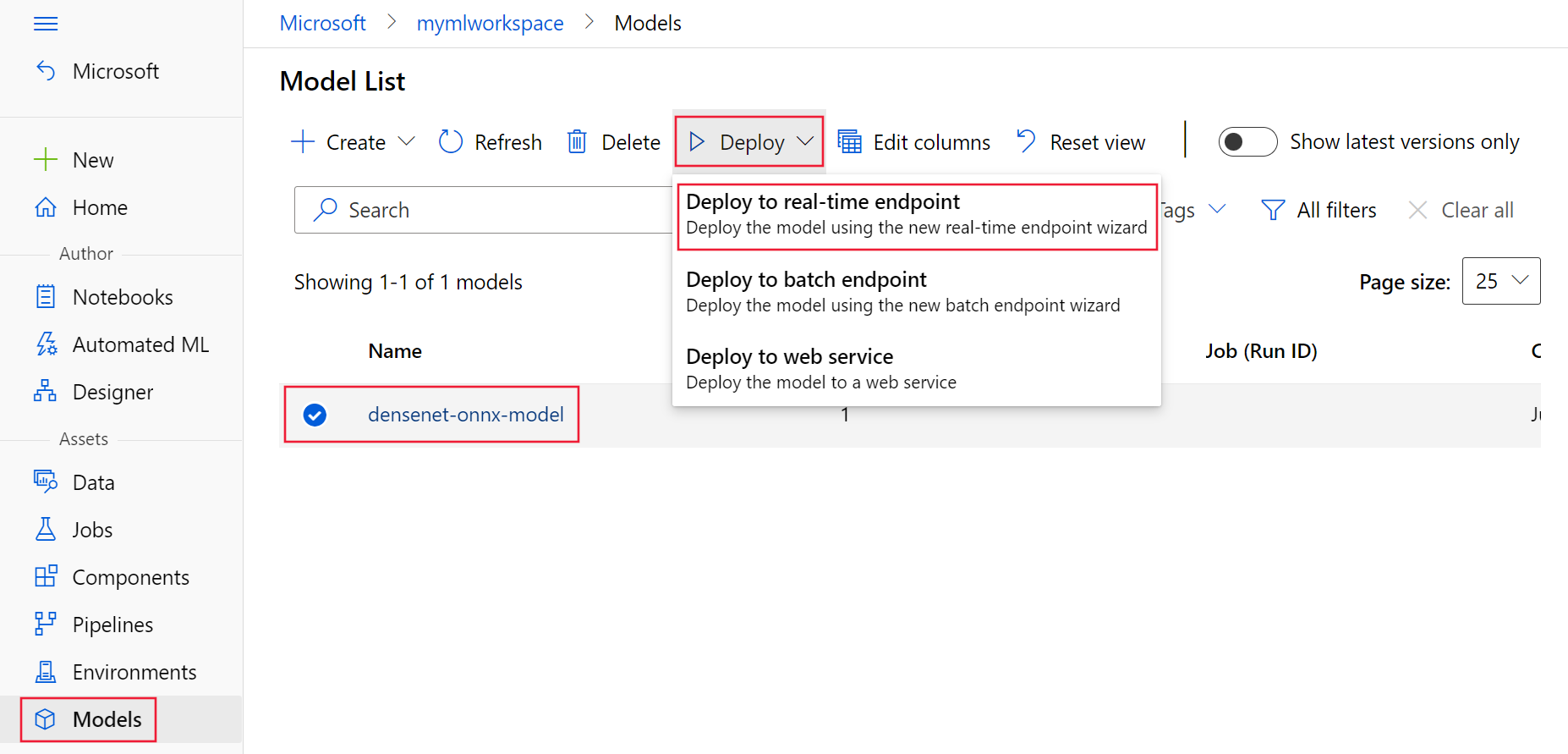

There are two main approaches to deploying Triton models to online endpoints: no-code deployment and full-code (Bring Your Own Container) deployment.

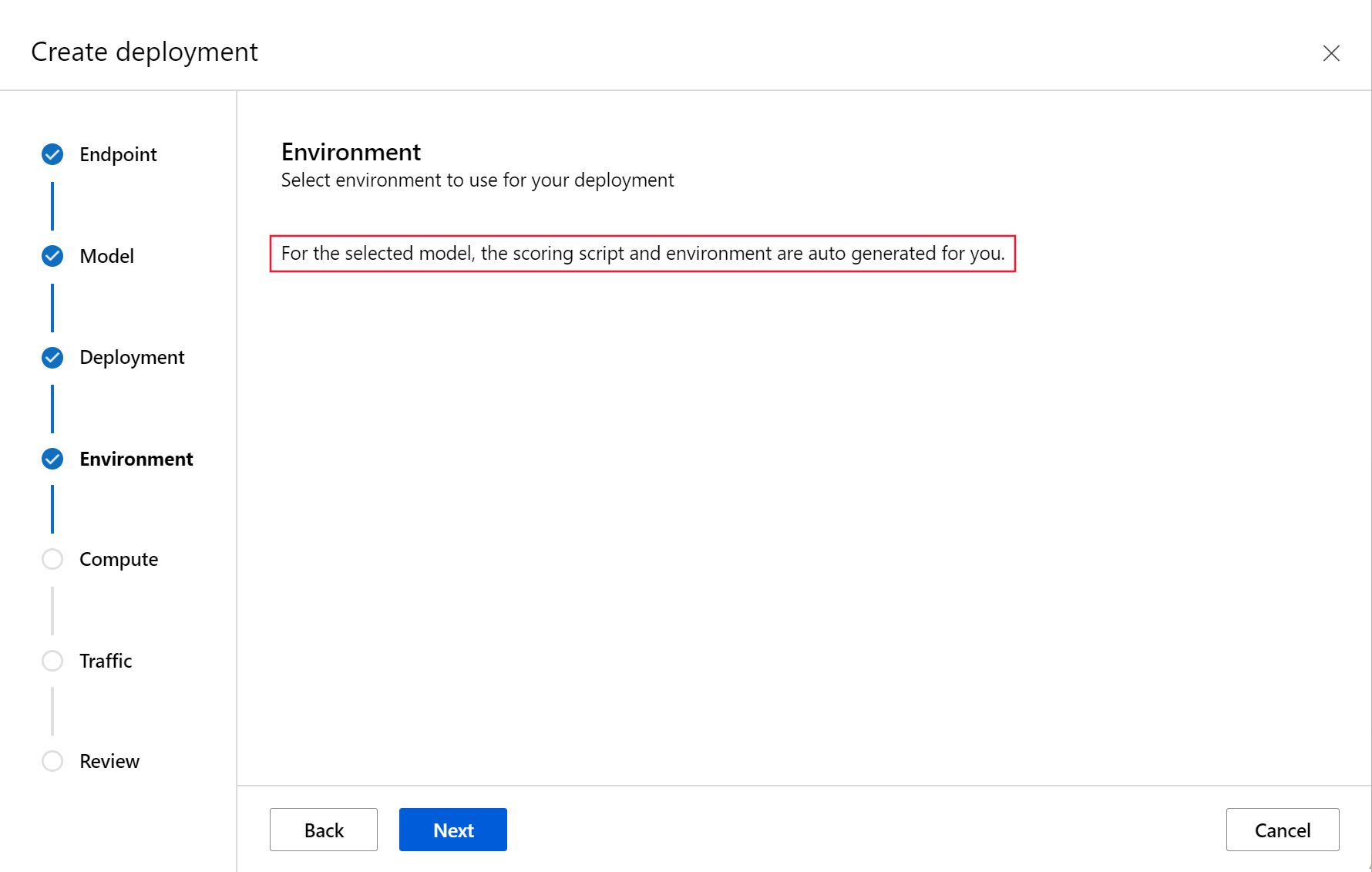

- No-code deployment is the simpler approach: you only need to provide the Triton models.

- Full-code deployment is the more advanced approach: you have full control over the configuration options available for Triton Inference Server.

For both options, Triton Inference Server performs inferencing based on the Triton model as defined by NVIDIA. For instance, ensemble models can be used for more advanced scenarios.

Triton is supported in both managed online endpoints and Kubernetes online endpoints.

In this article, you'll learn how to deploy a model by using no-code deployment for Triton to a managed online endpoint. Information is provided for using the Azure CLI, Python SDK v2, and Azure Machine Learning studio. If you want to customize further by directly using Triton Inference Server's configuration, see Use a custom container to deploy a model and the BYOC example for Triton (deployment definition and end-to-end script).

Note

Use of the NVIDIA Triton Inference Server container is governed by the NVIDIA AI Enterprise Software license agreement and can be used for 90 days without an enterprise product subscription. For more information, see NVIDIA AI Enterprise on Azure Machine Learning.

Prerequisites

The Azure CLI and the ml extension to the Azure CLI, installed and configured. For more information, see Install and set up the CLI (v2).

A Bash shell or a compatible shell, for example, a shell on a Linux system or Windows Subsystem for Linux. The Azure CLI examples in this article assume that you use this type of shell.

An Azure Machine Learning workspace. For instructions to create a workspace, see Set up.

A working Python 3.10 or later environment.

Additional Python packages installed for scoring. They include:

- NumPy. An array and numerical computing library.

- Triton Inference Server Client. Facilitates requests to the Triton Inference Server.

- Pillow. A library for image operations.

- Gevent. A networking library used for connecting to the Triton server.

You can install them by using the following commands:

pip install numpy

pip install tritonclient[http]

pip install pillow

pip install gevent

Access to NCv3-series VMs for your Azure subscription.

Important

You might need to request a quota increase for your subscription before you can use this series of VMs. For more information, see NCv3-series.

NVIDIA Triton Inference Server requires a specific model repository structure, where there's a directory for each model and subdirectories for the model versions. The contents of each model version subdirectory are determined by the type of the model and the requirements of the backend that supports the model. For information about the structure for all models, see Model Files.

The information in this document is based on using a model stored in ONNX format, so the directory structure of the model repository is <model-repository>/<model-name>/1/model.onnx. Specifically, this model performs image identification.
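As a rough illustration of that layout, the following sketch walks a repository directory, lists the numeric version subdirectories under each model, and reports any version that lacks a model.onnx file. It is a minimal check under stated assumptions, not part of the azureml-examples samples: the helper names and the model directory name model_1 are hypothetical, and the check only applies to ONNX models.

```python
from pathlib import Path
import tempfile


def list_model_versions(repository: Path) -> dict[str, list[int]]:
    """Map each model directory to the numeric version subdirectories it contains."""
    versions: dict[str, list[int]] = {}
    for model_dir in sorted(p for p in repository.iterdir() if p.is_dir()):
        versions[model_dir.name] = sorted(
            int(v.name)
            for v in model_dir.iterdir()
            if v.is_dir() and v.name.isdecimal()
        )
    return versions


def missing_onnx_files(repository: Path) -> list[Path]:
    """Return the expected model.onnx paths that do not exist (ONNX models only)."""
    missing = []
    for model, found in list_model_versions(repository).items():
        for version in found:
            candidate = repository / model / str(version) / "model.onnx"
            if not candidate.is_file():
                missing.append(candidate)
    return missing


# Build a throwaway repository to demonstrate the layout.
# "model_1" is a hypothetical model name used only for this demo.
demo_root = Path(tempfile.mkdtemp())
model_file = demo_root / "model_1" / "1" / "model.onnx"
model_file.parent.mkdir(parents=True)
model_file.write_bytes(b"")  # empty placeholder, not a real ONNX model

print(list_model_versions(demo_root))
print(missing_onnx_files(demo_root))
```

Running a check like this before deployment can catch a misplaced model file early, although it says nothing about whether the model file itself is valid.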

The information in this article is based on code samples contained in the azureml-examples repository. To run the commands locally without having to copy/paste YAML and other files, clone the repo and then change directories to the cli directory in the repo:

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples

cd cli

If you haven't already set the defaults for the Azure CLI, save your default settings. To avoid passing in the values for your subscription, workspace, and resource group multiple times, use the following commands. Replace the following parameters with values for your specific configuration:

- Replace <subscription> with your Azure subscription ID.

- Replace <workspace> with your Azure Machine Learning workspace name.

- Replace <resource-group> with the Azure resource group that contains your workspace.

- Replace <location> with the Azure region that contains your workspace.

Tip

You can see what your current defaults are by using the az configure -l command.

az account set --subscription <subscription>

az configure --defaults workspace=<workspace> group=<resource-group> location=<location>

Define the deployment configuration

APPLIES TO:  Azure CLI ml extension v2 (current)


This section describes how to deploy to a managed online endpoint by using the Azure CLI with the Machine Learning extension (v2).

Important

For Triton no-code deployment, testing via local endpoints is currently not supported.

To avoid typing in a path for multiple commands, use the following command to set a BASE_PATH environment variable. This variable points to the directory where the model and associated YAML configuration files are located:

BASE_PATH=endpoints/online/triton/single-model

Use the following command to set the name of the endpoint that will be created. In this example, a random name is created for the endpoint:

export ENDPOINT_NAME=triton-single-endpt-`echo $RANDOM`

Create a YAML configuration file for your endpoint. The following example configures the name and authentication mode of the endpoint. The file used in the following commands is located at /cli/endpoints/online/triton/single-model/create-managed-endpoint.yaml in the azureml-examples repo you cloned earlier:

create-managed-endpoint.yaml

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json
name: my-endpoint
auth_mode: aml_token

Create a YAML configuration file for the deployment. The following example configures a deployment named

blueto the endpoint defined in the previous step. The file used in the following commands is located at/cli/endpoints/online/triton/single-model/create-managed-deployment.ymlin the azureml-examples repo you cloned earlier:Important

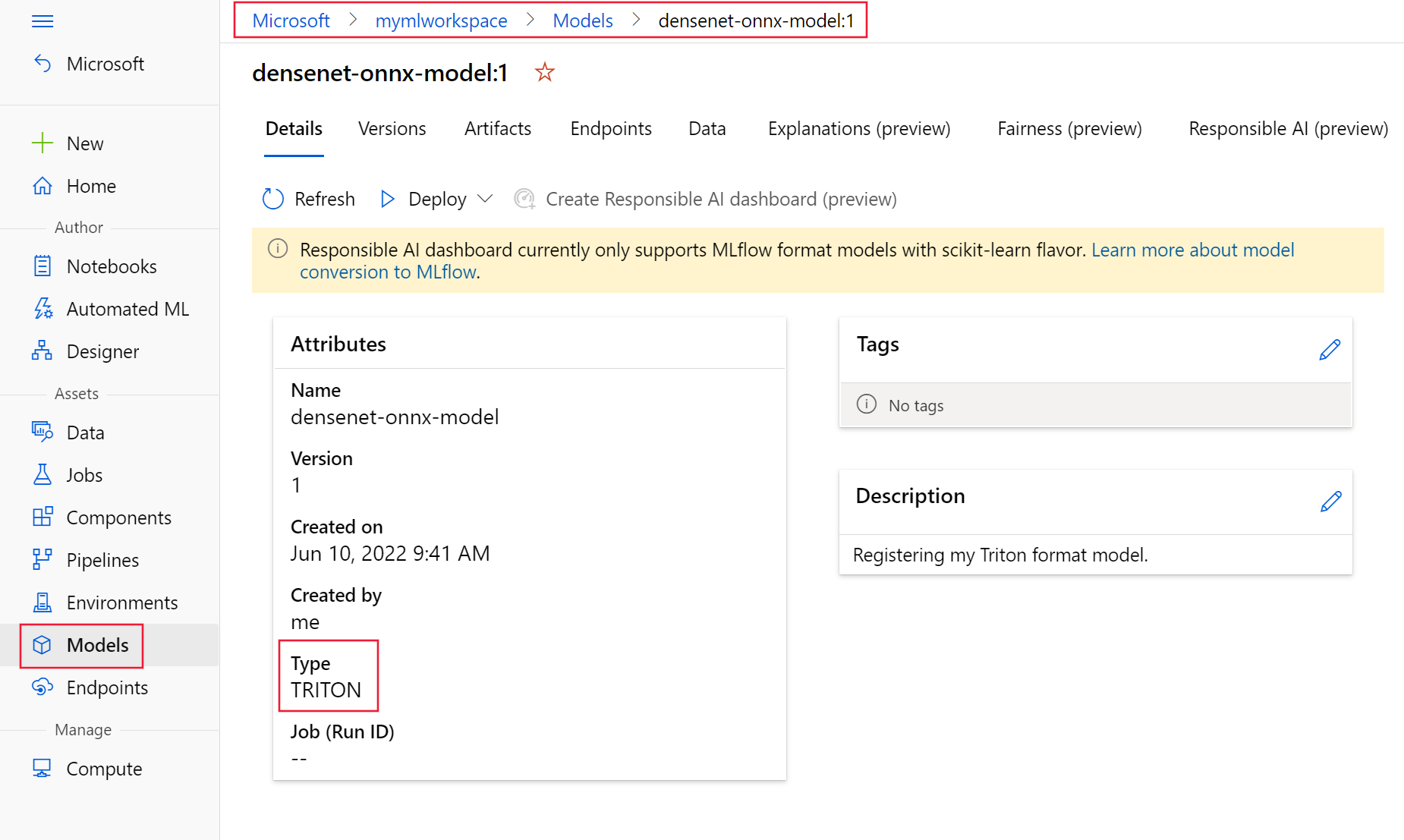

For Triton no-code-deployment to work, you need to set

typetotriton_model:type: triton_model. For more information, see CLI (v2) model YAML schema.This deployment uses a Standard_NC6s_v3 VM. You might need to request a quota increase for your subscription before you can use this VM. For more information, see NCv3-series.

$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json
name: blue
endpoint_name: my-endpoint
model:
  name: sample-densenet-onnx-model
  version: 1
  path: ./models
  type: triton_model
instance_count: 1
instance_type: Standard_NC6s_v3
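Because no-code deployment depends on the model type being triton_model, it can help to check a deployment definition before you submit it. The following sketch is a hypothetical helper, not part of the Azure CLI or SDK: it takes the deployment definition as a plain Python dictionary that mirrors the YAML above, and it only handles an inline model definition. The keys it looks for are the ones used in this example, not an authoritative list from the schema.

```python
def check_triton_deployment(config: dict) -> list[str]:
    """Return a list of problems found in a deployment definition (empty if none)."""
    problems = []

    model = config.get("model")
    if not isinstance(model, dict):
        # Assumption of this sketch: the model is defined inline, as in the example.
        problems.append("model is not an inline mapping")
    elif model.get("type") != "triton_model":
        problems.append("model.type must be 'triton_model' for no-code deployment")

    # Keys that the example deployment sets; not taken from the official schema.
    for key in ("name", "endpoint_name", "instance_type", "instance_count"):
        if key not in config:
            problems.append(f"key used in the example is missing: {key}")

    return problems


# The same values as the deployment definition shown in this article.
deployment = {
    "name": "blue",
    "endpoint_name": "my-endpoint",
    "model": {
        "name": "sample-densenet-onnx-model",
        "version": 1,
        "path": "./models",
        "type": "triton_model",
    },
    "instance_count": 1,
    "instance_type": "Standard_NC6s_v3",
}

print(check_triton_deployment(deployment))
```

An empty list means the sketch found nothing to flag. The service still performs its own validation when you create the deployment.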

Deploy to Azure

APPLIES TO:  Azure CLI ml extension v2 (current)


To create an endpoint by using the YAML configuration, use the following command:

az ml online-endpoint create -n $ENDPOINT_NAME -f $BASE_PATH/create-managed-endpoint.yaml

To create the deployment by using the YAML configuration, use the following command:

az ml online-deployment create --name blue --endpoint $ENDPOINT_NAME -f $BASE_PATH/create-managed-deployment.yaml --all-traffic

Test the endpoint

APPLIES TO:  Azure CLI ml extension v2 (current)


After your deployment is complete, use the following command to make a scoring request to the deployed endpoint.

Tip

The file /cli/endpoints/online/triton/single-model/triton_densenet_scoring.py in the azureml-examples repo is used for scoring. The image passed to the endpoint needs preprocessing to meet the size, type, and format requirements, and post-processing to show the predicted label. The triton_densenet_scoring.py file uses the tritonclient.http library to communicate with the Triton inference server. This file runs on the client side.
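To make the post-processing step concrete, the following sketch picks the highest-scoring class from a list of model scores and formats it as an index and label, similar to the way the sample output reports a prediction. It is a simplified illustration, not the code in triton_densenet_scoring.py: the function name, the scores, and the three-entry label list are made up for the example, and the real script reads its labels from a file.

```python
def top_prediction(scores: list[float], labels: list[str]) -> str:
    """Return '<index> : <label>' for the highest score."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    # Index of the largest score; the first one wins if there is a tie.
    best = max(range(len(scores)), key=scores.__getitem__)
    return f"{best} : {labels[best]}"


# Hypothetical scores and labels, for illustration only.
demo_scores = [0.10, 0.70, 0.20]
demo_labels = ["TENCH", "PEACOCK", "GOLDFISH"]

print(top_prediction(demo_scores, demo_labels))  # prints "1 : PEACOCK"
```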

To get the endpoint scoring URI, use the following command:

scoring_uri=$(az ml online-endpoint show -n $ENDPOINT_NAME --query scoring_uri -o tsv)

scoring_uri=${scoring_uri%/*}

To get an authentication token, use the following command:

auth_token=$(az ml online-endpoint get-credentials -n $ENDPOINT_NAME --query accessToken -o tsv)

To score data with the endpoint, use the following command. It submits the image of a peacock to the endpoint:

python $BASE_PATH/triton_densenet_scoring.py --base_url=$scoring_uri --token=$auth_token --image_path $BASE_PATH/data/peacock.jpg

The response from the script is similar to the following response:

Is server ready - True

Is model ready - True

/azureml-examples/cli/endpoints/online/triton/single-model/densenet_labels.txt

84 : PEACOCK
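The scoring steps above trim the scoring URI, pass a token, and report server and model readiness. The following sketch shows one way those pieces might fit together without sending any requests. The ${scoring_uri%/*} expansion removes the last path segment, which rsplit reproduces here. The readiness paths follow the KServe v2 inference protocol that Triton implements, and the bearer header is the usual way to pass a key or token to an online endpoint. The function names, the host name, the model name model_1, and the token value are placeholders, and whether the sample script builds its requests exactly this way is an assumption.

```python
def base_url_from_scoring_uri(scoring_uri: str) -> str:
    """Drop the last '/' and everything after it, like ${scoring_uri%/*} in Bash."""
    return scoring_uri.rsplit("/", 1)[0]


def readiness_urls(base_url: str, model_name: str) -> dict[str, str]:
    """Build the v2 protocol readiness URLs for the server and for one model."""
    return {
        "server": f"{base_url}/v2/health/ready",
        "model": f"{base_url}/v2/models/{model_name}/ready",
    }


def auth_headers(token: str) -> dict[str, str]:
    """Build the HTTP header that carries the endpoint token."""
    return {"Authorization": f"Bearer {token}"}


# Placeholder values for illustration; substitute the ones from your endpoint.
scoring_uri = "https://my-endpoint.eastus.inference.ml.azure.com/score"
base_url = base_url_from_scoring_uri(scoring_uri)

print(base_url)
print(readiness_urls(base_url, "model_1"))
print(auth_headers("<auth-token>"))
```

Trimming the URI matters because the client appends its own request paths to the base URL, so the trailing segment of the scoring URI would otherwise end up in the middle of each request path.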

Delete the endpoint and model

APPLIES TO:  Azure CLI ml extension v2 (current)


When you're done with the endpoint, use the following command to delete it:

az ml online-endpoint delete -n $ENDPOINT_NAME --yes

Use the following command to archive your model:

az ml model archive --name sample-densenet-onnx-model --version 1

Next steps

To learn more, review these articles:

- Deploy models with REST

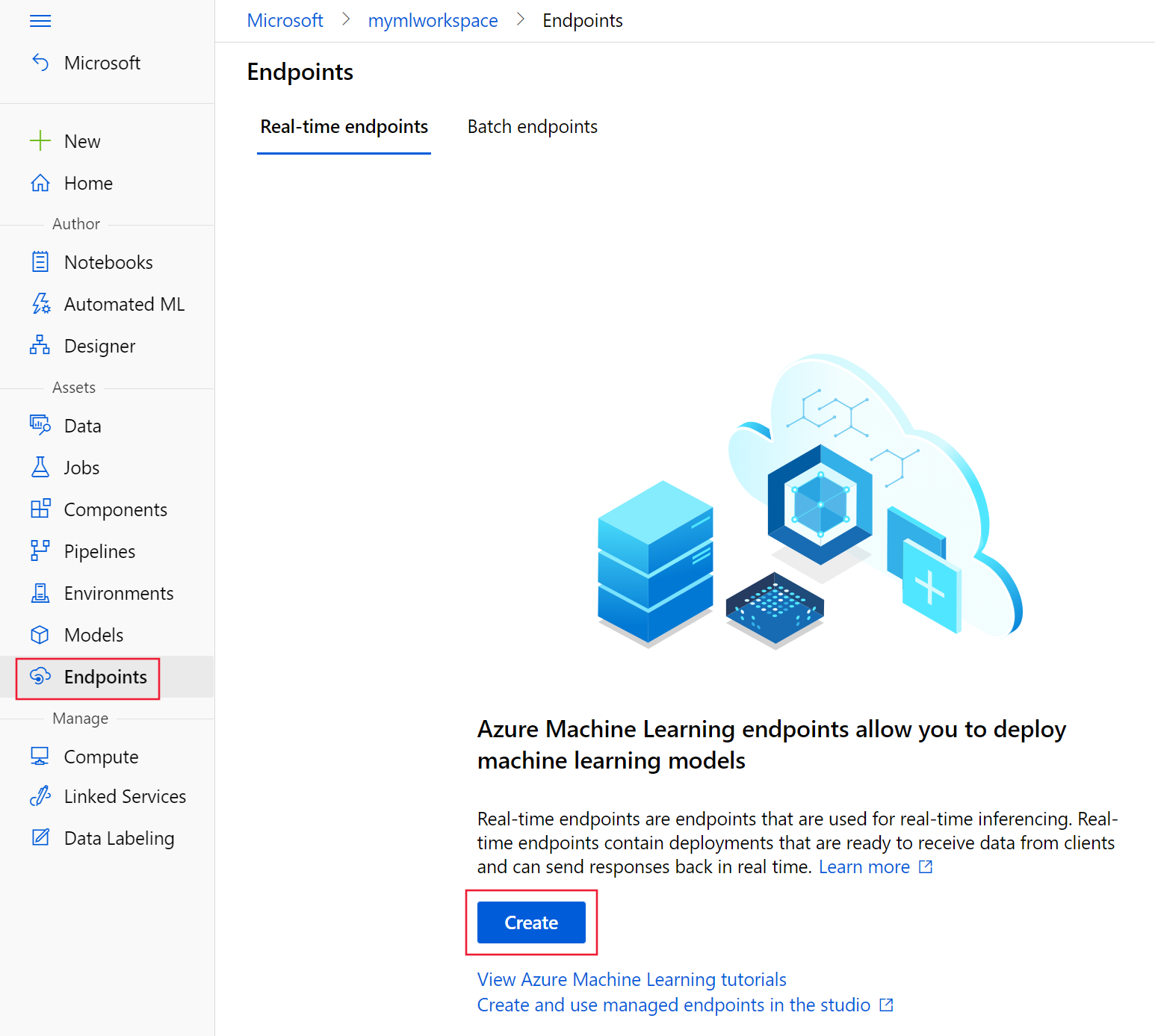

- Create and use managed online endpoints in the studio

- Safe rollout for online endpoints

- How to autoscale managed online endpoints

- View costs for an Azure Machine Learning managed online endpoint

- Access Azure resources from an online endpoint with a managed identity

- Troubleshoot online endpoint deployment and scoring