Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

In this tutorial, you perform an ETL operation: extract, transform, and load data. You take a raw CSV data file, import it into an Azure HDInsight cluster, transform it with Apache Hive, and load it into Azure SQL Database with Apache Sqoop.

In this tutorial, you learn how to:

- Extract and upload the data to an HDInsight cluster.

- Transform the data by using Apache Hive.

- Load the data to Azure SQL Database by using Sqoop.

If you don't have an Azure subscription, create a trial account before you begin.

Prerequisites

A storage account that has a hierarchical namespace (Azure Data Lake Storage) that is configured for HDInsight

See Use Azure Data Lake Storage with Azure HDInsight clusters.

A Linux-based Hadoop cluster on HDInsight

See Quickstart: Get started with Apache Hadoop and Apache Hive in Azure HDInsight using the Azure portal.

Azure SQL Database

You use Azure SQL Database as a destination data store. If you don't have a database in SQL Database, see Create a database in Azure SQL Database in the Azure portal.

Azure CLI

If you haven't installed the Azure CLI, see Install the Azure CLI.

A Secure Shell (SSH) client

For more information, see Connect to HDInsight (Hadoop) by using SSH.

Download, extract and then upload the data

In this section, you download sample flight data. Then, you upload that data to your HDInsight cluster and then copy that data to your Data Lake Storage account.

Download the On_Time_Reporting_Carrier_On_Time_Performance_1987_present_2016_1.zip file. This file contains the flight data.

Open a command prompt and use the following Secure Copy (Scp) command to upload the .zip file to the HDInsight cluster head node:

scp On_Time_Reporting_Carrier_On_Time_Performance_1987_present_2016_1.zip <ssh-user-name>@<cluster-name>-ssh.azurehdinsight.cn:- Replace the

<ssh-user-name>placeholder with the SSH username for the HDInsight cluster. - Replace the

<cluster-name>placeholder with the name of the HDInsight cluster.

If you use a password to authenticate your SSH username, you're prompted for the password.

If you use a public key, you might need to use the

-iparameter and specify the path to the matching private key. For example,scp -i ~/.ssh/id_rsa <file_name>.zip <user-name>@<cluster-name>-ssh.azurehdinsight.cn:.- Replace the

After the upload has finished, connect to the cluster by using SSH. On the command prompt, enter the following command:

ssh <ssh-user-name>@<cluster-name>-ssh.azurehdinsight.cnUse the following command to unzip the .zip file:

unzip <file-name>.zipThe command extracts a .csv file.

Use the following command to create the Data Lake Storage container.

hadoop fs -D "fs.azure.createRemoteFileSystemDuringInitialization=true" -ls abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/Replace the

<container-name>placeholder with the name that you want to give your container.Replace the

<storage-account-name>placeholder with the name of your storage account.Use the following command to create a directory.

hdfs dfs -mkdir -p abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/dataUse the following command to copy the .csv file to the directory:

hdfs dfs -put "On_Time_Reporting_Carrier_On_Time_Performance_(1987_present)_2016_1.csv" abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/data/Use quotes around the file name if the file name contains spaces or special characters.

Transform the data

In this section, you use Beeline to run an Apache Hive job.

As part of the Apache Hive job, you import the data from the .csv file into an Apache Hive table named delays.

From the SSH prompt that you already have for the HDInsight cluster, use the following command to create and edit a new file named flightdelays.hql:

nano flightdelays.hqlModify the following text by replacing the

<container-name>and<storage-account-name>placeholders with your container and storage account name. Then copy and paste the text into the nano console by using pressing the SHIFT key along with the right-mouse select button.DROP TABLE delays_raw; -- Creates an external table over the csv file CREATE EXTERNAL TABLE delays_raw ( YEAR string, FL_DATE string, UNIQUE_CARRIER string, CARRIER string, FL_NUM string, ORIGIN_AIRPORT_ID string, ORIGIN string, ORIGIN_CITY_NAME string, ORIGIN_CITY_NAME_TEMP string, ORIGIN_STATE_ABR string, DEST_AIRPORT_ID string, DEST string, DEST_CITY_NAME string, DEST_CITY_NAME_TEMP string, DEST_STATE_ABR string, DEP_DELAY_NEW float, ARR_DELAY_NEW float, CARRIER_DELAY float, WEATHER_DELAY float, NAS_DELAY float, SECURITY_DELAY float, LATE_AIRCRAFT_DELAY float) -- The following lines describe the format and location of the file ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' LINES TERMINATED BY '\n' STORED AS TEXTFILE LOCATION 'abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/data'; -- Drop the delays table if it exists DROP TABLE delays; -- Create the delays table and populate it with data -- pulled in from the CSV file (via the external table defined previously) CREATE TABLE delays LOCATION 'abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/processed' AS SELECT YEAR AS year, FL_DATE AS FlightDate, substring(UNIQUE_CARRIER, 2, length(UNIQUE_CARRIER) -1) AS IATA_CODE_Reporting_Airline, substring(CARRIER, 2, length(CARRIER) -1) AS Reporting_Airline, substring(FL_NUM, 2, length(FL_NUM) -1) AS Flight_Number_Reporting_Airline, ORIGIN_AIRPORT_ID AS OriginAirportID, substring(ORIGIN, 2, length(ORIGIN) -1) AS OriginAirportSeqID, substring(ORIGIN_CITY_NAME, 2) AS OriginCityName, substring(ORIGIN_STATE_ABR, 2, length(ORIGIN_STATE_ABR) -1) AS OriginState, DEST_AIRPORT_ID AS DestAirportID, substring(DEST, 2, length(DEST) -1) AS DestAirportSeqID, substring(DEST_CITY_NAME,2) AS DestCityName, substring(DEST_STATE_ABR, 2, length(DEST_STATE_ABR) -1) AS DestState, DEP_DELAY_NEW AS DepDelay, ARR_DELAY_NEW AS ArrDelay, CARRIER_DELAY AS CarrierDelay, WEATHER_DELAY AS WeatherDelay, NAS_DELAY AS NASDelay, SECURITY_DELAY AS SecurityDelay, LATE_AIRCRAFT_DELAY AS LateAircraftDelay FROM delays_raw;Save the file by typing CTRL+X and then typing

Ywhen prompted.To start Hive and run the

flightdelays.hqlfile, use the following command:beeline -u 'jdbc:hive2://localhost:10001/;transportMode=http' -f flightdelays.hqlAfter the

flightdelays.hqlscript finishes running, use the following command to open an interactive Beeline session:beeline -u 'jdbc:hive2://localhost:10001/;transportMode=http'When you receive the

jdbc:hive2://localhost:10001/>prompt, use the following query to retrieve data from the imported flight delay data:INSERT OVERWRITE DIRECTORY '/tutorials/flightdelays/output' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' SELECT regexp_replace(OriginCityName, '''', ''), avg(WeatherDelay) FROM delays WHERE WeatherDelay IS NOT NULL GROUP BY OriginCityName;This query retrieves a list of cities that experienced weather delays, along with the average delay time, and saves it to

abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/output. Later, Sqoop reads the data from this location and exports it to Azure SQL Database.To exit Beeline, enter

!quitat the prompt.

Create a SQL database table

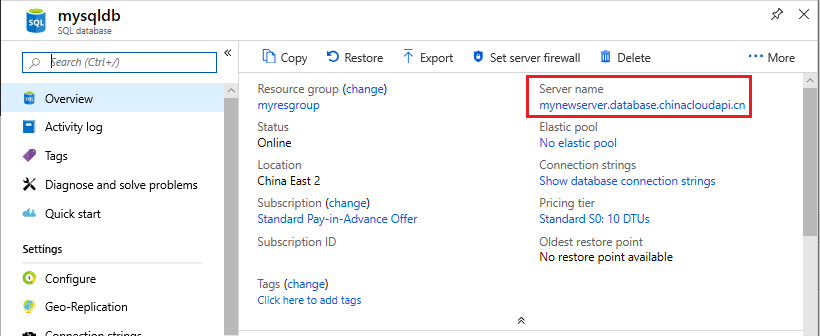

You need the server name from SQL Database for this operation. Complete these steps to find your server name.

Go to the Azure portal.

Select SQL Databases.

Filter on the name of the database that you choose to use. The server name is listed in the Server name column.

Filter on the name of the database that you want to use. The server name is listed in the Server name column.

There are many ways to connect to SQL Database and create a table. The following steps use FreeTDS from the HDInsight cluster.

To install FreeTDS, use the following command from an SSH connection to the cluster:

sudo apt-get --assume-yes install freetds-dev freetds-binAfter the installation completes, use the following command to connect to SQL Database.

TDSVER=8.0 tsql -H '<server-name>.database.chinacloudapi.cn' -U '<admin-login>' -p 1433 -D '<database-name>'Replace the

<server-name>placeholder with the logical SQL server name.Replace the

<admin-login>placeholder with the admin username for SQL Database.Replace the

<database-name>placeholder with the database name

When you're prompted, enter the password for the SQL Database admin username.

You receive output similar to the following text:

locale is "en_US.UTF-8" locale charset is "UTF-8" using default charset "UTF-8" Default database being set to sqooptest 1>At the

1>prompt, enter the following statements:CREATE TABLE [dbo].[delays]( [OriginCityName] [nvarchar](50) NOT NULL, [WeatherDelay] float, CONSTRAINT [PK_delays] PRIMARY KEY CLUSTERED ([OriginCityName] ASC)) GOWhen the

GOstatement is entered, the previous statements are evaluated.The query creates a table named delays, which has a clustered index.

Use the following query to verify that the table is created:

SELECT * FROM information_schema.tables GOThe output is similar to the following text:

TABLE_CATALOG TABLE_SCHEMA TABLE_NAME TABLE_TYPE databaseName dbo delays BASE TABLEEnter

exitat the1>prompt to exit the tsql utility.

Export and load the data

In the previous sections, you copied the transformed data at the location abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/output. In this section, you use Sqoop to export the data from abfs://<container-name>@<storage-account-name>.dfs.core.chinacloudapi.cn/tutorials/flightdelays/output to the table you created in the Azure SQL Database.

Use the following command to verify that Sqoop can see your SQL database:

sqoop list-databases --connect jdbc:sqlserver://<SERVER_NAME>.database.chinacloudapi.cn:1433 --username <ADMIN_LOGIN> --password <ADMIN_PASSWORD>The command returns a list of databases, including the database in which you created the delays table.

Use the following command to export data from the hivesampletable table to the delays table:

sqoop export --connect 'jdbc:sqlserver://<SERVER_NAME>.database.chinacloudapi.cn:1433;database=<DATABASE_NAME>' --username <ADMIN_LOGIN> --password <ADMIN_PASSWORD> --table 'delays' --export-dir 'abfs://<container-name>@.dfs.core.chinacloudapi.cn/tutorials/flightdelays/output' --fields-terminated-by '\t' -m 1Sqoop connects to the database that contains the delays table, and exports data from the

/tutorials/flightdelays/outputdirectory to the delays table.After the

sqoopcommand finishes, use the tsql utility to connect to the database:TDSVER=8.0 tsql -H <SERVER_NAME>.database.chinacloudapi.cn -U <ADMIN_LOGIN> -P <ADMIN_PASSWORD> -p 1433 -D <DATABASE_NAME>Use the following statements to verify that the data was exported to the delays table:

SELECT * FROM delays GOYou should see a listing of data in the table. The table includes the city name and the average flight delay time for that city.

Enter

exitto exit the tsql utility.

Clean up resources

All resources used in this tutorial are preexisting. No cleanup is necessary.

Next steps

To learn more ways to work with data in HDInsight, see the following article: