
Customer-managed failover enables you to fail over your entire geo-redundant storage account to the secondary region if the storage service endpoints for the primary region become unavailable. During failover, the original secondary region becomes the new primary region. All storage service endpoints are then redirected to the new primary region. After the storage service endpoint outage is resolved, you can perform another failover operation to fail back to the original primary region.

This article describes what happens during a customer-managed failover and failback at every stage of the process.

Redundancy management during failover and failback

Tip

For definitions of each redundancy state that appears during the failover and failback process, see Azure Storage redundancy.

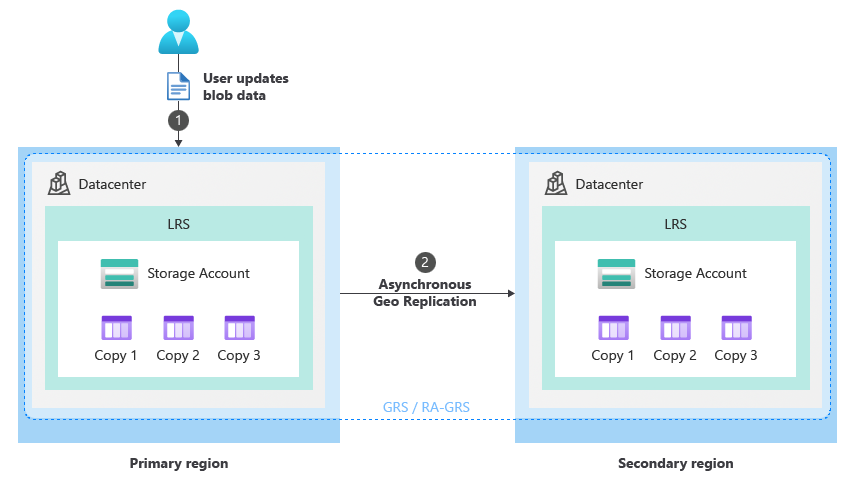

For each storage account configured for geo-redundant storage (GRS) or read-access geo-redundant storage (RA-GRS), data is replicated three times in each region, so replication occurs within both the primary and secondary regions. Each region is essentially locally redundant (LRS) and contains three copies of your data.

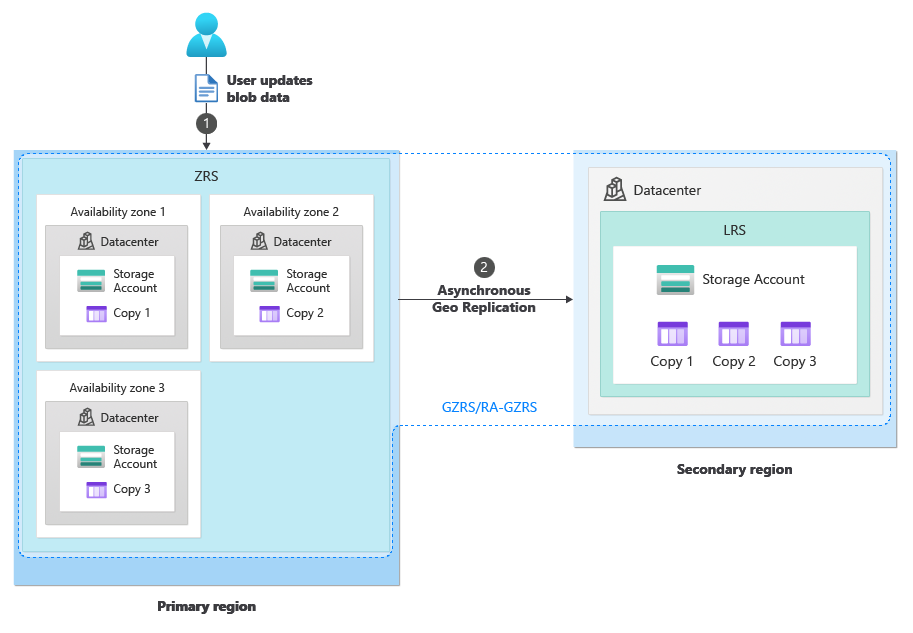

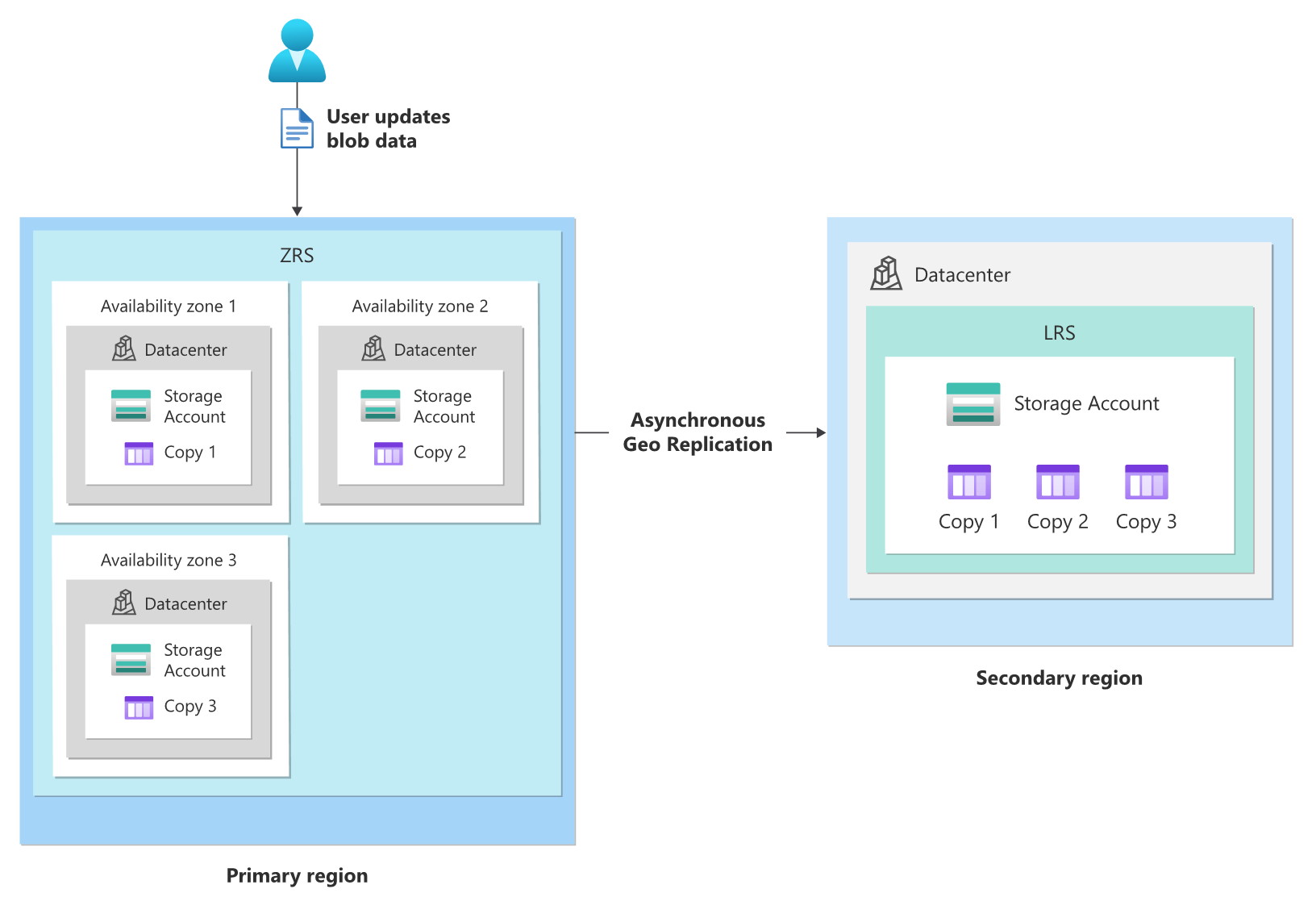

When a storage account is configured for geo-zone-redundant storage (GZRS) or read-access geo-zone-redundant storage (RA-GZRS), data is zone-redundant (ZRS) within the primary region and replicated three times within the secondary region, which is locally redundant (LRS). If the account is configured for read access (RA), you can read data from the secondary region as long as the storage service endpoints for that region are available.
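The two layouts described above can be summarized as a small lookup table. This is a minimal sketch in Python; the names `RegionLayout`, `GEO_LAYOUTS`, `total_copies`, and `secondary_readable` are hypothetical and not part of any Azure SDK. The count of three copies for a ZRS primary (one per availability zone) comes from the general definition of ZRS rather than from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionLayout:
    scheme: str  # local redundancy scheme within the region: "LRS" or "ZRS"
    copies: int  # number of copies kept within the region


# (primary, secondary) layout for each geo-redundant configuration described above.
GEO_LAYOUTS = {
    "GRS": (RegionLayout("LRS", 3), RegionLayout("LRS", 3)),
    "RA-GRS": (RegionLayout("LRS", 3), RegionLayout("LRS", 3)),
    "GZRS": (RegionLayout("ZRS", 3), RegionLayout("LRS", 3)),
    "RA-GZRS": (RegionLayout("ZRS", 3), RegionLayout("LRS", 3)),
}


def total_copies(config: str) -> int:
    """Copies held across both regions for a given configuration."""
    primary, secondary = GEO_LAYOUTS[config]
    return primary.copies + secondary.copies


def secondary_readable(config: str) -> bool:
    """Only the read-access (RA-) variants allow reads from the secondary region,
    and only while the secondary endpoints are available."""
    return config.startswith("RA-")


for name, (primary, secondary) in GEO_LAYOUTS.items():
    print(f"{name}: primary={primary.scheme}, secondary={secondary.scheme}, "
          f"copies={total_copies(name)}, readable secondary={secondary_readable(name)}")
```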

During a customer-managed failover, the Domain Name System (DNS) entries for the storage service endpoints are switched: your storage account's secondary endpoints become the new primary endpoints, and the original primary endpoints become the new secondary endpoints. After failover, the copy of your storage account in the original primary region is deleted, and your storage account continues to be replicated three times locally within the new primary region. At that point, the account is locally redundant (LRS).

The original and current redundancy configurations are stored in the storage account's properties, which allows you to return to your original configuration when you fail back. For a complete list of resulting redundancy configurations, see Recovery planning and failover.

To regain geo-redundancy after a failover, reconfigure your account as GRS. Azure then immediately begins copying data from the new primary region to the new secondary. If you configure the account for read access to the secondary region, that access becomes available, but replication from the primary to the secondary region might take some time to complete.

Warning

After your account is reconfigured for geo-redundancy, it might take a significant amount of time before existing data in the new primary region is fully copied to the new secondary.

To avoid major data loss, check the value of the Last Sync Time property before failing back. To evaluate potential data loss, compare the last sync time to the last time data was written to the new primary.
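The Last Sync Time comparison above amounts to a subtraction. The following sketch assumes you already have both timestamps as timezone-aware UTC `datetime` values; the function name `potential_data_loss_window` is hypothetical, and retrieving the real Last Sync Time from your account is outside its scope.

```python
from datetime import datetime, timedelta, timezone


def potential_data_loss_window(last_sync_time: datetime, last_write_time: datetime) -> timedelta:
    """Length of the period whose writes may not have reached the other region.

    Writes made before last_sync_time have been replicated; writes after it are
    at risk. Both arguments should be timezone-aware UTC values.
    """
    if last_write_time <= last_sync_time:
        return timedelta(0)  # the most recent write is already covered by the last sync
    return last_write_time - last_sync_time


# Hypothetical timestamps, for illustration only.
last_sync = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
last_write = datetime(2024, 5, 1, 12, 15, tzinfo=timezone.utc)
print(potential_data_loss_window(last_sync, last_write))  # 0:15:00
```

A zero result means nothing written so far is at risk; any positive result is the span of recent writes you would risk losing by failing back now.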

The failback process is essentially the same as the failover process, except that the replication configuration is restored to its original, prefailover state.

After failback, you can reconfigure your storage account to take advantage of geo-redundancy. If the original primary was configured as ZRS, you can configure it to be GZRS or RA-GZRS. For more options, see Change how a storage account is replicated.

How to initiate a failover

To learn how to initiate a failover, see Initiate an account failover.

Caution

Failover usually involves some data loss, and potentially file and data inconsistencies. It's important to understand the impact that an account failover would have on your data before initiating this type of failover.

For details about potential data loss and inconsistencies, see Anticipate data loss and inconsistencies.

The failover and failback process

This section summarizes the failover process for a customer-managed failover.

Failover transition summary

After a customer-managed failover:

- The secondary region becomes the new primary

- The copy of the data in the original primary region is deleted

- The storage account is converted to LRS

- Geo-redundancy is lost

This table summarizes the resulting redundancy configuration at every stage of a customer-managed failover and failback:

| Original configuration | After failover | After re-enabling geo-redundancy | After failback | After re-enabling geo-redundancy |
|---|---|---|---|---|
| GRS | LRS | GRS¹ | LRS | GRS¹ |
| GZRS | LRS | GRS¹ | ZRS | GZRS¹ |

¹ Geo-redundancy is lost during a customer-managed failover and must be manually reconfigured.
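The transitions in the table can be expressed as a tiny state machine. This sketch is illustrative only: the `StorageAccount` class, the `fail_over` and `reenable_geo_redundancy` functions, and the region names are hypothetical; only the GRS and GZRS rows are modeled (not the RA- variants); and the rule that a failover requires a geo-redundant account reflects the table's flow, in which geo-redundancy is re-enabled before failing back. Keeping both `original` and `current` mirrors how the account's properties store the original and current configurations.

```python
from dataclasses import dataclass, replace

# Local redundancy of the original primary region, restored on failback.
PRIMARY_LOCAL_REDUNDANCY = {"GRS": "LRS", "GZRS": "ZRS"}
# Geo-redundant configuration you can re-enable on top of each local configuration.
GEO_UPGRADE = {"LRS": "GRS", "ZRS": "GZRS"}


@dataclass(frozen=True)
class StorageAccount:
    original: str     # configuration before any failover: "GRS" or "GZRS"
    home_region: str  # the original primary region
    current: str      # configuration right now
    primary: str      # region currently serving writes
    secondary: str    # paired region


def fail_over(acct: StorageAccount) -> StorageAccount:
    """Fail over (or back) to the paired region; the old primary copy is deleted."""
    if acct.current not in ("GRS", "GZRS"):
        raise ValueError(f"{acct.current} is not geo-redundant; re-enable geo-redundancy first")
    target = acct.secondary
    if target == acct.home_region:
        new_config = PRIMARY_LOCAL_REDUNDANCY[acct.original]  # failback
    else:
        new_config = "LRS"  # a failover away from the home region lands on LRS
    return replace(acct, primary=target, secondary=acct.primary, current=new_config)


def reenable_geo_redundancy(acct: StorageAccount) -> StorageAccount:
    """Manual step: convert the local configuration back to a geo-redundant one."""
    return replace(acct, current=GEO_UPGRADE[acct.current])


for original in ("GRS", "GZRS"):
    acct = StorageAccount(original, "region-a", original, "region-a", "region-b")
    stages = []
    for step in (fail_over, reenable_geo_redundancy, fail_over, reenable_geo_redundancy):
        acct = step(acct)
        stages.append(acct.current)
    print(original, stages)
```

Running it prints one row per original configuration, matching the table: `GRS ['LRS', 'GRS', 'LRS', 'GRS']` and `GZRS ['LRS', 'GRS', 'ZRS', 'GZRS']`.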

Failover transition details

The following diagrams show the customer-managed failover and failback process for a storage account configured for geo-redundancy. The transition details for GZRS and RA-GZRS are slightly different from GRS and RA-GRS.

Normal operation (GRS/RA-GRS)

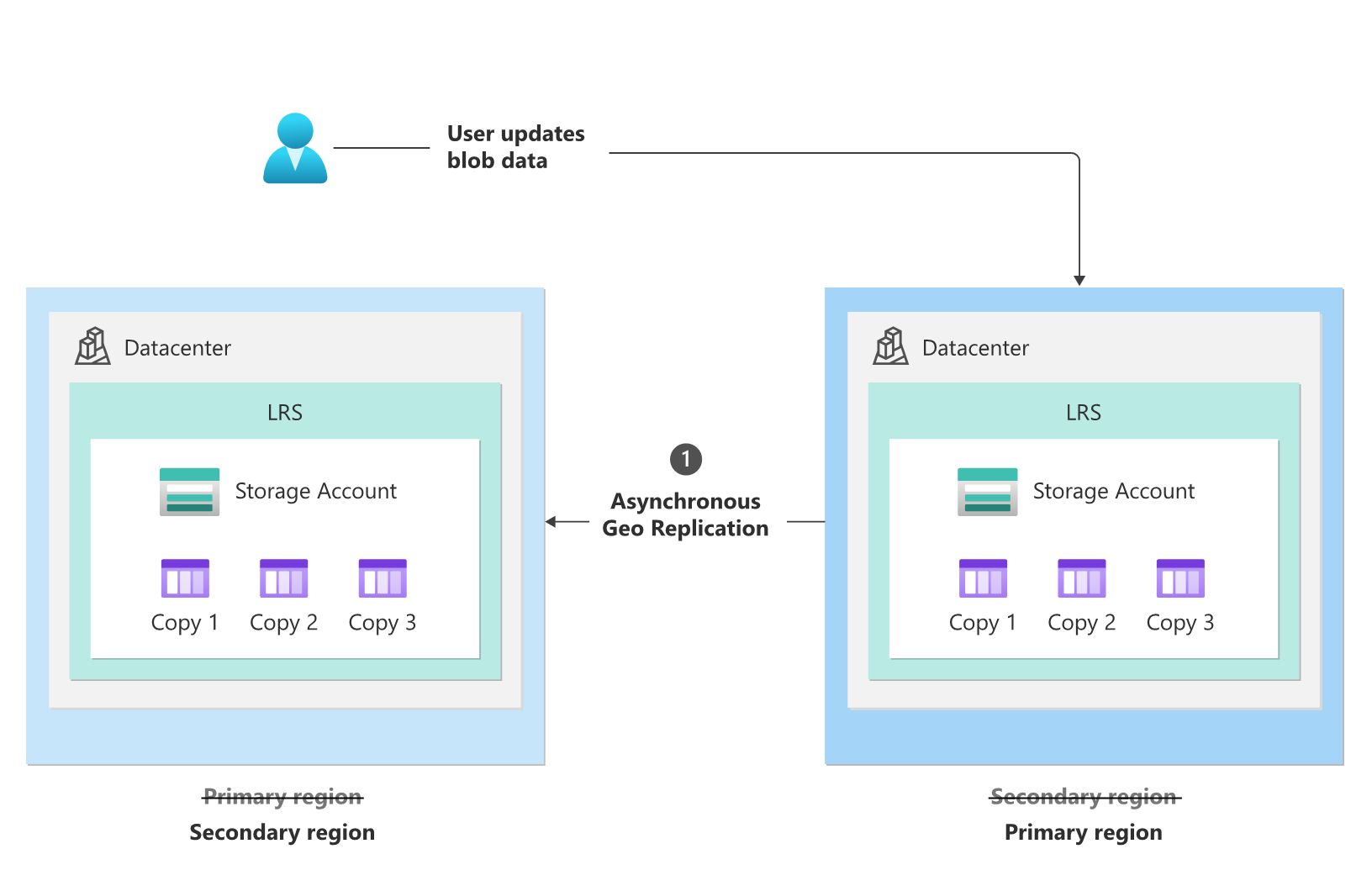

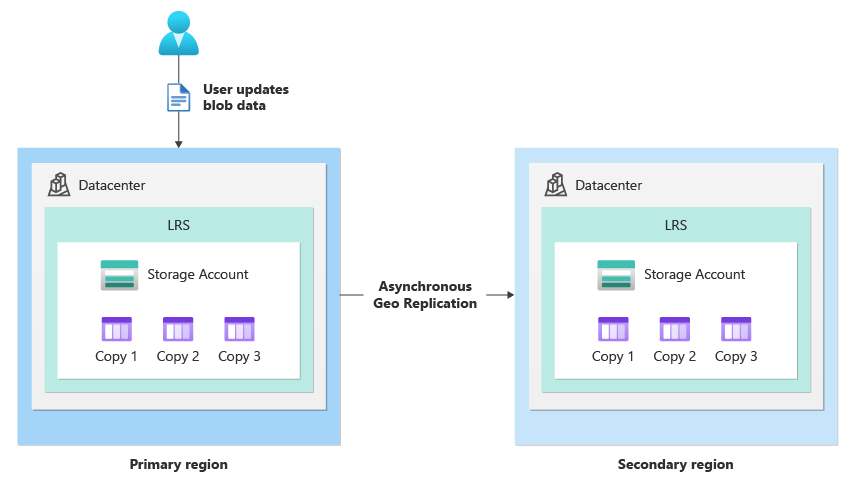

Under normal circumstances, a client writes data to a storage account in the primary region via storage service endpoints (1). The data is then copied asynchronously from the primary region to the secondary region (2). The following image shows the normal state of a storage account configured as GRS when the primary endpoints are available:

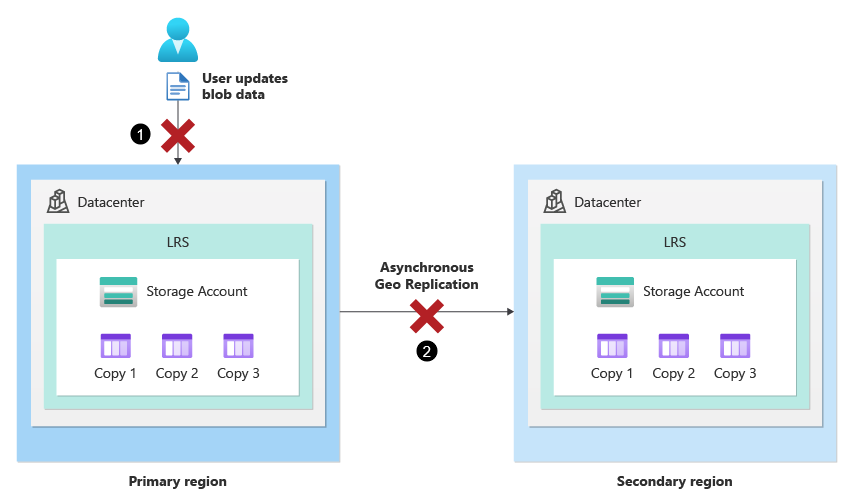

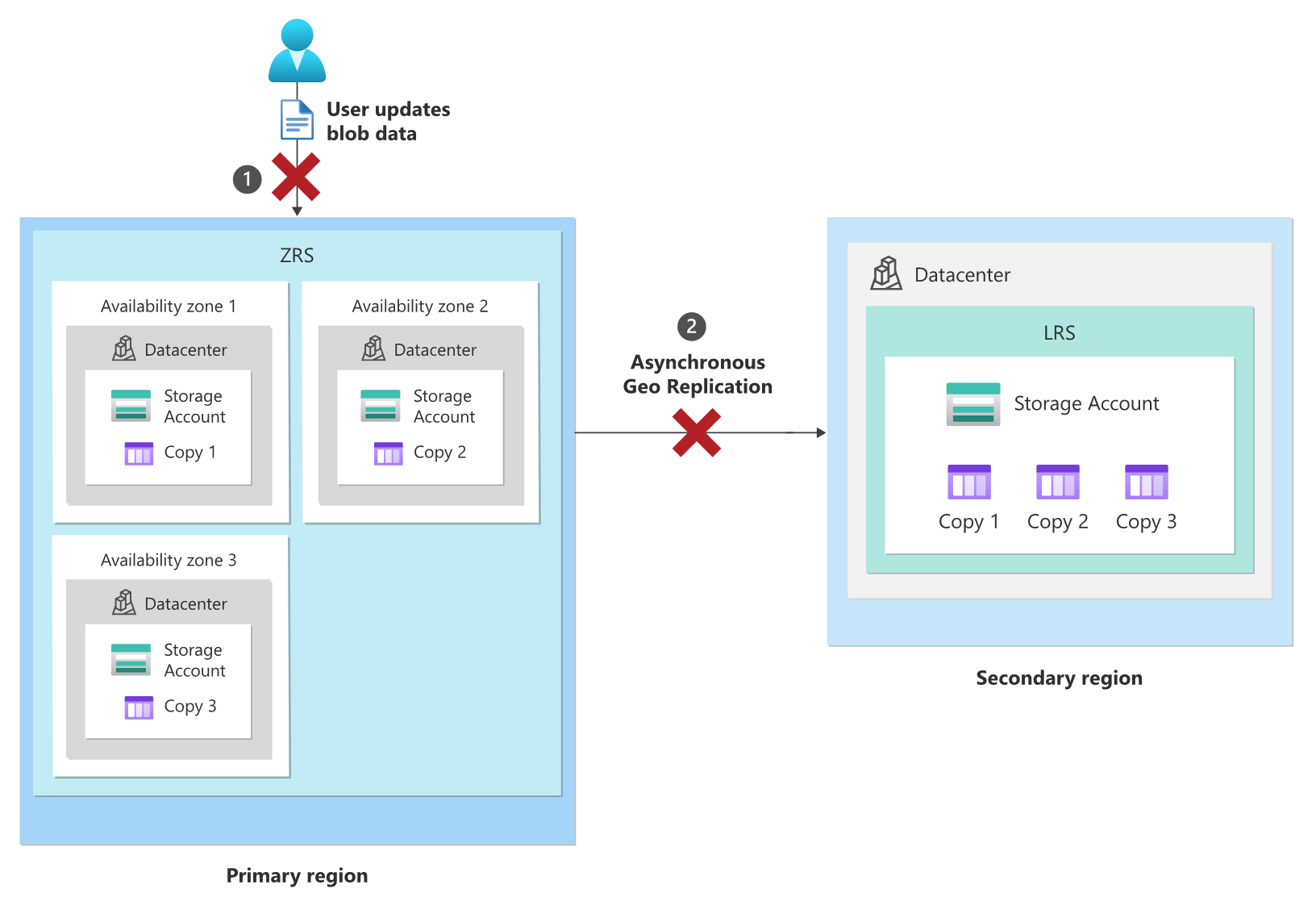

The storage service endpoints become unavailable in the primary region (GRS/RA-GRS)

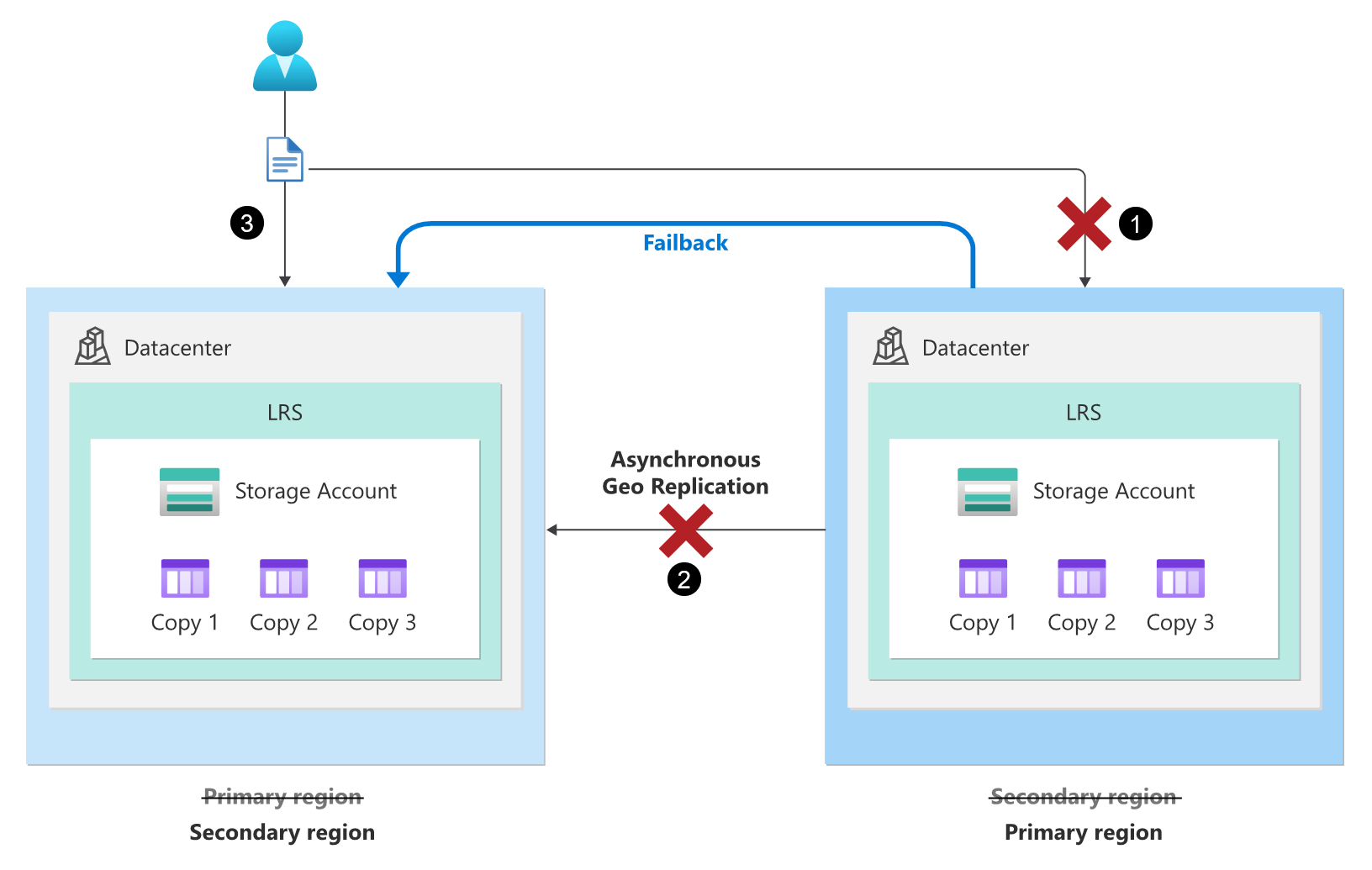

If the primary storage service endpoints become unavailable for any reason (1), the client is no longer able to write to the storage account. Depending on the underlying cause of the outage, replication to the secondary region might no longer be functioning (2), so some data loss should be expected. The following image shows the scenario where the primary endpoints become unavailable, but before recovery occurs:
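Why an outage can cause data loss follows from the asynchronous copy described above: a write is acknowledged by the primary before it reaches the secondary. The toy model below (a hypothetical `ToyGeoReplicatedAccount` class, not how Azure Storage is implemented) keeps a queue of writes that the primary has accepted but not yet copied, and assumes the worst case, in which replication stops entirely when the primary endpoints become unavailable.

```python
from collections import deque
from typing import Optional


class ToyGeoReplicatedAccount:
    """Toy model of asynchronous geo-replication (illustrative only)."""

    def __init__(self) -> None:
        self.primary: dict = {}
        self.secondary: dict = {}
        self.pending: deque = deque()  # (key, value) pairs accepted but not yet copied
        self.primary_available = True

    def write(self, key: str, value: str) -> None:
        if not self.primary_available:
            raise ConnectionError("primary endpoints are unavailable")
        self.primary[key] = value          # (1) the primary acknowledges the client write
        self.pending.append((key, value))  # (2) queued for asynchronous copy

    def replicate(self, max_items: Optional[int] = None) -> None:
        """Copy pending writes to the secondary in order, up to max_items of them."""
        count = len(self.pending) if max_items is None else min(max_items, len(self.pending))
        for _ in range(count):
            key, value = self.pending.popleft()
            self.secondary[key] = value

    def primary_outage(self) -> list:
        """Worst case: replication stops, so every pending write is lost."""
        self.primary_available = False
        lost = list(self.pending)
        self.pending.clear()
        return lost


acct = ToyGeoReplicatedAccount()
acct.write("a.txt", "v1")
acct.write("b.txt", "v1")
acct.replicate(max_items=1)  # only a.txt reaches the secondary
acct.write("c.txt", "v1")
lost = acct.primary_outage()
print(lost)            # [('b.txt', 'v1'), ('c.txt', 'v1')]
print(acct.secondary)  # {'a.txt': 'v1'}
```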

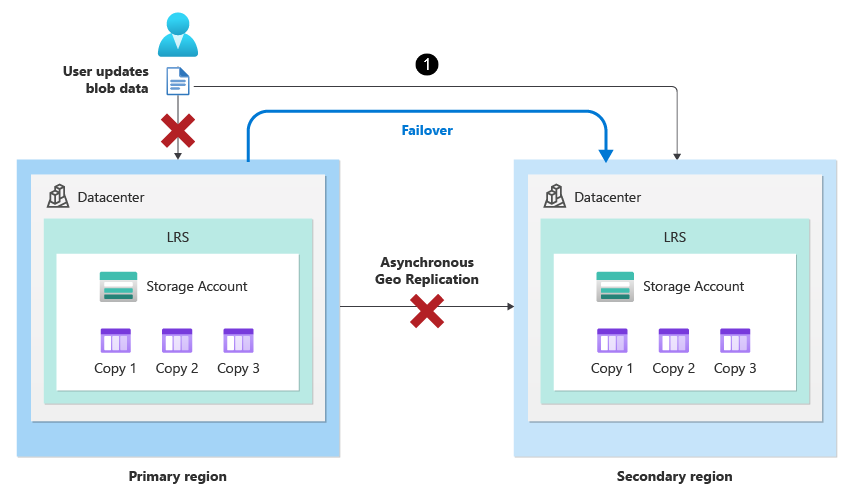

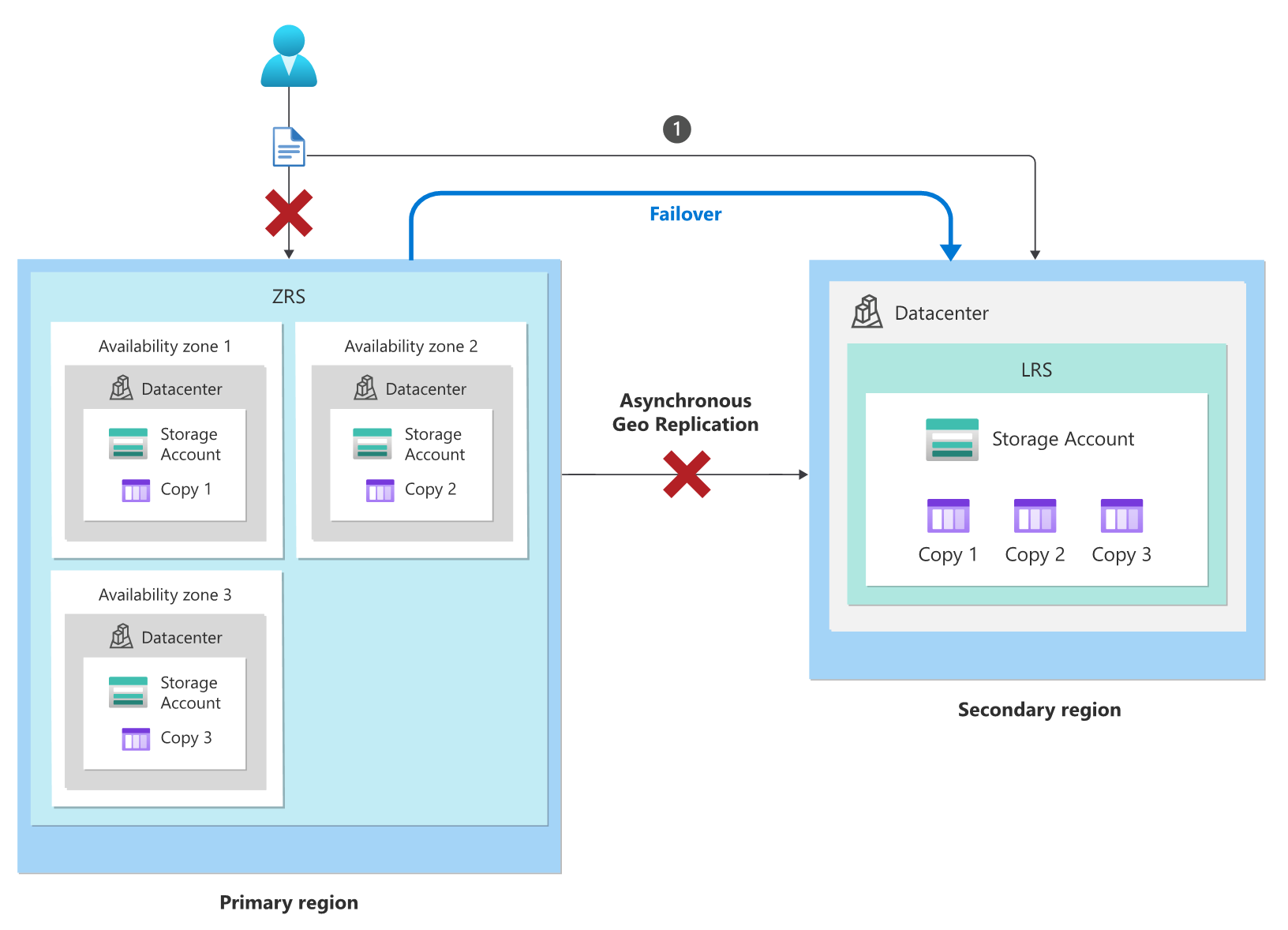

The failover process (GRS/RA-GRS)

To restore write access to your data, you can initiate a failover. Customer-managed failover typically takes about an hour. The storage service endpoint URIs for blobs, tables, queues, and files remain unchanged, but their DNS entries are changed to point to the secondary region, as shown in the following image:

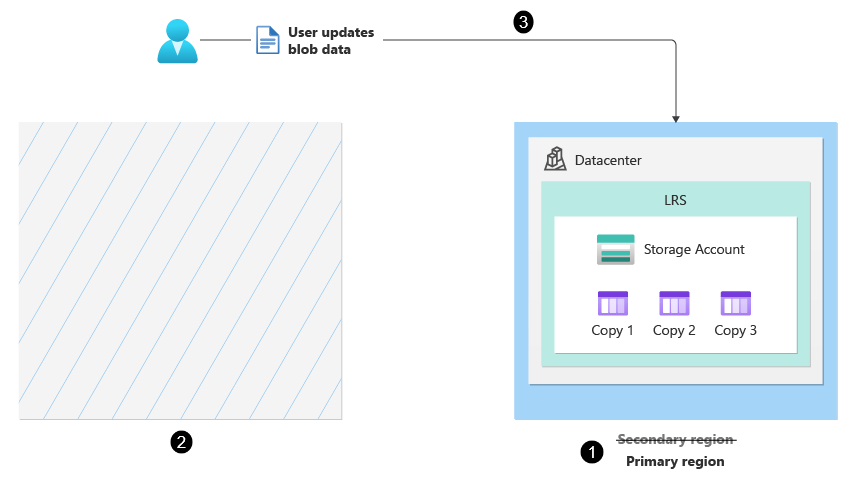

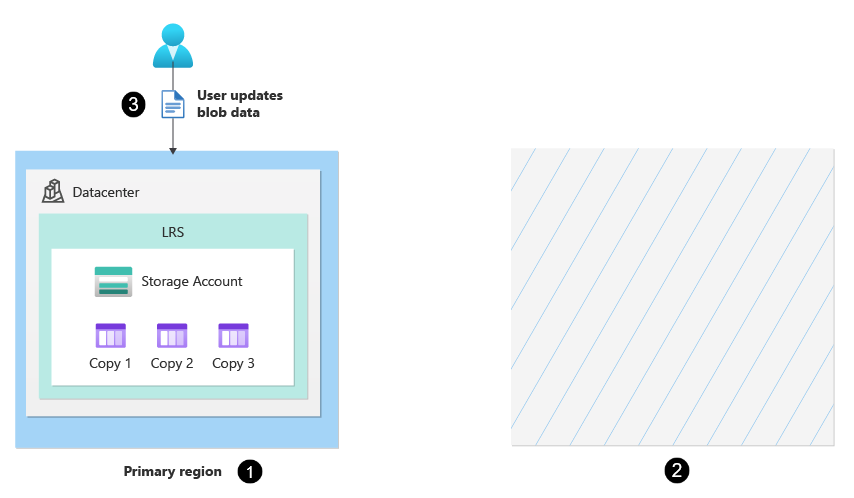

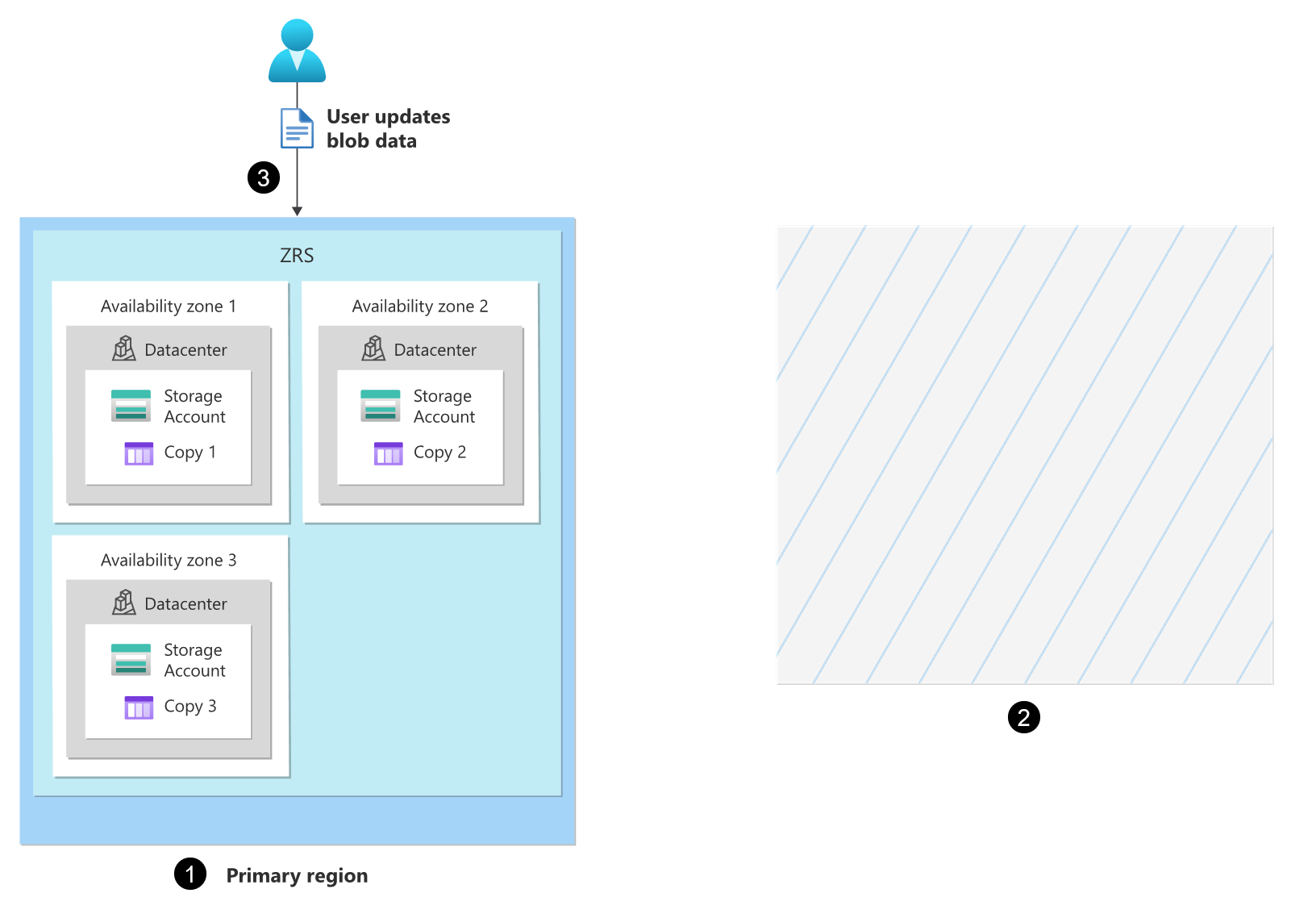
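The DNS switch described above is why clients don't need new endpoint URIs after a failover. In this sketch a plain dictionary stands in for DNS and maps each hostname to a hypothetical region label; real resolution goes through records that Azure manages. The `<account>.<service>.core.windows.net` hostname format is the one used in the Azure public cloud; the account name `contosostorage` and the helper functions are hypothetical.

```python
SERVICES = ("blob", "table", "queue", "file")


def endpoint_url(account: str, service: str) -> str:
    """Public-cloud endpoint format; the URI itself doesn't change during failover."""
    return f"https://{account}.{service}.core.windows.net"


def fail_over_dns(dns: dict[str, str], account: str, new_primary_region: str) -> dict[str, str]:
    """Return a copy of a toy DNS table with every service hostname pointed at new_primary_region."""
    updated = dict(dns)
    for service in SERVICES:
        updated[f"{account}.{service}.core.windows.net"] = new_primary_region
    return updated


account = "contosostorage"  # hypothetical account name
dns = {f"{account}.{s}.core.windows.net": "primary-region" for s in SERVICES}
urls_before = [endpoint_url(account, s) for s in SERVICES]

dns = fail_over_dns(dns, account, "secondary-region")
urls_after = [endpoint_url(account, s) for s in SERVICES]

print(urls_before == urls_after)  # True: clients keep the same URIs
print(set(dns.values()))          # {'secondary-region'}
```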

After the failover is complete, the original secondary becomes the new primary (1), and the copy of the storage account in the original primary is deleted (2). The storage account is configured as LRS in the new primary region, and is no longer geo-redundant. Users can resume writing data to the storage account (3), as shown in this image:

To resume replication to a new secondary region, reconfigure the account for geo-redundancy.

Important

Keep in mind that converting a locally redundant storage account to use geo-redundancy takes time and incurs additional cost. For more information, see The time and cost of failing over.

After you reconfigure the account to use GRS, Azure begins copying your data asynchronously to the new secondary region (1), as shown in this image:

Read access to the new secondary region isn't available again until the issue causing the original outage is resolved.

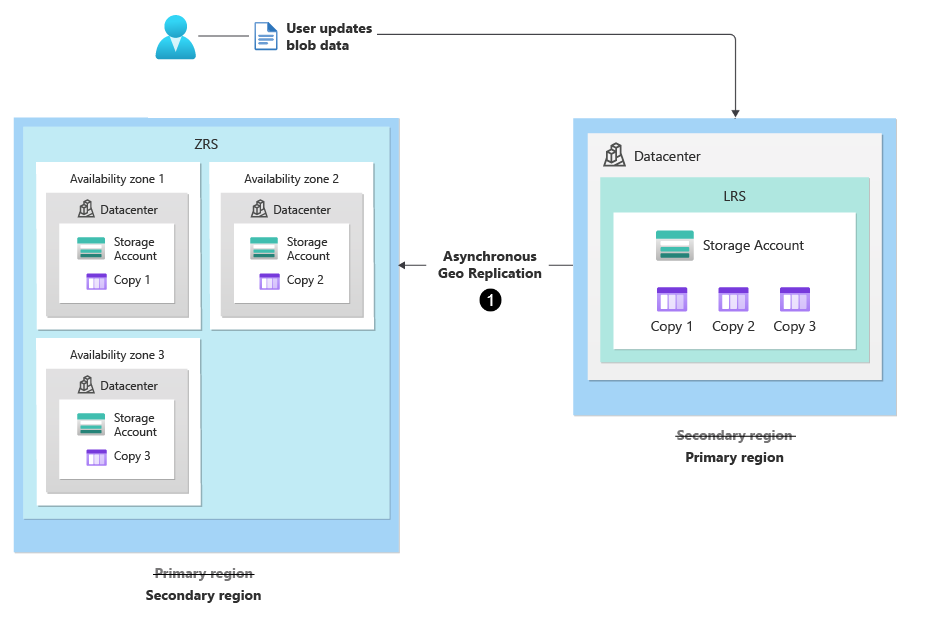

The failback process (GRS/RA-GRS)

Warning

After your account is reconfigured for geo-redundancy, it might take a significant amount of time before the data in the new primary region is fully copied to the new secondary.

To avoid major data loss, check the value of the Last Sync Time property before failing back. Compare the last sync time to the last time data was written to the new primary to evaluate potential data loss.

After the issue causing the original outage is resolved, you can initiate a failback to the original primary region. The failback process is the same as the failover process described previously.

Important points to consider:

- With customer-initiated failover and failback, your data isn't allowed to finish replicating to the secondary region during the failback process. Therefore, it's important to check the value of the Last Sync Time property before failing back.

- The DNS entries for the storage service endpoints are switched. The endpoints within the secondary region become the new primary endpoints for your storage account.
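Because replication isn't allowed to finish during failback, it can help to gate the failback on the account's geo-replication statistics. The sketch below is a hypothetical policy check, not an Azure API: it assumes the status strings `Live` and `Bootstrap` (the latter while the initial copy to the new secondary is still running) and a caller-chosen `max_acceptable_loss`. In the `azure-mgmt-storage` Python package, these values are expected on the `geo_replication_stats` attribute (`status`, `last_sync_time`) of the account returned by `storage_accounts.get_properties(..., expand="geoReplicationStats")`; confirm this against the SDK version you use.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def ready_to_fail_back(
    status: str,
    last_sync_time: Optional[datetime],
    last_write_time: datetime,
    max_acceptable_loss: timedelta = timedelta(0),
) -> bool:
    """Hypothetical pre-failback check based on geo-replication statistics."""
    if status != "Live":
        # e.g. "Bootstrap" while the initial copy to the new secondary is still running
        return False
    if last_sync_time is None:
        return False  # no completed sync, so nothing is known to be replicated
    at_risk = max(timedelta(0), last_write_time - last_sync_time)
    return at_risk <= max_acceptable_loss


now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)  # hypothetical timestamps
print(ready_to_fail_back("Bootstrap", now, now))                      # False
print(ready_to_fail_back("Live", now - timedelta(minutes=5), now))    # False
print(ready_to_fail_back("Live", now - timedelta(minutes=5), now,
                         max_acceptable_loss=timedelta(minutes=10)))  # True
print(ready_to_fail_back("Live", now, now - timedelta(minutes=1)))    # True
```

Setting `max_acceptable_loss` to zero only allows a failback once the last sync covers the most recent write; a larger value trades some data loss for a faster return to the original primary.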

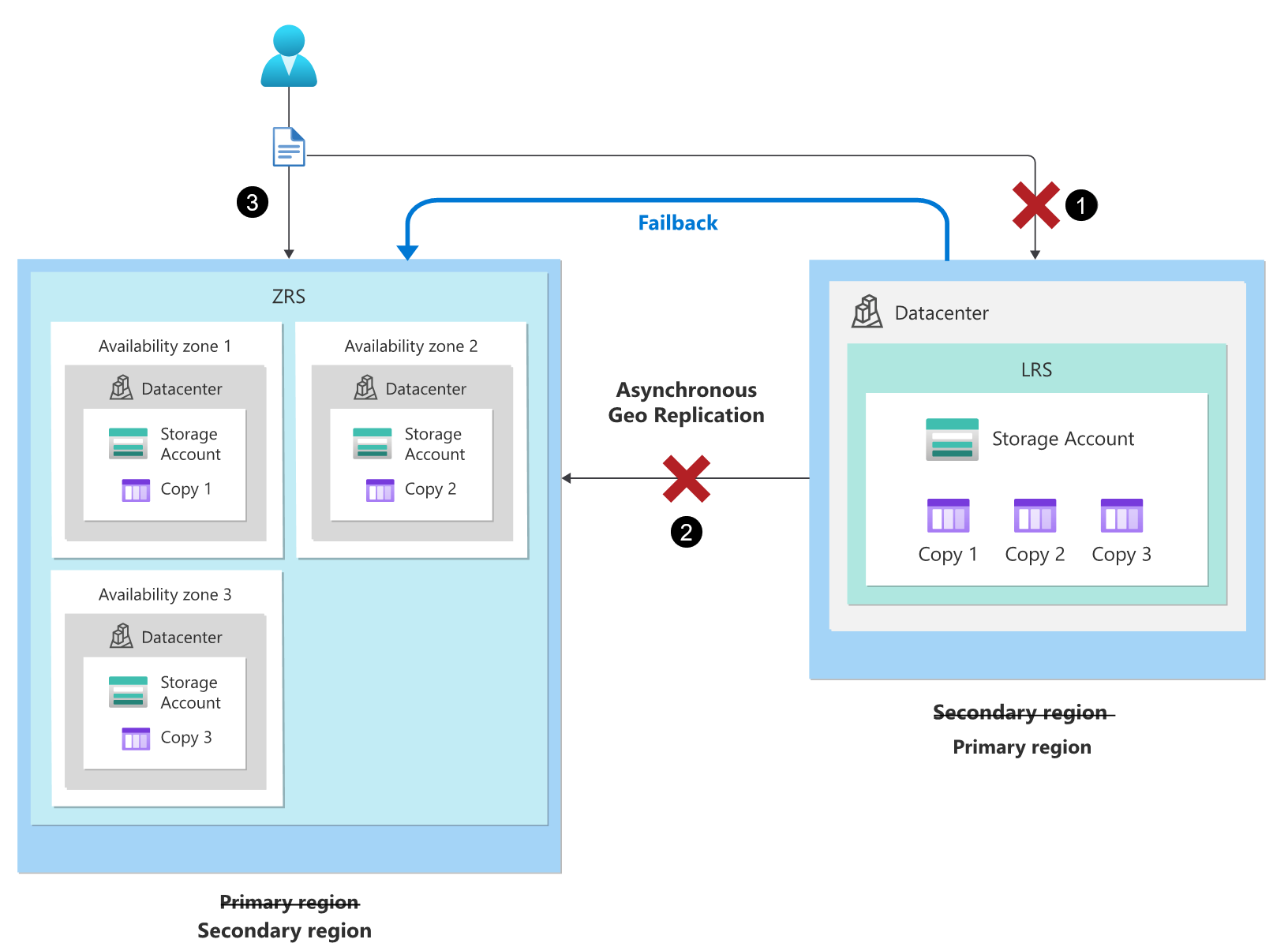

After the failback is complete, the original primary region becomes the primary region again (1), and the copy of the storage account in the original secondary is deleted (2). The storage account is configured as locally redundant in the primary region and is no longer geo-redundant. Users can resume writing data to the storage account (3), as shown in this image:

To resume replication to the original secondary region, reconfigure the account for geo-redundancy.

Important

Keep in mind that converting a locally redundant storage account to use geo-redundancy takes time and incurs additional cost. For more information, see The time and cost of failing over.

After you reconfigure the account as GRS, replication to the original secondary region resumes, as shown in this image: