
Translator is an Azure AI service that enables you to perform language translation and other language-related operations. In this tutorial, you'll learn how to use Translator to build intelligent, multi-language solutions on Azure Synapse Analytics.

This tutorial demonstrates how to use Translator with MMLSpark to:

- Translate text

- Transliterate text

- Detect language

- Break sentence

- Dictionary lookup

- Dictionary examples

If you don't have an Azure subscription, create a trial account before you begin.

Prerequisites

- Azure Synapse Analytics workspace with an Azure Data Lake Storage Gen2 storage account configured as the default storage. You need the Storage Blob Data Contributor role on the Data Lake Storage Gen2 file system that you work with.

- Spark pool in your Azure Synapse Analytics workspace. For details, see Create a Spark pool in Azure Synapse.

- Pre-configuration steps described in the tutorial Configure Azure AI services in Azure Synapse.

Get started

Open Synapse Studio and create a new notebook. To get started, import MMLSpark.

```python
import mmlspark
from mmlspark.cognitive import *
from notebookutils import mssparkutils
from pyspark.sql.functions import col, flatten
```

Configure translator

Use the linked Translator service that you configured in the pre-configuration steps.

```python
ai_service_name = "<Your linked service for translator>"
```

Translate Text

The core operation of the Translator service is to translate text.

```python
# One row whose "text" column holds two strings to translate.
df = spark.createDataFrame([
    (["Hello, what is your name?", "Bye"],)
], ["text",])

translate = (Translate()
    .setLinkedService(ai_service_name)
    .setTextCol("text")
    .setToLanguage(["zh-Hans", "fr"])
    .setOutputCol("translation")
    .setConcurrency(5))

display(translate
    .transform(df)
    # Each input string yields its own list of translations; merge them into one array.
    .withColumn("translation", flatten(col("translation.translations")))
    # Keep only the translated text of each entry.
    .withColumn("translation", col("translation.text"))
    .select("translation"))
```

Expected results

```
["你好,你叫什么名字?","Bonjour, quel est votre nom?","再见","Au revoir"]
```
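The two `withColumn` calls in the translate cell reshape a nested result. As an illustration only, the following pure-Python sketch mimics those steps on a hand-written mock shaped like a Translator v3 `translate` response: one entry per input string, each with a `translations` list holding one `{text, to}` object per target language. The mock texts are copied from the expected results (they are not live service output), and `extract_translated_texts` is a hypothetical helper, not part of MMLSpark.

```python
# Hand-written mock of the "translation" column for the two inputs above.
mock_translation_column = [
    {"translations": [
        {"text": "你好,你叫什么名字?", "to": "zh-Hans"},
        {"text": "Bonjour, quel est votre nom?", "to": "fr"},
    ]},
    {"translations": [
        {"text": "再见", "to": "zh-Hans"},
        {"text": "Au revoir", "to": "fr"},
    ]},
]


def extract_translated_texts(translation_column):
    # Like col("translation.translations"): one inner list per input string.
    nested = [entry["translations"] for entry in translation_column]
    # Like flatten(...): concatenate the inner lists, preserving order.
    flat = [item for inner in nested for item in inner]
    # Like col("translation.text"): project the text field of every item.
    return [item["text"] for item in flat]


print(extract_translated_texts(mock_translation_column))
# → ['你好,你叫什么名字?', 'Bonjour, quel est votre nom?', '再见', 'Au revoir']
```

Because `flatten` keeps the original order, all target languages for the first input appear before those for the second, which matches the expected results.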

Transliterate Text

Transliteration is the process of converting a word or phrase from the script (alphabet) of one language to another based on phonetic similarity.

```python
transliterateDf = spark.createDataFrame([
    (["こんにちは", "さようなら"],)
], ["text",])

transliterate = (Transliterate()
    .setLinkedService(ai_service_name)
    .setLanguage("ja")
    .setFromScript("Jpan")
    .setToScript("Latn")
    .setTextCol("text")
    .setOutputCol("result"))

display(transliterate
    .transform(transliterateDf)
    .withColumn("text", col("result.text"))
    .withColumn("script", col("result.script"))
    .select("text", "script"))
```

Expected results

| text | script |
|---|---|
| ["Kon'nichiwa","sayonara"] | ["Latn","Latn"] |
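The `Transliterate` transformer calls the Translator service over REST on your behalf. To make the parameters above more concrete, here is a minimal sketch, assuming the public Translator v3 `transliterate` operation and its `language`, `fromScript`, and `toScript` query parameters, that builds (but does not send) an equivalent request for the same inputs. `build_transliterate_request` is a hypothetical helper; the request MMLSpark actually issues may differ in detail, and the authentication headers supplied through the linked service are omitted.

```python
import json
from urllib.parse import urlencode

# Global Translator v3 endpoint (assumption: your resource may use a different one).
TRANSLATOR_ENDPOINT = "https://api.cognitive.microsofttranslator.com"


def build_transliterate_request(texts, language, from_script, to_script):
    """Return (url, json_body) for a Translator v3 transliterate call without sending it."""
    query = urlencode({
        "api-version": "3.0",
        "language": language,
        "fromScript": from_script,
        "toScript": to_script,
    })
    url = f"{TRANSLATOR_ENDPOINT}/transliterate?{query}"
    # The v3 API expects a JSON array with one {"Text": ...} object per input string.
    body = json.dumps([{"Text": t} for t in texts], ensure_ascii=False)
    return url, body


url, body = build_transliterate_request(["こんにちは", "さようなら"], "ja", "Jpan", "Latn")
print(url)
# → https://api.cognitive.microsofttranslator.com/transliterate?api-version=3.0&language=ja&fromScript=Jpan&toScript=Latn
print(body)
# → [{"Text": "こんにちは"}, {"Text": "さようなら"}]
```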

Detect Language

If you know that you'll need translation but don't know the language of the text that will be sent to the Translator service, you can use the language detection operation.

```python
detectDf = spark.createDataFrame([
    (["Hello, what is your name?"],)
], ["text",])

detect = (Detect()
    .setLinkedService(ai_service_name)
    .setTextCol("text")
    .setOutputCol("result"))

display(detect
    .transform(detectDf)
    .withColumn("language", col("result.language"))
    .select("language"))
```

Expected results

```
["en"]
```

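Language detection is often a routing step in front of translation. The following is a minimal sketch, assuming detection results that carry a `language` code as in the output above, of how you might keep only texts that are not already in the target language. The inputs and detection results are mocked (not live service output), and `pending_translations` is a hypothetical helper.

```python
def pending_translations(texts, detect_results, target_language):
    """Pair each text with its detected language, dropping texts already in target_language."""
    pending = []
    for text, result in zip(texts, detect_results):
        if result["language"] != target_language:
            # Keep the detected code so a later step can reuse it as the source language.
            pending.append((text, result["language"]))
    return pending


# Hypothetical inputs with mock detection results.
texts = ["Hello, what is your name?", "Bonjour, quel est votre nom?"]
mock_results = [{"language": "en"}, {"language": "fr"}]

print(pending_translations(texts, mock_results, "fr"))
# → [('Hello, what is your name?', 'en')]
```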
Break Sentence

The break sentence operation identifies the positions of sentence boundaries in a piece of text and returns the length of each sentence.

```python
bsDf = spark.createDataFrame([
    (["Hello, what is your name?"],)
], ["text",])

breakSentence = (BreakSentence()
    .setLinkedService(ai_service_name)
    .setTextCol("text")
    .setOutputCol("result"))

display(breakSentence
    .transform(bsDf)
    # Each input string has its own array of sentence lengths; merge them into one array.
    .withColumn("sentLen", flatten(col("result.sentLen")))
    .select("sentLen"))
```

Expected results

```
[25]
```

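The `sentLen` values are lengths, not sentences, so you typically slice the original text with them. Here is a minimal sketch, assuming the lengths count characters the same way Python string indexing does (true for plain ASCII text such as the sample; it may not hold for characters outside the Basic Multilingual Plane). `split_by_sentence_lengths` is a hypothetical helper, and the two-sentence lengths below are made up for illustration.

```python
def split_by_sentence_lengths(text, sent_lengths):
    """Slice text into consecutive sentences using a sentLen-style list of lengths."""
    sentences = []
    start = 0
    for length in sent_lengths:
        sentences.append(text[start:start + length])
        start += length
    return sentences


print(split_by_sentence_lengths("Hello, what is your name?", [25]))
# → ['Hello, what is your name?']
print(split_by_sentence_lengths("Hi. Bye.", [4, 4]))  # hypothetical lengths
# → ['Hi. ', 'Bye.']
```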
Dictionary lookup (alternate translations)

With the dictionary lookup endpoint, you can get alternate translations for a word or phrase.

```python
dictDf = spark.createDataFrame([
    (["fly"],)
], ["text",])

dictionaryLookup = (DictionaryLookup()
    .setLinkedService(ai_service_name)
    .setFromLanguage("en")
    .setToLanguage("es")
    .setTextCol("text")
    .setOutputCol("result"))

display(dictionaryLookup
    .transform(dictDf)
    # Merge the per-input lists of candidate translations into one array.
    .withColumn("translations", flatten(col("result.translations")))
    # Keep the normalized form of each candidate.
    .withColumn("normalizedTarget", col("translations.normalizedTarget"))
    .select("normalizedTarget"))
```

Expected results

| normalizedTarget |
|---|
| ["volar","mosca","operan","pilotar","moscas","marcha"] |
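In the Translator v3 dictionary lookup response, each candidate also carries fields such as `posTag` (part of speech) and `confidence`, which the cell above drops. As a sketch of one way to use them, the following keeps the highest-confidence target per part of speech and turns the result into (source, translation) pairs like those passed to the dictionary examples operation in the next section. The candidate targets appear in the expected results above, but the `posTag` and `confidence` values are invented for illustration, and `best_target_per_pos` is a hypothetical helper.

```python
def best_target_per_pos(candidates):
    """Return {posTag: normalizedTarget}, keeping the highest-confidence candidate per tag."""
    best = {}
    for cand in candidates:
        current = best.get(cand["posTag"])
        if current is None or cand["confidence"] > current["confidence"]:
            best[cand["posTag"]] = cand
    return {pos: cand["normalizedTarget"] for pos, cand in best.items()}


# Mock candidates: posTag and confidence values are invented.
mock_candidates = [
    {"normalizedTarget": "volar", "posTag": "VERB", "confidence": 0.40},
    {"normalizedTarget": "mosca", "posTag": "NOUN", "confidence": 0.20},
    {"normalizedTarget": "pilotar", "posTag": "VERB", "confidence": 0.05},
    {"normalizedTarget": "moscas", "posTag": "NOUN", "confidence": 0.10},
]

best = best_target_per_pos(mock_candidates)
# (source, translation) pairs in the shape of the textAndTranslation column.
pairs = [("fly", target) for target in best.values()]
print(pairs)
# → [('fly', 'volar'), ('fly', 'mosca')]
```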

Dictionary examples (translations in context)

After you've performed a dictionary lookup, you can pass the source text and translation to the dictionary/examples endpoint to get a list of examples that show both terms in the context of a sentence or phrase.

```python
# Each element pairs a source term with one of its translations.
dictDf = spark.createDataFrame([
    ([("fly", "volar")],)
], ["textAndTranslation",])

dictionaryExamples = (DictionaryExamples()
    .setLinkedService(ai_service_name)
    .setFromLanguage("en")
    .setToLanguage("es")
    .setTextAndTranslationCol("textAndTranslation")
    .setOutputCol("result"))

display(dictionaryExamples
    .transform(dictDf)
    # Merge the per-pair example lists into one array.
    .withColumn("examples", flatten(col("result.examples")))
    .select("examples"))
```

Expected results

```
[{"sourcePrefix":"I mean, for a guy who could ","sourceSuffix":".","targetPrefix":"Quiero decir, para un tipo que podía ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"Now it's time to make you ","sourceSuffix":".","targetPrefix":"Ahora es hora de que te haga ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"One happy thought will make you ","sourceSuffix":".","targetPrefix":"Uno solo te hará ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"They need machines to ","sourceSuffix":".","targetPrefix":"Necesitan máquinas para ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"That should really ","sourceSuffix":".","targetPrefix":"Eso realmente debe ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"It sure takes longer when you can't ","sourceSuffix":".","targetPrefix":"Lleva más tiempo cuando no puedes ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"I have to ","sourceSuffix":" home in the morning.","targetPrefix":"Tengo que ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":" a casa por la mañana."},{"sourcePrefix":"You taught me to ","sourceSuffix":".","targetPrefix":"Me enseñaste a ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"I think you should ","sourceSuffix":" with the window closed.","targetPrefix":"Creo que debemos ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":" con la ventana cerrada."},{"sourcePrefix":"They look like they could ","sourceSuffix":".","targetPrefix":"Parece que pudieran ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"But you can ","sourceSuffix":", for instance?","targetPrefix":"Que puedes ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":", por ejemplo."},{"sourcePrefix":"At least until her kids can be able to ","sourceSuffix":".","targetPrefix":"Al menos hasta que sus hijos sean capaces de ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"I thought you could ","sourceSuffix":".","targetPrefix":"Pensé que podías ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"I was wondering what it would be like to ","sourceSuffix":".","targetPrefix":"Me preguntaba cómo sería ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."},{"sourcePrefix":"But nobody else can ","sourceSuffix":".","targetPrefix":"Pero nadie puede ","targetTerm":"volar","sourceTerm":"fly","targetSuffix":"."}]
```
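Each example splits a sentence into a prefix, the term, and a suffix. A minimal sketch of reassembling full source and target sentences from one entry, using the first example from the expected results above as input; `assemble_example` is a hypothetical helper, not part of MMLSpark.

```python
def assemble_example(example):
    """Rebuild the full source and target sentences from prefix, term, and suffix fields."""
    source = example["sourcePrefix"] + example["sourceTerm"] + example["sourceSuffix"]
    target = example["targetPrefix"] + example["targetTerm"] + example["targetSuffix"]
    return source, target


# First entry of the expected results above.
example = {
    "sourcePrefix": "I mean, for a guy who could ",
    "sourceSuffix": ".",
    "targetPrefix": "Quiero decir, para un tipo que podía ",
    "targetTerm": "volar",
    "sourceTerm": "fly",
    "targetSuffix": ".",
}

print(assemble_example(example))
# → ('I mean, for a guy who could fly.', 'Quiero decir, para un tipo que podía volar.')
```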

Clean up resources

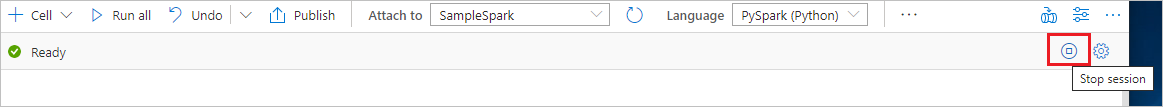

To ensure that the Spark instance is shut down, end any connected sessions (notebooks). The pool shuts down when the idle time specified in the Apache Spark pool is reached. You can also select Stop session from the status bar at the upper right of the notebook.