Important

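Custom Commands will be retired on April 30, 2026. Since October 30, 2023, you can no longer create new Custom Commands applications in Speech Studio. Related to this change, LUIS will be retired on October 1, 2025. Since April 1, 2023, you can no longer create new LUIS resources.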

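Applications such as voice assistants listen to users and take an action in response, often speaking back to the user. They use speech to text to transcribe the user's speech, then act on the natural language understanding of that text. The action frequently includes spoken output that the assistant generates with text to speech. Devices connect to the assistant through the Speech SDK's DialogServiceConnector object.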

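Custom Commands makes it easy to build rich voice command apps optimized for voice-first interaction. It provides a unified authoring experience, an automatic hosting model, and relatively low complexity. Custom Commands helps you focus on building the best solution for your voice command scenarios.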

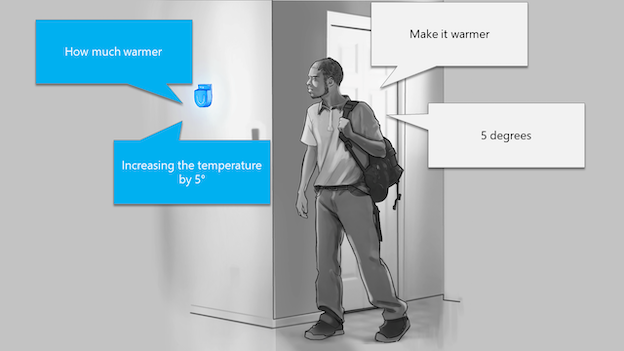

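Custom Commands is best suited to task completion or command-and-control scenarios, such as "Turn on the overhead light" or "Make it 5 degrees warmer". It is a particularly good fit for Internet of Things (IoT) devices, ambient devices, and headless devices. Examples include solutions for the hospitality, retail, and automotive industries, where you want to offer voice-controlled experiences for guests, in-store inventory management, or in-car functionality.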

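Good candidates for Custom Commands have a fixed vocabulary with a well-defined set of variables. For example, home automation tasks such as controlling a thermostat are ideal.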
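To make "a fixed vocabulary with a well-defined set of variables" more concrete, the following is a minimal Python sketch of two such commands, based on the example utterances above. It does not show how Custom Commands works internally and does not use the Speech SDK. The command names (`TurnOnOff`, `AdjustTemperature`), the regular-expression patterns, and the `parse_command` helper are all hypothetical and exist only for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    """A recognized command and the variables extracted from the utterance."""
    name: str
    parameters: dict


# Hypothetical command definitions: each has a fixed phrasing and a small,
# closed set of variables (state, device, degrees, direction).
PATTERNS = [
    (
        "TurnOnOff",
        re.compile(r"^turn (?P<state>on|off) the (?P<device>overhead light|tv|fan)$"),
    ),
    (
        "AdjustTemperature",
        re.compile(r"^make it (?P<degrees>\d+) degrees (?P<direction>warmer|cooler)$"),
    ),
]


def parse_command(utterance: str) -> Optional[Command]:
    """Return the matching Command, or None if the utterance is out of vocabulary."""
    # Normalize case and drop trailing punctuation before matching.
    text = utterance.strip().lower().rstrip(".!?")
    for name, pattern in PATTERNS:
        match = pattern.match(text)
        if match:
            return Command(name, match.groupdict())
    return None


if __name__ == "__main__":
    for phrase in ("Turn on the overhead light", "Make it 5 degrees warmer", "Tell me a joke"):
        print(phrase, "->", parse_command(phrase))
```

An utterance outside the fixed vocabulary, such as "Tell me a joke", matches no pattern and returns `None`. Open-ended requests of that kind are a poor fit for this style of command handling.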

Getting started with Custom Commands

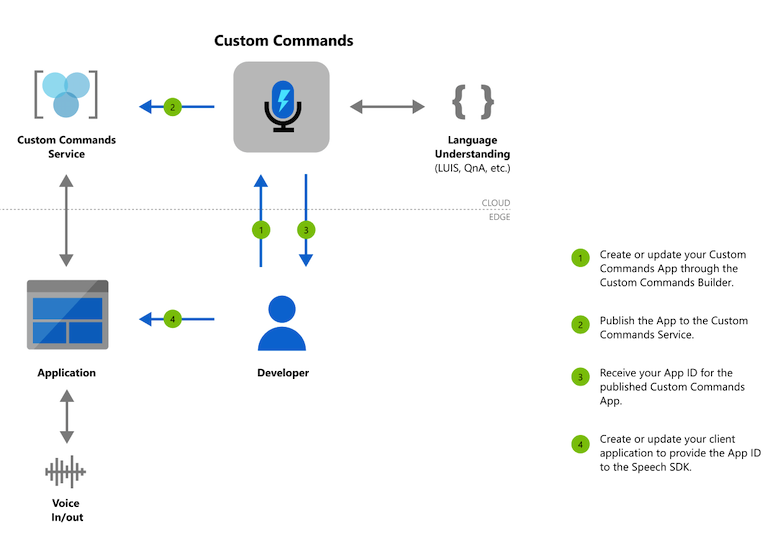

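The goal of Custom Commands is to reduce the cognitive load of learning all the different technologies involved, so that you can focus on building your voice command app. The first step in using Custom Commands is to create a Speech resource. You can then author your Custom Commands app in Speech Studio and publish it, after which an application on the device can communicate with it by using the Speech SDK.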

Authoring flow for Custom Commands

Follow our quickstart to have your first Custom Commands app running code in less than 10 minutes.

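After you complete the quickstart, explore our how-to guides for detailed steps on designing, developing, debugging, deploying, and integrating a Custom Commands application.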