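Kubernetes clusters generate a large amount of data that's collected by Container insights. Because you're charged for ingesting and retaining this data, you should configure your environment to optimize costs. Filtering out data you don't need and optimizing the configuration of the Log Analytics workspace where the data is stored can significantly reduce your monitoring costs.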

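After you analyze your collected data and determine whether you're collecting anything you don't need, you have several options for filtering it out. They range from choosing one of a set of predefined cost configurations to using different features that filter data based on specific criteria. This article walks through how to analyze and optimize your Container insights data collection.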

Enable metrics collection with Azure Monitor managed service for Prometheus.

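Container insights previously used data in Log Analytics to power the visualizations in the Azure portal. With the release of managed Prometheus, a cheaper and more efficient way to collect metrics is available, and Container insights now supports visualizations based on managed Prometheus metrics. To get started, see Switch to using managed Prometheus visualizations for Container insights.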

Analyze data ingestion

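To find your best opportunities for cost savings, analyze the amount of data collected in each table. This tells you which tables consume the most data and helps you make informed decisions about how to reduce costs.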
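In a Log Analytics workspace, this per-table analysis is commonly done with a query against the `Usage` table, for example `Usage | where IsBillable == true | summarize IngestedGB = sum(Quantity) / 1000 by DataType | sort by IngestedGB desc`, where `Quantity` is reported in MB. The following Python sketch reproduces the same aggregation offline on a few rows shaped like `Usage` records, to show how each table's share of the total is derived. All values, and the table name `ExampleNonBillable`, are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows modeled on the Log Analytics Usage table:
# (DataType, Quantity in MB, IsBillable). All values are invented.
usage_rows = [
    ("ContainerLogV2", 5200.0, True),
    ("KubePodInventory", 900.0, True),
    ("Perf", 1400.0, True),
    ("KubeEvents", 500.0, True),
    ("ContainerLogV2", 3800.0, True),
    ("ExampleNonBillable", 50.0, False),  # hypothetical non-billable table
]


def rank_tables_by_ingestion(rows):
    """Sum billable MB per table and return (table, GB, percent) sorted descending."""
    totals_mb = defaultdict(float)
    for data_type, quantity_mb, is_billable in rows:
        if is_billable:  # mirrors `where IsBillable == true`
            totals_mb[data_type] += quantity_mb
    grand_total = sum(totals_mb.values())
    ranked = sorted(totals_mb.items(), key=lambda item: item[1], reverse=True)
    # Convert MB to GB with /1000, matching the query above.
    return [
        (table, mb / 1000, round(100 * mb / grand_total, 1))
        for table, mb in ranked
    ]


for table, gb, pct in rank_tables_by_ingestion(usage_rows):
    print(f"{table:<18} {gb:6.2f} GB  {pct:5.1f}%")
```

Sorting by volume puts the tables with the most savings potential first; in this made-up data, ContainerLogV2 dominates.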

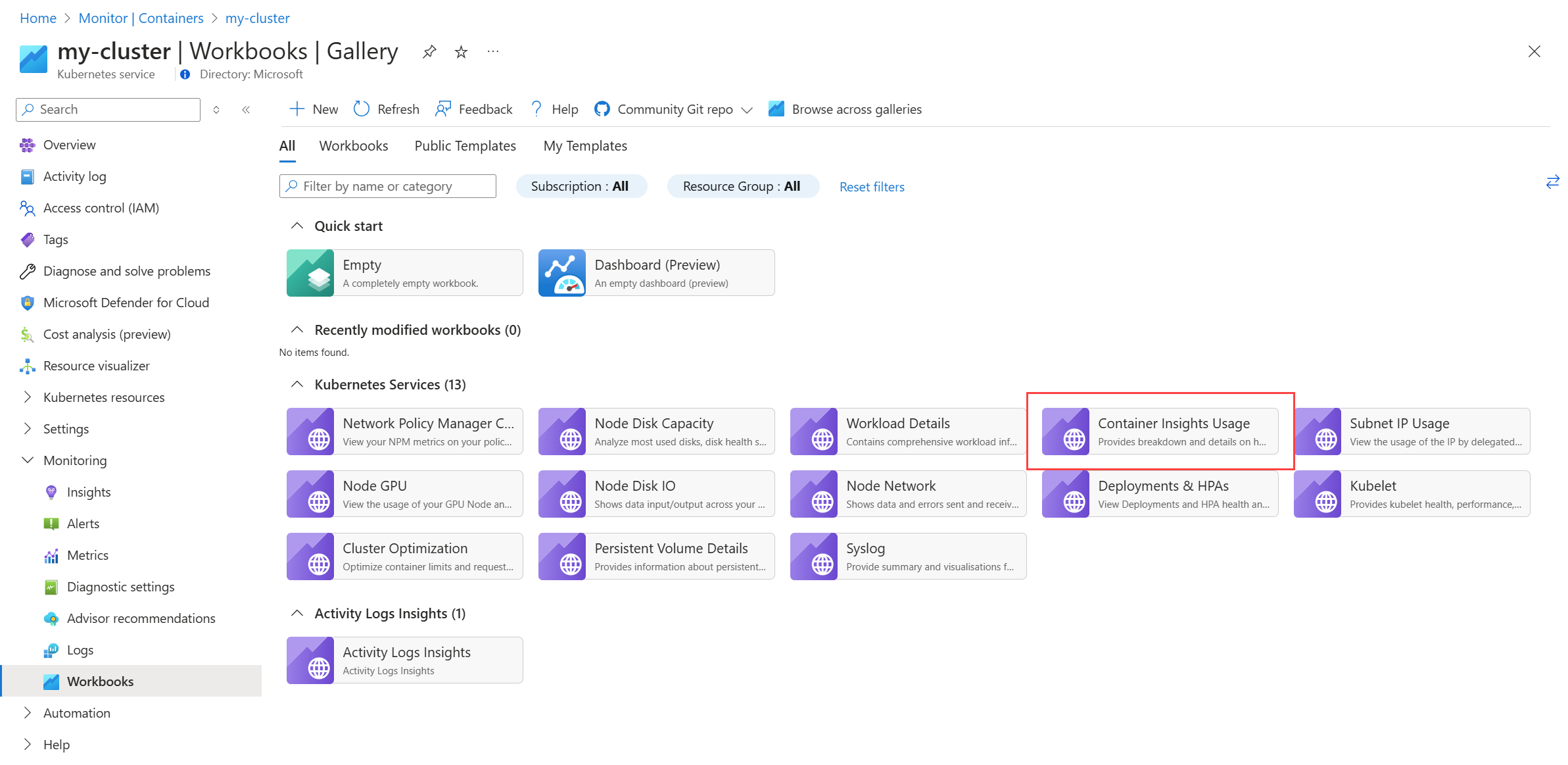

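You can visualize the amount of data ingested in each workspace by using the "Container Insights Usage" workbook, which is available from the Workbooks page of a monitored cluster.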

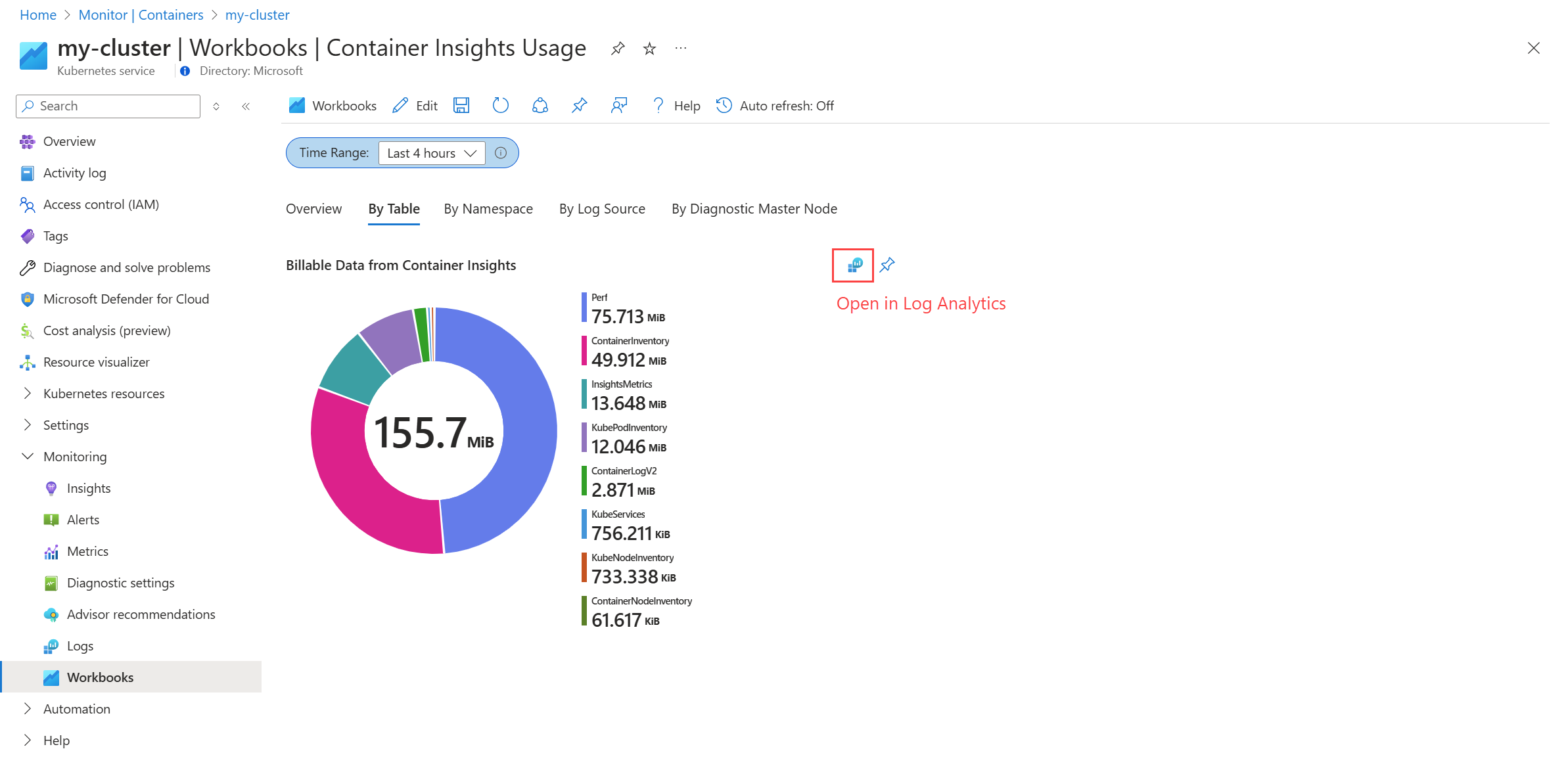

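The report lets you view data usage by different categories, such as table, namespace, and log source. Use these views to identify any data you're not using that you can filter out to reduce costs.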

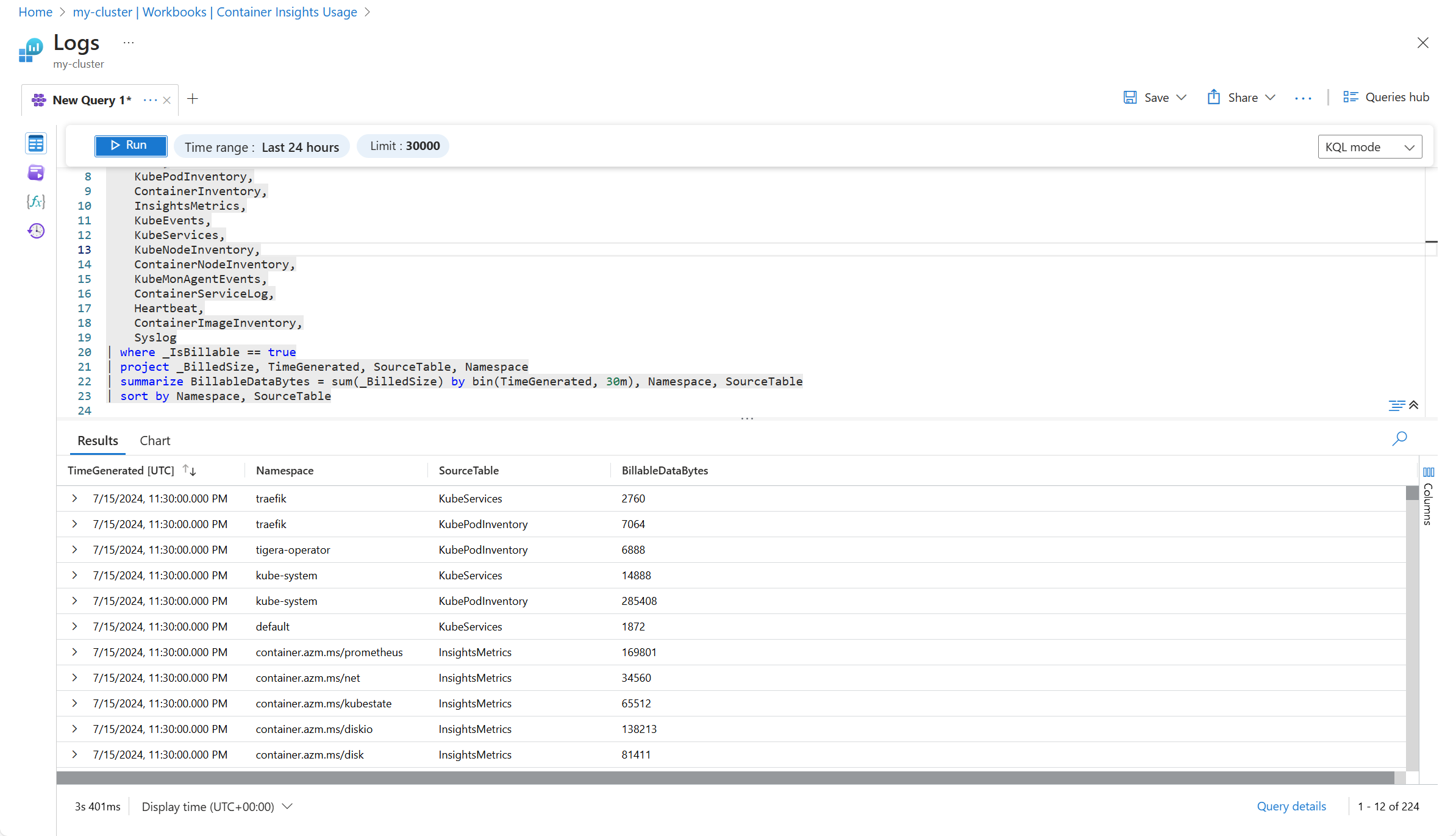

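Select the option to open the query in Log Analytics, where you can perform more detailed analysis, including viewing the individual records being collected. For other queries you can use to analyze collected data, see Query logs from Container insights.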

For example, the following screenshot shows a modification of the log query used for By Table that displays data by namespace and table.
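To make the namespace-and-table breakdown concrete, the following Python sketch groups hypothetical records that carry a namespace, a table name, and a billed size in bytes (similar in spirit to the `_BilledSize` column that Log Analytics records expose) and lists the largest combinations first. The records are invented for illustration.

```python
from collections import Counter

# Hypothetical records: (namespace, table, billed size in bytes).
records = [
    ("kube-system", "ContainerLogV2", 4_000_000),
    ("app-frontend", "ContainerLogV2", 2_500_000),
    ("kube-system", "KubeEvents", 300_000),
    ("app-frontend", "KubeEvents", 120_000),
    ("kube-system", "ContainerLogV2", 1_000_000),
]


def bytes_by_namespace_and_table(rows):
    """Sum billed bytes per (namespace, table), largest first."""
    totals = Counter()
    for namespace, table, billed_bytes in rows:
        totals[(namespace, table)] += billed_bytes
    return totals.most_common()


for (namespace, table), billed in bytes_by_namespace_and_table(records):
    print(f"{namespace:<14} {table:<16} {billed / 1_000_000:.2f} MB")
```

A namespace that dominates a table, such as a system namespace in this made-up data, is a natural candidate for the namespace filtering options that follow.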

Filter collected data

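After you identify data that you can filter, use the configuration options in Container insights to filter out data you don't need. Options are available to select predefined configurations, set individual parameters, and use custom log queries for detailed filtering.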

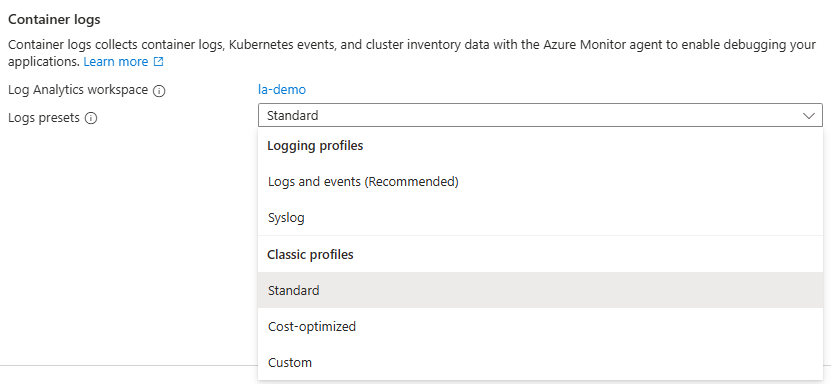

Log presets

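The simplest way to filter data is to use the log presets in "Monitor > Monitor settings" in the Azure portal. Each preset collects a different set of tables, based on different operational and cost profiles. Log presets are designed to help you quickly configure data collection for common scenarios.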
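Each set of tables ultimately corresponds to a list of streams, such as `Microsoft-ContainerLogV2` and `Microsoft-KubeEvents`, in the Container insights data collection rule (DCR). The following Python sketch works on a simplified, hypothetical dictionary loosely modeled on such a DCR (not a complete or validated DCR) to show the kind of manual change described under the filtering options: keeping `Microsoft-ContainerLogV2` while dropping `Microsoft-KubeEvents`. The helper `drop_streams` and the exact dictionary layout are assumptions; check the Stream values reference before editing a real DCR.

```python
import copy

# A simplified, hypothetical fragment loosely modeled on a Container insights DCR.
# Only the parts relevant to stream selection are included.
STREAMS = ["Microsoft-ContainerLogV2", "Microsoft-KubeEvents", "Microsoft-KubePodInventory"]
dcr = {
    "properties": {
        "dataSources": {
            "extensions": [
                {
                    "name": "ContainerInsightsExtension",
                    "extensionName": "ContainerInsights",
                    "streams": list(STREAMS),
                }
            ]
        },
        "dataFlows": [
            {"streams": list(STREAMS), "destinations": ["ciworkspace"]}
        ],
    }
}


def drop_streams(rule, unwanted):
    """Return a copy of the rule with the unwanted streams removed everywhere."""
    updated = copy.deepcopy(rule)  # leave the original rule untouched
    props = updated["properties"]
    for extension in props["dataSources"]["extensions"]:
        extension["streams"] = [s for s in extension["streams"] if s not in unwanted]
    for flow in props["dataFlows"]:
        flow["streams"] = [s for s in flow["streams"] if s not in unwanted]
    return updated


trimmed = drop_streams(dcr, {"Microsoft-KubeEvents"})
print(trimmed["properties"]["dataFlows"][0]["streams"])
```

The stream is removed from both the data source and the data flow so the two lists stay consistent.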

Filtering options

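After you choose an appropriate log preset, you can filter additional data by using the methods in the following table. Each option filters data on different criteria. When you finish configuring, you should be collecting only the data you need for analysis and alerting.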

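| Filter by | Description |
|---|---|
| Tables | To select individual tables beyond the groupings in a log preset, modify the DCR manually. For example, you might want to collect ContainerLogV2 but not KubeEvents, which is included in the same log preset. For the list of streams to use in the DCR, see Stream values. |
| Container logs | ContainerLogV2 stores the stdout/stderr records generated by containers in the cluster. While you can disable collection of the entire table by using the DCR, you can configure collection of stderr and stdout logs separately by using the cluster's ConfigMap. Because the stdout and stderr settings are independent, you can enable one and not the other. For details on filtering container logs, see Filter container log collection with ConfigMap. |
| Namespaces | Kubernetes uses namespaces to group resources in a cluster. You can filter out data from resources in specific namespaces that you don't need. With the DCR, you can filter performance data by namespace only if collection is enabled for the Perf table. Use the ConfigMap to filter stdout and stderr logs from specific namespaces. See Filter container logs for details on filtering logs by namespace, and Platform log filtering (System Kubernetes Namespaces) for details on system namespaces. |
| Pods and containers | Annotation filtering lets you filter out container logs based on annotations you add to pods. With the ConfigMap, you can specify whether stdout and stderr logs are collected for individual pods and containers. For details on updating the ConfigMap and setting annotations in pods, see Annotation based workload filtering. |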
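The ConfigMap options in the table are expressed as TOML-style settings under a `log-collection-settings` key. The following Python sketch renders such a fragment from a few parameters: stdout and stderr toggled independently, a namespace exclusion list, and annotation-based filtering. The key names are modeled on the `container-azm-ms-agentconfig` template as best understood here and should be treated as an assumption, and `render_log_collection_settings` is a hypothetical helper; compare the output with the template in Filter container log collection with ConfigMap before applying it to a cluster.

```python
def render_log_collection_settings(stdout_enabled, stderr_enabled,
                                   exclude_namespaces, filter_using_annotations):
    """Render a hypothetical log-collection-settings fragment for the agent ConfigMap.

    Key names are assumptions modeled on the container-azm-ms-agentconfig template.
    """
    def toml_bool(value):
        return "true" if value else "false"

    namespaces = ", ".join(f'"{ns}"' for ns in exclude_namespaces)
    lines = ["[log_collection_settings]"]
    # stdout and stderr are configured independently.
    for stream, enabled in (("stdout", stdout_enabled), ("stderr", stderr_enabled)):
        lines.append(f"   [log_collection_settings.{stream}]")
        lines.append(f"      enabled = {toml_bool(enabled)}")
        lines.append(f"      exclude_namespaces = [{namespaces}]")
    lines.append("   [log_collection_settings.filter_using_annotations]")
    lines.append(f"      enabled = {toml_bool(filter_using_annotations)}")
    return "\n".join(lines)


# Keep stderr, drop stdout, skip two system namespaces, and honor pod annotations.
print(render_log_collection_settings(
    stdout_enabled=False,
    stderr_enabled=True,
    exclude_namespaces=["kube-system", "gatekeeper-system"],
    filter_using_annotations=True,
))
```

Generating the fragment from parameters keeps the stdout and stderr namespace lists in sync, which is easy to get wrong when editing the ConfigMap by hand.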

Transformations

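Ingestion-time transformations let you apply a KQL query to filter and transform data in the Azure Monitor pipeline before it's stored in the Log Analytics workspace. This way, you can filter data on criteria that the other options can't handle.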

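For example, you might filter container logs based on the log level in ContainerLogV2. You could add a transformation to the Container insights DCR that performs the function in the following diagram. In this example, only error and critical level events are collected, and all other events are ignored.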
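In a DCR, a transformation like this is a `transformKql` property on the data flow, with a query along the lines of `source | where LogLevel in ("error", "critical")`. The following Python sketch emulates that filter on a few hypothetical records so the effect is easy to see. It assumes a `LogLevel` field with lowercase values; confirm the column and its values against the ContainerLogV2 schema in your workspace.

```python
# Levels to keep; mirrors a transformation such as
#   source | where LogLevel in ("error", "critical")
# The LogLevel field name and its lowercase values are assumptions.
KEEP_LEVELS = {"error", "critical"}

# Hypothetical ContainerLogV2-like records.
records = [
    {"PodName": "web-1", "LogLevel": "info", "LogMessage": "request served"},
    {"PodName": "web-1", "LogLevel": "error", "LogMessage": "upstream timeout"},
    {"PodName": "db-0", "LogLevel": "debug", "LogMessage": "checkpoint"},
    {"PodName": "db-0", "LogLevel": "critical", "LogMessage": "disk full"},
]


def apply_level_filter(rows, keep_levels=KEEP_LEVELS):
    """Return only rows whose LogLevel is in keep_levels; all others are dropped."""
    return [row for row in rows if row.get("LogLevel") in keep_levels]


kept = apply_level_filter(records)
print([row["LogMessage"] for row in kept])
```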

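An alternative strategy is to save the less important events to a separate table configured for Basic logs. These events remain available for troubleshooting, but their ingestion costs significantly less.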
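This alternative can be pictured as routing each record to one of two destinations instead of discarding it. In an actual DCR this would take separate data flows with their own transformations and output tables; the following Python sketch models only the split itself, and the Basic logs table name `ContainerLogV2_Verbose_CL` is hypothetical.

```python
# Hypothetical destination names; the Basic logs table name is invented for illustration.
ANALYTICS_TABLE = "ContainerLogV2"
BASIC_TABLE = "ContainerLogV2_Verbose_CL"

IMPORTANT_LEVELS = {"error", "critical"}


def route(rows):
    """Split rows into important (Analytics) and less important (Basic) destinations."""
    destinations = {ANALYTICS_TABLE: [], BASIC_TABLE: []}
    for row in rows:
        target = ANALYTICS_TABLE if row.get("LogLevel") in IMPORTANT_LEVELS else BASIC_TABLE
        destinations[target].append(row)
    return destinations


sample = [
    {"LogLevel": "info", "LogMessage": "request served"},
    {"LogLevel": "error", "LogMessage": "upstream timeout"},
    {"LogLevel": "debug", "LogMessage": "checkpoint"},
]
split = route(sample)
print({table: len(rows) for table, rows in split.items()})
```

Unlike the pure filter, nothing is lost here: every record lands somewhere, and only the cheaper destination changes.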

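See Data transformations in Container insights for details on adding a transformation to the Container insights DCR, including sample DCRs that use transformations.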

Configure pricing tiers

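Basic Logs in Azure Monitor provide a significant discount on ingesting data into a Log Analytics workspace. In exchange, you pay for the log queries you run against these tables, which makes them ideal for data you need to keep but access infrequently, such as data used occasionally for debugging and troubleshooting.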
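The trade-off comes down to simple arithmetic: Basic logs lower the per-GB ingestion price but add a charge per GB of data scanned by queries. The following Python sketch compares the two and computes the scanned volume at which they break even. Every price and volume in it is a hypothetical placeholder, not a published Azure rate; substitute values from the Azure Monitor pricing page for your region.

```python
def monthly_cost(ingested_gb, ingest_price_per_gb, scanned_gb=0.0, query_price_per_gb=0.0):
    """Ingestion cost plus query (data scanned) cost for one month."""
    return ingested_gb * ingest_price_per_gb + scanned_gb * query_price_per_gb


def break_even_scanned_gb(ingested_gb, analytics_price, basic_price, query_price):
    """GB scanned per month at which Basic costs the same as Analytics."""
    return (analytics_price - basic_price) * ingested_gb / query_price


# Placeholder numbers for illustration only; these are not actual Azure prices.
INGESTED_GB = 1000.0
ANALYTICS_PRICE = 2.00   # hypothetical price per GB ingested, Analytics
BASIC_PRICE = 0.50       # hypothetical price per GB ingested, Basic
QUERY_PRICE = 0.005      # hypothetical price per GB scanned, Basic
SCANNED_GB = 2000.0      # hypothetical volume scanned by occasional troubleshooting queries

analytics = monthly_cost(INGESTED_GB, ANALYTICS_PRICE)
basic = monthly_cost(INGESTED_GB, BASIC_PRICE, SCANNED_GB, QUERY_PRICE)
print(f"Analytics: {analytics:.2f}  Basic: {basic:.2f}")
print(f"Break-even scanned volume: {break_even_scanned_gb(INGESTED_GB, ANALYTICS_PRICE, BASIC_PRICE, QUERY_PRICE):.0f} GB")
```

As long as the data is queried far less than the break-even volume, Basic logs stay cheaper; frequently queried data belongs in the Analytics tier.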

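Configure ContainerLogV2 for Basic logs to significantly reduce data ingestion costs for container logs. See Cost-effective alerting strategies for AKS for strategies to keep alerting on container logs when this table is configured for Basic logs.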
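Changing a table's plan is a property change on the workspace table resource. The following Python sketch only builds the Azure Resource Manager request for that change, a PATCH on the `tables/ContainerLogV2` resource with `properties.plan` set to `Basic`, and does not send it; sending it would also require an authenticated client. The subscription, resource group, and workspace values are placeholders, and the `api-version` is an assumption to verify against the Log Analytics Tables REST reference. From the command line, `az monitor log-analytics workspace table update` with `--plan Basic` makes the same change.

```python
import json
from urllib.parse import quote

# Placeholder identifiers; replace with your own values.
SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"
RESOURCE_GROUP = "my-resource-group"
WORKSPACE = "my-workspace"
API_VERSION = "2022-10-01"  # assumption: an API version that supports the table plan property


def build_table_plan_request(table, plan):
    """Return (method, url, body) for changing a Log Analytics table's plan."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{quote(SUBSCRIPTION_ID)}"
        f"/resourceGroups/{quote(RESOURCE_GROUP)}"
        "/providers/Microsoft.OperationalInsights"
        f"/workspaces/{quote(WORKSPACE)}"
        f"/tables/{quote(table)}"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps({"properties": {"plan": plan}})
    return "PATCH", url, body


method, url, body = build_table_plan_request("ContainerLogV2", "Basic")
print(method, url)
print(body)
```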

Cost-effective alerting strategies

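Alerting is a critical part of monitoring workloads on Azure Kubernetes Service (AKS). Advanced alerting requires Analytics tier logs in the Log Analytics workspace, which can be expensive in high-volume environments or for certain types of logs, such as audit logs.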

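By converting the tables that hold container logs to Basic logs and using other cost-effective strategies available in the Log Analytics platform, you can significantly reduce data ingestion costs. Azure Monitor offers event-driven and summary-based alerting options for these tables, giving you more control over costs without sacrificing visibility into the health and behavior of your AKS workloads. For more information, see Cost-effective alerting strategies for AKS.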
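To illustrate the summary-based idea, the following Python sketch condenses raw log records into per-namespace error counts for each time bin and flags any bin over a threshold. This is roughly the work a scheduled summary does so that an alert only has to evaluate a much smaller result. The bin size, threshold, and record shape are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical settings for the illustration.
BIN = timedelta(minutes=15)
ERROR_THRESHOLD = 2


def summarize_errors(rows, bin_size=BIN):
    """Count error/critical records per (bin start, namespace)."""
    counts = Counter()
    for row in rows:
        if row["LogLevel"] not in ("error", "critical"):
            continue
        ts = row["TimeGenerated"]
        # Floor the timestamp to the start of its bin.
        offset = (ts - datetime.min) % bin_size
        counts[(ts - offset, row["PodNamespace"])] += 1
    return counts


def namespaces_to_alert(summary, threshold=ERROR_THRESHOLD):
    """Return (bin start, namespace) keys whose error count exceeds the threshold."""
    return sorted(key for key, count in summary.items() if count > threshold)


base = datetime(2024, 1, 1, 12, 0)
rows = [
    {"TimeGenerated": base + timedelta(minutes=1), "PodNamespace": "payments", "LogLevel": "error"},
    {"TimeGenerated": base + timedelta(minutes=5), "PodNamespace": "payments", "LogLevel": "critical"},
    {"TimeGenerated": base + timedelta(minutes=9), "PodNamespace": "payments", "LogLevel": "error"},
    {"TimeGenerated": base + timedelta(minutes=3), "PodNamespace": "web", "LogLevel": "error"},
    {"TimeGenerated": base + timedelta(minutes=20), "PodNamespace": "payments", "LogLevel": "info"},
]
summary = summarize_errors(rows)
print(namespaces_to_alert(summary))
```

The alert evaluates a handful of summary rows per bin rather than every raw log line, which is where the cost savings come from.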

Next steps

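To understand what your costs are likely to be based on recent usage patterns for data collected with Container insights, see Analyze usage in a Log Analytics workspace.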