Applies to: Azure Data Factory, Azure Synapse Analytics

In this quickstart, you use the Copy Data tool to create a pipeline that copies data from a source folder in Azure Blob storage to a target folder.

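Prerequisites

Azure subscription

If you don't have an Azure subscription, create a trial account before you begin.

Prepare source data in Azure Blob storage

Select the button below to try it out!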

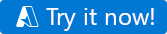

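You're redirected to the configuration page shown in the image below to deploy the template. There, you only need to create a new resource group. (You can leave all other values at their defaults.) Then select "Review + create", and select "Create" to deploy the resources.

Important

Edit the template so that the storage suffix and endpoints are correct for Microsoft Azure operated by 21Vianet.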
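To illustrate what a storage suffix change amounts to, the sketch below builds a Blob endpoint URL and a connection string with an explicit endpoint suffix. `core.chinacloudapi.cn` is the storage suffix used by Azure operated by 21Vianet. The function names, the account name `mystorageaccount`, and the placeholder key are hypothetical and are not taken from the template.

```python
# Storage endpoint suffixes: global Azure vs. Azure operated by 21Vianet.
GLOBAL_SUFFIX = "core.windows.net"
CHINA_SUFFIX = "core.chinacloudapi.cn"


def blob_endpoint(account_name: str, suffix: str = CHINA_SUFFIX) -> str:
    """Return the Blob service endpoint URL for a storage account."""
    return f"https://{account_name}.blob.{suffix}/"


def connection_string(account_name: str, account_key: str,
                      suffix: str = CHINA_SUFFIX) -> str:
    """Build a storage connection string with an explicit EndpointSuffix."""
    parts = [
        "DefaultEndpointsProtocol=https",
        f"AccountName={account_name}",
        f"AccountKey={account_key}",
        f"EndpointSuffix={suffix}",
    ]
    return ";".join(parts)


if __name__ == "__main__":
    # Hypothetical account name and placeholder key, for illustration only.
    print(blob_endpoint("mystorageaccount"))
    print(connection_string("mystorageaccount", "<account-key>"))
```

Comparing the output with the values in the template is one way to spot an endpoint that still points at the global cloud.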

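Note

The user who deploys the template needs to assign a role to a managed identity. This requires permissions granted through the Owner, User Access Administrator, or Managed Identity Operator role.

A new Blob storage account is created in the new resource group, and the moviesDB2.csv file is stored in a folder named input in the Blob storage.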

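Create a data factory

You can use an existing data factory or create a new one, as described in Quickstart: Create a data factory by using the Azure portal.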

Use the Copy Data tool to copy data

The following steps walk you through copying data with the Copy Data tool in Azure Data Factory.

Step 1: Start the Copy Data tool

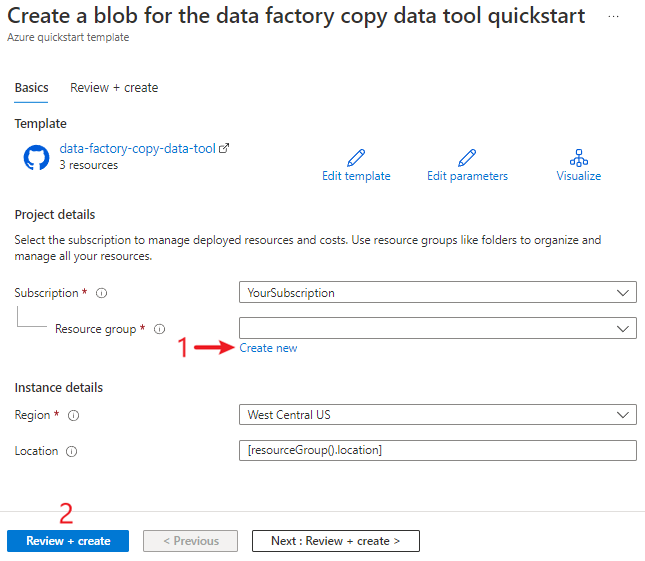

On the Azure Data Factory home page, select the "Ingest" tile to start the Copy Data tool.

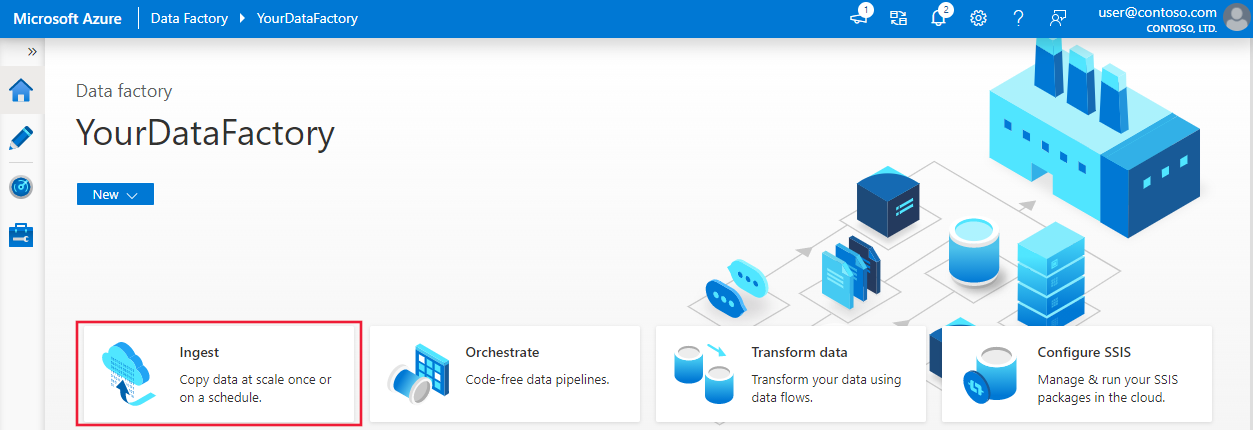

On the "Properties" page of the Copy Data tool, select "Built-in copy task" under "Task type", and then select "Next".

Step 2: Complete the source configuration

Select "+ Create new connection" to add a connection.

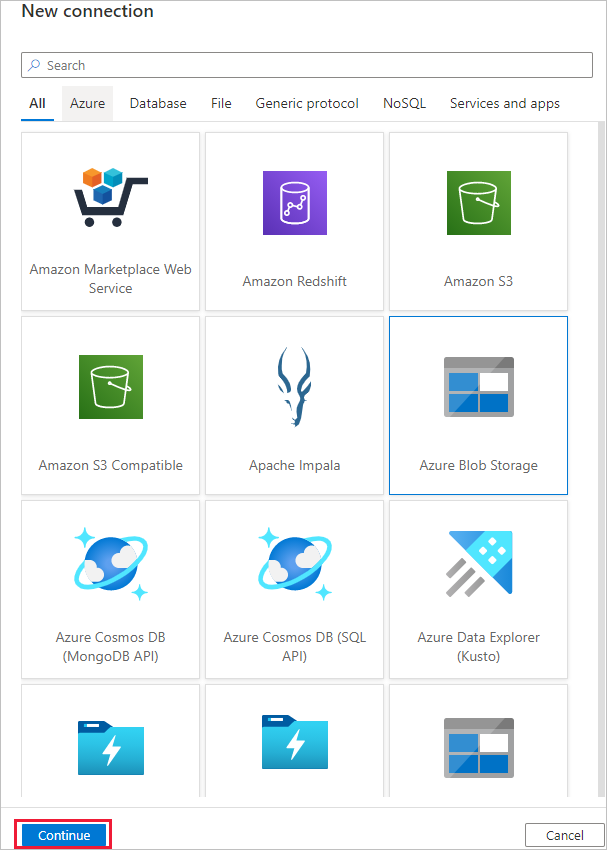

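Select the linked service type that you want to create for the source connection. This tutorial uses "Azure Blob Storage". Select it from the gallery, and then select "Continue".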

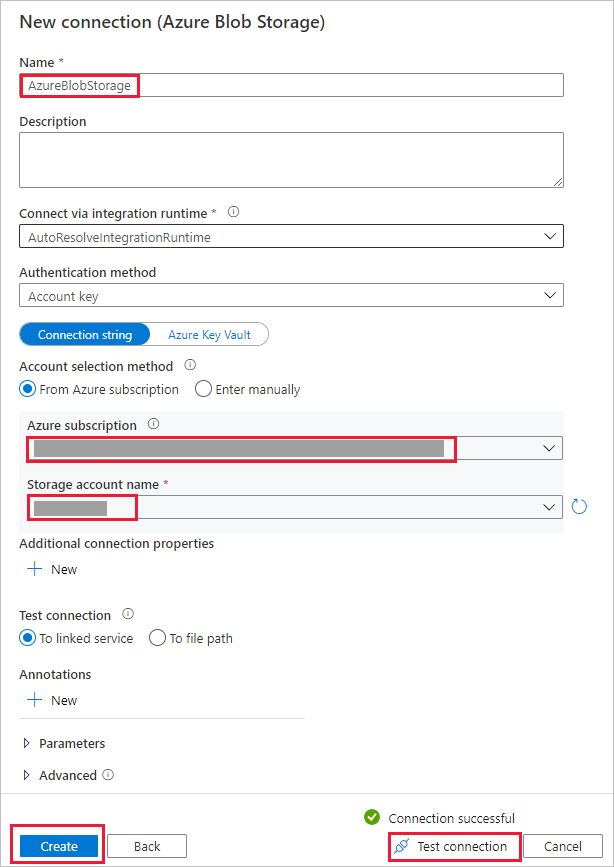

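On the "New connection (Azure Blob Storage)" page, specify a name for the connection. Select your Azure subscription from the "Azure subscription" list and your storage account from the "Storage account name" list, test the connection, and then select "Create".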
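A connection created on this page is stored as a linked service. The sketch below shows roughly what an Azure Blob Storage linked service definition looks like when it uses a connection string. The helper name `blob_linked_service` is hypothetical, the connection string is a placeholder, and the definition that the tool actually generates may differ (for example, in the authentication type).

```python
import json
from typing import Any, Dict


def blob_linked_service(name: str, connection_string: str) -> Dict[str, Any]:
    """Return a linked service definition for Azure Blob Storage.

    Assumes connection-string authentication; other authentication
    types use different typeProperties.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }


if __name__ == "__main__":
    # Placeholder connection string; replace with real values before use.
    definition = blob_linked_service(
        "AzureBlobStorage",
        "DefaultEndpointsProtocol=https;AccountName=<account>;AccountKey=<key>",
    )
    print(json.dumps(definition, indent=2))
```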

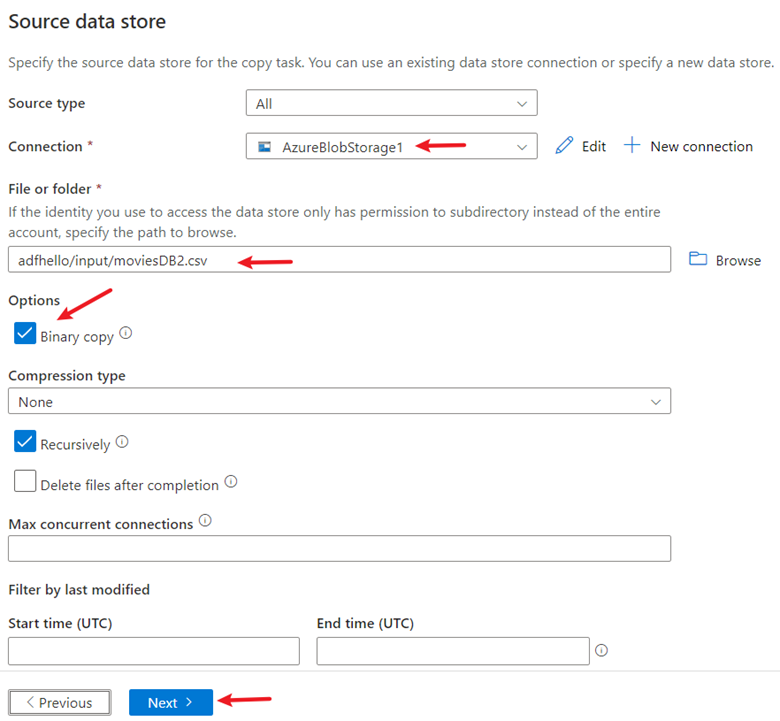

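Select the newly created connection in the "Connection" block.

In the "File or folder" section, select "Browse" to navigate to the "adftutorial/input" folder, select the "emp.txt" file, and then select "OK".

Select the "Binary copy" checkbox to copy the file as-is, and then select "Next".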
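The source settings chosen above can be sketched as a Binary dataset that points at a container, folder, and file in Blob storage. The helper names and the dataset name `SourceBinary` are hypothetical, and the definition that the tool generates may contain additional properties.

```python
from typing import Any, Dict, Optional, Tuple


def split_blob_path(path: str) -> Tuple[str, str]:
    """Split 'container/folder/sub' into ('container', 'folder/sub')."""
    container, _, folder = path.strip("/").partition("/")
    return container, folder


def binary_dataset(name: str, linked_service: str, path: str,
                   file_name: Optional[str] = None) -> Dict[str, Any]:
    """Return a Binary dataset definition for a Blob storage location."""
    container, folder = split_blob_path(path)
    location: Dict[str, Any] = {
        "type": "AzureBlobStorageLocation",
        "container": container,
    }
    if folder:
        location["folderPath"] = folder
    if file_name:
        location["fileName"] = file_name
    return {
        "name": name,
        "properties": {
            "type": "Binary",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": {"location": location},
        },
    }


if __name__ == "__main__":
    # Hypothetical dataset name; path and file name come from this step.
    source = binary_dataset("SourceBinary", "AzureBlobStorage",
                            "adftutorial/input", "emp.txt")
    print(source["properties"]["typeProperties"]["location"])
```

The same helper could describe the destination by passing "adftutorial/output" and omitting the file name.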

Step 3: Complete the destination configuration

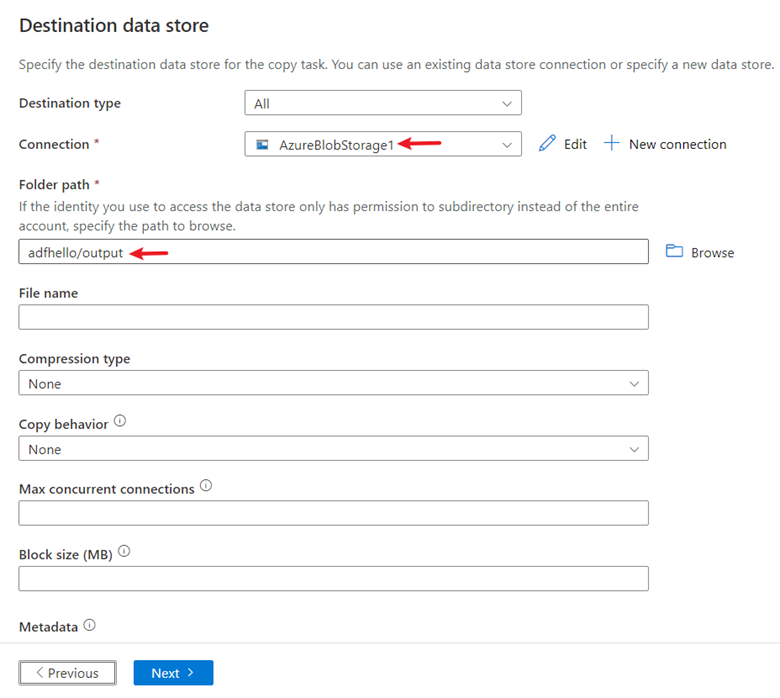

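In the "Connection" block, select the "AzureBlobStorage" connection that you created.

In the "Folder path" section, enter "adftutorial/output" as the folder path.

Leave the other settings at their defaults, and then select "Next".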

Step 4: Review all settings and deploy

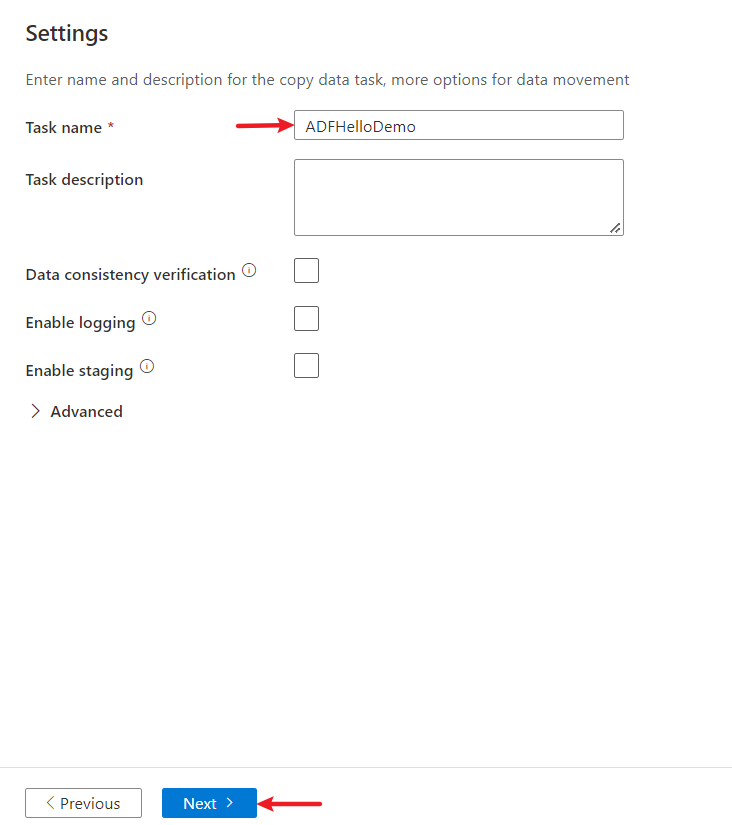

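On the "Settings" page, specify a name and description for the pipeline, and then select "Next" to use the other default configurations.

On the "Summary" page, review all settings, and then select "Next".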

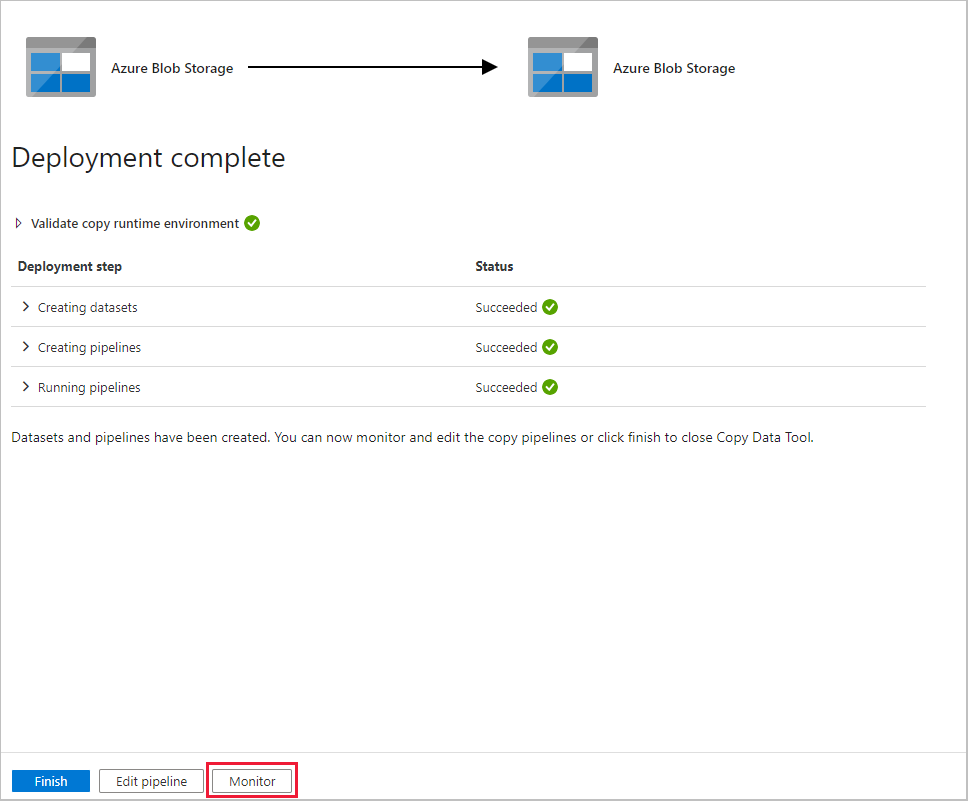

On the "Deployment complete" page, select "Monitor" to monitor the pipeline that you created.
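The pipeline deployed in this step contains a single copy activity. As a rough sketch, assuming a binary copy between two Blob storage datasets, its definition has the shape below. The pipeline, activity, and dataset names are hypothetical, and the JSON that the tool generates may include further settings.

```python
import json
from typing import Any, Dict


def copy_pipeline(name: str, source_dataset: str, sink_dataset: str,
                  description: str = "") -> Dict[str, Any]:
    """Return a pipeline definition with one binary Copy activity."""
    activity = {
        "name": "CopyFromBlobToBlob",  # hypothetical activity name
        "type": "Copy",
        "inputs": [{"referenceName": source_dataset,
                    "type": "DatasetReference"}],
        "outputs": [{"referenceName": sink_dataset,
                     "type": "DatasetReference"}],
        "typeProperties": {
            # Binary source and sink copy files as-is, without parsing them.
            "source": {
                "type": "BinarySource",
                "storeSettings": {"type": "AzureBlobStorageReadSettings",
                                  "recursive": True},
            },
            "sink": {
                "type": "BinarySink",
                "storeSettings": {"type": "AzureBlobStorageWriteSettings"},
            },
        },
    }
    return {
        "name": name,
        "properties": {"description": description, "activities": [activity]},
    }


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    pipeline = copy_pipeline("CopyPipeline", "SourceBinary", "SinkBinary",
                             "Copy from input to output folder")
    print(json.dumps(pipeline, indent=2))
```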

Step 5: Monitor the running results

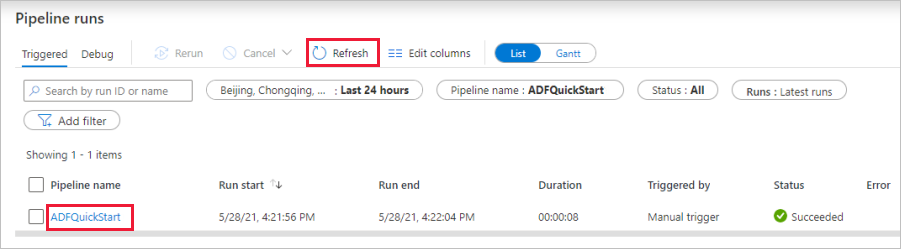

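The application switches to the "Monitor" tab, where you can see the status of the pipeline. Select "Refresh" to refresh the list. Select the link under "Pipeline name" to view activity run details or to rerun the pipeline.

On the "Activity runs" page, select the "Details" link (eyeglasses icon) under the "Activity name" column for more details about the copy operation. For details about the properties, see Copy activity overview.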
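Selecting "Refresh" repeatedly is a manual form of polling. The sketch below shows the same idea in code: it calls a status function until the run reaches a final state. `get_status` is a hypothetical callable that you would supply (for example, a wrapper around an SDK or REST call), and the status strings are assumed to match the values shown on the "Monitor" tab.

```python
import time
from typing import Callable

# Assumed final states of a pipeline run.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status: Callable[[], str],
                 poll_seconds: float = 15.0,
                 max_polls: int = 40,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status until a terminal status or max_polls is reached.

    Returns the last status seen, which may be non-terminal on timeout.
    """
    status = get_status()
    polls = 1
    while status not in TERMINAL_STATUSES and polls < max_polls:
        sleep(poll_seconds)
        status = get_status()
        polls += 1
    return status


if __name__ == "__main__":
    # Simulated status sequence instead of a real service call.
    simulated = iter(["Queued", "InProgress", "Succeeded"])
    print(wait_for_run(lambda: next(simulated), poll_seconds=0.0))
```

Passing `sleep` as a parameter keeps the function easy to test without waiting between polls.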

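Related content

The pipeline in this sample copies data from one location to another location in Azure Blob storage. To learn about using Data Factory in more scenarios, go through the related tutorials.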