Applies to: Azure Data Factory


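This quickstart describes how to use an Azure Resource Manager template (ARM template) to create an Azure data factory. The pipeline you create in this data factory copies data from one folder to another folder in Azure Blob storage. For a tutorial on how to transform data by using Azure Data Factory, see Tutorial: Transform data using Spark.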

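An Azure Resource Manager template is a JavaScript Object Notation (JSON) file that defines the infrastructure and configuration for your project. The template uses declarative syntax: you describe your intended deployment without writing the sequence of programming commands that creates it.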
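To make the declarative shape concrete, the following minimal Python sketch assembles the top-level sections an ARM template uses (`$schema`, `contentVersion`, `parameters`, `variables`, `resources`) as a dictionary and serializes it to JSON. The single storage-account resource and the helper name `build_template` are illustrative assumptions, not part of this quickstart's template; the point is that the file only states what should exist, and Resource Manager works out how to create it.

```python
import json

SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"


def build_template(location_expr: str = "[resourceGroup().location]") -> dict:
    """Return a minimal, hypothetical ARM template as a Python dict."""
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        # Inputs the caller can set at deployment time.
        "parameters": {"storageAccountName": {"type": "string"}},
        "variables": {},
        "resources": [
            {
                # Declares the desired end state; no create/update commands appear anywhere.
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2021-04-01",
                "name": "[parameters('storageAccountName')]",
                "location": location_expr,
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```

Bracketed strings such as `[parameters('storageAccountName')]` are template expressions that Resource Manager evaluates during deployment; Python only treats them as plain text.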

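Note

This article doesn't provide a detailed introduction to the Data Factory service. For an introduction to Azure Data Factory, see Introduction to Azure Data Factory.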

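If your environment meets the prerequisites and you're familiar with using ARM templates, select the Deploy to Azure button. The template opens in the Azure portal.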

Prerequisites

Azure subscription

If you don't have an Azure subscription, create a trial account before you begin.

Create a file

Open a text editor such as Notepad, and create a file named emp.txt with the following content:

```text
John, Doe
Jane, Doe
```

Save the file in the C:\ADFv2QuickStartPSH folder. (If the folder doesn't already exist, create it.)
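If you prefer to script this step, the following Python sketch creates the folder and writes the same two lines. The folder path comes from this quickstart; on macOS or Linux, pass a different folder, because `C:\ADFv2QuickStartPSH` is a Windows path. The function name `write_emp_file` is hypothetical.

```python
from pathlib import Path

# Folder used in this quickstart (a Windows path); pass another folder on other systems.
DEFAULT_FOLDER = Path(r"C:\ADFv2QuickStartPSH")


def write_emp_file(folder: Path = DEFAULT_FOLDER) -> Path:
    """Create the folder if needed and write the two sample rows to emp.txt."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / "emp.txt"
    path.write_text("John, Doe\nJane, Doe\n", encoding="utf-8")
    return path
```

On Windows, calling `write_emp_file()` with no arguments produces the same file as the manual steps.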

Review the template

The template used in this quickstart is from Azure Quickstart Templates.

```json
{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "metadata": {
    "_generator": {
      "name": "bicep",
      "version": "0.4.1.14562",
      "templateHash": "8367564219536411224"
    }
  },
  "parameters": {
    "dataFactoryName": {
      "type": "string",
      "defaultValue": "[format('datafactory{0}', uniqueString(resourceGroup().id))]",
      "metadata": {
        "description": "Data Factory Name"
      }
    },
    "location": {
      "type": "string",
      "defaultValue": "[resourceGroup().location]",
      "metadata": {
        "description": "Location of the data factory."
      }
    },
    "storageAccountName": {
      "type": "string",
      "defaultValue": "[format('storage{0}', uniqueString(resourceGroup().id))]",
      "metadata": {
        "description": "Name of the Azure storage account that contains the input/output data."
      }
    },
    "blobContainerName": {
      "type": "string",
      "defaultValue": "[format('blob{0}', uniqueString(resourceGroup().id))]",
      "metadata": {
        "description": "Name of the blob container in the Azure Storage account."
      }
    }
  },
  "functions": [],
  "variables": {
    "dataFactoryLinkedServiceName": "ArmtemplateStorageLinkedService",
    "dataFactoryDataSetInName": "ArmtemplateTestDatasetIn",
    "dataFactoryDataSetOutName": "ArmtemplateTestDatasetOut",
    "pipelineName": "ArmtemplateSampleCopyPipeline"
  },
  "resources": [
    {
      "type": "Microsoft.Storage/storageAccounts",
      "apiVersion": "2021-04-01",
      "name": "[parameters('storageAccountName')]",
      "location": "[parameters('location')]",
      "sku": {
        "name": "Standard_LRS"
      },
      "kind": "StorageV2"
    },
    {
      "type": "Microsoft.Storage/storageAccounts/blobServices/containers",
      "apiVersion": "2021-04-01",
      "name": "[format('{0}/default/{1}', parameters('storageAccountName'), parameters('blobContainerName'))]",
      "dependsOn": [
        "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]"
      ]
    },
    {
      "type": "Microsoft.DataFactory/factories",
      "apiVersion": "2018-06-01",
      "name": "[parameters('dataFactoryName')]",
      "location": "[parameters('location')]",
      "identity": {
        "type": "SystemAssigned"
      }
    },
    {
      "type": "Microsoft.DataFactory/factories/linkedservices",
      "apiVersion": "2018-06-01",
      "name": "[format('{0}/{1}', parameters('dataFactoryName'), variables('dataFactoryLinkedServiceName'))]",
      "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
          "connectionString": "[format('DefaultEndpointsProtocol=https;AccountName={0};AccountKey={1};EndpointSuffix=core.chinacloudapi.cn', parameters('storageAccountName'), listKeys(resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName')), '2021-04-01').keys[0].value)]"
        }
      },
      "dependsOn": [
        "[resourceId('Microsoft.DataFactory/factories', parameters('dataFactoryName'))]",
        "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]"
      ]
    },
    {
      "type": "Microsoft.DataFactory/factories/datasets",
      "apiVersion": "2018-06-01",
      "name": "[format('{0}/{1}', parameters('dataFactoryName'), variables('dataFactoryDataSetInName'))]",
      "properties": {
        "linkedServiceName": {
          "referenceName": "[variables('dataFactoryLinkedServiceName')]",
          "type": "LinkedServiceReference"
        },
        "type": "Binary",
        "typeProperties": {
          "location": {
            "type": "AzureBlobStorageLocation",
            "container": "[parameters('blobContainerName')]",
            "folderPath": "input",
            "fileName": "emp.txt"
          }
        }
      },
      "dependsOn": [
        "[resourceId('Microsoft.Storage/storageAccounts/blobServices/containers', parameters('storageAccountName'), 'default', parameters('blobContainerName'))]",
        "[resourceId('Microsoft.DataFactory/factories', parameters('dataFactoryName'))]",
        "[resourceId('Microsoft.DataFactory/factories/linkedservices', parameters('dataFactoryName'), variables('dataFactoryLinkedServiceName'))]"
      ]
    },
    {
      "type": "Microsoft.DataFactory/factories/datasets",
      "apiVersion": "2018-06-01",
      "name": "[format('{0}/{1}', parameters('dataFactoryName'), variables('dataFactoryDataSetOutName'))]",
      "properties": {
        "linkedServiceName": {
          "referenceName": "[variables('dataFactoryLinkedServiceName')]",
          "type": "LinkedServiceReference"
        },
        "type": "Binary",
        "typeProperties": {
          "location": {
            "type": "AzureBlobStorageLocation",
            "container": "[parameters('blobContainerName')]",
            "folderPath": "output"
          }
        }
      },
      "dependsOn": [
        "[resourceId('Microsoft.Storage/storageAccounts/blobServices/containers', parameters('storageAccountName'), 'default', parameters('blobContainerName'))]",
        "[resourceId('Microsoft.DataFactory/factories', parameters('dataFactoryName'))]",
        "[resourceId('Microsoft.DataFactory/factories/linkedservices', parameters('dataFactoryName'), variables('dataFactoryLinkedServiceName'))]"
      ]
    },
    {
      "type": "Microsoft.DataFactory/factories/pipelines",
      "apiVersion": "2018-06-01",
      "name": "[format('{0}/{1}', parameters('dataFactoryName'), variables('pipelineName'))]",
      "properties": {
        "activities": [
          {
            "name": "MyCopyActivity",
            "type": "Copy",
            "policy": {
              "timeout": "7.00:00:00",
              "retry": 0,
              "retryIntervalInSeconds": 30,
              "secureOutput": false,
              "secureInput": false
            },
            "typeProperties": {
              "source": {
                "type": "BinarySource",
                "storeSettings": {
                  "type": "AzureBlobStorageReadSettings",
                  "recursive": true
                }
              },
              "sink": {
                "type": "BinarySink",
                "storeSettings": {
                  "type": "AzureBlobStorageWriterSettings"
                }
              },
              "enableStaging": false
            },
            "inputs": [
              {
                "referenceName": "[variables('dataFactoryDataSetInName')]",
                "type": "DatasetReference"
              }
            ],
            "outputs": [
              {
                "referenceName": "[variables('dataFactoryDataSetOutName')]",
                "type": "DatasetReference"
              }
            ]
          }
        ]
      },
      "dependsOn": [
        "[resourceId('Microsoft.DataFactory/factories', parameters('dataFactoryName'))]",
        "[resourceId('Microsoft.DataFactory/factories/datasets', parameters('dataFactoryName'), variables('dataFactoryDataSetInName'))]",
        "[resourceId('Microsoft.DataFactory/factories/datasets', parameters('dataFactoryName'), variables('dataFactoryDataSetOutName'))]"
      ]
    }
  ]
}
```
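The linked service builds its storage connection string with `format()` and reads the account key with `listKeys()`. The Python sketch below reproduces that string layout, and parses it back, so you can see what the linked service receives. The account name and key in the test are made up, and the helper names are hypothetical.

```python
def build_connection_string(account_name: str, account_key: str,
                            endpoint_suffix: str = "core.chinacloudapi.cn") -> str:
    """Mirror the template's format() expression for the linked service."""
    return (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
        f"EndpointSuffix={endpoint_suffix}"
    )


def parse_connection_string(conn: str) -> dict:
    """Split 'key=value;key=value' pairs.

    partition() splits only at the first '=', so the '=' padding at the end of a
    base64 account key stays part of the value.
    """
    pairs = (part.partition("=") for part in conn.split(";") if part)
    return {key: value for key, _, value in pairs}


def blob_endpoint(parsed: dict) -> str:
    """Blob service URL implied by the connection string, e.g. https://<account>.blob.<suffix>."""
    protocol = parsed.get("DefaultEndpointsProtocol", "https")
    return f"{protocol}://{parsed['AccountName']}.blob.{parsed['EndpointSuffix']}"
```

The `core.chinacloudapi.cn` suffix matches the template above, which targets Azure China; a template for global Azure would use a different suffix.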

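The following Azure resources are defined in the template:

- Microsoft.Storage/storageAccounts: defines a storage account.

- Microsoft.Storage/storageAccounts/blobServices/containers: defines a blob container in the storage account.

- Microsoft.DataFactory/factories: creates an Azure data factory.

- Microsoft.DataFactory/factories/linkedServices: creates an Azure Data Factory linked service.

- Microsoft.DataFactory/factories/datasets: creates the Azure Data Factory input and output datasets.

- Microsoft.DataFactory/factories/pipelines: creates an Azure Data Factory pipeline.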
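Resource Manager uses each resource's `dependsOn` list to decide creation order and deploys resources that don't depend on each other in parallel. The Python sketch below approximates that with the standard-library `graphlib` module, using short hypothetical labels for the template's seven resources and the dependencies listed in the template's `dependsOn` arrays. It illustrates the ordering constraint only; it is not how Resource Manager is implemented.

```python
from graphlib import TopologicalSorter

# Resource -> resources named in its dependsOn list (labels are hypothetical shorthands).
DEPENDS_ON: dict[str, list[str]] = {
    "storageAccount": [],
    "blobContainer": ["storageAccount"],
    "dataFactory": [],
    "linkedService": ["dataFactory", "storageAccount"],
    "datasetIn": ["blobContainer", "dataFactory", "linkedService"],
    "datasetOut": ["blobContainer", "dataFactory", "linkedService"],
    "pipeline": ["dataFactory", "datasetIn", "datasetOut"],
}


def deployment_order(graph: dict[str, list[str]]) -> list[str]:
    """One order that respects every dependsOn edge (raises graphlib.CycleError on cycles)."""
    return list(TopologicalSorter(graph).static_order())


def parallel_batches(graph: dict[str, list[str]]) -> list[list[str]]:
    """Group resources into waves; everything in a wave has all its dependencies satisfied."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches
```

The waves show why the pipeline is created last: it can't exist until both datasets, and therefore the linked service and container, are in place.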

You can find more Azure Data Factory template samples in the quickstart template gallery.

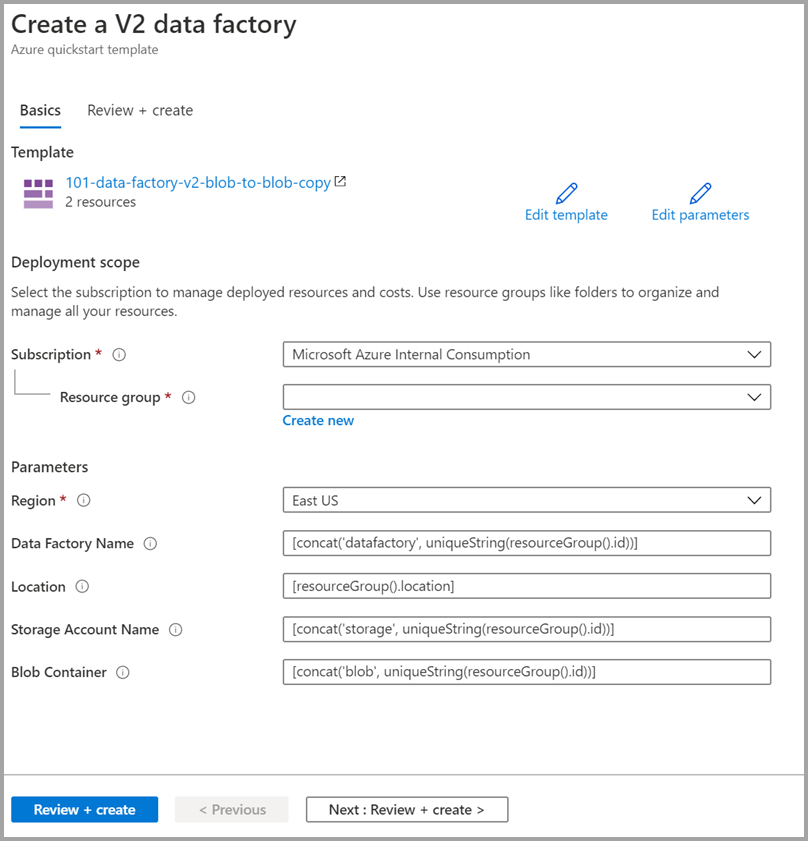

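Deploy the template

Select the Deploy to Azure button to sign in to Azure and open the template. The template creates an Azure data factory, a storage account, and a blob container.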

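Select or enter the following values. Unless otherwise specified, use the default values to create the Azure Data Factory resources:

- Subscription: Select an Azure subscription.

- Resource group: Select Create new, enter a unique name for the resource group, and then select OK.

- Region: Select a location, for example China East 2.

- Data Factory Name: Use the default value.

- Location: Use the default value.

- Storage Account Name: Use the default value.

- Blob Container: Use the default value.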
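If you later deploy the same template from a script instead of the portal form, you can supply these values in an ARM parameters file. The minimal Python sketch below writes one; the helper name and the choice to override only `location` in the test are illustrative assumptions. Any parameter you leave out falls back to the template's `defaultValue`.

```python
import json

PARAMS_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"

# Parameter names declared by the template in this quickstart.
TEMPLATE_PARAMETERS = {"dataFactoryName", "location", "storageAccountName", "blobContainerName"}


def build_parameters_file(overrides: dict) -> str:
    """Serialize overrides in the parameters-file shape {name: {"value": ...}}."""
    unknown = set(overrides) - TEMPLATE_PARAMETERS
    if unknown:
        # Catch typos locally instead of at deployment time.
        raise ValueError(f"not a template parameter: {sorted(unknown)}")
    doc = {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in overrides.items()},
    }
    return json.dumps(doc, indent=2)
```

You could then pass the saved file to, for example, `New-AzResourceGroupDeployment -ResourceGroupName <name> -TemplateFile azuredeploy.json -TemplateParameterFile azuredeploy.parameters.json`; both file names here are hypothetical.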

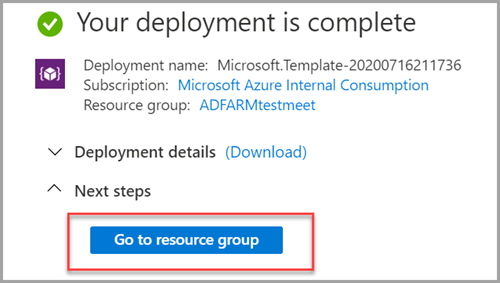

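Review deployed resources

Select Go to resource group.

Verify that the Azure data factory was created.

- The data factory name has the format datafactory<uniqueid>.

Verify that the storage account was created.

- The storage account name has the format storage<uniqueid>.

Select the storage account you created, and then select Containers.

- On the Containers page, select the blob container you created.

- The blob container name has the format blob<uniqueid>.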

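Each default name is a fixed prefix plus `uniqueString(resourceGroup().id)`, which returns a 13-character string that stays the same every time you deploy to the same resource group. If you override the defaults, the sketch below checks names against Azure's published naming rules: storage accounts use 3-24 lowercase letters and digits; blob containers use 3-63 lowercase letters, digits, and single hyphens, starting with a letter or digit; data factories use 3-63 letters, digits, and single hyphens, starting with a letter or digit. It is a local approximation for catching typos; Azure's own validation is authoritative, and the suffix in the test is made up.

```python
import re

# Local approximations of Azure naming rules (Azure's validation remains authoritative).
STORAGE_ACCOUNT = re.compile(r"[a-z0-9]{3,24}")
# First char alphanumeric; every hyphen must be followed by an alphanumeric (no "--", no trailing "-").
CONTAINER = re.compile(r"[a-z0-9](?:[a-z0-9]|-(?=[a-z0-9])){2,62}")
DATA_FACTORY = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9]|-(?=[A-Za-z0-9])){2,62}")


def is_valid_storage_account(name: str) -> bool:
    return STORAGE_ACCOUNT.fullmatch(name) is not None


def is_valid_container(name: str) -> bool:
    return CONTAINER.fullmatch(name) is not None


def is_valid_data_factory(name: str) -> bool:
    return DATA_FACTORY.fullmatch(name) is not None
```

With a 13-character suffix, `storage<uniqueid>` is 20 characters, which stays inside the 24-character storage account limit.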

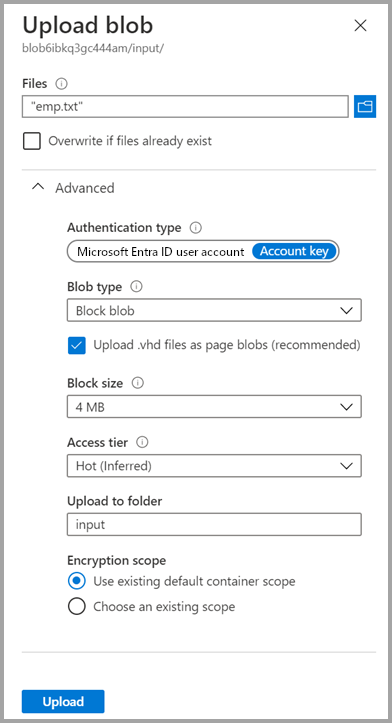

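Upload a file

On the Containers page, select Upload.

In the right pane, select the Files box, and then browse to and select the emp.txt file that you created earlier.

Expand the Advanced heading.

In the Upload to folder box, enter input.

Select the Upload button. You should see the emp.txt file and the status of the upload in the list.

Select the Close icon (X) to close the Upload blob page.

Keep the container page open, because you can use it to verify the output at the end of this quickstart.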
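The portal upload can also be scripted. The sketch below assumes the `azure-storage-blob` package is installed and that you have a connection string for the storage account; the function names are hypothetical. Blob storage has no real folders: uploading to the blob name `input/emp.txt` is what makes an `input` folder appear, and that name matches the input dataset's `folderPath` and `fileName`.

```python
from pathlib import Path


def target_blob_name(local_file: Path, folder: str = "input") -> str:
    """Blob name the input dataset expects: <folderPath>/<fileName>."""
    return f"{folder.strip('/')}/{local_file.name}"


def upload_emp_file(connection_string: str, container: str, local_file: Path) -> str:
    """Upload local_file into the container's 'input' folder and return the blob name."""
    # Imported lazily so target_blob_name works without the Azure SDK installed.
    from azure.storage.blob import BlobServiceClient  # pip install azure-storage-blob

    name = target_blob_name(local_file)
    service = BlobServiceClient.from_connection_string(connection_string)
    blob = service.get_blob_client(container=container, blob=name)
    with local_file.open("rb") as data:
        blob.upload_blob(data, overwrite=True)  # replace the blob if it already exists
    return name
```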

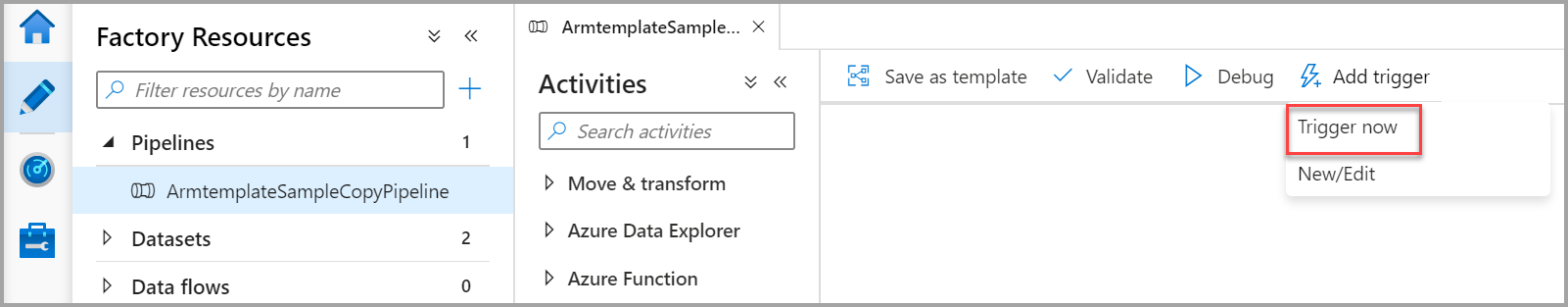

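Start a trigger

Navigate to the Data factories page, and select the data factory you created.

Select Open on the Open Azure Data Factory Studio tile.

Select the Author tab.

Select the pipeline that was created, ArmtemplateSampleCopyPipeline.

Select Add Trigger > Trigger Now.

In the right pane, under Pipeline run, select OK.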
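The portal steps above start a single on-demand run. A scripted equivalent is sketched below in Python, assuming the `azure-identity` and `azure-mgmt-datafactory` packages are installed and that you are signed in with an identity `DefaultAzureCredential` can find. Because this quickstart deploys to an Azure China region, the sketch points the client at the Azure China Resource Manager endpoint; treat those endpoint settings as an assumption to check against your environment.

```python
# Azure China (21Vianet) Resource Manager endpoint; assumed to match your cloud.
CHINA_ARM_ENDPOINT = "https://management.chinacloudapi.cn"
PIPELINE_NAME = "ArmtemplateSampleCopyPipeline"  # pipeline defined in the template


def arm_scope(endpoint: str) -> str:
    """Token scope for a Resource Manager endpoint, e.g. <endpoint>/.default."""
    return endpoint.rstrip("/") + "/.default"


def trigger_pipeline(subscription_id: str, resource_group: str, factory_name: str,
                     pipeline_name: str = PIPELINE_NAME) -> str:
    """Start one pipeline run (like Add Trigger > Trigger Now) and return its run ID."""
    # Imported lazily so arm_scope works without the Azure SDK installed.
    from azure.identity import AzureAuthorityHosts, DefaultAzureCredential
    from azure.mgmt.datafactory import DataFactoryManagementClient

    credential = DefaultAzureCredential(authority=AzureAuthorityHosts.AZURE_CHINA)
    client = DataFactoryManagementClient(
        credential,
        subscription_id,
        base_url=CHINA_ARM_ENDPOINT,
        credential_scopes=[arm_scope(CHINA_ARM_ENDPOINT)],
    )
    run = client.pipelines.create_run(resource_group, factory_name, pipeline_name)
    return run.run_id
```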

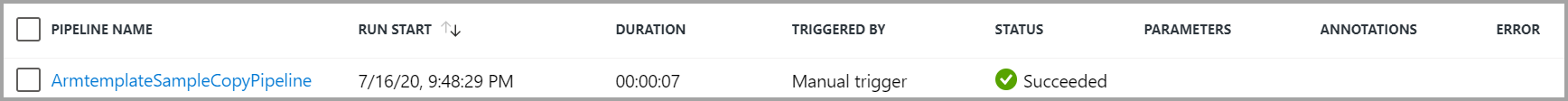

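Monitor the pipeline

Select the Monitor tab.

You see the activity runs associated with the pipeline run. In this quickstart, the pipeline has only one activity, of type Copy, so you see a single run for that activity.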
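When you start runs from code, you poll for the result instead of watching the Monitor tab. The helper below is a generic polling loop; the terminal status strings follow Data Factory's pipeline-run statuses, and `get_status` is a callable you supply yourself, for example `lambda: client.pipeline_runs.get(resource_group, factory_name, run_id).status` with a `DataFactoryManagementClient` named `client`. The interval and timeout defaults are arbitrary assumptions.

```python
import time
from typing import Callable

# Statuses after which a pipeline run no longer changes.
TERMINAL = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status: Callable[[], str], interval_s: float = 15.0,
                 timeout_s: float = 600.0,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status until it reports a terminal status; raise TimeoutError otherwise."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL:
            return status
        if waited >= timeout_s:
            raise TimeoutError(f"run still {status!r} after {waited:.0f}s")
        sleep(interval_s)
        waited += interval_s
```

Injecting `sleep` keeps the loop testable without real waiting.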

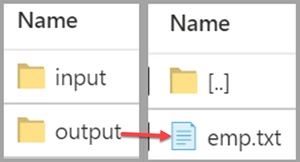

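Verify the output file

The pipeline automatically creates an output folder in the blob container, and then copies the emp.txt file from the input folder to the output folder.

On the Containers page in the Azure portal, select Refresh to see the output folder.

In the folder list, select output.

Confirm that emp.txt is copied to the output folder.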
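The same check can be scripted by listing blobs whose names start with `output/`. The sketch below assumes the `azure-storage-blob` package and a storage connection string; the helper names are hypothetical, and the pure `copied_files` function can be tested without Azure.

```python
from typing import Iterable


def copied_files(blob_names: Iterable[str], folder: str = "output") -> set:
    """File names found directly under <folder>/ in a list of blob names."""
    prefix = folder.strip("/") + "/"
    return {name[len(prefix):] for name in blob_names if name.startswith(prefix)}


def list_blob_names(connection_string: str, container: str, prefix: str = "output/") -> list:
    """List blob names under a prefix; requires azure-storage-blob."""
    from azure.storage.blob import ContainerClient  # imported lazily

    client = ContainerClient.from_connection_string(connection_string, container_name=container)
    return [blob.name for blob in client.list_blobs(name_starts_with=prefix)]
```

A successful run should make `"emp.txt" in copied_files(list_blob_names(conn, container))` true.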

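Clean up resources

You can clean up the resources that you created in this quickstart in two ways. You can delete the Azure resource group, which removes every resource in it. If you want to keep the other resources intact, delete only the data factory you created in this quickstart.

Deleting a resource group deletes all of its resources, including any data factories in it. Run the following command to delete the entire resource group: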

```powershell
$resourceGroupName = "<your-resource-group-name>"  # replace with your resource group name
Remove-AzResourceGroup -Name $resourceGroupName
```

If you want to delete only the data factory, not the entire resource group, run the following command:

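```powershell
$resourceGroupName = "<your-resource-group-name>"; $dataFactoryName = "<your-data-factory-name>"  # replace both placeholders
Remove-AzDataFactoryV2 -Name $dataFactoryName -ResourceGroupName $resourceGroupName
```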

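Related content

In this quickstart, you created an Azure data factory by using an ARM template and validated the deployment. To learn more about Azure Data Factory and Azure Resource Manager, continue to the following articles.

- Azure Data Factory documentation

- Learn more about Azure Resource Manager

- Get other Azure Data Factory ARM templates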