Applies to: Azure Data Factory, Azure Synapse Analytics

This article describes a solution template that you can use to bulk copy data from Azure Data Lake Storage Gen2 to Azure Synapse Analytics or Azure SQL Database.

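About this solution template

This template retrieves files from an Azure Data Lake Storage Gen2 source. It then iterates over each file in the source and copies the file to the destination data store.

Currently, this template supports copying only data in DelimitedText format. Files in other data formats can still be retrieved from the source data store, but they can't be copied to the destination data store.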

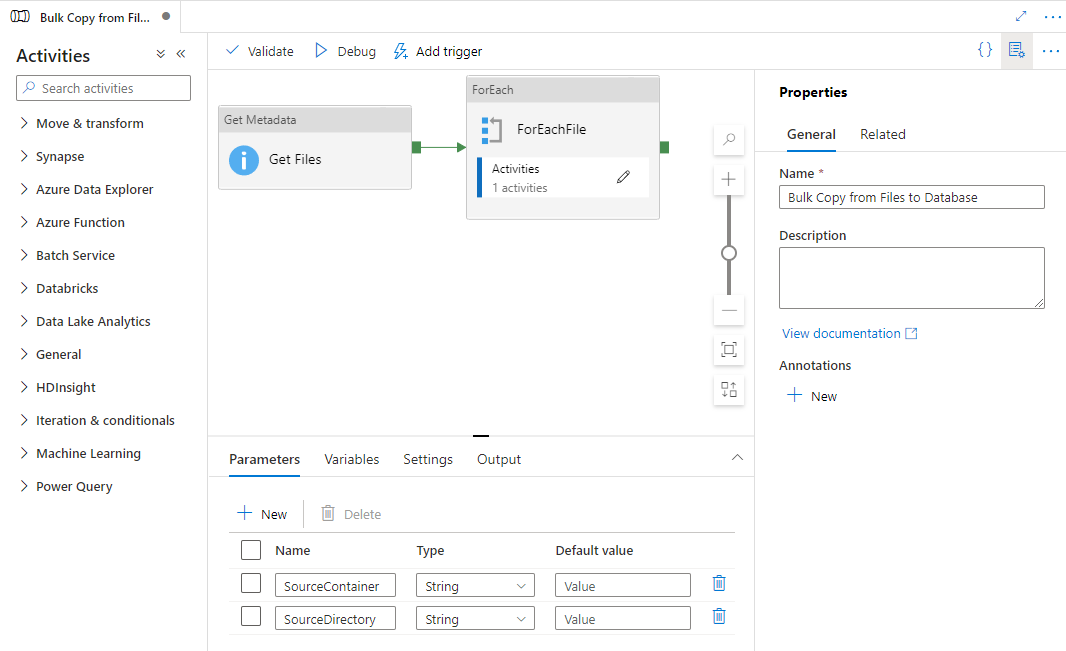

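The template contains three activities:

- The Get Metadata activity retrieves the files from Azure Data Lake Storage Gen2 and passes them to the subsequent ForEach activity.

- The ForEach activity gets the files from the Get Metadata activity and iteratively passes each file to the Copy activity.

- The Copy activity resides inside the ForEach activity and copies each file from the source data store to the destination data store.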
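
The way these three activities chain together can be sketched as a pipeline definition. The following is a minimal Python sketch that builds a dictionary shaped like a pipeline JSON document. It is not the template's actual definition: the pipeline name, the activity names, and the source and sink types are assumptions chosen for illustration, and the real template may differ.

```python
import json


def build_pipeline_sketch():
    """Return a dict shaped like a pipeline with the three activities.

    All names below are hypothetical; only the overall shape
    (Get Metadata -> ForEach -> Copy) follows the description above.
    """
    get_metadata = {
        "name": "GetFileList",  # hypothetical activity name
        "type": "GetMetadata",
        "typeProperties": {
            # childItems requests the list of items in the source folder
            "fieldList": ["childItems"],
        },
    }

    copy_file = {
        "name": "CopyFileToDatabase",  # hypothetical activity name
        "type": "Copy",
        "typeProperties": {
            # Assumed types: a delimited-text source and a Synapse sink.
            # The sink type depends on which destination you connect.
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "SqlDWSink"},
        },
    }

    for_each = {
        "name": "ForEachFile",  # hypothetical activity name
        "type": "ForEach",
        # Run only after the file list has been retrieved successfully
        "dependsOn": [
            {"activity": "GetFileList", "dependencyConditions": ["Succeeded"]}
        ],
        "typeProperties": {
            # Iterate over the items returned by the Get Metadata activity
            "items": {
                "value": "@activity('GetFileList').output.childItems",
                "type": "Expression",
            },
            # The Copy activity is nested inside the loop
            "activities": [copy_file],
        },
    }

    return {
        "name": "BulkCopyFromFilesToDatabase",  # hypothetical pipeline name
        "properties": {
            "parameters": {
                "SourceContainer": {"type": "String"},
                "SourceDirectory": {"type": "String"},
            },
            "activities": [get_metadata, for_each],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pipeline_sketch(), indent=2))
```

Nesting the Copy activity inside the ForEach activity's `activities` list is what makes the copy run once per file rather than once per pipeline run.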

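The template defines the following two parameters:

- SourceContainer is the root container path in Azure Data Lake Storage Gen2 from which the data is copied.

- SourceDirectory is the directory path under the root container from which the data is copied.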
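
To visualize how the two parameters relate, the following Python sketch combines them into the URI of the source folder. It is only an illustration: the helper name `source_folder_uri`, the storage account name, and the example values are hypothetical, and in the template itself the storage account comes from the connection you create rather than from a parameter.

```python
def source_folder_uri(account, source_container, source_directory):
    """Build an abfss URI for the folder the two parameters point at.

    `account` is a hypothetical storage account name; it is not one of
    the template's parameters.
    """
    # Tolerate leading or trailing slashes in the directory value
    directory = source_directory.strip("/")
    base = f"abfss://{source_container}@{account}.dfs.core.windows.net"
    # An empty directory means the root of the container
    return f"{base}/{directory}" if directory else f"{base}/"


if __name__ == "__main__":
    # Hypothetical example values
    print(source_folder_uri("mystorage", "raw", "sales/2024"))
```

With these example values, SourceContainer is `raw` and SourceDirectory is `sales/2024`, so the pipeline would look for files under the `sales/2024` folder of the `raw` container.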

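How to use this solution template

Open Azure Data Factory Studio and select the "Author" tab, which is marked with a pencil icon.

Hover over the "Pipelines" section and select the ellipsis that appears on the right. Then select "Pipeline from template".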

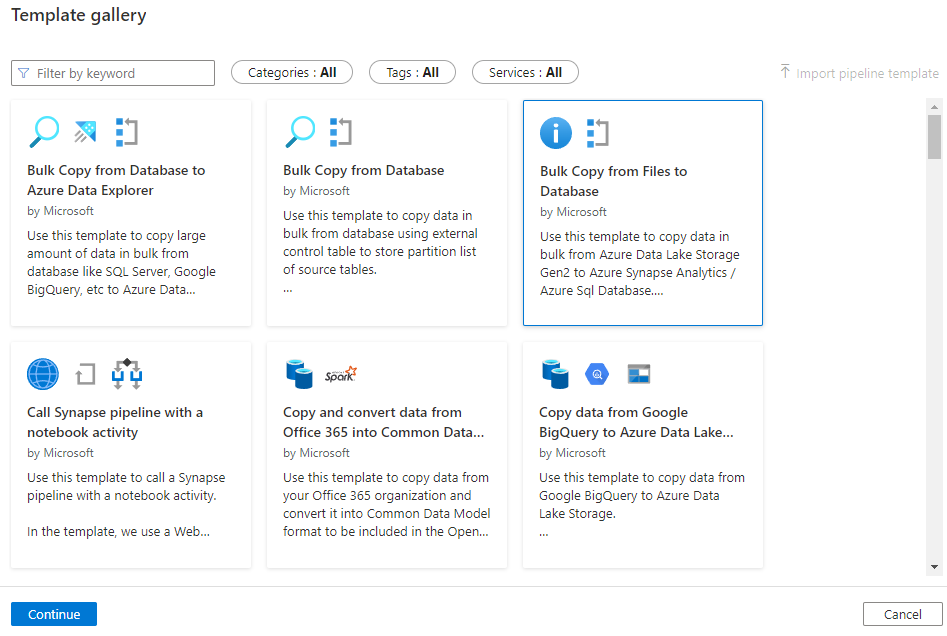

Select the "Bulk Copy from Files to Database" template, and then select "Continue".

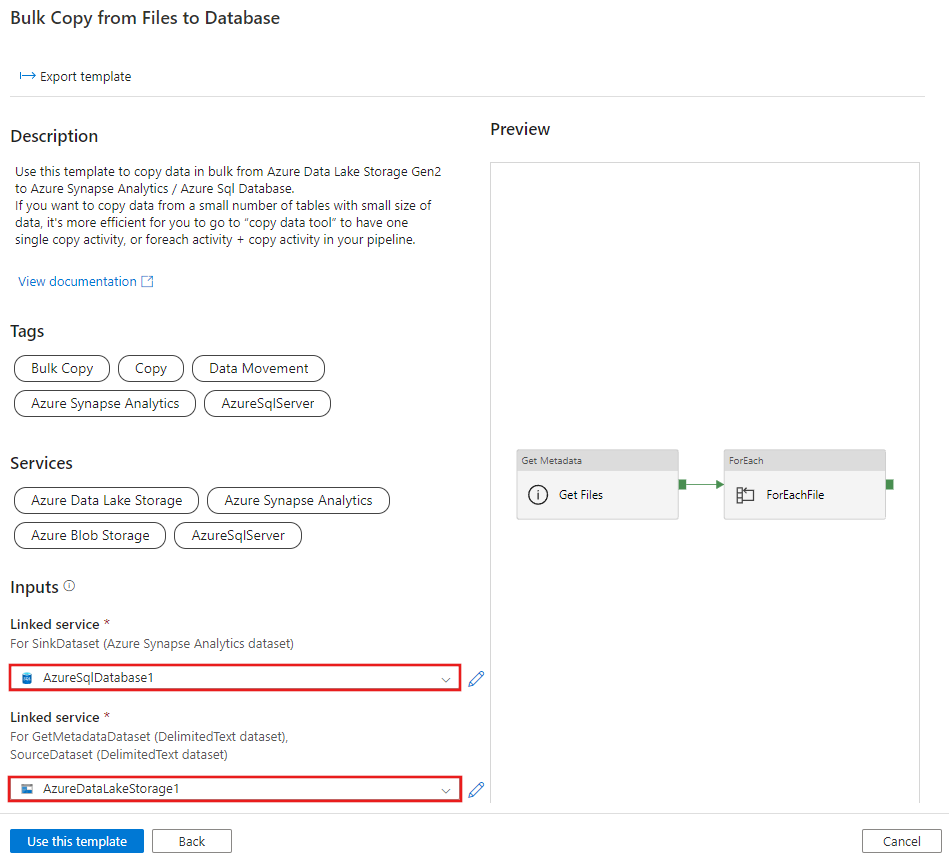

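Select "New" to create a connection to the Gen2 storage account as the source and a connection to the database as the sink. Then select "Use this template".

A new pipeline is created, as shown in the following example: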

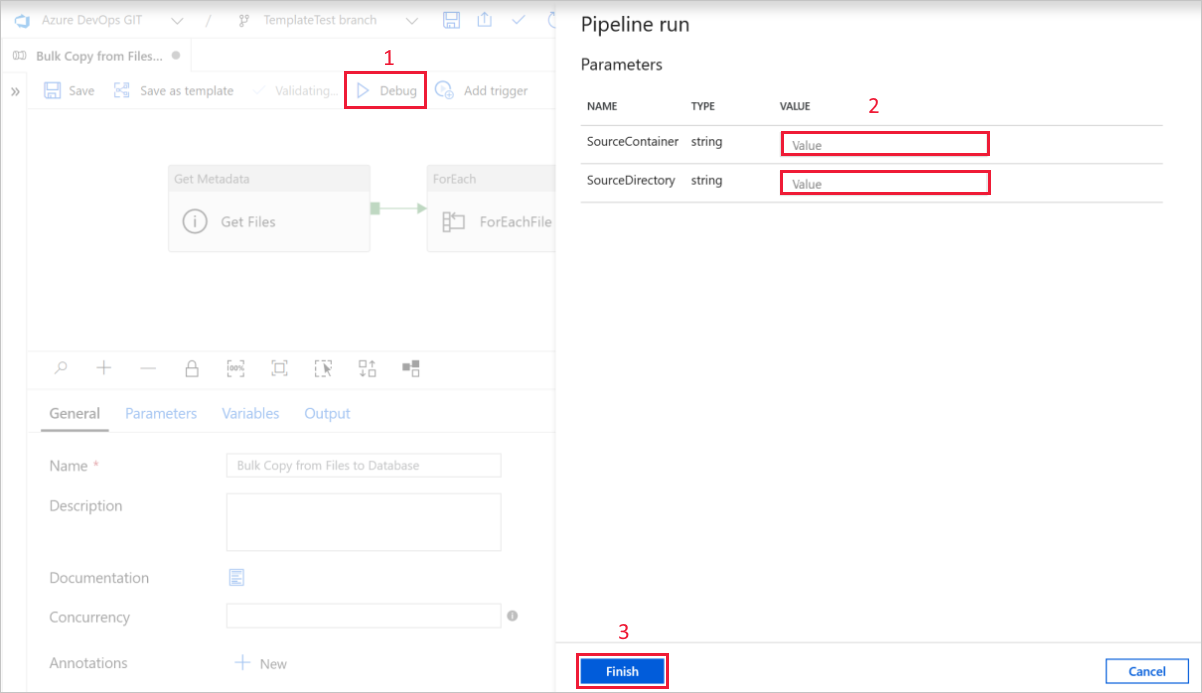

Select "Debug", enter the parameters, and then select "Finish".

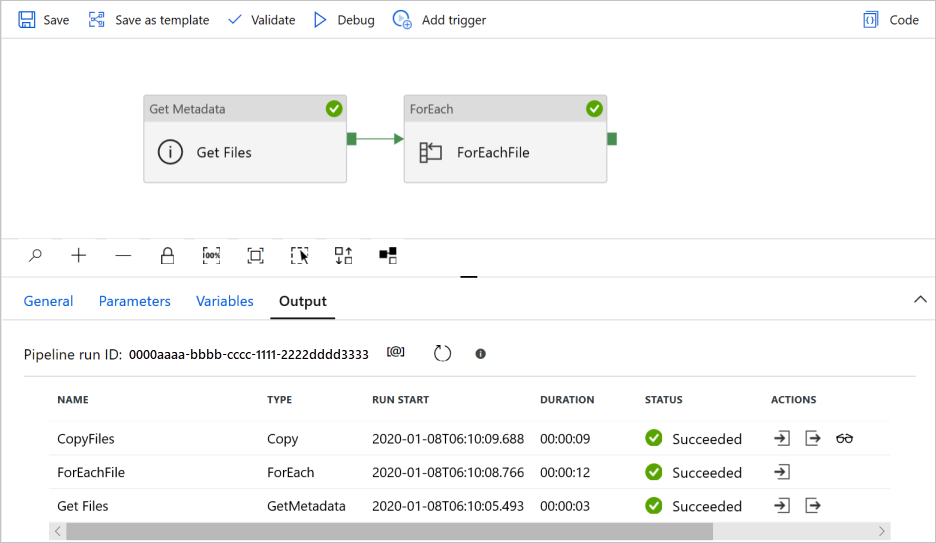

When the pipeline run completes successfully, you see results similar to the following example: