Applies to: Azure Data Factory, Azure Synapse Analytics

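This tutorial uses the Azure portal to create a Data Factory pipeline that transforms data by using a Hive activity on an HDInsight cluster that is in an Azure Virtual Network (VNet). You perform the following steps in this tutorial: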

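- Create a data factory.

- Create a self-hosted integration runtime.

- Create Azure Storage and Azure HDInsight linked services.

- Create a pipeline with a Hive activity.

- Trigger a pipeline run.

- Monitor the pipeline run.

- Verify the output.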

If you don't have an Azure subscription, create a trial account before you begin.

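Prerequisites

Note

We recommend that you use the Azure Az PowerShell module to interact with Azure. To get started, see Install Azure PowerShell. To learn how to migrate to the Az PowerShell module, see Migrate Azure PowerShell from AzureRM to Az.

Azure Storage account. You create a Hive script and upload it to Azure Storage. The output from the Hive script is stored in this storage account. In this sample, the HDInsight cluster uses this Azure Storage account as its primary storage.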

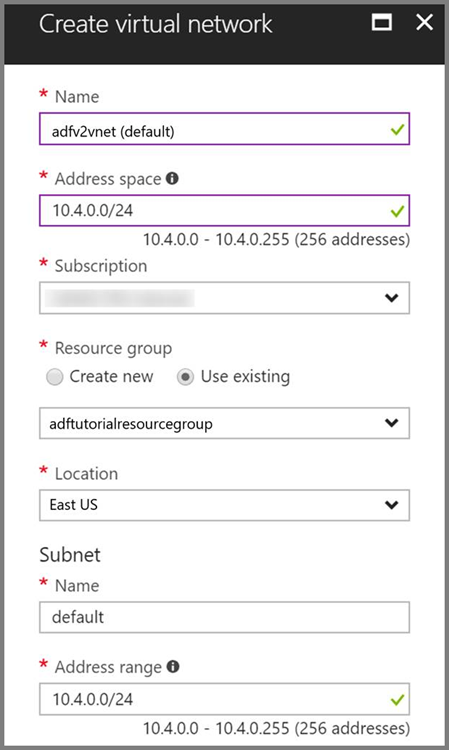

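Azure Virtual Network. If you don't have an Azure virtual network, create one by following these instructions. In this sample, HDInsight is in an Azure virtual network. Here is a sample configuration of the Azure virtual network.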

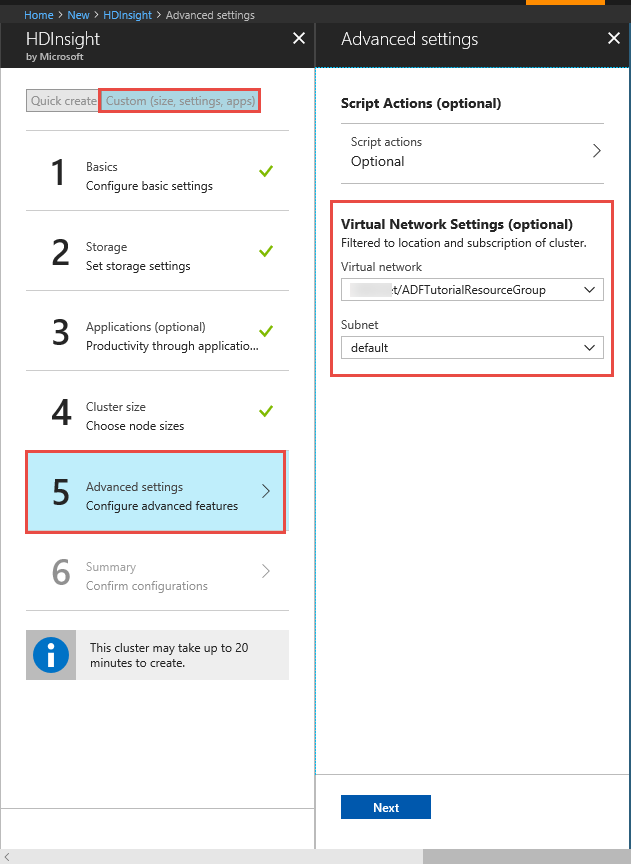

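HDInsight cluster. Create an HDInsight cluster and join it to the virtual network that you created in the previous step, as described in this article: Extend Azure HDInsight using an Azure Virtual Network. Here is a sample configuration of HDInsight in a virtual network.

Azure PowerShell. Follow the instructions in How to install and configure Azure PowerShell.

A virtual machine. Create an Azure virtual machine (VM) and join it to the same virtual network that contains your HDInsight cluster. For details, see How to create virtual machines.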

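Upload the Hive script to your Blob Storage account

Create a Hive SQL file named hivescript.hql with the following content:

    -- Recreate the external table at the location passed in as the Output parameter
    DROP TABLE IF EXISTS HiveSampleOut;
    CREATE EXTERNAL TABLE HiveSampleOut (clientid string, market string, devicemodel string, state string)
    ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '
    STORED AS TEXTFILE LOCATION '${hiveconf:Output}';

    -- Copy the selected columns from the sample table into the output table
    INSERT OVERWRITE TABLE HiveSampleOut
    SELECT clientid, market, devicemodel, state
    FROM hivesampletable;

In your Azure Blob Storage, create a container named adftutorial if it does not already exist.

Create a folder named hivescripts.

Upload the hivescript.hql file to the hivescripts subfolder.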

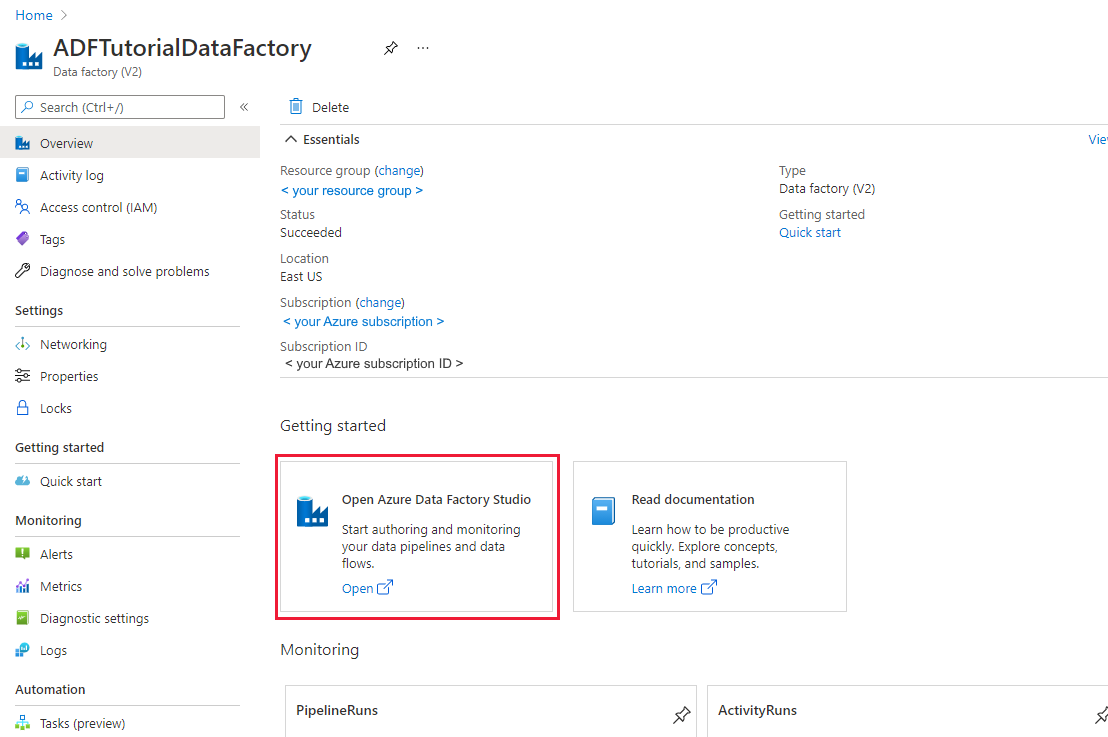
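The script takes its output location from the Hive configuration variable Output, which Hive substitutes textually for `${hiveconf:Output}` at run time. The following is a minimal local sketch of that substitution, useful for checking the final statement text before uploading. Hive performs the real substitution itself; the helper names `render_hiveconf` and `hiveconf_names` are hypothetical, and the storage account name in the usage line is a placeholder.

```python
import re

# The tutorial's Hive script, kept as a string for local inspection.
HIVE_SCRIPT = """\
DROP TABLE IF EXISTS HiveSampleOut;
CREATE EXTERNAL TABLE HiveSampleOut (clientid string, market string, devicemodel string, state string)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ' '
STORED AS TEXTFILE LOCATION '${hiveconf:Output}';
INSERT OVERWRITE TABLE HiveSampleOut
SELECT clientid, market, devicemodel, state FROM hivesampletable;
"""

# Matches ${hiveconf:NAME} and captures NAME.
_HIVECONF = re.compile(r"\$\{hiveconf:([A-Za-z_][A-Za-z0-9_.]*)\}")


def hiveconf_names(script):
    """Return the sorted, de-duplicated hiveconf variable names used in the script."""
    return sorted(set(_HIVECONF.findall(script)))


def render_hiveconf(script, defines):
    """Imitate Hive's textual substitution of ${hiveconf:NAME} placeholders.

    Raises KeyError if the script uses a name that has no value in `defines`.
    """
    def substitute(match):
        name = match.group(1)
        if name not in defines:
            raise KeyError(f"no value supplied for hiveconf variable {name!r}")
        return defines[name]

    return _HIVECONF.sub(substitute, script)


# Placeholder value; replace mystorageaccount with your own account name.
example_output = "wasbs://adftutorial@mystorageaccount.blob.core.chinacloudapi.cn/outputfolder/"
print(render_hiveconf(HIVE_SCRIPT, {"Output": example_output}))
```

Listing the variable names first makes it easy to confirm that every placeholder in the script has a matching parameter in the pipeline.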

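Create a data factory

If you have not created your data factory yet, follow the steps in Quickstart: Create a data factory by using the Azure portal and Azure Data Factory Studio to create one. After creating it, browse to the data factory in the Azure portal.

Select "Open" on the "Open Azure Data Factory Studio" tile to launch the Data Integration application in a separate tab.

Create a self-hosted integration runtime

Because the Hadoop cluster is inside a virtual network, you need to install a self-hosted integration runtime (IR) in the same virtual network. In this section, you create a new VM, join it to the same virtual network, and install the self-hosted IR on it. The self-hosted IR allows the Data Factory service to dispatch processing requests to a compute service, such as HDInsight, inside the virtual network. It also allows you to move data between data stores inside the virtual network and Azure. You also use a self-hosted IR when the data store or compute resource is in an on-premises environment.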

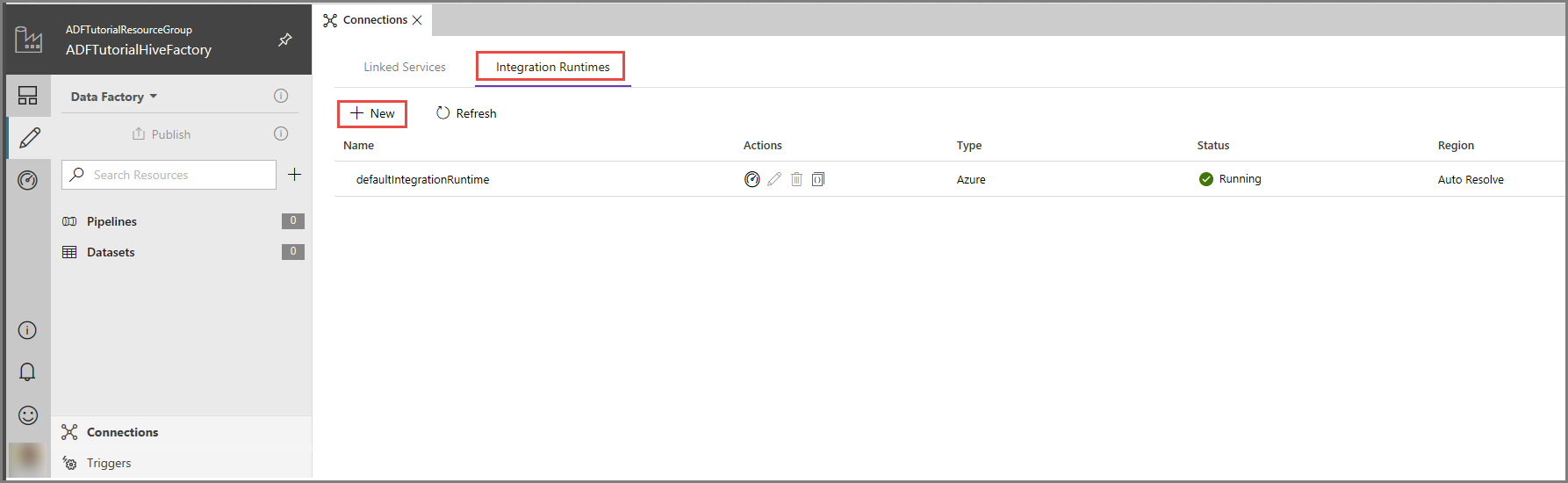

在 Azure 数据工厂 UI 中,单击窗口底部的“链接”,切换到“集成运行时”选项卡,然后单击工具栏上的“+ 新建”按钮。

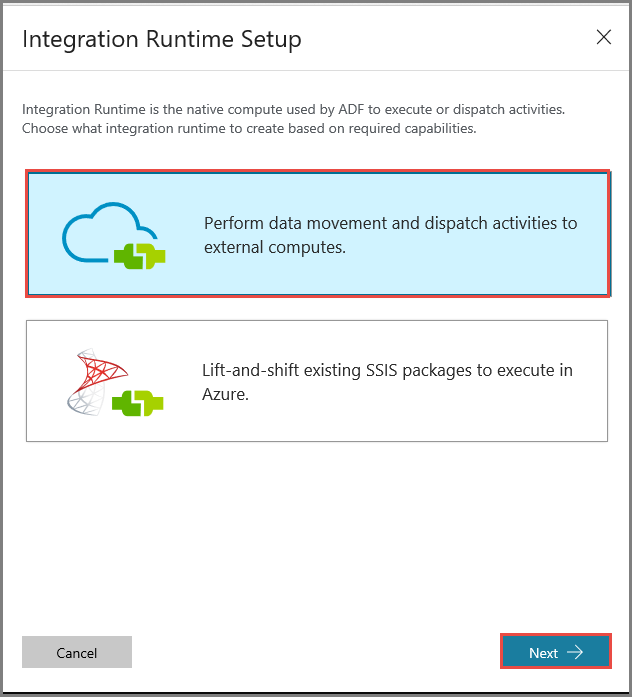

在“集成运行时安装”窗口中,选择“执行数据移动并将活动分发到外部计算”选项,然后单击“下一步”。

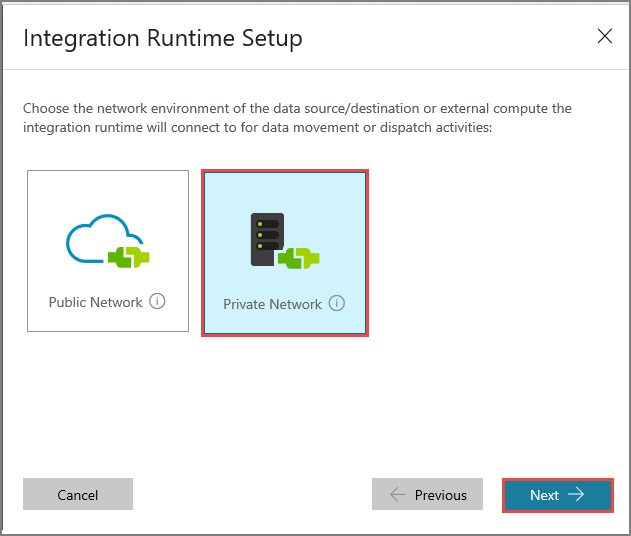

选择“专用网络”,单击“下一步”。

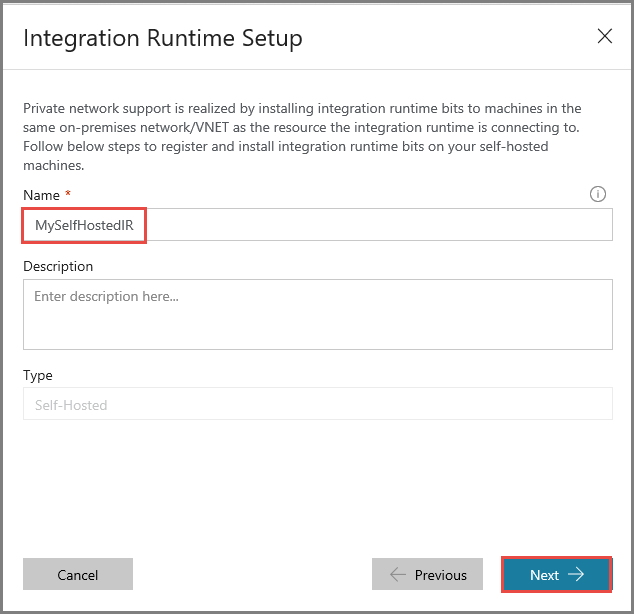

在“名称”中输入 MySelfHostedIR,然后单击“下一步”。

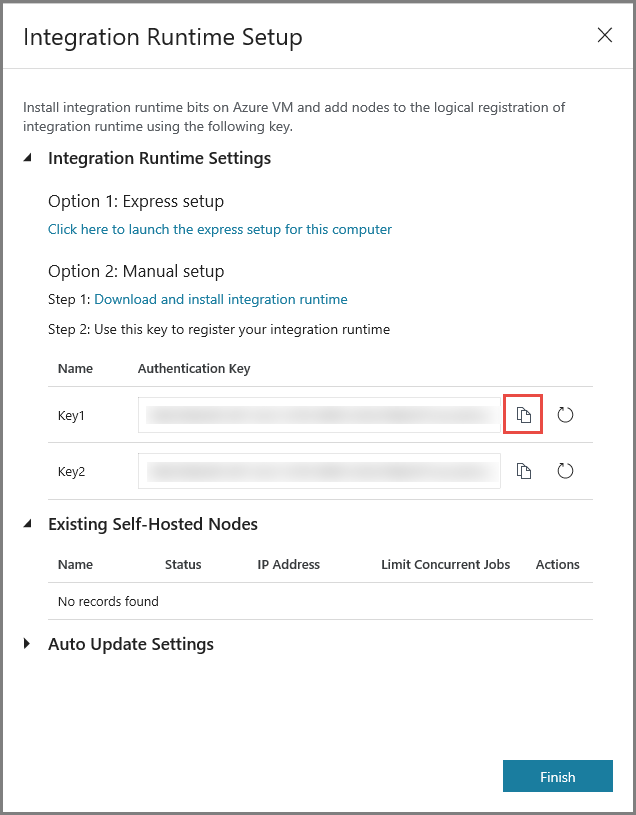

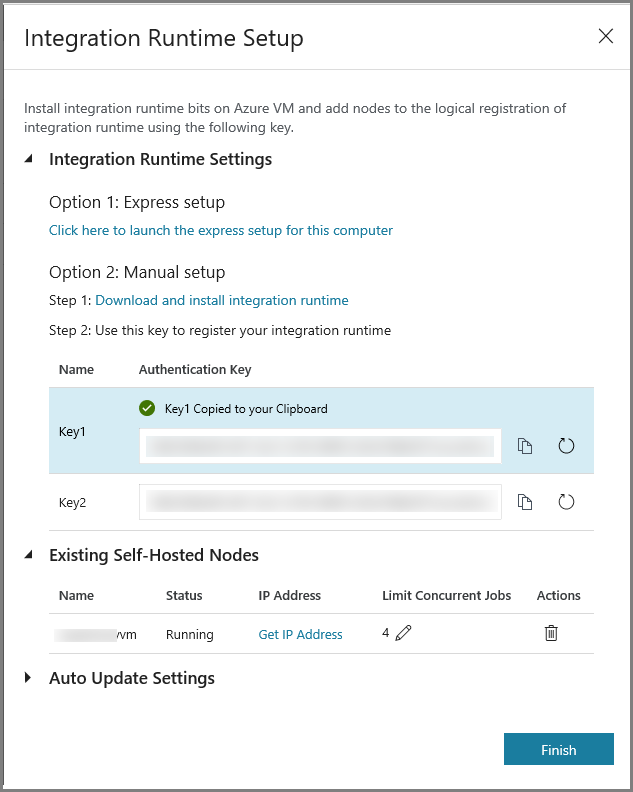

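Click the copy button to copy the authentication key for the integration runtime, and save it. Keep the window open. You use this key later to register the IR installed on the virtual machine.

Install the IR on a virtual machine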

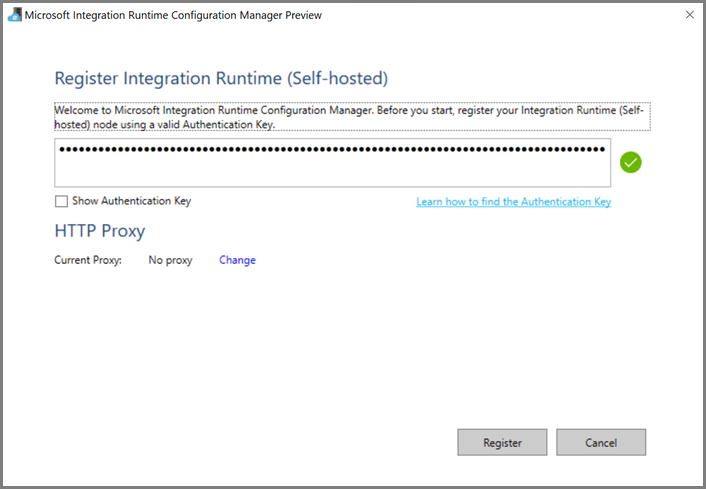

在该 Azure VM 上,下载自我托管的集成运行时。 使用上一步骤中获取的身份验证密钥手动注册自承载集成运行时。

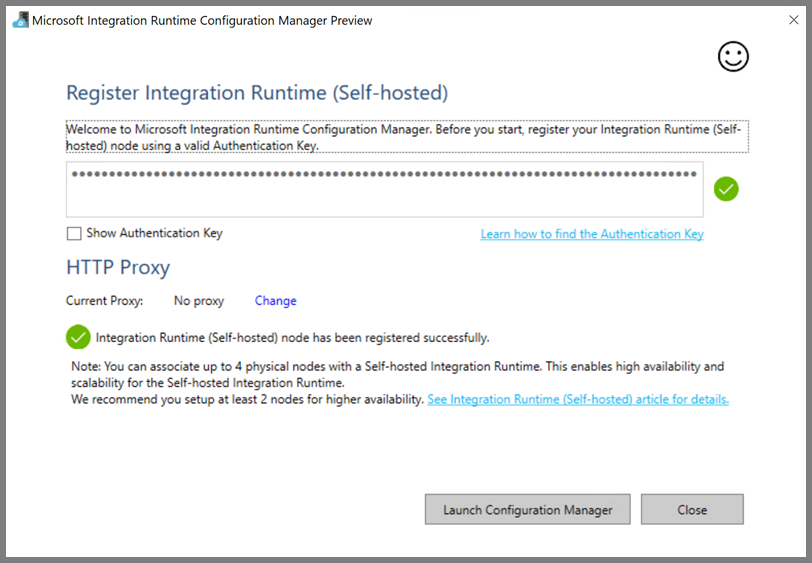

成功注册自承载集成运行时后,会看到以下消息:

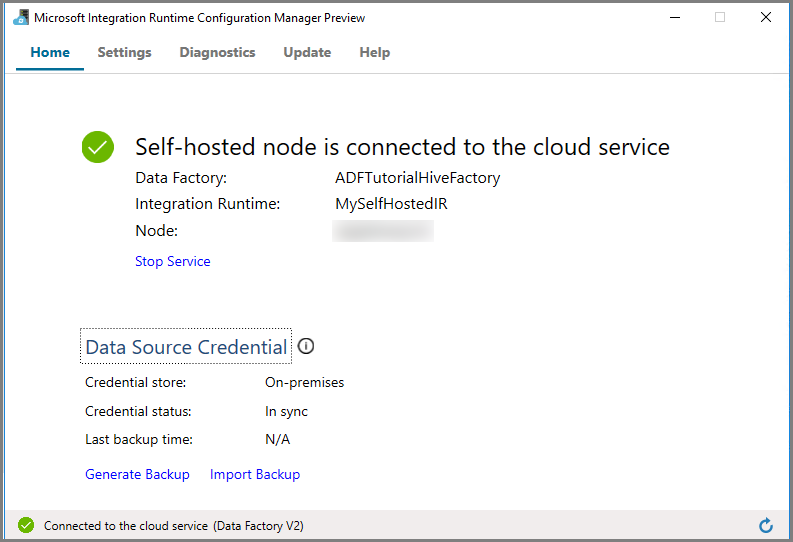

单击“启动配置管理器”。 将节点连接到云服务后,会看到以下页:

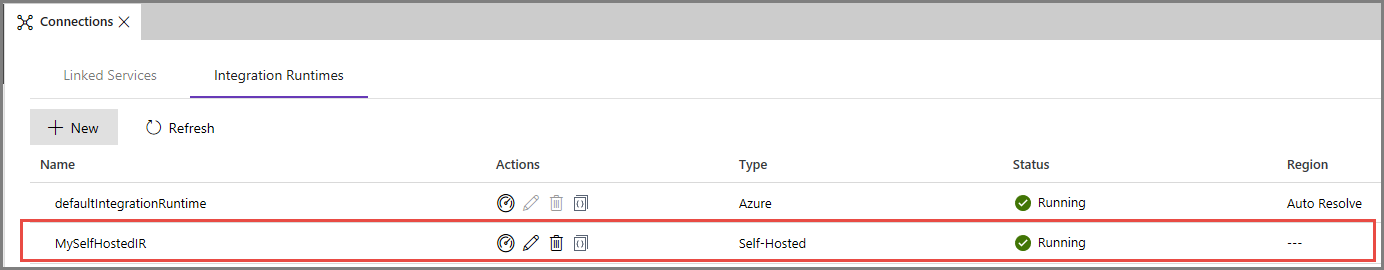

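Self-hosted IR in the Azure Data Factory UI

In the Azure Data Factory UI, you should see the name of the self-hosted VM and its status.

Click "Finish" to close the "Integration Runtime Setup" window. The self-hosted IR appears in the list of integration runtimes.

Create linked services

You author and deploy two linked services in this section:

- An Azure Storage linked service that links an Azure Storage account to the data factory. This storage is the primary storage used by your HDInsight cluster. In this case, you use this Azure Storage account to store the Hive script and the output of the script.

- An HDInsight linked service. Azure Data Factory submits the Hive script to this HDInsight cluster for execution.

Create the Azure Storage linked service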

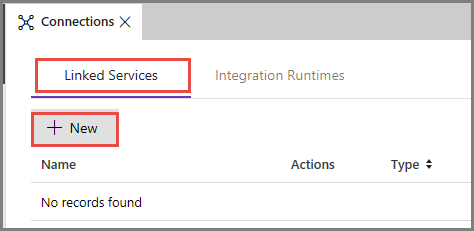

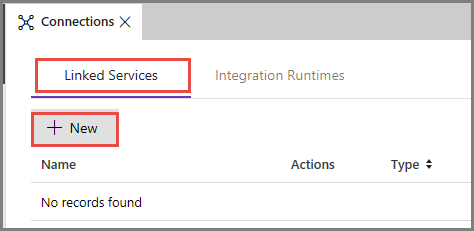

切换到“链接的服务”选项卡,单击“新建”。

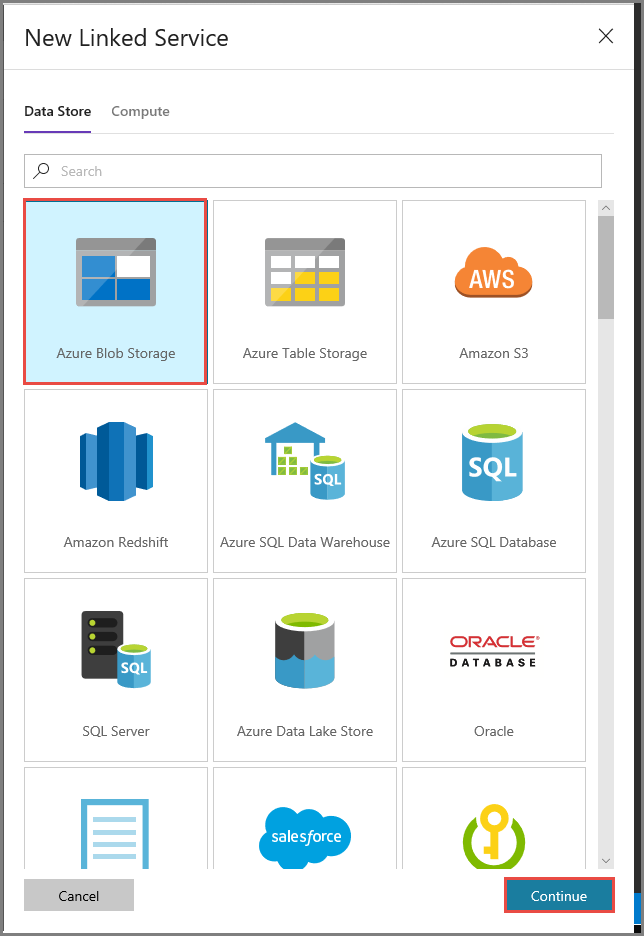

在“新建链接服务”窗口中,选择“Azure Blob 存储”,然后单击“继续”。

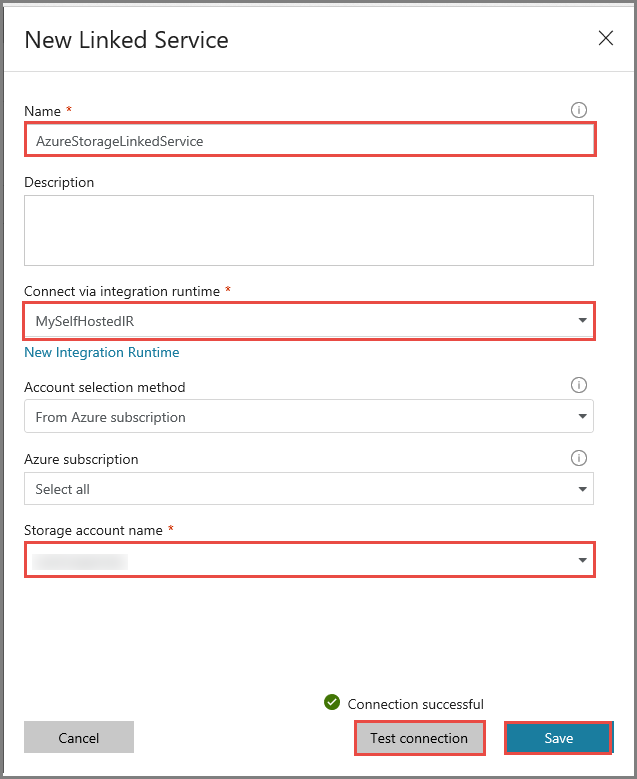

在“新建链接服务”窗口中执行以下步骤:

输入 AzureStorageLinkedService 作为名称。

为“通过集成运行时连接”选择“MySelfHostedIR”。

对于“存储帐户名称”,请选择自己的 Azure 存储帐户。

若要测试与存储帐户的连接,请单击“测试连接”。

单击“保存” 。
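For reference, the settings entered in the form above correspond roughly to a JSON definition like the one built below. This is a sketch under assumptions, not the exact payload the portal generates: the property names follow the linked-service JSON format as I understand it, the `storage_linked_service` helper is hypothetical, and the account name and key are placeholders. The `EndpointSuffix` default assumes the Azure China endpoints used elsewhere in this tutorial.

```python
import json


def storage_linked_service(name, account_name, account_key, ir_name,
                           endpoint_suffix="core.chinacloudapi.cn"):
    """Build a dict describing an Azure Blob Storage linked service.

    `ir_name` is the self-hosted integration runtime the service connects through.
    """
    connection_string = (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};AccountKey={account_key};"
        f"EndpointSuffix={endpoint_suffix}"
    )
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
            # Route connections through the self-hosted IR in the virtual network.
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


definition = storage_linked_service(
    name="AzureStorageLinkedService",
    account_name="mystorageaccount",    # placeholder
    account_key="<storageAccountKey>",  # placeholder, never commit real keys
    ir_name="MySelfHostedIR",
)
print(json.dumps(definition, indent=2))
```

Keeping the account key out of source control, for example by reading it from an environment variable, is advisable if you adapt this sketch.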

Create the HDInsight linked service

Click "New" again to create another linked service.

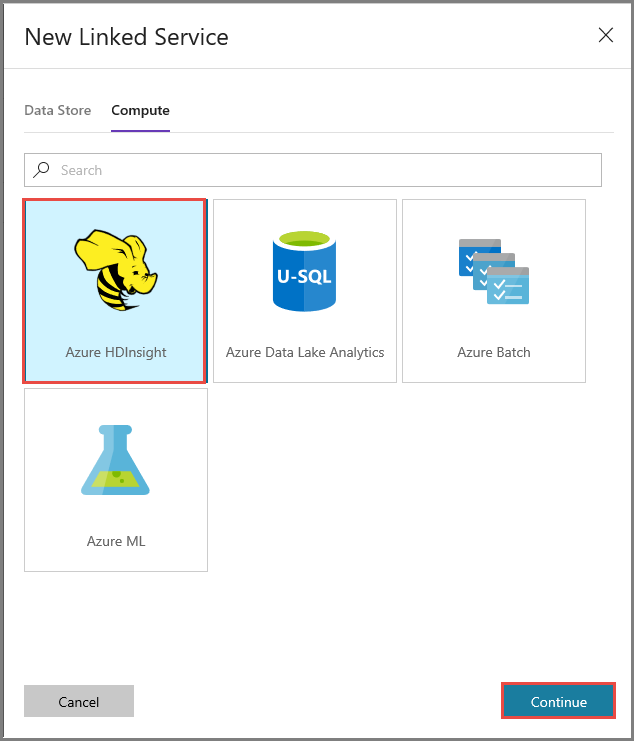

切换到“计算”选项卡,选择“Azure HDInsight”,然后单击“继续”。

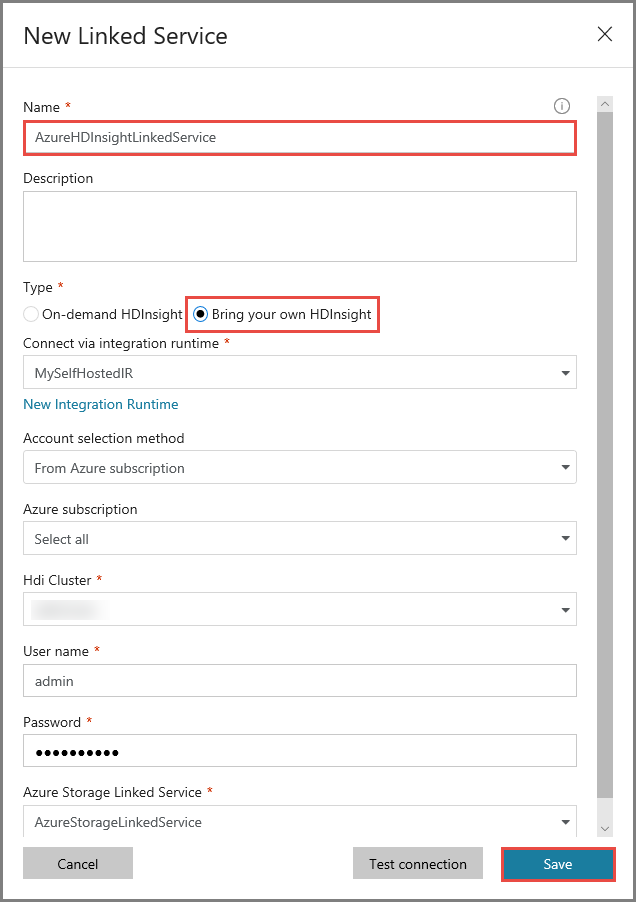

在“新建链接服务”窗口中执行以下步骤:

在“名称”中输入 AzureHDInsightLinkedService。

选择“自带 HDInsight”。

对于“HDI 群集”,请选择自己的 HDInsight 群集。

输入 HDInsight 群集的用户名。

输入该用户的密码。
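The fields above map roughly onto a JSON definition like the one built in this sketch. The property names reflect the HDInsight linked-service JSON format as I understand it, and the `connectVia` reference to the self-hosted IR is an assumption based on the IR's role described earlier in this tutorial. The `hdinsight_linked_service` helper is hypothetical, and the cluster name, user name, and password are placeholders.

```python
import json


def hdinsight_linked_service(name, cluster_name, user_name, password,
                             storage_linked_service_name, ir_name,
                             dns_suffix="azurehdinsight.cn"):
    """Build a dict describing a bring-your-own HDInsight linked service."""
    return {
        "name": name,
        "properties": {
            "type": "HDInsight",
            "typeProperties": {
                "clusterUri": f"https://{cluster_name}.{dns_suffix}",
                "userName": user_name,
                # SecureString marks the value as a secret in the definition.
                "password": {"type": "SecureString", "value": password},
                # Storage account the cluster uses as its primary storage.
                "linkedServiceName": {
                    "referenceName": storage_linked_service_name,
                    "type": "LinkedServiceReference",
                },
            },
            # Assumption: jobs are dispatched through the self-hosted IR.
            "connectVia": {
                "referenceName": ir_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


definition = hdinsight_linked_service(
    name="AzureHDInsightLinkedService",
    cluster_name="myHDIClusterName",  # placeholder
    user_name="<username>",           # placeholder
    password="<password>",            # placeholder
    storage_linked_service_name="AzureStorageLinkedService",
    ir_name="MySelfHostedIR",
)
print(json.dumps(definition, indent=2))
```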

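This article assumes that you can access the cluster over the internet, for example by connecting to it at https://clustername.azurehdinsight.cn. This address uses the public gateway, which is not available if you have used network security groups (NSGs) or user-defined routes (UDRs) to restrict access from the internet. For Data Factory to be able to submit jobs to an HDInsight cluster in an Azure virtual network, you need to configure the virtual network so that the URL resolves to the private IP address of the gateway used by HDInsight.

In the Azure portal, open the virtual network that HDInsight is in. Open the network interface whose name starts with nic-gateway-0. Note down its private IP address, for example 10.6.0.15.

If your Azure virtual network has a DNS server, update the DNS record so that the HDInsight cluster URL https://<clustername>.azurehdinsight.cn resolves to 10.6.0.15. If you don't have a DNS server in your Azure virtual network, you can temporarily work around this by editing the hosts file (C:\Windows\System32\drivers\etc) of every VM that is registered as a self-hosted integration runtime node and adding an entry similar to the following one: 10.6.0.15 myHDIClusterName.azurehdinsight.cn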
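The hosts-file workaround is easy to get wrong by a typo, so the following sketch formats the entry and offers a quick resolution check to run on the self-hosted IR VM afterwards. Both helpers, `hosts_entry` and `resolves_to`, are hypothetical names introduced here. The IP address and cluster name are the example values from the text, and the private-address check is an extra safeguard rather than a requirement stated in this tutorial.

```python
import ipaddress
import socket


def hosts_entry(private_ip, cluster_name, dns_suffix="azurehdinsight.cn"):
    """Return a hosts-file line mapping the cluster host name to the gateway's private IP.

    Raises ValueError if `private_ip` is not a valid private IP address.
    """
    ip = ipaddress.ip_address(private_ip)  # raises ValueError on malformed input
    if not ip.is_private:
        raise ValueError(f"{private_ip} is not a private address")
    return f"{ip} {cluster_name}.{dns_suffix}"


def resolves_to(hostname, expected_ip):
    """Return True if `hostname` currently resolves (IPv4) to `expected_ip` on this machine."""
    try:
        return socket.gethostbyname(hostname) == expected_ip
    except socket.gaierror:
        return False


# Line to append to C:\Windows\System32\drivers\etc\hosts on each IR node.
print(hosts_entry("10.6.0.15", "myHDIClusterName"))
```

After editing the hosts file, calling `resolves_to("myHDIClusterName.azurehdinsight.cn", "10.6.0.15")` on the IR node gives a quick confirmation that the mapping took effect.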

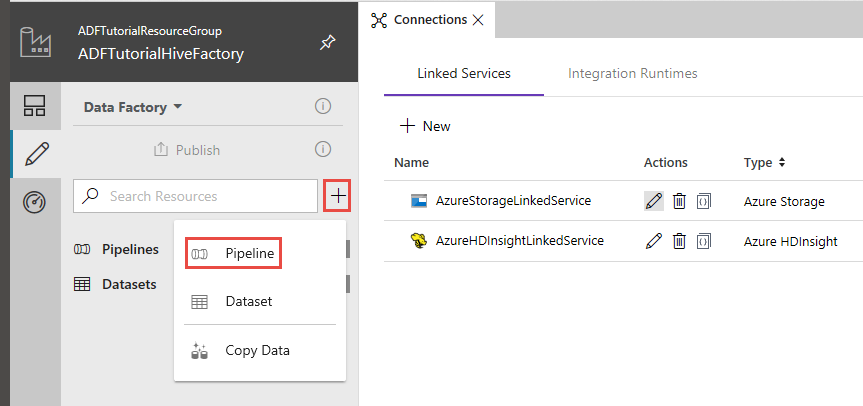

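Create a pipeline

In this step, you create a new pipeline with a Hive activity. The activity executes a Hive script to return data from a sample table and save it to a path that you define.

Note the following points:

- scriptPath points to the path of the Hive script in the Azure Storage account used for AzureStorageLinkedService. The path is case-sensitive.

- Output is a parameter used in the Hive script. Use the format wasbs://<Container>@<StorageAccount>.blob.core.chinacloudapi.cn/outputfolder/ to point to an existing folder in your Azure Storage. The path is case-sensitive.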
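Because both paths are case-sensitive, it can help to build them from their parts rather than typing them by hand. A minimal sketch, assuming the Azure China blob endpoint used in this tutorial; the `wasbs_url` and `script_path` helpers are hypothetical and the storage account name is a placeholder.

```python
def wasbs_url(container, storage_account, folder,
              endpoint="blob.core.chinacloudapi.cn"):
    """Build a wasbs:// folder URL of the form expected for the Output parameter.

    The case of each part is preserved, because the path is case-sensitive.
    """
    folder = folder.strip("/")
    return f"wasbs://{container}@{storage_account}.{endpoint}/{folder}/"


def script_path(container, folder, file_name):
    """Build the container-relative path of the Hive script, as used for scriptPath."""
    return "/".join(part.strip("/") for part in (container, folder, file_name))


print(wasbs_url("adftutorial", "mystorageaccount", "outputfolder"))
print(script_path("adftutorial", "hivescripts", "hivescript.hql"))
```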

在数据工厂 UI 中,单击左窗格中的“+”(加号),然后单击“管道”。

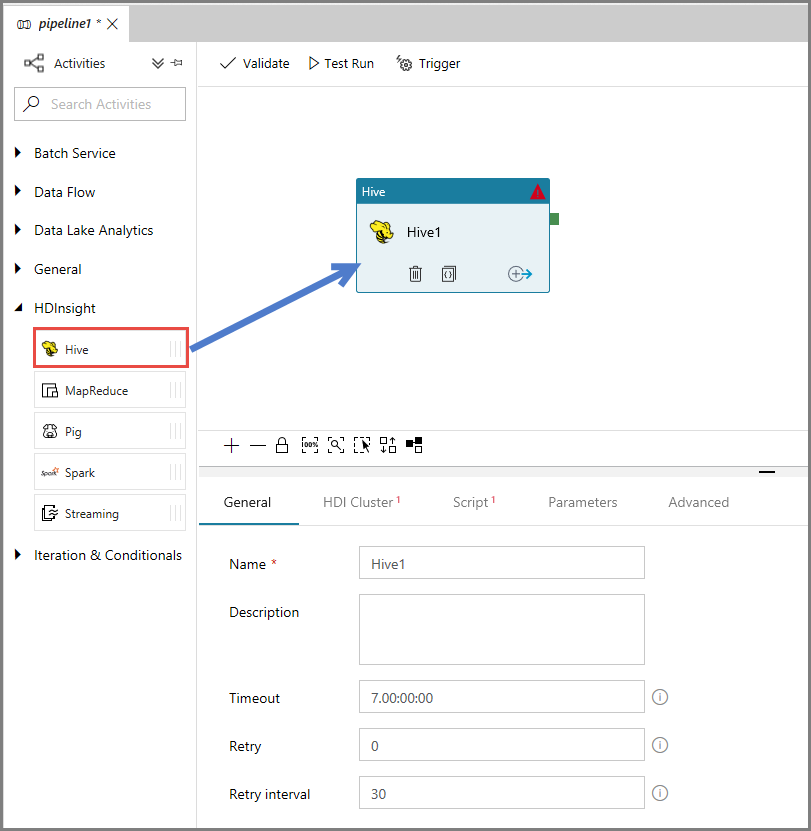

在“活动”工具箱中展开“HDInsight”,将“Hive”活动拖放到管道设计器图面。

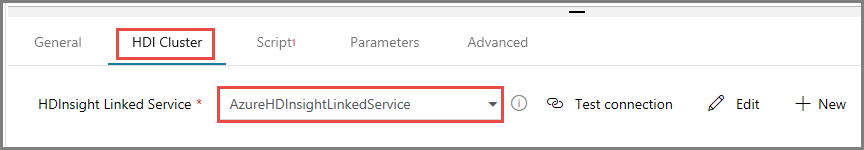

在属性窗口中切换到“HDI 群集”选项卡,然后为“HDInsight 链接服务”选择“AzureHDInsightLinkedService”。

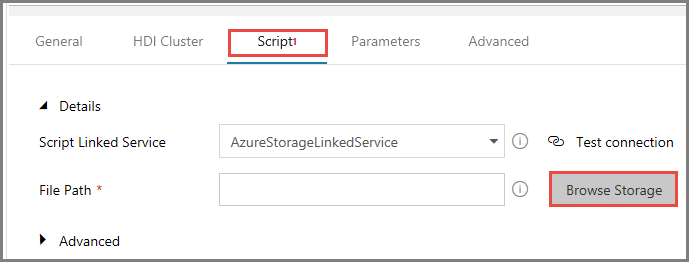

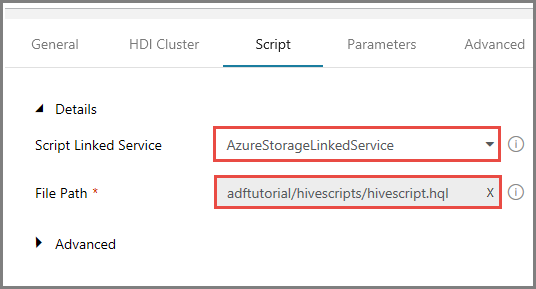

Switch to the "Script" tab, and do the following steps:

Select "AzureStorageLinkedService" for "Script Linked Service".

For "File Path", click "Browse Storage".

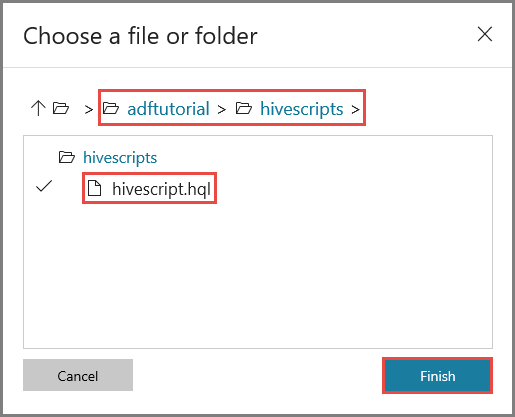

In the "Choose a file or folder" window, navigate to the hivescripts folder of the adftutorial container, select hivescript.hql, and click "Finish".

Confirm that "File Path" shows adftutorial/hivescripts/hivescript.hql.

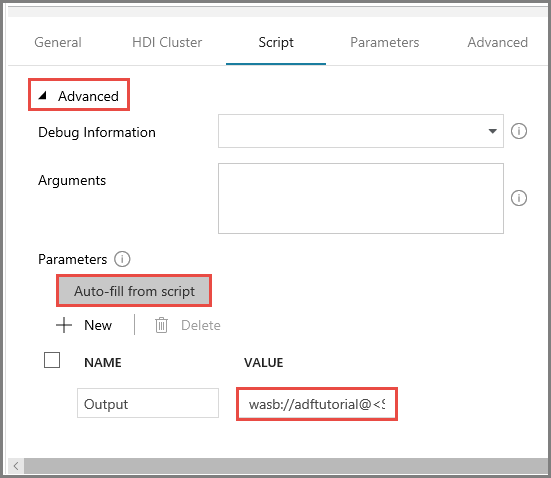

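In the "Script" tab, expand the "Advanced" section.

Click "Auto-fill from script" for "Parameters".

Enter the value for the "Output" parameter in the following format:

wasbs://<Blob Container>@<StorageAccount>.blob.core.chinacloudapi.cn/outputfolder/. For example: wasbs://adftutorial@mystorageaccount.blob.core.chinacloudapi.cn/outputfolder/.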

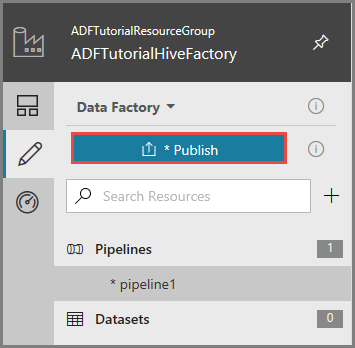
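Taken together, the settings chosen in the designer describe a pipeline with a single HDInsightHive activity. The sketch below assembles a JSON definition of that shape so the relationship between scriptPath, the script linked service, and the Output parameter is visible in one place. The property names follow the Hive activity JSON format as I understand it and may differ from what the portal generates. The `hive_pipeline` helper and the names HivePipeline and HiveActivity are hypothetical, and the storage account is a placeholder.

```python
import json


def hive_pipeline(pipeline_name, activity_name, hdinsight_linked_service,
                  script_linked_service, script_path, output_url):
    """Build a dict describing a pipeline with one HDInsightHive activity."""
    activity = {
        "name": activity_name,
        "type": "HDInsightHive",
        # Cluster that runs the script.
        "linkedServiceName": {
            "referenceName": hdinsight_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            # Case-sensitive path of the script inside the storage account.
            "scriptPath": script_path,
            "scriptLinkedService": {
                "referenceName": script_linked_service,
                "type": "LinkedServiceReference",
            },
            # Values passed to the script as ${hiveconf:NAME} variables.
            "defines": {"Output": output_url},
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [activity]}}


pipeline = hive_pipeline(
    pipeline_name="HivePipeline",  # hypothetical name
    activity_name="HiveActivity",  # hypothetical name
    hdinsight_linked_service="AzureHDInsightLinkedService",
    script_linked_service="AzureStorageLinkedService",
    script_path="adftutorial/hivescripts/hivescript.hql",
    output_url="wasbs://adftutorial@mystorageaccount.blob.core.chinacloudapi.cn/outputfolder/",
)
print(json.dumps(pipeline, indent=2))
```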

若要将项目发布到数据工厂,请单击“发布”。

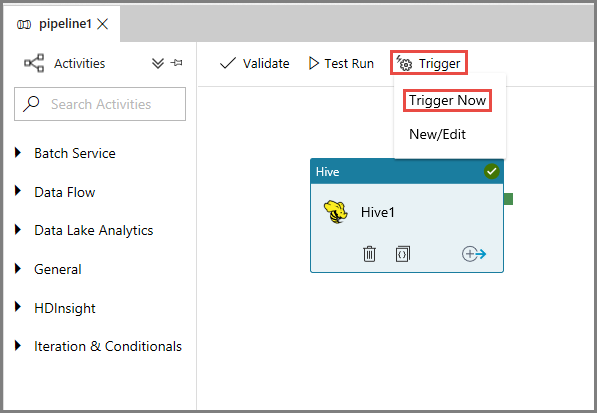

触发管道运行

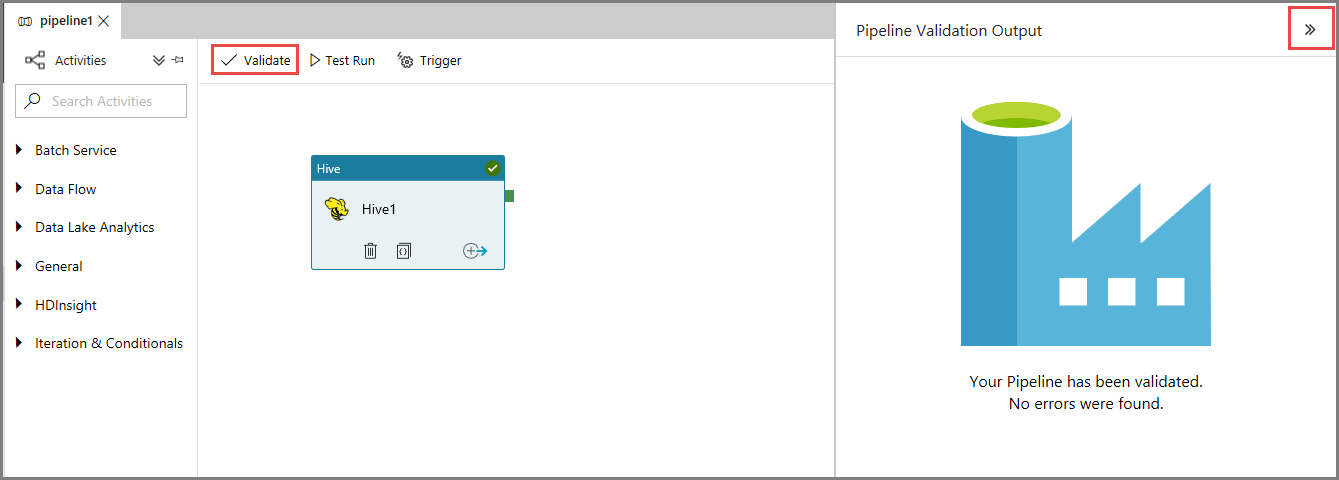

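First, validate the pipeline by clicking the "Validate" button on the toolbar. Click the right arrow (>>) to close the "Pipeline Validation Output" window.

To trigger a pipeline run, click "Trigger" on the toolbar, and click "Trigger Now".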

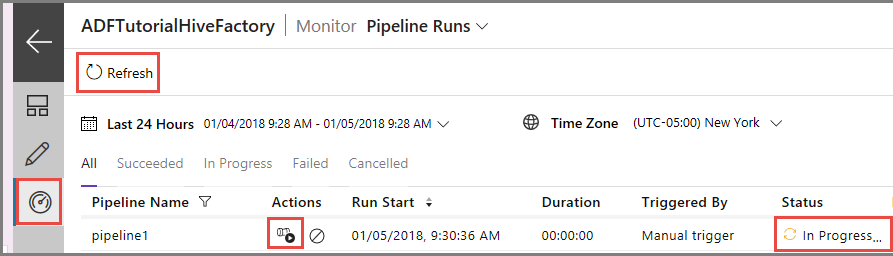

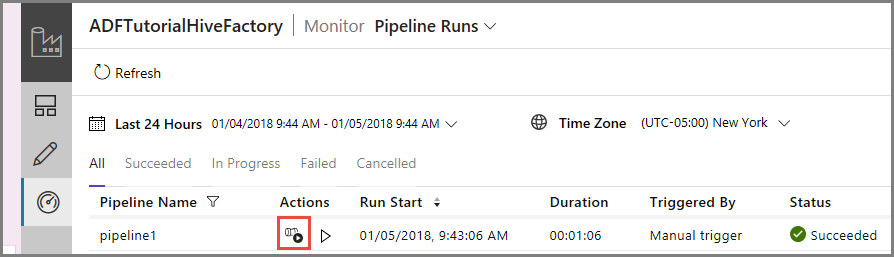

监视管道运行

在左侧切换到“监视”选项卡。 “管道运行”列表中会显示一个管道运行。

若要刷新列表,请单击“刷新”。

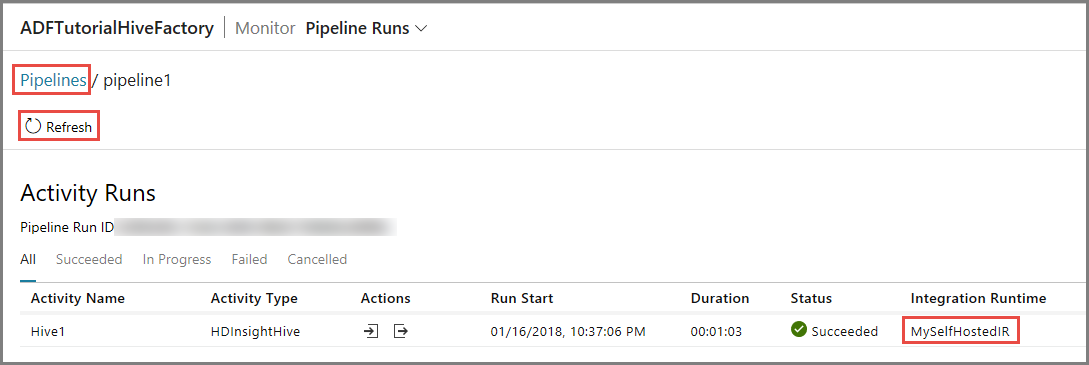

若要查看与管道运行相关联的活动运行,请单击“操作”列中的“查看活动运行”。 其他操作链接用于停止/重新运行管道。

只能看到一个活动运行,因为该管道中只包含一个 HDInsightHive 类型的活动。 若要切换回到上一视图,请单击顶部的“管道”链接。

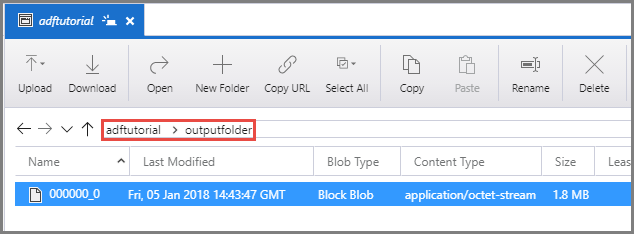
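Refreshing the run list by hand amounts to polling until the run reaches a terminal state. If you script the same check, the loop could look like the sketch below. It deliberately does not call any Azure API: `get_status` is a hypothetical caller-supplied function that returns the current run status as a string, and the status names ("Queued", "InProgress", "Succeeded", "Failed", "Cancelled") are my understanding of the values shown in the monitoring view, so treat them as assumptions.

```python
import time

# Assumed terminal states of a pipeline run.
TERMINAL_STATUSES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=30.0, max_polls=120, sleep=time.sleep):
    """Poll `get_status()` until it returns a terminal status, then return that status.

    Raises TimeoutError if no terminal status is seen within `max_polls` checks.
    `sleep` is injectable so the loop can be exercised without real waiting.
    """
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        sleep(poll_seconds)
    raise TimeoutError(f"run did not finish after {max_polls} status checks")


# Usage with a canned sequence of statuses standing in for a real status lookup.
fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
print(wait_for_run(lambda: next(fake_statuses), sleep=lambda seconds: None))
```

Bounding the number of polls keeps a stuck run from blocking the script indefinitely.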

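Confirm that you see an output file in the outputfolder of the adftutorial container.

Related content

You performed the following steps in this tutorial:

- Create a data factory.

- Create a self-hosted integration runtime.

- Create Azure Storage and Azure HDInsight linked services.

- Create a pipeline with a Hive activity.

- Trigger a pipeline run.

- Monitor the pipeline run.

- Verify the output.

Advance to the next tutorial to learn how to transform data by using a Spark cluster on Azure.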