Applies to: Azure Data Factory

This quickstart describes how to create a data factory by using either Azure Data Factory Studio or the Azure portal UI.

Note

If you're new to Azure Data Factory, read Introduction to Azure Data Factory before you try this quickstart.

Prerequisites

Azure subscription

If you don't have an Azure subscription, create a trial account before you begin.

Azure roles

To learn about the Azure role requirements for creating a data factory, see Azure roles requirements.

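Create a data factory

The quick creation experience in Azure Data Factory Studio lets you create a data factory within seconds. More advanced creation options are available in the Azure portal.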

Quick creation in Azure Data Factory Studio

Launch the Microsoft Edge or Google Chrome web browser. Currently, the Data Factory UI is supported only in Microsoft Edge and Google Chrome.

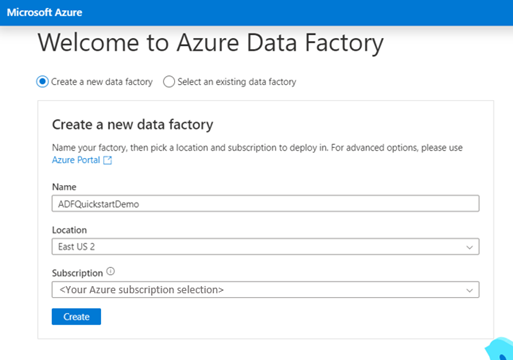

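Go to Azure Data Factory Studio and select the "Create a new data factory" radio button.

You can create the factory immediately with the default values, or enter a unique name and choose the location and subscription to use for the new data factory.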

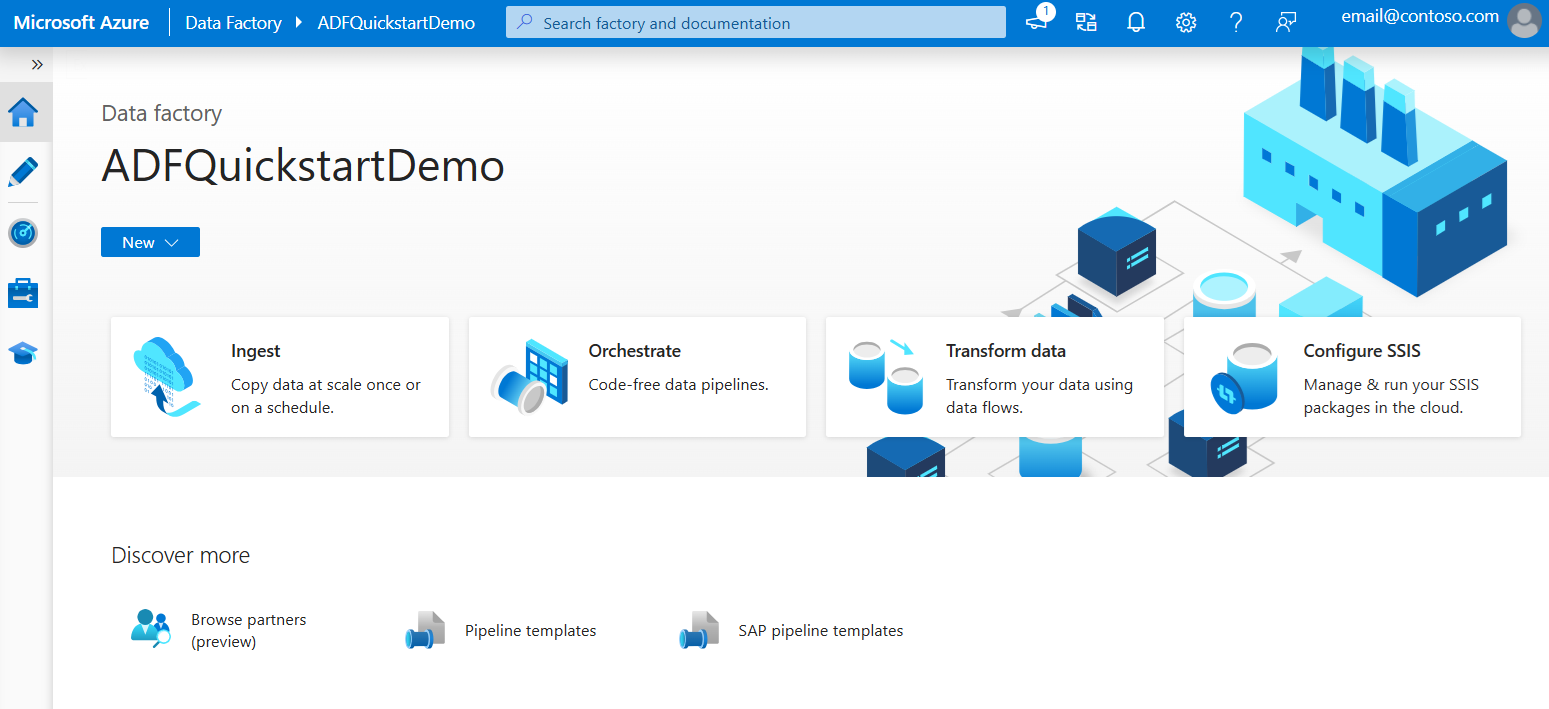

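After the factory is created, you're taken directly to the Azure Data Factory Studio home page.

Advanced creation in the Azure portal

Launch the Microsoft Edge or Google Chrome web browser. Currently, the Data Factory UI is supported only in Microsoft Edge and Google Chrome.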

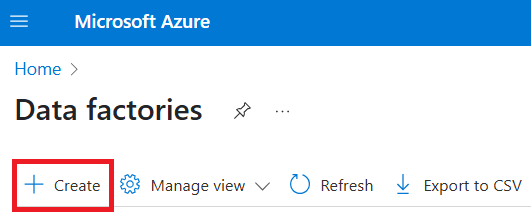

Go to the Data factories page in the Azure portal.

After you sign in to the Data factories page of the Azure portal, select "Create".

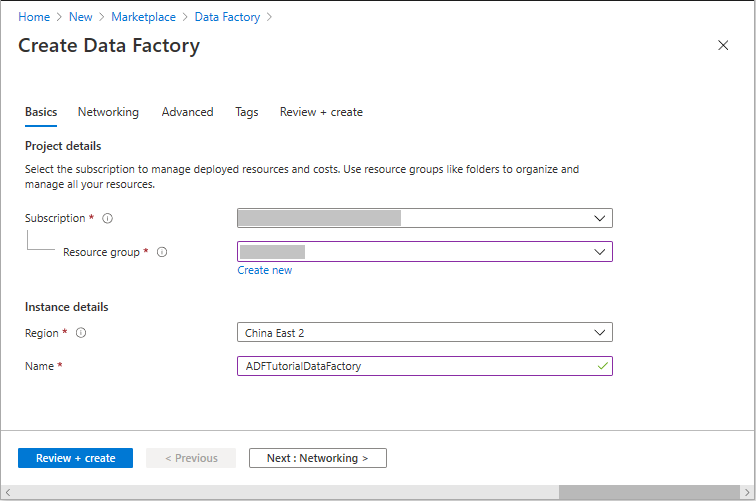

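For "Resource group", do one of the following:

Select an existing resource group from the drop-down list.

Select "Create new", and enter a name for the new resource group.

To learn about resource groups, see Use resource groups to manage your Azure resources.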

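For "Region", select the location for the data factory.

The list shows only the locations that Data Factory supports, which is also where your Azure Data Factory metadata will be stored. The associated data stores (such as Azure Storage and Azure SQL Database) and computes (such as Azure HDInsight) that the data factory uses can run in other regions.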

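For "Name", enter ADFTutorialDataFactory.

The name of an Azure data factory must be globally unique. If you see the following error, change the name of the data factory (for example, to <yourname>ADFTutorialDataFactory) and try creating it again. For the naming rules for Data Factory artifacts, see the Data Factory - naming rules article.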

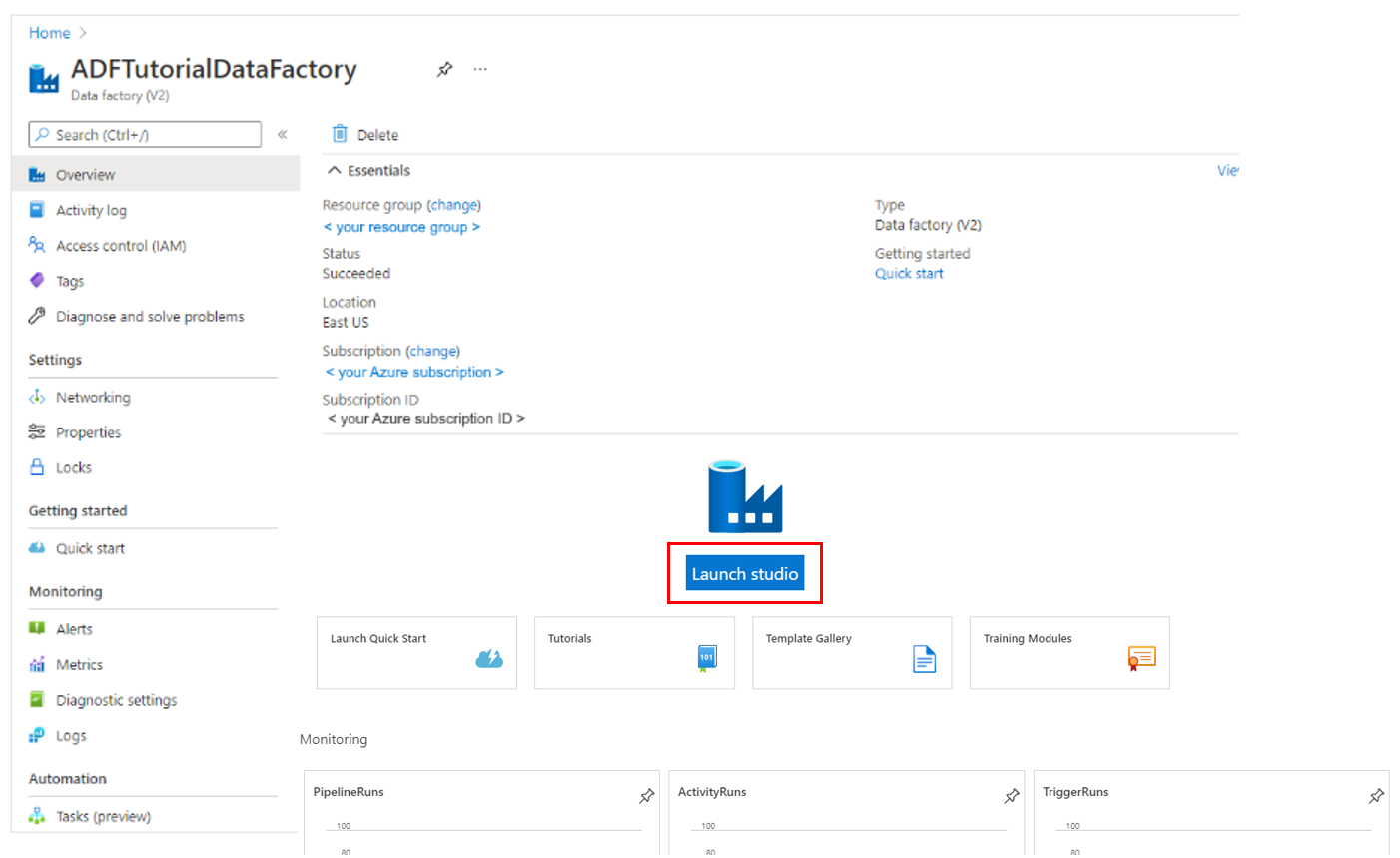
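Azure enforces the naming rules when you select "Review + create", but a quick local pre-check can catch obvious mistakes earlier, for example in a provisioning script. The following Python function is a minimal sketch that assumes these rules (paraphrased from the Data Factory - naming rules article as we understand it; verify against the current article): 3 to 63 characters; only letters, digits, and hyphens; starts and ends with a letter or digit; no consecutive hyphens. The names `check_factory_name` and `suggest_name` are hypothetical helpers, not part of any Azure SDK, and global uniqueness cannot be checked offline.

```python
import re
from typing import List

# ASSUMPTION: rule set paraphrased from the Data Factory naming-rules article;
# confirm the exact limits in the current documentation.
MIN_LEN, MAX_LEN = 3, 63
_ALLOWED = re.compile(r"[A-Za-z0-9-]+")


def check_factory_name(name: str) -> List[str]:
    """Return the local rule violations for a proposed data factory name.

    An empty list means the name passes these checks. It does NOT mean the
    name is available: only Azure can confirm global uniqueness.
    """
    problems = []
    if not MIN_LEN <= len(name) <= MAX_LEN:
        problems.append(f"length must be {MIN_LEN} to {MAX_LEN} characters")
    if not _ALLOWED.fullmatch(name):
        problems.append("only letters, digits, and '-' are allowed")
    # A hyphen must be preceded and followed by a letter or digit, so it
    # cannot appear first, last, or twice in a row.
    if name[:1] == "-" or name[-1:] == "-":
        problems.append("must start and end with a letter or digit")
    if "--" in name:
        problems.append("consecutive '-' characters are not allowed")
    return problems


def suggest_name(prefix: str, base: str = "ADFTutorialDataFactory") -> str:
    # Hypothetical helper mirroring the quickstart's advice:
    # prepend your own name, e.g. <yourname>ADFTutorialDataFactory.
    return f"{prefix}{base}"
```

For example, `check_factory_name("my--factory")` reports the consecutive-hyphen violation, while `check_factory_name(suggest_name("jdoe"))` returns an empty list. Returning a list of messages rather than a single boolean lets a script show every problem at once.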

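Select "Review + create", and then select "Create" after validation passes. When creation is complete, select "Go to resource" to open the Data Factory page.

Select "Launch studio" to open Azure Data Factory Studio, which starts the Azure Data Factory user interface (UI) application in a separate browser tab.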

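Note

If the web browser gets stuck at "Authorizing", clear the "Block third-party cookies and site data" check box. Alternatively, keep it selected, create an exception for login.partner.microsoftonline.cn, and then try to open the app again.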

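Related content

Learn how to use Azure Data Factory to copy data from one location to another by following the Hello World - How to copy data tutorial. Learn how to create a data flow with Azure Data Factory.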