Applies to: Azure CLI ml extension v2 (current), Python SDK azure-ai-ml v2 (current)

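This article describes how to troubleshoot and resolve common Azure Machine Learning online endpoint deployment and scoring issues.

The document is structured to help you start troubleshooting:

The HTTP status codes section explains how invocation and prediction errors map to HTTP status codes when you score endpoints with REST requests.

Prerequisites

- An active Azure subscription with the free or paid version of Azure Machine Learning. Get a trial Azure subscription.

- An Azure Machine Learning workspace.

- The Azure CLI and Azure Machine Learning CLI v2. See Install, set up, and use the CLI (v2).

Request tracing

There are two supported tracing headers:

- x-request-id is reserved for server tracing. Azure Machine Learning overrides this header to ensure it's a valid GUID. When you create a support ticket for a failed request, attach the failed request ID to expedite the investigation. Alternatively, provide the name of the region and the endpoint name.

- x-ms-client-request-id is available for client tracing scenarios. This header accepts only alphanumeric characters, hyphens, and underscores, and is truncated to a maximum of 40 characters.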
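The following is a minimal client-side sketch for preparing this header. It assumes that "alphanumeric" means ASCII letters and digits. The helper names make_client_request_id and tracing_headers are hypothetical. Only the header name, the allowed characters, and the 40-character limit come from the text above.

```python
import re
import uuid

def make_client_request_id(label: str) -> str:
    """Sanitize a string for use as an x-ms-client-request-id value.

    Characters other than ASCII letters, digits, hyphens, and underscores
    become underscores, and the result is cut to 40 characters, mirroring
    the constraints described above (ASCII-only is an assumption).
    """
    cleaned = re.sub(r"[^A-Za-z0-9_-]", "_", label)
    return cleaned[:40]

def tracing_headers(label: str) -> dict:
    """Build a per-request client tracing header (hypothetical helper).

    A random hex suffix keeps values unique. Because of the 40-character
    cap, keep the label short so the suffix isn't truncated away.
    """
    return {"x-ms-client-request-id": make_client_request_id(f"{label}-{uuid.uuid4().hex}")}
```

Log the value you send. You can then correlate it with your own logs when you open a support ticket, alongside the server-assigned x-request-id.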

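Local deployment

Local deployment deploys a model to a local Docker environment. Local deployment supports creating, updating, and deleting a local endpoint, and lets you invoke and get logs from the endpoint. Local deployment is useful for testing and debugging before you deploy to the cloud.

Tip

You can also use the Azure Machine Learning inference HTTP server Python package to debug your scoring script locally. Debugging with the inference server lets you debug the scoring script before you deploy to a local endpoint, without being affected by the deployment container configuration.

You can deploy locally by using the Azure CLI or the Python SDK. Azure Machine Learning studio doesn't support local deployments or local endpoints.

To use local deployment, add --local to the appropriate command.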

az ml online-deployment create --endpoint-name <endpoint-name> -n <deployment-name> -f <spec_file.yaml> --local

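Local deployment involves the following steps:

- Docker either builds a new container image or pulls an existing image from the local Docker cache. Docker uses an existing image if one matches the environment part of the specification file.

- Docker starts a new container with mounted local artifacts such as model and code files.

For more information, see Deploy and debug locally by using a local endpoint.

Tip

You can use Visual Studio Code to test and debug endpoints locally. For more information, see Debug online endpoints locally in Visual Studio Code.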

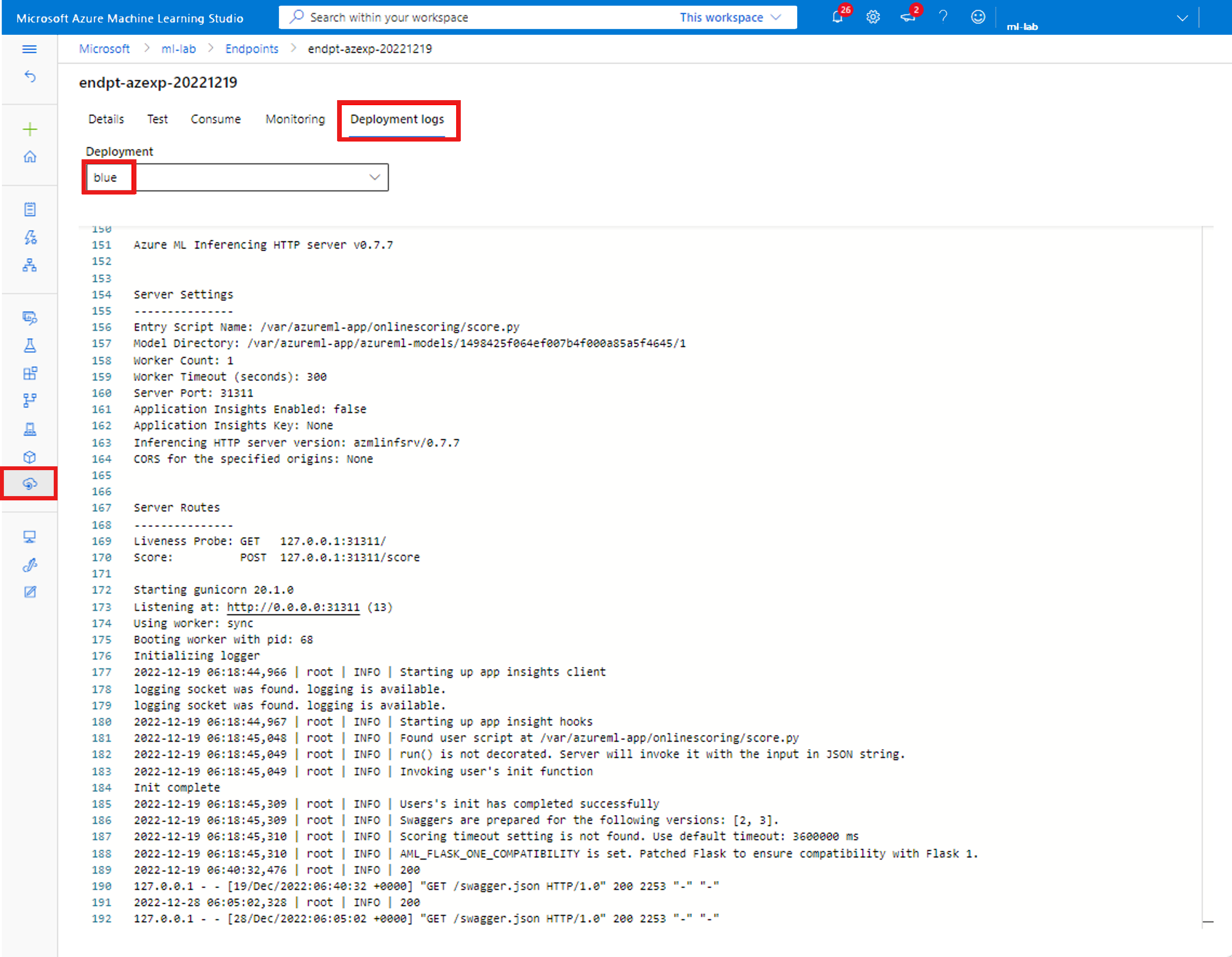

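Get container logs

You can't get direct access to the virtual machine (VM) where a model is deployed, but you can get logs from some of the containers that run on the VM. The amount of information you get depends on the provisioning status of the deployment. If the specified container is up and running, you see its console output. Otherwise, you get a message to try again later.

You can get logs from the following types of containers:

- The inference server console log contains the output of print and logging functions from your scoring script (score.py) code.

- The storage initializer logs contain information on whether code and model data were successfully downloaded to the container. This container runs before the inference server container starts to run.

For Kubernetes online endpoints, administrators can directly access the cluster where the model is deployed and check the logs in Kubernetes. For example:

kubectl -n <compute-namespace> logs <container-name>

Note

If you use Python logging, make sure to use the correct logging level, such as INFO, for the messages you want published to the logs.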
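The following hypothetical score.py illustrates the note. It sets the INFO level so that logger.info() calls reach the inference server console log. The doubling "model" and the "data" key in the request body are placeholders. The only part of the Azure Machine Learning contract it relies on is the init() and run() entry points.

```python
import json
import logging

# basicConfig only takes effect if the root logger has no handlers yet;
# either way, the level decides which messages are emitted.
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)

model = None

def init():
    """Runs once when the container starts."""
    global model
    # Placeholder model that doubles each input value; replace with real loading code.
    model = lambda values: [v * 2 for v in values]
    logger.info("init() finished loading the model")

def run(raw_data):
    """Runs for every scoring request; raw_data is the request body as a string."""
    payload = json.loads(raw_data)
    logger.info("run() received %d input rows", len(payload["data"]))
    # A logger.debug(...) call here would be dropped because the level is INFO.
    return model(payload["data"])
```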

View log output from containers

To see log output from a container, use the following command:

az ml online-deployment get-logs -g <resource-group> -w <workspace-name> -e <endpoint-name> -n <deployment-name> -l 100

Or

az ml online-deployment get-logs --resource-group <resource-group> --workspace-name <workspace-name> --endpoint-name <endpoint-name> --name <deployment-name> --lines 100

By default, logs are pulled from the inference server. You can get logs from the storage initializer container by passing --container storage-initializer.

The preceding commands include --resource-group and --workspace-name. You can also set these parameters globally by using az configure, so you don't have to repeat them in each command. For example:

az configure --defaults group=<resource-group> workspace=<workspace-name>

To check your current configuration settings, run:

az configure --list-defaults

Add --help or --debug to a command to see more information.

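Common deployment errors

The deployment operation status can report the following common deployment errors:

Common to both managed online endpoints and Kubernetes online endpoints:

- Subscription doesn't exist

- Startup task failed due to authorization error

- Startup task failed due to incorrect role assignments on resource

- Startup task failed due to incorrect role assignments on storage account when MDC is enabled

- Invalid template function specification

- Unable to download user container image

- Unable to download user model

Limited to Kubernetes online endpoints:

If you're creating or updating a Kubernetes online deployment, also see Common errors specific to Kubernetes deployments.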

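ERROR: ImageBuildFailure

This error is returned when the Docker image environment is being built. You can check the build log for more information on the failure. The build log is located in the default storage for your Azure Machine Learning workspace.

The exact location might be returned as part of the error, for example, "the build log under the storage account '[storage-account-name]' in the container '[container-name]' at the path '[path-to-the-log]'".

The following sections describe common image build failure scenarios:

Image build compute not set in a private workspace with a virtual network

If the error message mentions "failed to communicate with the workspace's container registry", you're using a virtual network, and the workspace's container registry is private and configured with a private endpoint, you need to allow the container registry to build images in the virtual network.

Image build timeout

Image build timeouts usually occur because an image becomes too large to finish building within the timeframe of deployment creation. Check the image build logs at the location that the error specifies. The logs are cut off at the point where the image build timed out.

To resolve this issue, build your image separately so that the image only needs to be pulled during deployment creation. If you have ImageBuild timeouts, also review the default probe settings.

Generic image build failure

Review the build log for more information on the failure. If no obvious error is found in the build log and the last line is Installing pip dependencies: ...working..., a dependency might cause the error. Pinning version dependencies in your conda file can fix this problem.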
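To find candidates for pinning, a small check like the following can flag dependency strings that lack an exact version. This sketch assumes you already extracted the conda and pip dependency entries from conda.yml into a flat list of strings, for example with a YAML parser. It treats only name=version and name==version as pinned, so ranges such as >= or ~= are reported.

```python
import re

# An entry counts as pinned if it is "name=version" (conda style) or
# "name==version" (pip style). Ranges such as ">=" or "~=" are not exact pins,
# and conda build strings ("name=version=build") aren't handled.
_PINNED = re.compile(r"^[A-Za-z0-9_.\-\[\]]+\s*==?\s*[A-Za-z0-9_.*+!\-]+$")

def unpinned(dependencies):
    """Return the dependency strings that don't carry an exact version pin."""
    return [d for d in dependencies if not _PINNED.match(d.strip())]
```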

Try deploying locally to test and debug your models before you deploy to the cloud.

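ERROR: OutOfQuota

The following resources might run out of quota when you use Azure services:

For Kubernetes online endpoints only, the Kubernetes resource might also run out of quota.

CPU quota

You need enough compute quota to deploy a model. The CPU quota defines how many virtual cores are available per subscription, per workspace, per SKU, and per region. Each deployment subtracts from the available quota, and deleting a deployment adds the quota back, based on the type of the SKU.

You can check whether there are unused deployments that you can delete, or you can submit a request for a quota increase.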
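The quota arithmetic itself is simple: a deployment consumes roughly the vCPU count of its SKU multiplied by its instance count. This sketch assumes you look up the SKU's vCPU count and your current quota usage yourself. For example, Standard_F4s_v2 has 4 vCPUs. The sketch ignores any extra headroom the service might reserve, so treat the result as a lower bound.

```python
def required_vcpus(vcpus_per_instance: int, instance_count: int) -> int:
    """vCPUs a deployment consumes: SKU vCPUs times the number of instances."""
    return vcpus_per_instance * instance_count

def fits_quota(vcpus_per_instance: int, instance_count: int,
               quota_limit: int, quota_in_use: int) -> bool:
    """True if the deployment's vCPUs fit in the quota that's still free."""
    return required_vcpus(vcpus_per_instance, instance_count) <= quota_limit - quota_in_use

# Example: 3 instances of a 4-vCPU SKU need 12 vCPUs, more than the 10 left out of 20.
print(fits_quota(4, 3, quota_limit=20, quota_in_use=10))  # False
```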

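Cluster quota

The OutOfQuota error occurs when you don't have enough Azure Machine Learning compute cluster quota. This quota defines the total number of clusters per subscription that you can use at the same time to deploy CPU or GPU nodes in the Azure cloud.

Disk quota

The OutOfQuota error occurs when the size of the model is larger than the available disk space and the model can't be downloaded. Try using a SKU with more disk space, or reduce the image and model size.

Memory quota

The OutOfQuota error occurs when the memory footprint of the model is larger than the available memory. Try a SKU with more memory.

Role assignment quota

When you create a managed online endpoint, role assignment is required for the managed identity to access workspace resources. If you hit the role assignment limit, try to delete some unused role assignments in this subscription. You can check all role assignments by selecting Access control for your Azure subscription in the Azure portal.

Endpoint quota

Try to delete some unused endpoints in this subscription. If all your endpoints are actively in use, try requesting an endpoint limit increase. To learn more about the endpoint limit, see Endpoint quota with Azure Machine Learning online endpoints and batch endpoints.

Kubernetes quota

The OutOfQuota error occurs when the requested CPU or memory can't be provided because nodes are unschedulable for this deployment. For example, nodes might be cordoned or otherwise unavailable.

The error message typically indicates insufficient resources in the cluster, for example OutOfQuota: Kubernetes unschedulable. Details:0/1 nodes are available: 1 Too many pods.... This message means that there are too many pods in the cluster and not enough resources to deploy the new model based on your request.

Try the following mitigations to address this issue:

- IT operators who maintain the Kubernetes cluster can try to add more nodes or clear some unused pods in the cluster to release resources.

- Machine learning engineers who deploy models can try to reduce the resource request of the deployment:

  - If you directly define the resource request in the deployment configuration via the resources section, try to reduce the resource request.

  - If you use instance_type to define resources for the model deployment, contact the IT operator to adjust the instance type resource configuration. For more information, see Create and manage instance types.

Region-wide VM capacity

Due to a lack of Azure Machine Learning capacity in the region, the service failed to provision the specified VM size. Retry later or try deploying to a different region.

Other quota

To run the score.py file you provide as part of the deployment, Azure creates a container that includes all the resources that score.py needs. Azure Machine Learning then runs the scoring script on that container. If your container can't start, scoring can't happen. The container might be requesting more resources than the instance_type can support. Consider updating the instance_type of the online deployment.

To get the exact reason for the error, take the following action.

Run the following command:

az ml online-deployment get-logs -e <endpoint-name> -n <deployment-name> -l 100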

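ERROR: BadArgument

You might get this error when you use either managed online endpoints or Kubernetes online endpoints, for the following reasons:

You might get this error only when you use Kubernetes online endpoints, for the following reasons:

Subscription doesn't exist

The referenced Azure subscription must exist and be active. This error occurs when Azure can't find the subscription ID you entered. The error might be due to a typo in the subscription ID. Double-check that the subscription ID was entered correctly and is currently active.

Authorization error

After the compute resource is provisioned during deployment creation, Azure pulls the user container image from the workspace container registry and mounts the user model and code artifacts into the user container from the workspace storage account. Azure uses managed identities to access the storage account and the container registry.

If you created the associated endpoint with a user-assigned identity, the user's managed identity must have the Storage Blob Data Reader permission on the workspace storage account and the AcrPull permission on the workspace container registry. Make sure your user-assigned identity has the right permissions.

When MDC is enabled, the user's managed identity must have the Storage Blob Data Contributor permission on the workspace storage account. For more information, see Storage blob authorization error when MDC is enabled.

If you created the associated endpoint with a system-assigned identity, Azure role-based access control (RBAC) permission is granted automatically, and no further permissions are needed. For more information, see Container registry authorization error.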

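Invalid template function specification

This error occurs when a template function is specified incorrectly. Either fix the policy or remove the policy assignment to unblock. The error message might include the policy assignment name and the policy definition to help you debug this error. See Azure Policy definition structure for tips to avoid template failures.

Unable to download user container image

The user container couldn't be found. Check the container logs to get more details.

Make sure the container image is available in the workspace container registry. For example, if the image is testacr.azurecr.io/azureml/azureml_92a029f831ce58d2ed011c3c42d35acb:latest, you can use the following command to check the repository:

az acr repository show-tags -n testacr --repository azureml/azureml_92a029f831ce58d2ed011c3c42d35acb --orderby time_desc --output table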
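If you have the full image reference from the error, you can split out the registry name and repository for that command mechanically. This sketch assumes a tag-based reference of the form <registry>.azurecr.io/<repository>:<tag>. It doesn't handle digest references that use @sha256. The function names are hypothetical.

```python
def split_acr_image(image: str):
    """Split '<registry>.azurecr.io/<repository>:<tag>' into (registry, repository, tag)."""
    login_server, _, rest = image.partition("/")
    registry = login_server.split(".")[0]
    repository, sep, tag = rest.rpartition(":")
    if not sep:  # no tag in the reference; Docker treats this as 'latest'
        repository, tag = rest, "latest"
    return registry, repository, tag

def show_tags_command(image: str) -> list:
    """Argument list for the az acr repository show-tags check shown above."""
    registry, repository, _ = split_acr_image(image)
    return ["az", "acr", "repository", "show-tags", "-n", registry,
            "--repository", repository, "--orderby", "time_desc", "--output", "table"]
```

Passing the list to subprocess.run(show_tags_command(image), check=True) runs the same check without shell quoting issues. This assumes the Azure CLI is installed and signed in.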

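Unable to download user model

The user model can't be found. Check the container logs to get more details. Make sure you registered the model to the same workspace as the deployment.

To show details for a model in a workspace, take the following action. You must specify either a version or a label to get the model information.

Run the following command:

az ml model show --name <model-name> --version <version>

Also check whether the blobs are present in the workspace storage account. For example, if the blob is https://foobar.blob.core.chinacloudapi.cn/210212154504-1517266419/WebUpload/210212154504-1517266419/GaussianNB.pkl, you can use the following command to check whether the blob exists:

az storage blob exists --account-name <storage-account-name> --container-name <container-name> --name WebUpload/210212154504-1517266419/GaussianNB.pkl --subscription <sub-name>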
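You can derive the account, container, and blob name for az storage blob exists from the blob URL. The following minimal sketch uses urllib.parse. It assumes the usual https://<account>.blob.<suffix>/<container>/<blob-path> layout and a blob path without percent-encoded characters.

```python
from urllib.parse import urlparse

def split_blob_url(url: str):
    """Return (account, container, blob_name) for an Azure Blob Storage URL."""
    parsed = urlparse(url)
    account = parsed.netloc.split(".")[0]            # 'foobar' in foobar.blob.core...
    container, _, blob_name = parsed.path.lstrip("/").partition("/")
    return account, container, blob_name
```

For the example URL above, this yields the account foobar, the container 210212154504-1517266419, and the blob name WebUpload/210212154504-1517266419/GaussianNB.pkl.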

If the blob is present, you can use the following command to get the logs from the storage initializer:

az ml online-deployment get-logs --endpoint-name <endpoint-name> --name <deployment-name> --container storage-initializer

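MLflow model format with private network is unsupported

You can't use the private network feature with an MLflow model format if you're using the legacy network isolation method for managed online endpoints. If you need to deploy an MLflow model with the no-code deployment approach, try using a workspace managed virtual network.

Resource requests greater than limits

Requests for resources must be less than or equal to limits. If you don't set limits, Azure Machine Learning sets default values when you attach your compute to a workspace. You can check the limits in the Azure portal or by using the az ml compute show command.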
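To catch this problem before you deploy, you can compare requests to limits yourself. This sketch assumes Kubernetes-style quantity strings, such as 500m for CPU or 2Gi for memory. It handles only plain numbers and the common decimal and binary suffixes, not exponent forms such as 1e3.

```python
from decimal import Decimal

# Kubernetes-style quantity suffixes: milli, decimal (k, M, G, T), binary (Ki..Ti).
_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9, "T": Decimal(10) ** 12,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30, "Ti": Decimal(2) ** 40,
}

def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as '500m' or '2Gi' to a plain number."""
    # Check two-character suffixes first so 'Mi' isn't mistaken for 'M'.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(q)

def requests_exceeding_limits(requests: dict, limits: dict) -> list:
    """Names of resources whose request is larger than its limit."""
    return [name for name, value in requests.items()
            if name in limits and parse_quantity(value) > parse_quantity(limits[name])]
```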

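Azureml-fe not ready

The front-end component that routes incoming inference requests to deployed services, azureml-fe, is installed during the k8s-extension installation and automatically scales as needed. This component should have at least one healthy replica on the cluster.

You get this error if the component isn't available when you trigger a Kubernetes online endpoint or deployment creation or update request. Check the pod status and logs to fix this issue. You can also try to update the k8s-extension installed on the cluster.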

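ERROR: ResourceNotReady

To run the score.py file you provide as part of the deployment, Azure creates a container that includes all the resources that score.py needs, and runs the scoring script on that container. In this scenario, the error means that the container crashes while it runs, so scoring can't happen. This error can happen under any of the following conditions:

- There's an error in score.py. Use get-logs to diagnose common problems, such as:

  - A package that score.py tries to import isn't included in the conda environment

  - A syntax error

  - A failure in the init() method

- If get-logs doesn't produce any logs, the container usually failed to start. To debug this issue, try deploying locally instead.

- Readiness or liveness probes aren't set up correctly.

- Container initialization takes too long, so the readiness or liveness probe fails beyond the failure threshold. In this case, adjust the probe settings to allow a longer time to initialize the container, or try a bigger VM SKU from the supported VM SKUs to accelerate initialization.

- There's an error in the container environment setup, such as a missing dependency.

- If you get the TypeError: register() takes 3 positional arguments but 4 were given error, check the dependency between flask v2 and azureml-inference-server-http. For more information, see Troubleshoot HTTP server issues.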
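When you tune probe settings, you can roughly estimate how long a slow-starting container is tolerated. The estimate is the initial delay plus failure_threshold consecutive probe periods. The sketch below uses that approximation, with parameter names modeled on the probe settings (initial_delay, period, failure_threshold). The exact timing also depends on the probe timeout and on how the platform schedules probes, so leave headroom.

```python
import math

def startup_window_seconds(initial_delay: float, period: float, failure_threshold: int) -> float:
    """Rough time before a probe gives up on a container that isn't ready yet."""
    return initial_delay + failure_threshold * period

def min_failure_threshold(expected_init_seconds: float, initial_delay: float, period: float) -> int:
    """Smallest failure_threshold whose rough window covers the expected init time."""
    remaining = max(0.0, expected_init_seconds - initial_delay)
    return max(1, math.ceil(remaining / period))

# Example: a model that takes about 10 minutes to load, probed every 10 seconds
# after a 10-second initial delay, needs failure_threshold of at least 59.
print(min_failure_threshold(600, initial_delay=10, period=10))  # 59
```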

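ERROR: ResourceNotFound

You might get this error when you use either managed online endpoints or Kubernetes online endpoints, for the following reasons:

Resource Manager can't find a required resource

This error occurs when Azure Resource Manager can't find a required resource. For example, you can receive this error if a storage account can't be found at the specified path. Double-check the path or name specifications for accuracy and spelling. For more information, see Resolve errors for resource not found.

Container registry authorization error

This error occurs when an image that belongs to a private or otherwise inaccessible container registry is supplied for deployment. Azure Machine Learning APIs can't accept private registry credentials.

To mitigate this error, either ensure that the container registry isn't private, or take the following steps:

- Grant the acrPull role on your private registry to the system identity of your online endpoint.

- In your environment definition, specify the address of your private image and the instruction not to modify or build the image.

If the mitigation succeeds, the image doesn't require building, and the final image address is the given image address. At deployment time, your online endpoint's system identity pulls the image from the private registry.

For more diagnostic information, see How to use the workspace diagnostic.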

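ERROR: WorkspaceManagedNetworkNotReady

This error occurs if you try to create an online deployment that enables a workspace managed virtual network, but the managed virtual network isn't provisioned yet. Provision the workspace managed virtual network before you create an online deployment.

To manually provision the workspace managed virtual network, follow the instructions in Manually provision a managed VNet. You can then start creating online deployments. For more information, see Network isolation with managed online endpoint and Secure your managed online endpoints with network isolation.

ERROR: OperationCanceled

You might get this error when you use either managed online endpoints or Kubernetes online endpoints, for the following reasons:

Operation was canceled by another operation that has a higher priority

Azure operations have a certain priority level and are executed from highest to lowest. This error happens when another operation that has a higher priority overrides your operation. Retrying the operation might allow it to complete without cancellation.

Operation was canceled waiting for lock confirmation

Azure operations have a brief waiting period after they're submitted, during which they acquire a lock to ensure that they don't run into race conditions. This error happens when the operation you submitted is the same as another operation that's currently waiting for confirmation that it received the lock before it proceeds.

You might have submitted a similar request too soon after the initial request. Retrying the operation after waiting a short while might allow it to complete without cancellation.

ERROR: SecretsInjectionError

Secret retrieval and injection during online deployment creation uses the identity associated with the online endpoint to retrieve secrets from workspace connections or key vaults. This error happens for one of the following reasons:

- The endpoint identity doesn't have Azure RBAC permission to read the secrets from the workspace connections or key vaults, even though the deployment definition specifies the secrets as references mapped to environment variables. Role assignment changes can take some time to take effect.

- The format of the secret references is invalid, or the specified secrets don't exist in the workspace connections or key vaults.

For more information, see Secret injection in online endpoints (preview) and Access secrets from online deployment using secret injection (preview).

ERROR: InternalServerError

This error means there's a problem with the Azure Machine Learning service that needs to be fixed. Submit a customer support ticket with all the information needed to address the issue.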

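Common errors specific to Kubernetes deployments

Identity and authentication errors:

- ACRSecretError

- TokenRefreshFailed

- GetAADTokenFailed

- ACRAuthenticationChallengeFailed

- ACRTokenExchangeFailed

- KubernetesUnaccessible

Crashloopbackoff errors:

- ImagePullLoopBackOff

- DeploymentCrashLoopBackOff

- KubernetesCrashLoopBackOff

Scoring script errors:

- UserScriptInitFailed

- UserScriptImportError

- UserScriptFunctionNotFound

Other errors:

- NamespaceNotFound

- EndpointAlreadyExists

- ScoringFeUnhealthy

- ValidateScoringFailed

- InvalidDeploymentSpec

- PodUnschedulable

- PodOutOfMemory

- InferencingClientCallFailed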

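ERROR: ACRSecretError

You might get this error when you create or update Kubernetes online deployments for one of the following reasons:

- Role assignment isn't complete. Wait a few seconds and try again.

- The Azure Arc-enabled Kubernetes cluster or the AKS Azure Machine Learning extension isn't properly installed or configured. Check the configuration and status of the Azure Arc-enabled Kubernetes cluster or the Azure Machine Learning extension.

- The Kubernetes cluster has an improper network configuration. Check the proxy, network policy, or certificate.

- Your private AKS cluster doesn't have the proper endpoints. Make sure to set up private endpoints for the container registry, storage account, and workspace in the AKS virtual network.

- Your Azure Machine Learning extension version is v1.1.25 or lower. Make sure your extension version is greater than v1.1.25.

ERROR: TokenRefreshFailed

This error occurs because the Kubernetes cluster identity isn't set properly, so the extension can't get the principal credential from Azure. Reinstall the Azure Machine Learning extension and try again.

ERROR: GetAADTokenFailed

This error occurs because the Kubernetes cluster's request for a Microsoft Entra ID token failed or timed out. Check your network access and then try again.

- Follow the instructions in Use Kubernetes compute to check the outbound proxy, and make sure the cluster can connect to the workspace. You can find the workspace endpoint URL in the online endpoint custom resource definition (CRD) in the cluster.

- Check whether the workspace allows public access. Regardless of whether the AKS cluster itself is public or private, if a private workspace disables public network access, the Kubernetes cluster can communicate with that workspace only through a private link. For more information, see What is a secure AKS inferencing environment.

ERROR: ACRAuthenticationChallengeFailed

This error occurs because the Kubernetes cluster can't reach the workspace's container registry service to do an authentication challenge. Check your network, especially container registry public network access, and then try again. You can follow the troubleshooting steps in GetAADTokenFailed to check the network.

ERROR: KubernetesUnaccessible

You might get the following error during Kubernetes model deployments:

{"code":"BadRequest","statusCode":400,"message":"The request is invalid.","details":[{"code":"KubernetesUnaccessible","message":"Kubernetes error: AuthenticationException. Reason: InvalidCertificate"}],...}

To mitigate this error, you can rotate the AKS certificate for the cluster. The new certificate should be updated after 5 hours, so you can wait for 5 hours and then redeploy. For more information, see Certificate rotation in Azure Kubernetes Service (AKS).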

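ERROR: ImagePullLoopBackOff

You might get this error when you create or update Kubernetes online deployments because the images can't be downloaded from the container registry, which causes the image pull to fail. Check the cluster network policy and the workspace container registry to see whether the cluster can pull images from the container registry.

ERROR: DeploymentCrashLoopBackOff

You might get this error when you create or update Kubernetes online deployments because the user container crashed during initialization. There are two possible reasons for this error:

- The user script score.py has a syntax error or import error that raises exceptions during initialization.

- The deployment pod needs more memory than its limit.

To mitigate this error, first check the deployment logs for any exceptions in user scripts. If the error persists, try to extend the resources or instance type memory limit.

ERROR: KubernetesCrashLoopBackOff

You might get this error when you create or update Kubernetes online endpoints or deployments for one of the following reasons:

- One or more pods are stuck in CrashLoopBackoff status. Check whether the deployment log exists and whether it contains error messages.

- There's an error in score.py, and the container crashed when initializing your scoring code. Follow the instructions under ERROR: ResourceNotReady.

- Your scoring process needs more memory than your deployment configuration limit. Try updating the deployment with a larger memory limit.

ERROR: NamespaceNotFound

You might get this error when you create or update Kubernetes online endpoints because the namespace your Kubernetes compute uses is unavailable in your cluster. Check the Kubernetes compute in your workspace portal, and check the namespace in your Kubernetes cluster. If the namespace isn't available, you can detach the legacy compute and reattach it to create a new one, specifying a namespace that already exists in your cluster.

ERROR: UserScriptInitFailed

You might get this error when you create or update Kubernetes online deployments because the init function in your uploaded score.py file raised an exception. Check the deployment logs to see the exception message in detail, and fix the exception.

ERROR: UserScriptImportError

You might get this error when you create or update Kubernetes online deployments because your uploaded score.py file imports unavailable packages. Check the deployment logs to see the exception message in detail, and fix the exception.

ERROR: UserScriptFunctionNotFound

You might get this error when you create or update Kubernetes online deployments because your uploaded score.py file doesn't have a function named init() or run(). Check your code and add the function.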
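Before you redeploy, you can check a scoring script locally for both entry points. This sketch imports the file with importlib, which also surfaces syntax and import errors like those behind UserScriptImportError. It then looks for callable init and run attributes. Importing executes the script's module-level code, so run it only on trusted code, in an environment that has the script's dependencies.

```python
import importlib.util

def missing_entry_points(script_path: str) -> list:
    """Import a scoring script and return which of init/run it lacks."""
    spec = importlib.util.spec_from_file_location("score_under_test", script_path)
    module = importlib.util.module_from_spec(spec)
    # Executes the script; syntax errors and failed imports raise here.
    spec.loader.exec_module(module)
    return [name for name in ("init", "run") if not callable(getattr(module, name, None))]
```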

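ERROR: EndpointNotFound

You might get this error when you create or update Kubernetes online deployments because the system can't find the endpoint resource for the deployment in the cluster. Create the deployment in an existing endpoint, or create the endpoint in your cluster first.

ERROR: EndpointAlreadyExists

You might get this error when you create a Kubernetes online endpoint because the endpoint already exists in your cluster. The endpoint name must be unique per workspace and per cluster, so create the endpoint with another name.

ERROR: ScoringFeUnhealthy

You might get this error when you create or update a Kubernetes online endpoint or deployment because the azureml-fe system service that runs in the cluster isn't found or is unhealthy. To mitigate this issue, reinstall or update the Azure Machine Learning extension in your cluster.

ERROR: ValidateScoringFailed

You might get this error when you create or update Kubernetes online deployments because the scoring request URL validation failed while the model deployment was processed. Check the endpoint URL and then try to redeploy.

ERROR: InvalidDeploymentSpec

You might get this error when you create or update Kubernetes online deployments because the deployment spec is invalid. Check the error message to make sure the instance count is valid. If you enabled autoscaling, make sure the minimum instance count and maximum instance count are both valid.

ERROR: PodUnschedulable

You might get this error when you create or update Kubernetes online endpoints or deployments for one of the following reasons:

- The system can't schedule the pod to nodes because of insufficient resources in your cluster.

- No node matches the node affinity or selector.

To mitigate this error, take the following steps:

- Check the node selector definition of the instance_type you used, and the node label configuration of your cluster nodes.

- Check the instance_type and the node SKU size for the AKS cluster, or the node resources for the Azure Arc-enabled Kubernetes cluster.

- If the cluster is under-resourced, reduce the instance type resource requirement, or use another instance type that requires fewer resources.

- If the cluster has no more resources to meet the requirements of the deployment, delete some deployments to release resources.

ERROR: PodOutOfMemory

You might get this error when you create or update an online deployment because the memory limit you set for the deployment is insufficient. To mitigate this error, set the memory limit to a larger value or use a bigger instance type.

ERROR: InferencingClientCallFailed

You might get this error when you create or update Kubernetes online endpoints or deployments because the k8s-extension of the Kubernetes cluster isn't connectable. In this case, you can detach and then reattach your compute.

To troubleshoot errors by reattaching, make sure to reattach with exactly the same configuration as the detached compute, such as the same compute name and namespace, or you might run into other errors. If it still doesn't work, ask an administrator who can access the cluster to run kubectl get po -n azureml to check whether the relay server pods are running.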

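Model consumption issues

Common model consumption errors that result from the endpoint invoke operation status include bandwidth limit issues, CORS policy, and various HTTP status codes.

Bandwidth limit issues

Managed online endpoints have bandwidth limits for each endpoint. You can find the limit configuration in limits for online endpoints. If your bandwidth usage exceeds the limit, your request is delayed.

To monitor the bandwidth delay, use the Network bytes metric to understand the current bandwidth usage. For more information, see Monitor managed online endpoints.

Two response trailers are returned if the bandwidth limit is enforced:

- ms-azureml-bandwidth-request-delay-ms is the delay time, in milliseconds, for the request stream transfer.

- ms-azureml-bandwidth-response-delay-ms is the delay time, in milliseconds, for the response stream transfer.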
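If your HTTP client exposes response trailers, you can read the delays programmatically. This sketch assumes the trailers are already available as a name-to-value mapping and that the values are numeric. Many Python HTTP clients don't surface trailers at all.

```python
BANDWIDTH_TRAILERS = (
    "ms-azureml-bandwidth-request-delay-ms",
    "ms-azureml-bandwidth-response-delay-ms",
)

def bandwidth_delays(trailers: dict) -> dict:
    """Return {trailer_name: delay_in_ms} for the bandwidth trailers that are present."""
    # HTTP field names are case-insensitive, so compare in lowercase.
    lowered = {name.lower(): value for name, value in trailers.items()}
    return {name: float(lowered[name]) for name in BANDWIDTH_TRAILERS if name in lowered}
```

An empty result means no bandwidth delay was reported for that response.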

Blocked by CORS policy

V2 online endpoints don't natively support cross-origin resource sharing (CORS). If your web application tries to invoke the endpoint without properly handling CORS preflight requests, you see the following error message:

Access to fetch at 'https://{your-endpoint-name}.{your-region}.inference.studio.ml.azure.cn/score' from origin http://{your-url} has been blocked by CORS policy: Response to preflight request doesn't pass access control check. No 'Access-control-allow-origin' header is present on the request resource. If an opaque response serves your needs, set the request's mode to 'no-cors' to fetch the resource with the CORS disabled.

You can use Azure Functions, Azure Application Gateway, or another service as an intermediate layer to handle CORS preflight requests.
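An intermediary can answer the browser's OPTIONS preflight by returning CORS headers only for an allowlisted origin. It then forwards the real POST to the scoring URI server-side, with the endpoint key. The sketch below covers only the header decision. The allowlist, the Max-Age value, and the function name are illustrative assumptions, not settings from Azure Functions or Application Gateway.

```python
def preflight_response_headers(origin, allowed_origins, request_headers=""):
    """Headers an intermediary could return for an OPTIONS preflight (hypothetical helper).

    request_headers is the value of the browser's Access-Control-Request-Headers.
    Returns None when the origin isn't allowed, so the caller replies without
    CORS headers and the browser blocks the cross-origin call.
    """
    if origin not in allowed_origins:
        return None
    return {
        "Access-Control-Allow-Origin": origin,
        "Access-Control-Allow-Methods": "POST, OPTIONS",
        "Access-Control-Allow-Headers": request_headers or "Content-Type, Authorization",
        "Access-Control-Max-Age": "600",
        "Vary": "Origin",
    }
```

Echoing a single allowed origin, rather than returning *, keeps the endpoint key usable only from pages you trust.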

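HTTP status codes

When you access online endpoints with REST requests, the returned status codes adhere to the standards for HTTP status codes. The following sections detail how endpoint invocation and prediction errors map to HTTP status codes.

Common error codes for managed online endpoints

The following table contains common error codes when REST requests consume managed online endpoints:

| Status code | Reason | Description |
|---|---|---|
| 200 | OK | Your model executed successfully, within your latency bounds. |
| 401 | Unauthorized | You don't have permission to do the requested action, such as score, or your token is expired or in the wrong format. For more information, see Authentication for managed online endpoints and Authenticate clients for online endpoints. |
| 404 | Not found | The endpoint doesn't have any valid deployment with positive weight. |
| 408 | Request timeout | The model execution took longer than the timeout supplied in request_timeout_ms under request_settings of your model deployment configuration. |
| 424 | Model error | If your model container returns a non-200 response, Azure returns a 424. Check the Model Status Code dimension under the Requests Per Minute metric for your endpoint. Or check the response headers ms-azureml-model-error-statuscode and ms-azureml-model-error-reason for more information. If a 424 comes with a liveness or readiness probe failure, consider adjusting ProbeSettings to allow more time to probe container liveness or readiness. |
| 429 | Too many pending requests | Your model is currently getting more requests than it can handle. To guarantee smooth operation, Azure Machine Learning permits a maximum of 2 * max_concurrent_requests_per_instance * instance_count requests to process in parallel at any given time. Requests that exceed this maximum are rejected. You can review your model deployment configuration under the request_settings and scale_settings sections to verify and adjust these settings. Also make sure that the environment variable WORKER_COUNT is correctly passed, as outlined in RequestSettings. If you get this error when you use autoscaling, your model is getting requests faster than the system can scale up. Consider resending requests with an exponential backoff to give the system time to adjust. You can also increase the number of instances by using code to calculate instance count. Combine these steps with setting autoscaling to help ensure that your model is ready to handle the influx of requests. |
| 429 | Rate-limited | The number of requests per second reached the managed online endpoint limits. |
| 500 | Internal server error | Azure Machine Learning-provisioned infrastructure is failing. |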
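For the 429 rows above, a client-side retry loop with exponential backoff and jitter might look like the following sketch. The send callable is a hypothetical stand-in for your HTTP call to the scoring URI. The retryable status set, which also includes 503, the delays, and the attempt count are assumptions to tune for your workload.

```python
import random
import time

# Status codes treated as "slow down and retry" in this sketch.
RETRYABLE_STATUS = {429, 503}

def call_with_backoff(send, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call send() and retry with exponential backoff and full jitter.

    send is any zero-argument callable returning (status_code, body).
    The last response is returned once it's non-retryable or attempts run out.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, body = send()
        if status not in RETRYABLE_STATUS or attempt == max_attempts - 1:
            return status, body
        # Wait a random time between 0 and base_delay * 2**attempt, capped at max_delay.
        sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))
```

The randomized wait spreads retries from many clients over time, so they don't all hit the endpoint again at the same moment.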

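Common error codes for Kubernetes online endpoints

The following table contains common error codes when REST requests consume Kubernetes online endpoints:

| Status code | Error | Description |
|---|---|---|
| 409 | Conflict error | When an operation is already in progress, any new operation on that same online endpoint responds with a 409 conflict error. For example, if a create or update online endpoint operation is in progress, triggering a new delete operation throws an error. |
| 502 | Exception or crash in the run() method of the score.py file | When there's an error in score.py, for example an imported package doesn't exist in the conda environment, a syntax error, or a failure in the init() method, see ERROR: ResourceNotReady to debug the file. |
| 503 | Large spikes in requests per second | The autoscaler is designed to handle gradual changes in load. If you receive large spikes in requests per second, clients might receive HTTP status code 503. Even though the autoscaler reacts quickly, AKS takes a significant amount of time to create more containers. See How to prevent 503 status code errors. |
| 504 | Request timed out | A 504 status code indicates that the request timed out. The default timeout setting is 5 seconds. You can increase the timeout, or try to speed up the endpoint by modifying score.py to remove unnecessary calls. If these actions don't correct the problem, the code might be in a nonresponsive state or an infinite loop. Follow ERROR: ResourceNotReady to debug the score.py file. |
| 500 | Internal server error | Azure Machine Learning-provisioned infrastructure is failing. |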

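How to prevent 503 status code errors

Kubernetes online deployments support autoscaling, which allows replicas to be added to support extra load. For more information, see Azure Machine Learning inference router. Decisions to scale up or down are based on the utilization of the current container replicas.

Two actions can help prevent 503 status code errors: changing the utilization level at which new replicas are created, or changing the minimum number of replicas. You can use these approaches individually or in combination.

- Change the utilization target at which autoscaling creates new replicas by setting autoscale_target_utilization to a lower value. This change doesn't cause replicas to be created faster, but it causes them to be created at a lower utilization threshold. For example, instead of waiting until the service is 70% utilized, changing the value to 30% causes replicas to be created when 30% utilization occurs.

- Change the minimum number of replicas to provide a larger pool that can handle the incoming spikes.

How to calculate instance count

To increase the number of instances, you can calculate the required replicas as follows: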

```python
from math import ceil

# target requests per second
target_rps = 20

# time to process the request (in seconds, choose appropriate percentile)
request_process_time = 10

# Maximum concurrent requests per instance
max_concurrent_requests_per_instance = 1

# The target CPU usage of the model container. 70% in this example
target_utilization = .7

concurrent_requests = target_rps * request_process_time / target_utilization

# Number of instances required
instance_count = ceil(concurrent_requests / max_concurrent_requests_per_instance)
```
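Wrapping the same formula in a function shows how the target utilization affects the result. With the inputs above, lowering target_utilization from 0.7 to 0.3 raises the required instances from 286 to 667. This restates the calculation above; it isn't an official sizing tool.

```python
from math import ceil

def required_instances(target_rps, request_process_time,
                       max_concurrent_requests_per_instance, target_utilization):
    """Same calculation as above, parameterized so targets can be compared."""
    concurrent_requests = target_rps * request_process_time / target_utilization
    return ceil(concurrent_requests / max_concurrent_requests_per_instance)

print(required_instances(20, 10, 1, 0.7))  # 286
print(required_instances(20, 10, 1, 0.3))  # 667
```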

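Note

If you receive request spikes larger than the new minimum replicas can handle, you might receive 503 codes again. For example, as traffic to your endpoint increases, you might need to increase the minimum number of replicas.

If the Kubernetes online endpoint is already using the current maximum number of replicas and you still get 503 status codes, increase the autoscale_max_replicas value to raise the maximum number of replicas.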

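Network isolation issues

This section provides information about common network isolation issues.

Online endpoint creation fails with a message about v1 legacy mode

Managed online endpoints are a feature of the Azure Machine Learning v2 API platform. If your Azure Machine Learning workspace is configured for v1 legacy mode, managed online endpoints don't work. Specifically, if the v1_legacy_mode workspace setting is set to true, v1 legacy mode is turned on, and there's no support for v2 APIs.

To see how to turn off v1 legacy mode, see Network isolation change with our new API platform on Azure Resource Manager.

Important

Check with your network security team before you set v1_legacy_mode to false, because v1 legacy mode might have been turned on for a reason.

Online endpoint creation with key-based authentication fails

Use the following command to list the network rules of the Azure key vault for your workspace. Replace <key-vault-name> with the name of your key vault.

az keyvault network-rule list -n <key-vault-name>

The response for this command is similar to the following JSON code:

{
"bypass": "AzureServices",
"defaultAction": "Deny",
"ipRules": [],
"virtualNetworkRules": []
}

If the value of bypass isn't AzureServices, use the guidance in Configure Azure Key Vault network settings to set it to AzureServices.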
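To run this check from a script, you can inspect the command's JSON output directly. The fetch_network_rules helper assumes the Azure CLI is installed and signed in. keyvault_bypass_ok works on any captured output.

```python
import json
import subprocess

def fetch_network_rules(key_vault_name: str) -> str:
    """Run the az keyvault network-rule list command shown above and return its JSON output."""
    # Requires the Azure CLI on PATH and a signed-in session.
    result = subprocess.run(
        ["az", "keyvault", "network-rule", "list", "-n", key_vault_name],
        capture_output=True, text=True, check=True,
    )
    return result.stdout

def keyvault_bypass_ok(az_output: str) -> bool:
    """True if the bypass field of the network rules is AzureServices."""
    return json.loads(az_output).get("bypass") == "AzureServices"
```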

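Online deployments fail with an image download error

Note

This issue applies when you use the legacy network isolation method for managed online endpoints. In this method, Azure Machine Learning creates a managed virtual network for each deployment under an endpoint.

- Check whether the egress-public-network-access flag has a value of disabled for the deployment. If this flag is enabled and the visibility of the container registry is private, this failure is expected.

- Use the following command to check the status of the private endpoint connection. Replace <registry-name> with the name of the Azure container registry for your workspace:

az acr private-endpoint-connection list -r <registry-name> --query "[?privateLinkServiceConnectionState.description=='Egress for Microsoft.MachineLearningServices/workspaces/onlineEndpoints'].{ID:id, status:privateLinkServiceConnectionState.status}"

- In the response, verify that the status field is set to Approved. If the value isn't Approved, use the following command to approve the connection. Replace <private-endpoint-connection-ID> with the ID that the preceding command returns.

az network private-endpoint-connection approve --id <private-endpoint-connection-ID> --description "Approved"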
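To check many connections at once, you can filter the JSON output of the az acr private-endpoint-connection list command shown above. This sketch assumes the output uses the ID and status keys that the command's --query projection produces. list_connections assumes the Azure CLI is installed and signed in. Passing the query as a list argument avoids shell quoting issues.

```python
import json
import subprocess

QUERY = ("[?privateLinkServiceConnectionState.description=="
         "'Egress for Microsoft.MachineLearningServices/workspaces/onlineEndpoints']"
         ".{ID:id, status:privateLinkServiceConnectionState.status}")

def list_connections(registry_name: str) -> str:
    """Run the list command shown above and return its JSON output."""
    # Requires the Azure CLI on PATH and a signed-in session.
    result = subprocess.run(
        ["az", "acr", "private-endpoint-connection", "list", "-r", registry_name, "--query", QUERY],
        capture_output=True, text=True, check=True,
    )
    return result.stdout

def unapproved_connection_ids(az_output: str) -> list:
    """IDs of connections whose status isn't Approved; approve these with the command above."""
    return [c["ID"] for c in json.loads(az_output) if c.get("status") != "Approved"]
```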

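Scoring endpoint can't be resolved

- Verify that the client that issues the scoring request is in a virtual network that can access the Azure Machine Learning workspace.

- Use the nslookup command on the endpoint hostname to retrieve the IP address information:

nslookup <endpoint-name>.<endpoint-region>.inference.ml.azure.cn

For example, the command might be similar to the following one:

nslookup endpointname.westcentralus.inference.ml.azure.com

The response contains an address, which should be in the range provided by the virtual network.

Note

- For Kubernetes online endpoints, the endpoint hostname should be the CName (domain name) that's specified in your Kubernetes cluster.

- If the endpoint uses HTTP, the IP address is contained in the endpoint URI, which you can get from the studio UI.

- For more ways to get the IP address of the endpoint, see Update your DNS with an FQDN.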
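You can also check the resolved address against the virtual network's address space from a script. This minimal sketch uses socket.gethostbyname, which resolves IPv4 only, and the ipaddress module. The address prefixes are placeholders for your virtual network's actual ranges.

```python
import ipaddress
import socket

def resolves_into_vnet(hostname, vnet_prefixes, resolve=socket.gethostbyname):
    """Resolve hostname and report whether its IPv4 address falls inside any given prefix."""
    address = ipaddress.ip_address(resolve(hostname))
    return any(address in ipaddress.ip_network(prefix) for prefix in vnet_prefixes)
```

For example, resolves_into_vnet("<endpoint-name>.<endpoint-region>.inference.ml.azure.cn", ["10.0.0.0/16"]) returns False when the name resolves to a public address, which points to the DNS issues described next.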

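If the nslookup command fails to resolve the hostname, take the actions in one of the following sections.

Managed online endpoints

- Use the following command to check whether an A record exists in the private Domain Name System (DNS) zone for the virtual network:

az network private-dns record-set list -z privatelink.api.azureml.ms -o tsv --query [].name

The results should contain an entry similar to *.<GUID>.inference.<region>.

- If no inference value is returned, delete the private endpoint for the workspace and then re-create it. For more information, see How to configure a private endpoint.

- If the workspace with a private endpoint uses a custom DNS server, run the following command to verify that resolution from the custom DNS server works correctly:

dig <endpoint-name>.<endpoint-region>.inference.ml.azure.com

Kubernetes online endpoints

- Check the DNS configuration in the Kubernetes cluster.

- Check whether the Azure Machine Learning inference router, azureml-fe, works as expected. To do this check, take the following steps:

  - Run the following command in the azureml-fe pod:

kubectl exec -it deploy/azureml-fe -- /bin/bash

  - Run one of the following commands. For HTTPS, use:

curl -vi -k https://localhost:<port>/api/v1/endpoint/<endpoint-name>/swagger.json "Swagger not found"

  For HTTP, use the following command:

curl http://localhost:<port>/api/v1/endpoint/<endpoint-name>/swagger.json "Swagger not found"

  If the curl HTTPS command fails or times out but the HTTP command works, check whether the certificate is valid.

- If the preceding process fails to resolve to the A record, use the following command to verify whether resolution works from the Azure DNS virtual public IP address, 168.63.129.16:

dig @168.63.129.16 <endpoint-name>.<endpoint-region>.inference.ml.azure.com

If the preceding command succeeds, troubleshoot the conditional forwarder for Azure Private Link on your custom DNS.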

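Online deployments can't be scored

- Run the following command to see the status of a deployment that can't be scored:

az ml online-deployment show -e <endpoint-name> -n <deployment-name> --query '{name:name,state:provisioning_state}'

A value of Succeeded for the state field indicates a successful deployment.

- For a successful deployment, use the following command to check whether traffic is assigned to the deployment:

az ml online-endpoint show -n <endpoint-name> --query traffic

The response from this command should list the percentage of traffic assigned to each deployment.

Tip

If you use the azureml-model-deployment header in your request to target this deployment, you don't need to do this step.

- If the traffic assignments or the deployment header are set correctly, use the following command to get the logs for the endpoint:

az ml online-deployment get-logs -e <endpoint-name> -n <deployment-name>

Review the logs to see whether there's a problem running the scoring code when you submit a request to the deployment.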

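Inference server issues

This section provides basic troubleshooting tips for the Azure Machine Learning inference HTTP server.

Check installed packages

Follow these steps to address issues with installed packages:

- Gather information about the installed packages and versions for your Python environment.

- In your environment file, check the version of the azureml-inference-server-http Python package that's specified. In the Azure Machine Learning inference HTTP server startup logs, check the version of the inference server that's displayed. Confirm that the two versions match.

  In some cases, the pip dependency resolver installs unexpected package versions. You might need to run pip to correct the installed packages and versions.

- If you specify Flask or its dependencies in your environment, remove these items.

  - Dependent packages include flask, jinja2, itsdangerous, werkzeug, markupsafe, and click.

  - The flask package is listed as a dependency of the inference server package. The best approach is to let the inference server install the flask package.

  - When the inference server is configured to support new versions of Flask, the inference server automatically receives the package updates as they become available.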

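Check the inference server version

The azureml-inference-server-http server package is published to PyPI.

The PyPI page lists the changelog and all versions of the package.

If you use an early package version, update your configuration to the latest version. The following table summarizes stable versions, common issues, and recommended adjustments:

| Package version | Description | Issue | Resolution |
|---|---|---|---|
| 0.4.x | Bundled in training images dated 20220601 or earlier and in azureml-defaults package versions 0.1.34 through 1.43. The latest stable version is 0.4.13. | For server versions earlier than 0.4.11, you might encounter Flask dependency issues, such as can't import name Markup from jinja2. | Upgrade to version 0.4.13 or 1.4.x (the latest version), if possible. |
| 0.6.x | Preinstalled in inferencing images dated 20220516 and earlier. The latest stable version is 0.6.1. | N/A | N/A |
| 0.7.x | Supports Flask 2. The latest stable version is 0.7.7. | N/A | N/A |
| 0.8.x | Uses an updated log format. Ends support for Python 3.6. | N/A | N/A |
| 1.0.x | Ends support for Python 3.7. | N/A | N/A |
| 1.1.x | Migrates to pydantic 2.0. | N/A | N/A |
| 1.2.x | Adds support for Python 3.11. Updates gunicorn to version 22.0.0. Updates werkzeug to version 3.0.3 and later. | N/A | N/A |
| 1.3.x | Adds support for Python 3.12. Upgrades certifi to version 2024.7.4. Upgrades flask-cors to version 5.0.0. Upgrades the gunicorn and pydantic packages. | N/A | N/A |
| 1.4.x | Upgrades waitress to version 3.0.1. Ends support for Python 3.8. Removes the compatibility layer that prevents the Flask 2.0 upgrade from breaking request object code. | If you depend on the compatibility layer, your request object code might not work. | Migrate your score script to Flask 2. |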
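To compare an installed server version against this table, you can use a sketch like the following. It reads the installed version with importlib.metadata. It applies three thresholds from this article: 0.4.11 for Flask dependency issues, 0.7.0 for Flask 2 support, and 1.4.0 for removal of the compatibility layer. The version parsing is simplified and ignores pre-release ordering.

```python
import re
from importlib import metadata

def installed_version(package: str = "azureml-inference-server-http"):
    """Installed version string of package, or None if it isn't installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None

def version_tuple(version: str) -> tuple:
    """Leading numeric release components, e.g. '1.4.0rc1' -> (1, 4, 0)."""
    parts = []
    for piece in version.split("."):
        match = re.match(r"\d+", piece)
        if not match:
            break
        parts.append(int(match.group()))
        if match.end() != len(piece):  # stop at suffixes such as 'rc1'
            break
    return tuple(parts)

def upgrade_notes(version: str) -> list:
    """Notes derived from the version thresholds in this article."""
    v = version_tuple(version)
    notes = []
    if v < (0, 4, 11):
        notes.append("Possible Flask dependency errors; upgrade to 0.4.13 or 1.4.x.")
    if v < (0, 7, 0):
        notes.append("No Flask 2 support; don't install Flask 2 alongside this version.")
    if v >= (1, 4, 0):
        notes.append("Flask 2 compatibility layer removed; migrate the score script to Flask 2 if needed.")
    return notes
```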

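Check package dependencies

The most relevant dependent packages for the azureml-inference-server-http server package include:

- flask
- opencensus-ext-azure
- inference-schema

If you specify the azureml-defaults package in your Python environment, the azureml-inference-server-http package is a dependent package and is installed automatically.

Tip

If you use the Azure Machine Learning SDK for Python v1 and don't explicitly specify the azureml-defaults package in your Python environment, the SDK might add the package automatically. However, the package version is locked relative to the SDK version. For example, if the SDK version is 1.38.0, the azureml-defaults==1.38.0 entry is added to the environment's pip requirements.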

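TypeError during inference server startup

You might encounter the following TypeError during inference server startup:

TypeError: register() takes 3 positional arguments but 4 were given
  File "/var/azureml-server/aml_blueprint.py", line 251, in register
    super(AMLBlueprint, self).register(app, options, first_registration)
TypeError: register() takes 3 positional arguments but 4 were given

This error occurs when you have Flask 2 installed in your Python environment but your azureml-inference-server-http package version doesn't support Flask 2. Support for Flask 2 is available in azureml-inference-server-http package version 0.7.0 and later, and in azureml-defaults package version 1.44 and later.

- If you don't use the Flask 2 package in an Azure Machine Learning Docker image, use the latest version of the azureml-inference-server-http or azureml-defaults package.

- If you use the Flask 2 package in an Azure Machine Learning Docker image, confirm that the image build version is July 2022 or later.

  You can find the image version in the container logs. For example, see the following log statements:

  2022-08-22T17:05:02,147738763+00:00 | gunicorn/run | AzureML Container Runtime Information
  2022-08-22T17:05:02,161963207+00:00 | gunicorn/run | ###############################################
  2022-08-22T17:05:02,168970479+00:00 | gunicorn/run |
  2022-08-22T17:05:02,174364834+00:00 | gunicorn/run |
  2022-08-22T17:05:02,187280665+00:00 | gunicorn/run | AzureML image information: openmpi4.1.0-ubuntu20.04, Materialization Build:20220708.v2
  2022-08-22T17:05:02,188930082+00:00 | gunicorn/run |
  2022-08-22T17:05:02,190557998+00:00 | gunicorn/run |

  The image build date appears after the Materialization Build notation. In the preceding example, the image version is 20220708, or July 8, 2022. The image in this example is compatible with Flask 2.

  If you don't see a similar message in your container log, your image is out of date and should be updated. If you use a Compute Unified Device Architecture (CUDA) image and you can't find a newer image, check the AzureML-Containers repo to see whether your image is deprecated. You can find designated replacements for deprecated images there.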
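Checking many container logs by hand is tedious, so you can extract the build date with a regular expression. This sketch assumes the Materialization Build:YYYYMMDD format shown in the example log. It interprets "July 2022 or later" as on or after July 1, 2022.

```python
import re
from datetime import date

_BUILD = re.compile(r"Materialization Build:(\d{4})(\d{2})(\d{2})")

def image_build_date(log_text: str):
    """Return the image build date found in container log text, or None if absent."""
    match = _BUILD.search(log_text)
    return date(*map(int, match.groups())) if match else None

def supports_flask2(log_text: str, cutoff: date = date(2022, 7, 1)) -> bool:
    """True if the logged image build date is on or after the cutoff."""
    built = image_build_date(log_text)
    return built is not None and built >= cutoff
```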

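If you use the inference server with an online endpoint, you can also find the logs in Azure Machine Learning studio. On the page for your endpoint, select the Logs tab.

If you deploy with SDK v1 and don't explicitly specify an image in your deployment configuration, the inference server applies the openmpi4.1.0-ubuntu20.04 package with a version that matches your local SDK toolset. However, the installed version might not be the latest available version of the image.

For SDK version 1.43, the inference server installs the openmpi4.1.0-ubuntu20.04:20220616 package version by default, but this package version isn't compatible with SDK 1.43. Make sure you use the latest SDK for your deployment.

If you can't update the image, you can temporarily avoid the issue by pinning the azureml-defaults==1.43 or azureml-inference-server-http~=0.4.13 entries in your environment file. These entries direct the inference server to install the older version with flask 1.0.x.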

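ImportError or ModuleNotFoundError during inference server startup

During inference server startup, you might encounter an ImportError or ModuleNotFoundError on specific modules, such as opencensus, jinja2, markupsafe, or click. The following example shows the error message:

ImportError: cannot import name 'Markup' from 'jinja2'

These import and module errors occur when you use inference server version 0.4.10 or earlier, which doesn't pin the Flask dependency to a compatible version. To prevent the problem, install a later version of the inference server.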

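Other common issues

Other common online endpoint issues are related to conda installation and autoscaling.

Conda installation issues

Issues with MLflow deployment generally stem from problems with the installation of the user environment specified in the conda.yml file.

To debug conda installation problems, try the following steps:

- Check the conda installation logs. If the container crashed or takes too long to start up, the conda environment update probably failed to resolve correctly.

- Install the mlflow conda file locally by using the command conda env create -n userenv -f <CONDA_ENV_FILENAME>.

- If there are errors locally, try resolving the conda environment and creating a functional one before you redeploy.

- If the container crashes even though the environment resolves locally, the SKU size used for deployment might be too small.

  - Conda package installation occurs at runtime, so if the SKU size is too small to accommodate all the packages in the conda.yml environment file, the container might crash.

  - A Standard_F4s_v2 VM is a good starting SKU size, but you might need a larger one, depending on the dependencies the conda file specifies.

  - For Kubernetes online endpoints, the Kubernetes cluster must have at least four vCPU cores and 8 GB of memory.

Autoscaling issues

If you have trouble with autoscaling, see Troubleshoot Azure Monitor autoscale.

For Kubernetes online endpoints, the Azure Machine Learning inference router is a front-end component that handles autoscaling for all model deployments on the Kubernetes cluster. For more information, see Autoscale Kubernetes inference routing.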