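Occasionally, an Azure Stream Analytics job unexpectedly stops processing. It is important to be able to troubleshoot this kind of event. Failures can be caused by an unexpected query result, by connectivity problems with devices, or by an unexpected service outage. The resource logs in Stream Analytics help you identify the cause when an issue occurs and reduce recovery time.

We strongly recommend enabling resource logs for all jobs, because they greatly help with debugging and monitoring.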

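Log types

Stream Analytics offers two types of logs: activity logs, which are on by default, and resource logs, which are off by default and must be turned on.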

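Note

You can use services such as Azure Storage, Azure Event Hubs, and Azure Monitor logs to analyze nonconforming data. You are charged based on the pricing model of those services.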

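Note

This article was recently updated to use the term "Azure Monitor logs" instead of "Log Analytics". Log data is still stored in a Log Analytics workspace and is still collected and analyzed by the same Log Analytics service. We are updating the terminology to better reflect the role of logs in Azure Monitor. For more information, see Azure Monitor terminology changes.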

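Debugging using activity logs

Activity logs are on by default and give insight into the operations performed by your Stream Analytics job. The information in the activity logs can help you find the root cause of the issues affecting your job. Follow these steps to use activity logs in Stream Analytics: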

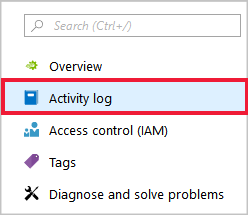

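Sign in to the Azure portal and select "Activity log" under "Overview".

You see a list of the operations that have been performed. Any operation that caused your job to fail has a red info bubble.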

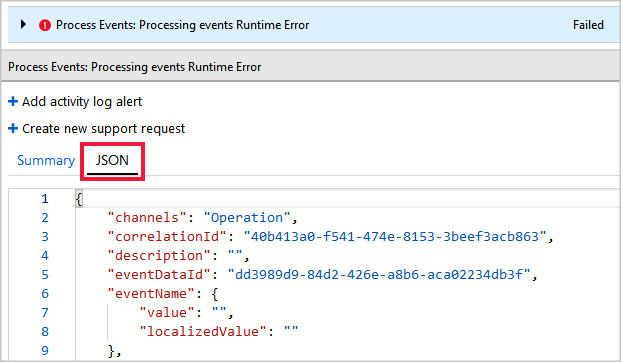

Select an operation to see its summary view. The information here is often limited. To see more details about the operation, select "JSON".

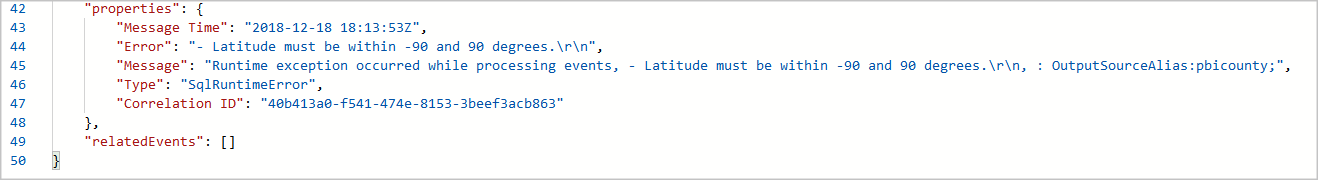

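Scroll down to the "Properties" section of the JSON, which provides details of the error that caused the operation to fail. In this example, the failure was due to a runtime error caused by out-of-range latitude values. Nonconforming data processed by a Stream Analytics job causes a data error. The Stream Analytics documentation describes the different input and output data errors and why they occur.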
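If you copy the JSON out of the portal, the same lookup can be scripted. The following is a minimal sketch only: the sample entry, its field names other than `properties`, and the helper `extract_error_details` are illustrative assumptions, not the exact schema that the portal returns.

```python
import json

# Hypothetical activity-log entry. The field names and values are
# illustrative assumptions, not the exact schema shown in the portal.
SAMPLE_ENTRY = """
{
  "operationName": "Process Events",
  "status": "Failed",
  "properties": {
    "Message": "Runtime error: latitude value out of range",
    "Type": "DataError"
  }
}
"""


def extract_error_details(raw_json: str) -> dict:
    """Return the 'properties' object of a log entry, or an empty dict."""
    entry = json.loads(raw_json)
    properties = entry.get("properties", {})
    # Assumption: 'properties' may arrive as a JSON-encoded string
    # instead of a nested object, so decode it in that case.
    if isinstance(properties, str):
        properties = json.loads(properties)
    return properties


details = extract_error_details(SAMPLE_ENTRY)
for key, value in details.items():
    print(f"{key}: {value}")
```

Reading only the `properties` object keeps the script focused on the part of the entry that describes the failure.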

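You can take corrective action based on the error message in the JSON. In this example, a check that the latitude value is between -90 degrees and 90 degrees needs to be added to the query.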
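The range check itself is simple. As a rough illustration, here is a minimal Python sketch of the logic; in practice the equivalent condition would go into the Stream Analytics query. The event shape, the `latitude` field name, and both helper functions are hypothetical.

```python
MIN_LATITUDE = -90.0
MAX_LATITUDE = 90.0


def is_valid_latitude(value: object) -> bool:
    """Return True only for a number within [-90, 90] degrees."""
    # Reject booleans explicitly, because bool is a subclass of int.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    # NaN fails both comparisons, so it is rejected as well.
    return MIN_LATITUDE <= value <= MAX_LATITUDE


def split_by_latitude(events):
    """Separate events into (valid, rejected) by their 'latitude' field."""
    valid, rejected = [], []
    for event in events:
        if is_valid_latitude(event.get("latitude")):
            valid.append(event)
        else:
            rejected.append(event)
    return valid, rejected


# Hypothetical events; the 'latitude' field name is an assumption.
sample_events = [
    {"deviceId": "a", "latitude": 47.6},
    {"deviceId": "b", "latitude": 123.4},
    {"deviceId": "c", "latitude": None},
]
valid_events, rejected_events = split_by_latitude(sample_events)
print(len(valid_events), "valid,", len(rejected_events), "rejected")
```

Keeping the rejected events, rather than dropping them silently, makes it easier to see how much nonconforming data the job receives.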

If the error message in the activity logs does not help you identify the root cause, enable resource logs and use Azure Monitor logs.

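Send diagnostics to Azure Monitor logs

We strongly recommend turning on resource logs and sending them to Azure Monitor logs. They are off by default. To turn them on, complete these steps:

Create a Log Analytics workspace if you don't already have one. We recommend keeping the Log Analytics workspace in the same region as your Stream Analytics job.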

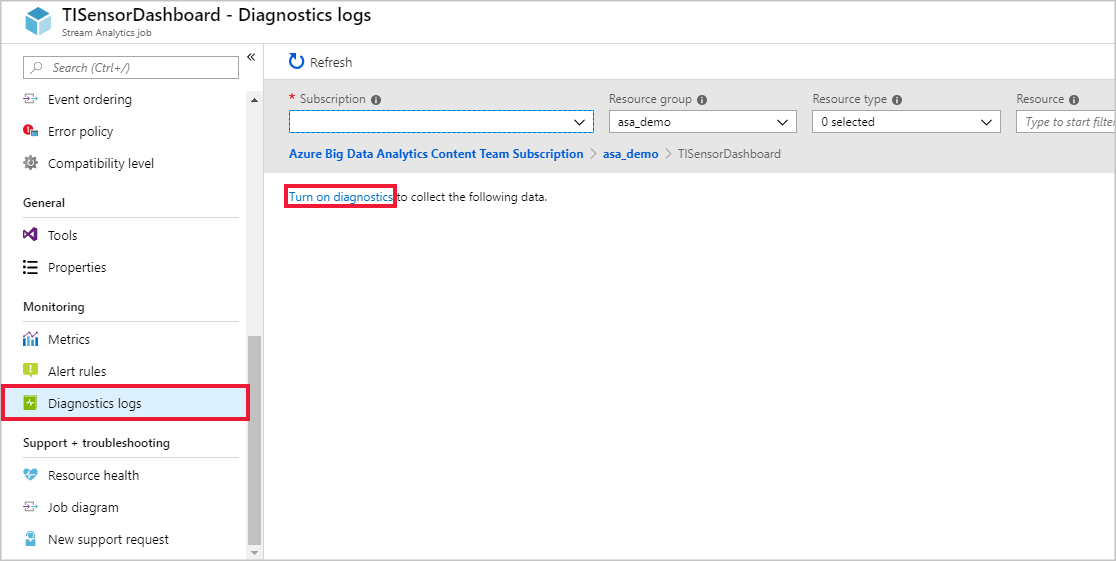

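Sign in to the Azure portal and navigate to your Stream Analytics job. Under "Monitoring", select "Diagnostics logs". Then select "Turn on diagnostics".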

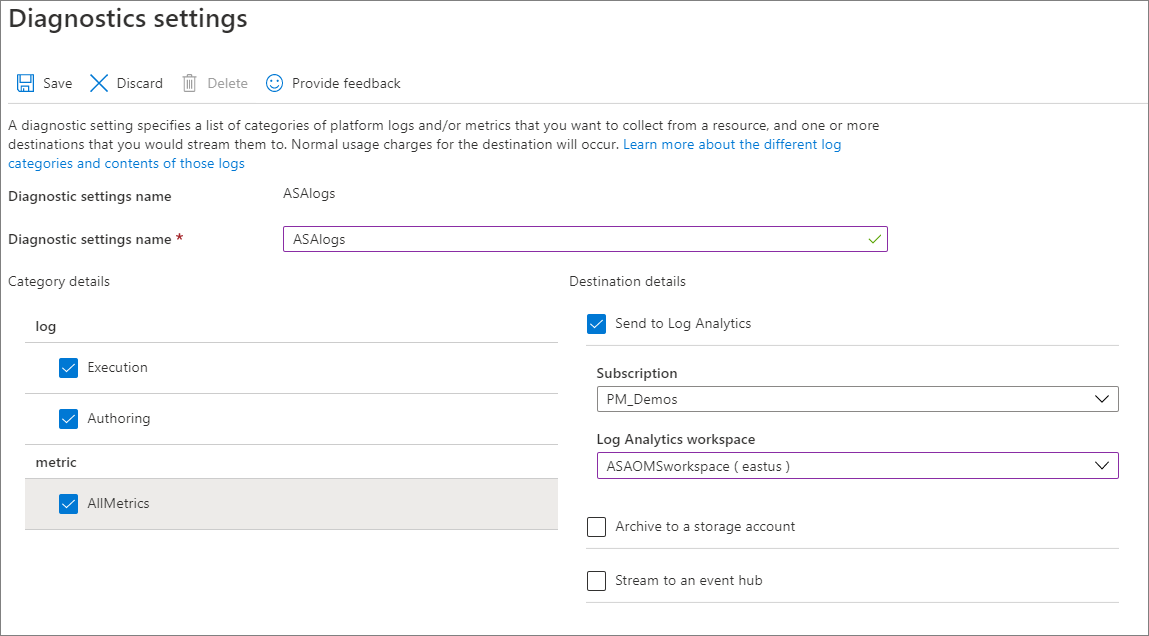

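Provide a name in "Diagnostic settings name", and select the "Execution" and "Authoring" check boxes under "Log" and the "AllMetrics" check box under "Metric". Then select "Send to Log Analytics" and choose your workspace. Select "Save".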

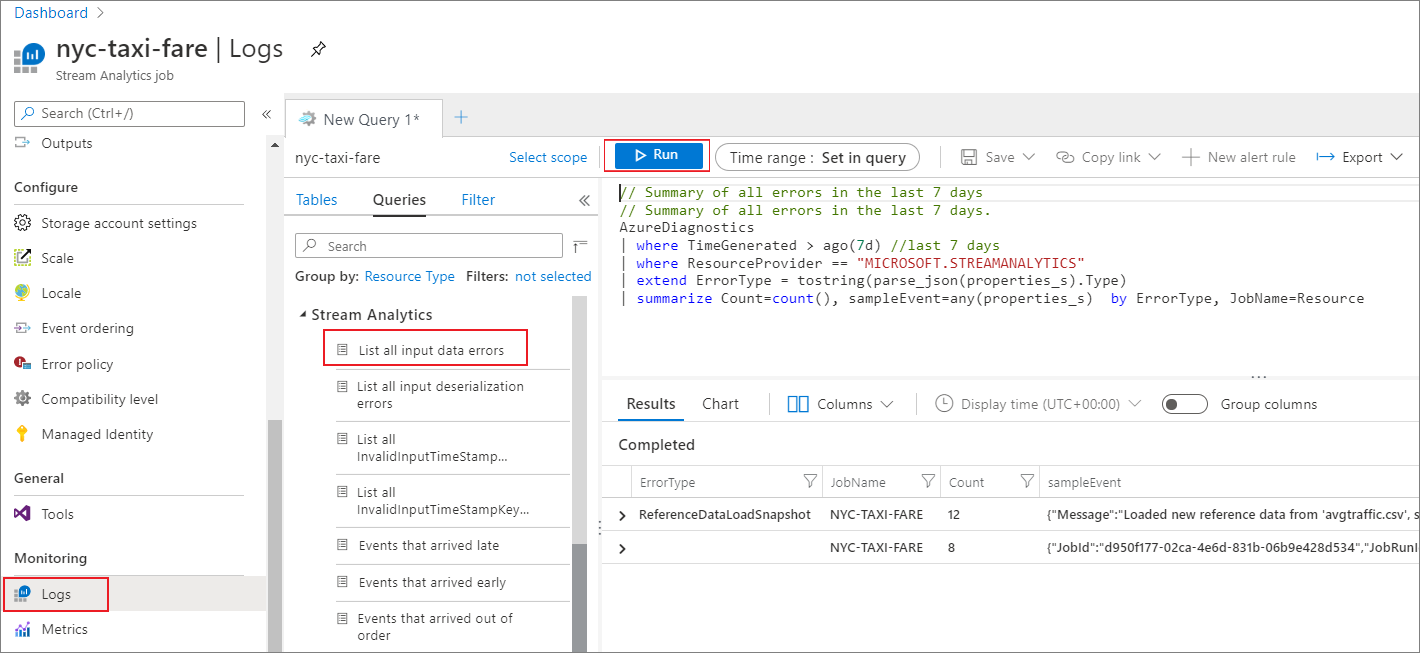

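When your Stream Analytics job starts, resource logs are routed to your Log Analytics workspace. To view the resource logs for your job, select "Logs" under the "Monitoring" section.

Stream Analytics provides predefined queries that let you easily search for the logs you are interested in. Select any predefined query in the left pane, and then select "Run". The query results appear in the bottom pane.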

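Resource log categories

Azure Stream Analytics captures two categories of resource logs:

Authoring: Captures log events related to job authoring operations, such as job creation, adding and deleting inputs and outputs, adding and updating the query, and starting or stopping the job.

Execution: Captures events that occur during job execution.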

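- Connectivity errors

- Data processing errors, including:

  - Events that don't conform to the query definition (mismatched field types and values, missing fields, and so on)

  - Expression evaluation errors

- Other events and errors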

All logs are stored in JSON format. To learn about the schema for resource logs, see Resource logs schema.
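Because the logs are JSON, exported records can be summarized with a few lines of code. The following is a minimal sketch that counts records per category and picks out execution errors. The sample records and the field names `category`, `operationName`, and `level` are assumptions for illustration; check them against the resource logs schema before relying on them.

```python
import json
from collections import Counter

# Hypothetical exported records, one JSON object per line. The field
# names are assumptions; verify them against the resource logs schema.
SAMPLE_RECORDS = [
    '{"category": "Authoring", "operationName": "Start Job", "level": "Informational"}',
    '{"category": "Execution", "operationName": "Receive Events", "level": "Error"}',
    '{"category": "Execution", "operationName": "Send Events", "level": "Informational"}',
]


def count_by_category(lines):
    """Count log records per category; records without one are 'Unknown'."""
    counts = Counter()
    for line in lines:
        record = json.loads(line)
        counts[record.get("category", "Unknown")] += 1
    return counts


def execution_errors(lines):
    """Return the Execution records whose level is 'Error'."""
    records = (json.loads(line) for line in lines)
    return [
        record
        for record in records
        if record.get("category") == "Execution" and record.get("level") == "Error"
    ]


print(dict(count_by_category(SAMPLE_RECORDS)))
for record in execution_errors(SAMPLE_RECORDS):
    print(record["operationName"])
```

A per-category count is a quick way to tell whether a problem lies in job authoring or in job execution before reading individual records.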