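This tutorial shows how to use Azure Stream Analytics to analyze phone call data. A client application generates the call data, which includes fraudulent calls that the Stream Analytics job detects. The techniques in this tutorial also apply to other kinds of fraud detection, such as credit card fraud or identity theft.

In this tutorial, you perform the following tasks:

- Generate sample phone call data and send it to Azure Event Hubs.

- Create a Stream Analytics job.

- Configure the job input and output.

- Define queries to filter fraudulent calls.

- Test and start the job.

- Visualize the results in Power BI.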

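Prerequisites

Before you start, make sure that you have completed the following steps:

- If you don't have an Azure subscription, create a trial subscription.

- Download the phone call event generator app, TelcoGenerator.zip, from the Microsoft Download Center, or get the source code from GitHub.

- You need a Power BI account.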

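Sign in to Azure

Sign in to the Azure portal.

Create an event hub

Before Stream Analytics can analyze the fraudulent call data stream, you need to send some sample data to an event hub. In this tutorial, you use Azure Event Hubs to send the data to Azure.

Use the following steps to create an event hub and then send call data to it:

Sign in to the Azure portal.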

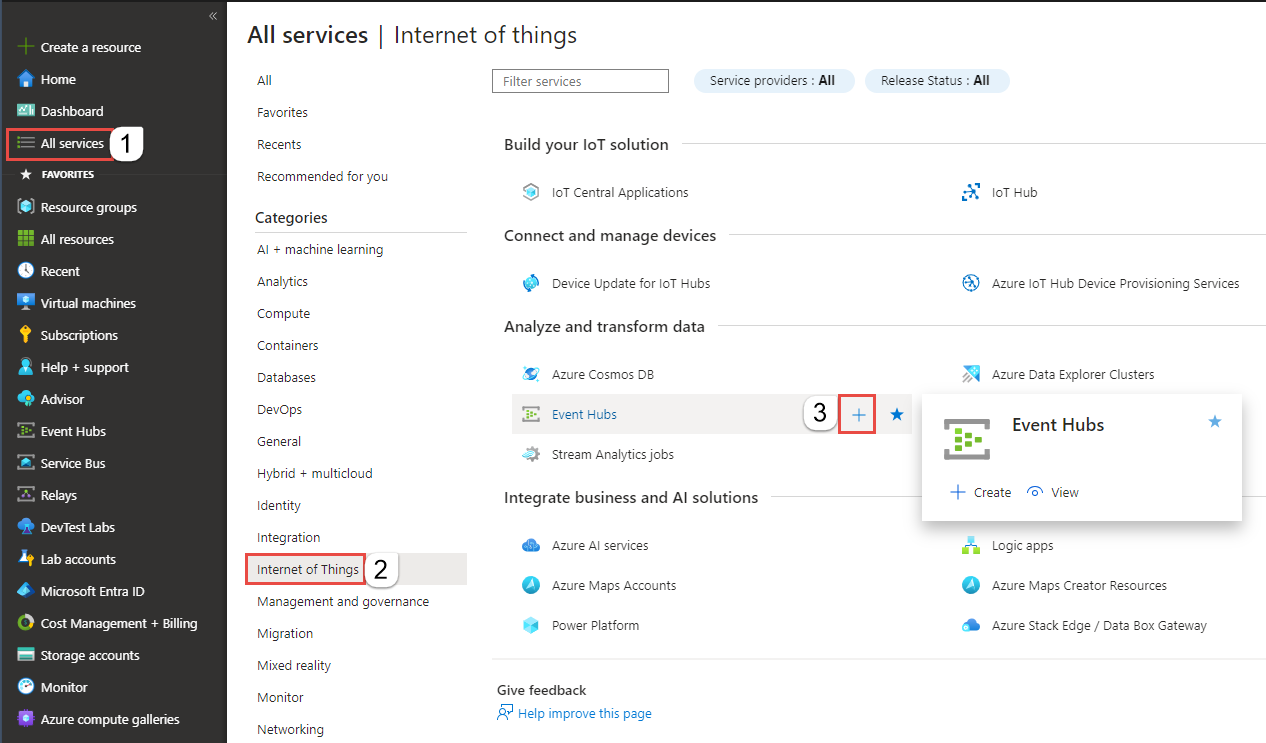

Select "All services" on the left menu, select "Internet of Things", hover over "Event Hubs", and then select the "+ (Add)" button.

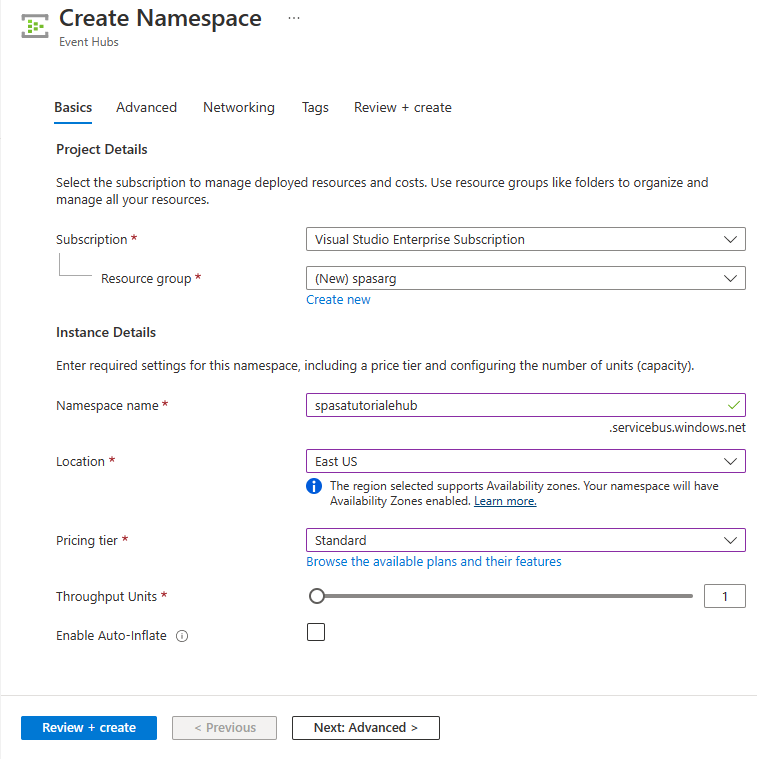

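On the "Create namespace" page, take the following steps:

Select the Azure subscription in which you want to create the event hub.

For "Resource group", select "Create new" and enter a name for the resource group. The Event Hubs namespace is created in this resource group.

For "Namespace name", enter a unique name for the Event Hubs namespace.

For "Location", select the region in which you want to create the namespace.

For "Pricing tier", select "Standard".

Select "Review + create" at the bottom of the page.

On the "Review + create" page of the namespace creation wizard, review all settings and then select "Create" at the bottom of the page.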

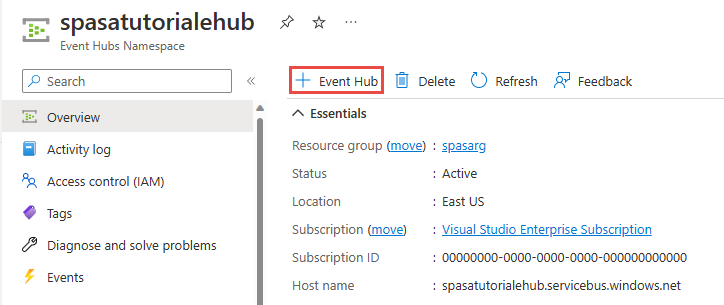

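After the namespace is deployed successfully, select "Go to resource" to navigate to the "Event Hubs Namespace" page.

On the "Event Hubs Namespace" page, select "+ Event Hub" on the command bar.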

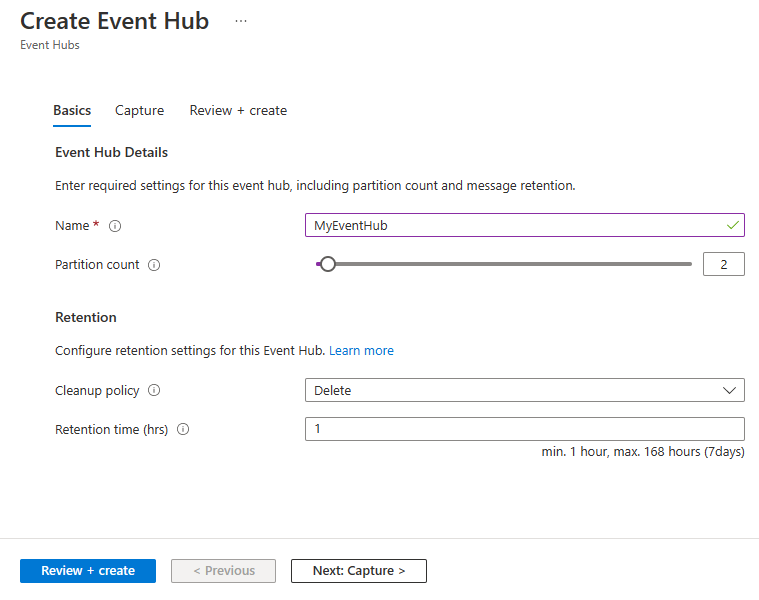

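On the "Create Event Hub" page, enter a name for the event hub. Set the partition count to 2. Use the default options for the remaining settings, and then select "Review + create".

On the "Review + create" page, select "Create" at the bottom of the page. Then wait for the deployment to succeed.

Grant access to the event hub and get a connection string

Before an application can send data to Azure Event Hubs, the event hub must have a policy that allows access. The access policy produces a connection string that includes authorization information.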

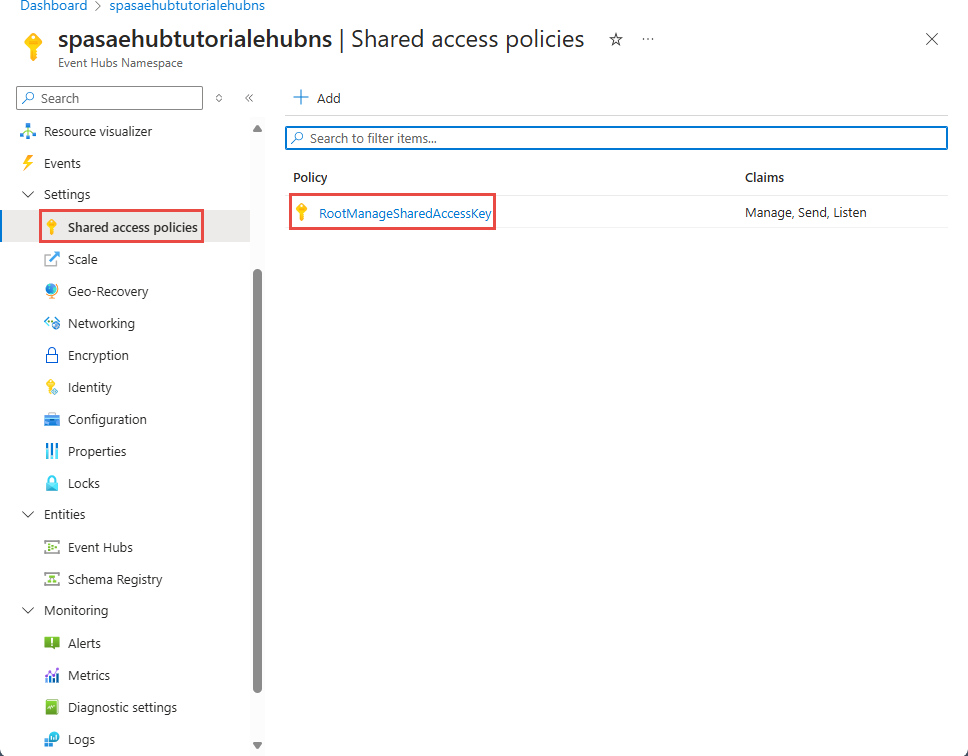

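On the "Event Hubs Namespace" page, select "Shared access policies" on the left menu.

Select RootManageSharedAccessKey from the list of policies.

Then select the copy button next to "Connection string - primary key".

Paste the connection string into a text editor. You need this connection string in the next section.

The connection string looks like this:

    Endpoint=sb://<Your event hub namespace>.servicebus.chinacloudapi.cn/;SharedAccessKeyName=<Your shared access policy name>;SharedAccessKey=<generated key>

Notice that the connection string contains multiple key-value pairs separated by semicolons: Endpoint, SharedAccessKeyName, and SharedAccessKey.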
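To make the key-value structure concrete, here is a minimal Python sketch that splits a connection string of this shape into its parts. The function name `parse_connection_string` and the sample values are hypothetical and used only for illustration; they are not real credentials and are not part of any Azure SDK.

```python
def parse_connection_string(conn_str):
    """Split an Event Hubs style connection string into key-value pairs."""
    pairs = {}
    for part in conn_str.split(";"):
        if not part.strip():
            continue  # tolerate a trailing semicolon
        # Split on the first "=" only, because keys often end in "=" padding.
        key, _, value = part.partition("=")
        pairs[key.strip()] = value.strip()
    return pairs


# Placeholder values for illustration only.
sample = (
    "Endpoint=sb://mynamespace.servicebus.chinacloudapi.cn/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;"
    "SharedAccessKey=abc123="
)
parsed = parse_connection_string(sample)
print(sorted(parsed))
```

Splitting on the first `=` only matters because the generated key is typically base64 text that can itself end in `=`.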

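Start the event generator application

Before you start the TelcoGenerator app, configure it to send data to the event hub that you created earlier.

Extract the contents of the TelcoGenerator.zip file.

Open the TelcoGenerator\TelcoGenerator\telcodatagen.exe.config file in a text editor of your choice. There is more than one .config file, so make sure that you open the correct one.

Update the <appSettings> element in the config file with the following details:

- Set the value of the EventHubName key to the name of your event hub. This is the same value that appears as EntityPath at the end of an event hub-level connection string.

- Set the value of the Microsoft.ServiceBus.ConnectionString key to the connection string to the namespace. If you use a connection string to an event hub rather than to a namespace, remove the EntityPath value (;EntityPath=myeventhub) at the end. Don't forget to remove the semicolon that precedes the EntityPath value.

Save the file.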
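The two edits described above can also be sketched in Python. This is only an illustration under stated assumptions: `SAMPLE_CONFIG` is a hypothetical, minimal stand-in for the real telcodatagen.exe.config (which may contain more elements), and the helper names are invented. Editing the file by hand, as the steps describe, remains the straightforward path.

```python
import xml.etree.ElementTree as ET

# Hypothetical minimal config; only the two keys named in the steps are assumed.
SAMPLE_CONFIG = """<configuration>
  <appSettings>
    <add key="EventHubName" value="" />
    <add key="Microsoft.ServiceBus.ConnectionString" value="" />
  </appSettings>
</configuration>"""


def split_entity_path(conn_str):
    """Return (connection string without EntityPath, EntityPath value or None)."""
    kept, entity_path = [], None
    for part in conn_str.split(";"):
        if part.startswith("EntityPath="):
            entity_path = part[len("EntityPath="):]
        elif part:
            kept.append(part)
    # Re-joining with ";" also drops the semicolon that preceded EntityPath.
    return ";".join(kept), entity_path


def update_app_settings(config_xml, event_hub_name, conn_str):
    """Set the two appSettings values and return the updated XML text."""
    root = ET.fromstring(config_xml)
    namespace_conn_str, _ = split_entity_path(conn_str)
    new_values = {
        "EventHubName": event_hub_name,
        "Microsoft.ServiceBus.ConnectionString": namespace_conn_str,
    }
    for add in root.findall("appSettings/add"):
        if add.get("key") in new_values:
            add.set("value", new_values[add.get("key")])
    return ET.tostring(root, encoding="unicode")


# Placeholder connection string for illustration only.
conn = (
    "Endpoint=sb://mynamespace.servicebus.chinacloudapi.cn/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;"
    "SharedAccessKey=abc123=;EntityPath=myeventhub"
)
updated = update_app_settings(SAMPLE_CONFIG, "myeventhub", conn)
print(updated)
```

Note that `ElementTree` does not preserve comments or the XML declaration when it re-serializes a document, so a real file rewritten this way may lose them.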

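Next, open a command window and change to the folder where you unzipped the TelcoGenerator application. Then enter the following command:

    .\telcodatagen.exe 1000 0.2 2

This command takes the following parameters:

- Number of call data records per hour.

- Fraud probability, that is, how often the app simulates a fraudulent call. A value of 0.2 means that about 20% of the call records look fraudulent.

- Duration in hours, that is, the number of hours that the app should run. You can also stop the app at any time by ending the process (Ctrl+C) at the command line.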
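As a rough sanity check on what the three parameters imply, the following Python sketch estimates how many records the command above would produce. It is a back-of-the-envelope calculation based only on the parameter descriptions, not on the generator's actual implementation; the function name `expected_volume` is hypothetical.

```python
def expected_volume(records_per_hour, fraud_probability, duration_hours):
    """Estimate total records and the share that looks fraudulent."""
    total = records_per_hour * duration_hours
    fraudulent = round(total * fraud_probability)
    return total, fraudulent


# Parameters from the command: 1000 records/hour, probability 0.2, 2 hours.
total, fraudulent = expected_volume(1000, 0.2, 2)
print(total, fraudulent)
```

Under these assumptions, a full two-hour run yields about 2000 records, of which roughly 400 look fraudulent. The real counts vary because the generator is randomized.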

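After a few seconds, the app starts displaying phone call records on the screen as it sends them to the event hub. The phone call data contains the following fields:

| Record | Definition |
| --- | --- |
| CallRecTime | The timestamp for the call start time. |
| SwitchNum | The telephone switch used to connect the call. In this example, the switches are strings that represent the country/region of origin (US, China, UK, Germany, or Australia). |
| CallingNum | The phone number of the caller. |
| CallingIMSI | The International Mobile Subscriber Identity (IMSI). It is a unique identifier of the caller. |
| CalledNum | The phone number of the call recipient. |
| CalledIMSI | The International Mobile Subscriber Identity (IMSI). It is a unique identifier of the call recipient. |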
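The field list above can be pictured as a small record type. The Python sketch below models one call record and serializes it. The field names come from the table; everything else is an assumption made for illustration, including the JSON encoding, the string types, the timestamp format, and all sample values.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class CallRecord:
    CallRecTime: str   # timestamp of the call start (format assumed)
    SwitchNum: str     # switch, given as the country/region of origin
    CallingNum: str    # caller's phone number
    CallingIMSI: str   # caller's unique subscriber identity
    CalledNum: str     # recipient's phone number
    CalledIMSI: str    # recipient's unique subscriber identity


# All values below are made up for illustration.
record = CallRecord(
    CallRecTime="2024-01-01T00:00:03Z",
    SwitchNum="US",
    CallingNum="5550100",
    CallingIMSI="466920000000001",
    CalledNum="5550199",
    CalledIMSI="466920000000002",
)
payload = json.dumps(asdict(record))
print(payload)
```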

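Create a Stream Analytics job

Now that you have a stream of call events, you can create a Stream Analytics job that reads data from the event hub.

- To create a Stream Analytics job, navigate to the Azure portal.

- Select "Create a resource" and search for "Stream Analytics job". Select the "Stream Analytics job" tile and select "Create".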

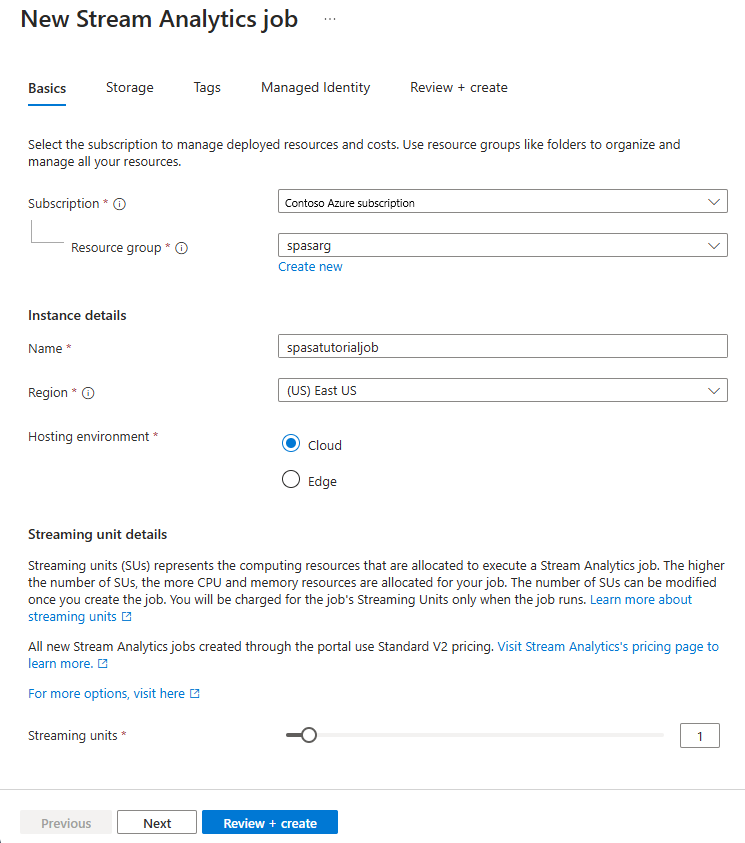

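- On the "New Stream Analytics job" page, take the following steps:

For "Subscription", select the subscription that contains the Event Hubs namespace.

For "Resource group", select the resource group that you created earlier.

In the "Instance details" section, for "Name", enter a unique name for the Stream Analytics job.

For "Region", select the region in which you want to create the Stream Analytics job. We recommend placing the job and the event hub in the same region for best performance; doing so also avoids data transfer charges between regions.

For "Hosting environment", select "Cloud" if it isn't already selected. Stream Analytics jobs can be deployed to the cloud or to edge devices. "Cloud" deploys to the Azure cloud, and "Edge" deploys to an IoT Edge device.

For "Streaming units", select 1. Streaming units represent the computing resources required to run a job. By default, this value is set to 1. To learn about scaling streaming units, see the article on understanding and adjusting streaming units.

Select "Review + create" at the bottom of the page.

- On the "Review + create" page, review the settings, and then select "Create" to create the Stream Analytics job.

- After the job is deployed, select "Go to resource" to navigate to the "Stream Analytics job" page.

Configure job input

The next step is to define an input source from which the job reads data, using the event hub that you created in the previous section.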

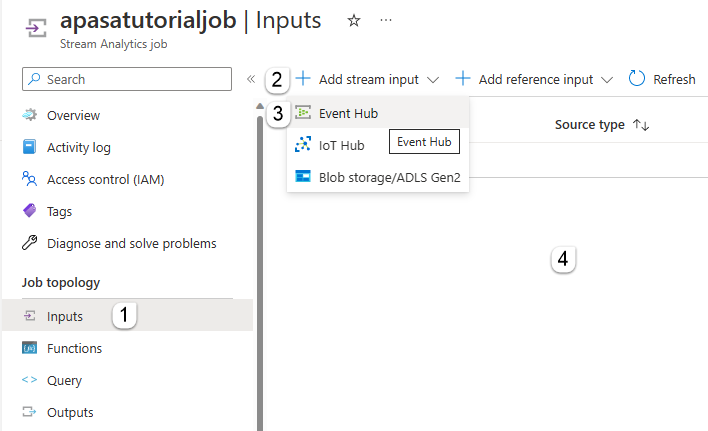

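On the "Stream Analytics job" page, in the "Job topology" section on the left menu, select "Inputs".

On the "Inputs" page, select "+ Add input" and then "Event Hub".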

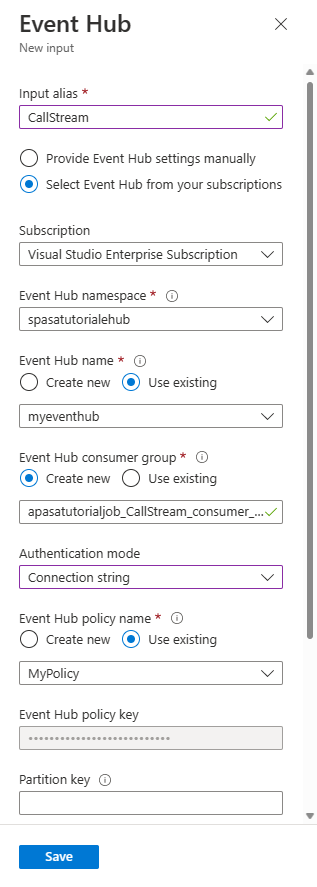

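On the "Event Hub" page, take the following steps:

For "Input alias", enter CallStream. The input alias is a friendly name that identifies your input. It can contain only alphanumeric characters, hyphens, and underscores, and must be 3 to 63 characters long.

For "Subscription", select the Azure subscription in which you created the event hub. The event hub can be in the same subscription as the Stream Analytics job or in a different one.

For "Event Hubs namespace", select the Event Hubs namespace that you created in the previous section. All namespaces available in your current subscription are listed in the dropdown.

For "Event hub name", select the event hub that you created in the previous section. All event hubs available in the selected namespace are listed in the dropdown.

For "Event hub consumer group", keep the "Create new" option selected so that a new consumer group is created on the event hub. We recommend using a distinct consumer group for each Stream Analytics job. If no consumer group is specified, the Stream Analytics job uses the $Default consumer group. When a job contains a self-join or has multiple inputs, some inputs might later be read by more than one reader. This situation affects the number of readers in a single consumer group.

For "Authentication mode", select "Connection string". This option makes it easier to test the tutorial.

For "Event hub policy name", select "Use existing", and then select the policy that you used earlier, RootManageSharedAccessKey.

Select "Save" at the bottom of the page.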
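The naming rule for the input alias stated in the steps above can be expressed as a short check. The Python sketch below is illustrative only: it assumes that "alphanumeric" means ASCII letters and digits, and the function name `is_valid_input_alias` is hypothetical. The portal performs its own validation.

```python
import re

# Letters, digits, hyphens, and underscores; 3 to 63 characters in total.
_ALIAS_PATTERN = re.compile(r"[A-Za-z0-9_-]{3,63}")


def is_valid_input_alias(alias):
    """Return True if the alias satisfies the stated naming rule."""
    return _ALIAS_PATTERN.fullmatch(alias) is not None


for candidate in ("CallStream", "ab", "Call Stream"):
    print(candidate, is_valid_input_alias(candidate))
```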

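Configure job output

The last step is to define an output sink where the job writes the transformed data. In this tutorial, you output and visualize the data with Power BI.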

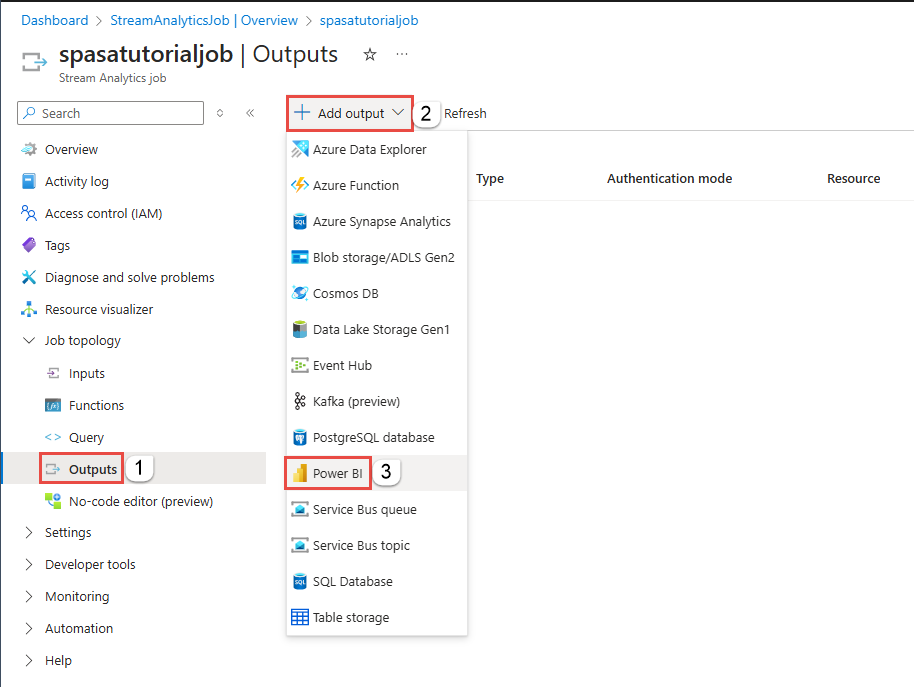

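From the Azure portal, open "All resources", and select your Stream Analytics job (named ASATutorial in this example).

In the "Job topology" section of the Stream Analytics job, select the "Outputs" option.

Select "+ Add output" > "Power BI".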

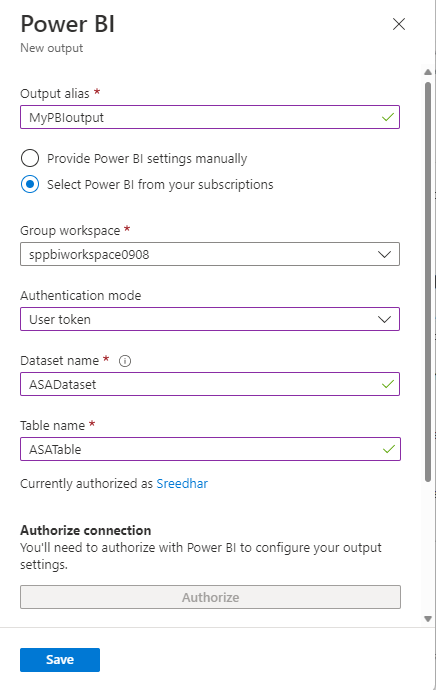

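Fill in the output form with the following details:

| Setting | Suggested value |
| --- | --- |
| Output alias | MyPBIoutput |
| Group workspace | My workspace |
| Dataset name | ASAdataset |
| Table name | ASATable |
| Authentication mode | User token |

Select "Authorize" and follow the prompts to authenticate with Power BI.

Select "Save" at the bottom of the Power BI page.

This tutorial uses the user token authentication mode.

Create queries to transform real-time data

At this point, you have a Stream Analytics job set up to read an incoming data stream. The next step is to create a query that analyzes the data in real time. The queries use a SQL-like language that has some extensions specific to Stream Analytics.

In this section of the tutorial, you create and test several queries to learn a few ways in which you can transform an input stream for analysis.

The queries you create here only display the transformed data on the screen. In a later section, you write the transformed data to Power BI.

To learn more about the language, see the Azure Stream Analytics Query Language Reference.

Test using a pass-through query

If you want to archive every event, you can use a pass-through query to read all the fields in the payload of the event.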

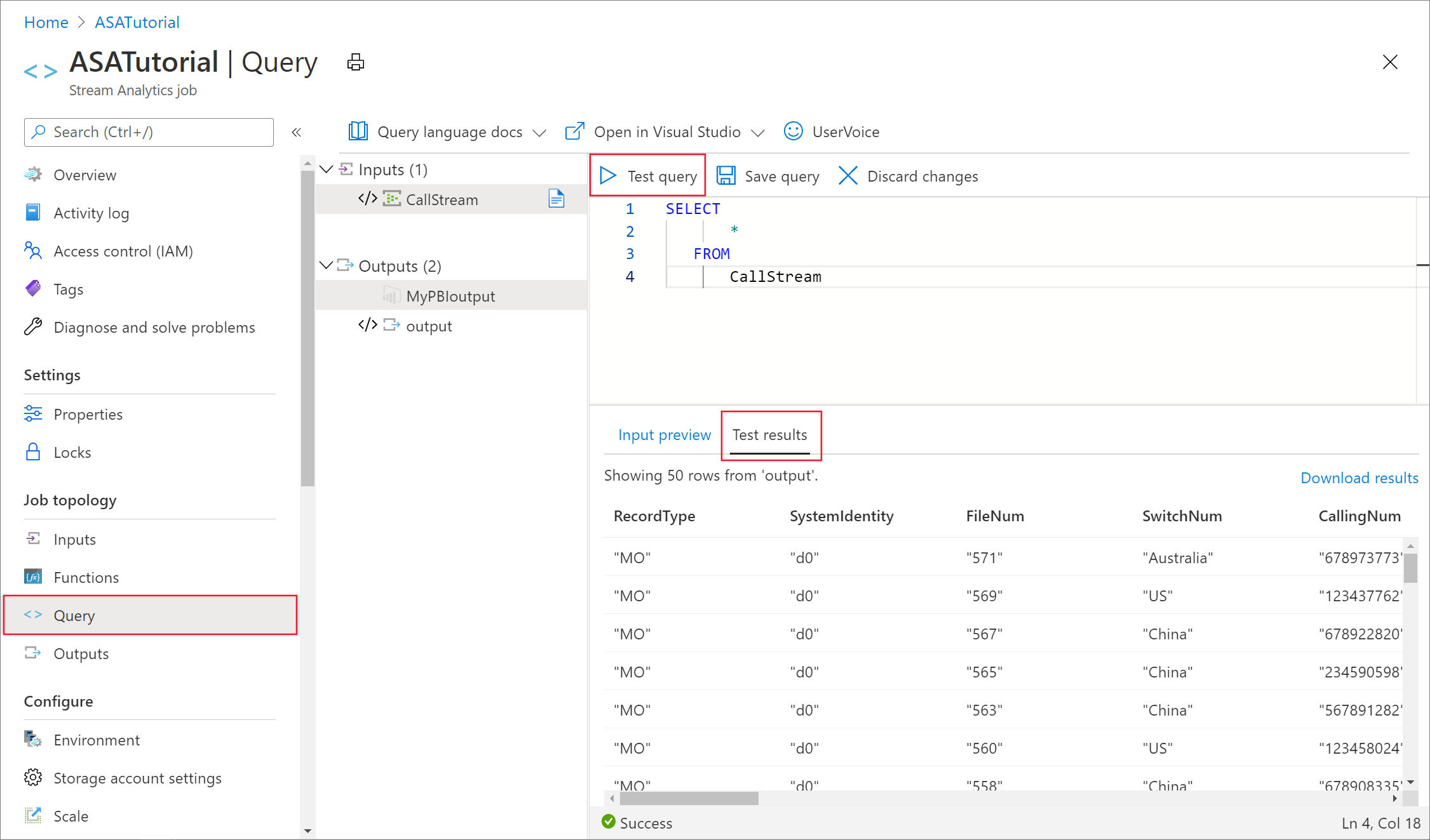

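In the Azure portal, navigate to your Stream Analytics job, and select "Query" under "Job topology" on the left menu.

In the query window, enter this query:

    SELECT *
    FROM CallStream

Note: As with SQL, keywords are not case-sensitive, and whitespace is not significant.

In this query, CallStream is the alias that you specified when you created the input. If you used a different alias, use that name instead.

Select "Test query".

The Stream Analytics job runs the query against sample data from the input and displays the output at the bottom of the window. The results indicate that the event hub and the Stream Analytics job are configured correctly.

The exact number of records that you see depends on how many records were captured in the sample.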

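Reduce the number of fields using a column projection

In many cases, your analysis doesn't need all the columns from the input stream. You can use a query to project a smaller set of returned fields than the pass-through query returns.

Run the following query and notice the output.

    SELECT CallRecTime, SwitchNum, CallingIMSI, CallingNum, CalledNum
    INTO [MyPBIoutput]
    FROM CallStream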
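To illustrate what the projection does to each event, here is a small Python sketch that keeps only the five selected columns of a record. It mimics the effect of the SELECT list on one event and is not how Stream Analytics is implemented; the sample event values and the function name `project` are made up.

```python
# The five columns named in the SELECT list of the query above.
PROJECTED = ("CallRecTime", "SwitchNum", "CallingIMSI", "CallingNum", "CalledNum")


def project(event, columns=PROJECTED):
    """Return a copy of the event that contains only the listed columns."""
    return {name: event[name] for name in columns}


# Made-up event containing all six fields from the generator.
event = {
    "CallRecTime": "2024-01-01T00:00:03Z",
    "SwitchNum": "US",
    "CallingNum": "5550100",
    "CallingIMSI": "466920000000001",
    "CalledNum": "5550199",
    "CalledIMSI": "466920000000002",
}
slim = project(event)
print(slim)
```

In this sketch the CalledIMSI field is dropped, which is the only difference from the pass-through output.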

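Count incoming calls by region: tumbling window with aggregation

Suppose you want to count the number of incoming calls per region. In streaming data, when you want to perform aggregate functions like counting, you need to segment the stream into temporal units, because the data stream itself is effectively endless. You do this with a Stream Analytics window function. You can then work with the data inside that window as a unit.

This transformation needs a sequence of temporal windows that don't overlap, so that each window has a discrete set of data that you can group and aggregate. This type of window is called a tumbling window. Within the tumbling window, you can get a count of the incoming calls grouped by SwitchNum, which represents the country/region where the call originated.

Paste the following query in the query editor:

    SELECT
        System.Timestamp as WindowEnd, SwitchNum, COUNT(*) as CallCount
    FROM
        CallStream TIMESTAMP BY CallRecTime
    GROUP BY TUMBLINGWINDOW(s, 5), SwitchNum

This query uses the TIMESTAMP BY keyword in the FROM clause to specify which timestamp field in the input stream defines the tumbling window. In this case, the window divides the data into segments by the CallRecTime field in each record. (If no field is specified, the windowing operation uses the time that each event arrives at the event hub. See "Arrival Time vs Application Time" in the Stream Analytics Query Language Reference.)

The projection includes System.Timestamp, which returns a timestamp for the end of each window.

To specify that you want to use a tumbling window, use the TUMBLINGWINDOW function in the GROUP BY clause. In the function, you specify a time unit (anywhere from a microsecond to a day) and a window size (how many units). In this example, the tumbling window consists of 5-second intervals, so you get a count of calls by country/region for every 5 seconds.

Select "Test query". In the results, notice that the timestamps under WindowEnd are in 5-second increments.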
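The grouping that the tumbling window query performs can be approximated in a few lines of Python. This is a simplified, in-memory sketch rather than the Stream Analytics engine: timestamps are plain seconds, the function name `tumbling_window_counts` is hypothetical, and it assumes each window covers the interval (end - 5, end], so an event exactly on a boundary is counted in the window that ends there.

```python
import math
from collections import Counter


def tumbling_window_counts(events, window_seconds=5):
    """Count events per (window end, SwitchNum).

    `events` is an iterable of (timestamp_seconds, switch_num) pairs.
    Assumption: a window covers (end - size, end].
    """
    counts = Counter()
    for timestamp, switch_num in events:
        # Round the timestamp up to the next multiple of the window size.
        window_end = math.ceil(timestamp / window_seconds) * window_seconds
        counts[(window_end, switch_num)] += 1
    return dict(counts)


# Made-up events: (seconds since an arbitrary start, switch).
events = [(1, "US"), (3, "US"), (5, "UK"), (6, "US"), (9, "UK"), (10, "UK")]
result = tumbling_window_counts(events)
for (window_end, switch_num), call_count in sorted(result.items()):
    print(window_end, switch_num, call_count)
```

Because every event maps to exactly one window end, no event is counted twice. This is the property that distinguishes tumbling windows from overlapping window types.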

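Detect SIM fraud using a self-join

For this example, consider fraudulent usage to be calls that originate from the same user but in different locations within 5 seconds of one another. For example, the same user can't legitimately make a call from the US and Australia at the same time.

To check for these cases, you can use a self-join of the streaming data to join the stream to itself based on the CallRecTime value. You can then look for call records where the CallingIMSI value (the caller's identifier) is the same, but the SwitchNum value (country/region of origin) is different.

When you use a join with streaming data, the join must set limits on how far apart in time the matching rows can be. As noted earlier, the streaming data is effectively endless. You specify the time bounds for the relationship inside the ON clause of the join by using the DATEDIFF function. In this example, the join is based on a 5-second interval of call data.

Paste the following query in the query editor:

    SELECT System.Timestamp AS WindowEnd, COUNT(*) AS FraudulentCalls
    INTO "MyPBIoutput"
    FROM "CallStream" CS1 TIMESTAMP BY CallRecTime
    JOIN "CallStream" CS2 TIMESTAMP BY CallRecTime
    ON CS1.CallingIMSI = CS2.CallingIMSI
    AND DATEDIFF(ss, CS1, CS2) BETWEEN 1 AND 5
    WHERE CS1.SwitchNum != CS2.SwitchNum
    GROUP BY TumblingWindow(Duration(second, 1))

This query is like any SQL join except for the DATEDIFF function in the join. This version of DATEDIFF is specific to Stream Analytics, and it must appear in the ON...BETWEEN clause. The parameters are a time unit (seconds in this example) and the aliases of the two sources for the join. This function differs from the standard SQL DATEDIFF function.

The WHERE clause includes the condition that flags the fraudulent call: the originating switches aren't the same.

Select "Test query". Review the output, and then select "Save query".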
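The matching logic of the self-join can be sketched in Python as a nested loop over an in-memory list of calls. This is an illustration of the join conditions only, under stated assumptions: timestamps are integer seconds, the subtraction stands in for DATEDIFF(ss, CS1, CS2), the dictionary keys `time`, `imsi`, and `switch` are hypothetical names, and the final grouping into 1-second windows is omitted.

```python
def find_suspicious_pairs(calls, max_gap_seconds=5):
    """Return (earlier, later) call pairs that satisfy the join conditions."""
    pairs = []
    for cs1 in calls:
        for cs2 in calls:
            gap = cs2["time"] - cs1["time"]  # stands in for DATEDIFF(ss, CS1, CS2)
            if (cs1["imsi"] == cs2["imsi"]
                    and 1 <= gap <= max_gap_seconds      # BETWEEN 1 AND 5
                    and cs1["switch"] != cs2["switch"]):  # WHERE clause
                pairs.append((cs1, cs2))
    return pairs


# Made-up calls for illustration.
calls = [
    {"time": 0, "imsi": "A", "switch": "US"},
    {"time": 3, "imsi": "A", "switch": "Australia"},
    {"time": 4, "imsi": "B", "switch": "UK"},
    {"time": 6, "imsi": "B", "switch": "UK"},
    {"time": 20, "imsi": "A", "switch": "UK"},
]
suspicious = find_suspicious_pairs(calls)
for earlier, later in suspicious:
    print(earlier["imsi"], earlier["switch"], "->", later["switch"])
```

The lower bound of 1 second keeps a call from matching itself, and the upper bound is what keeps the join finite on an endless stream. A streaming engine only needs to retain the last few seconds of events, whereas this sketch compares every pair.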

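Start the job and visualize output

To start the job, navigate to the job "Overview" and select "Start".

Select "Now" for the job output start time, and then select "Start". You can view the job status in the notification bar.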

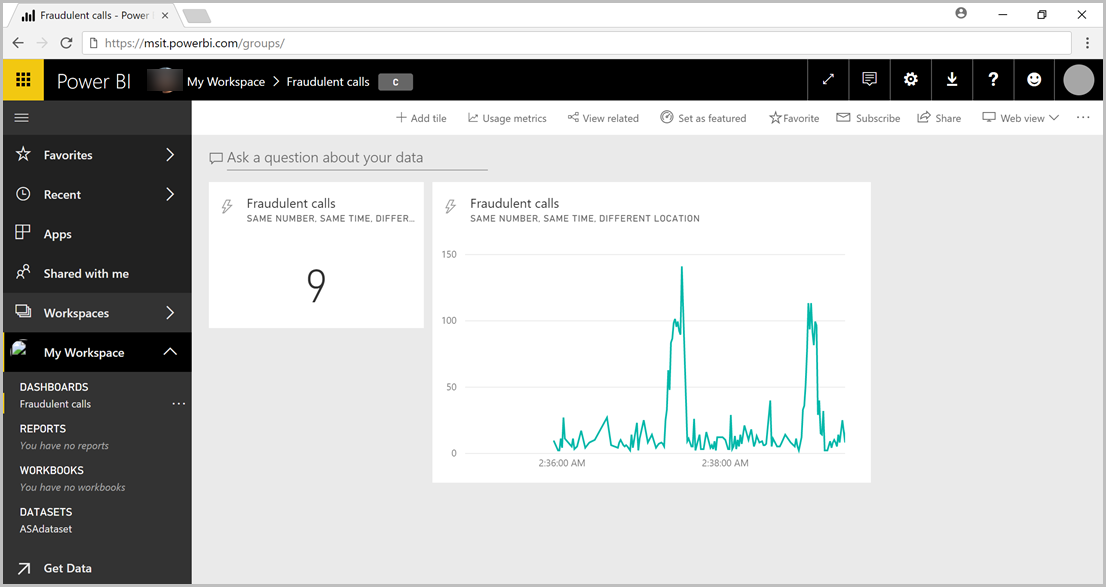

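After the job starts successfully, navigate to Power BI and sign in with your work or school account. If the Stream Analytics job query is producing results, the ASAdataset dataset that you defined appears under the "Datasets" tab.

From your Power BI workspace, select "+ Create" to create a new dashboard named Fraudulent Calls.

At the top of the window, select "Edit" and "Add tile".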

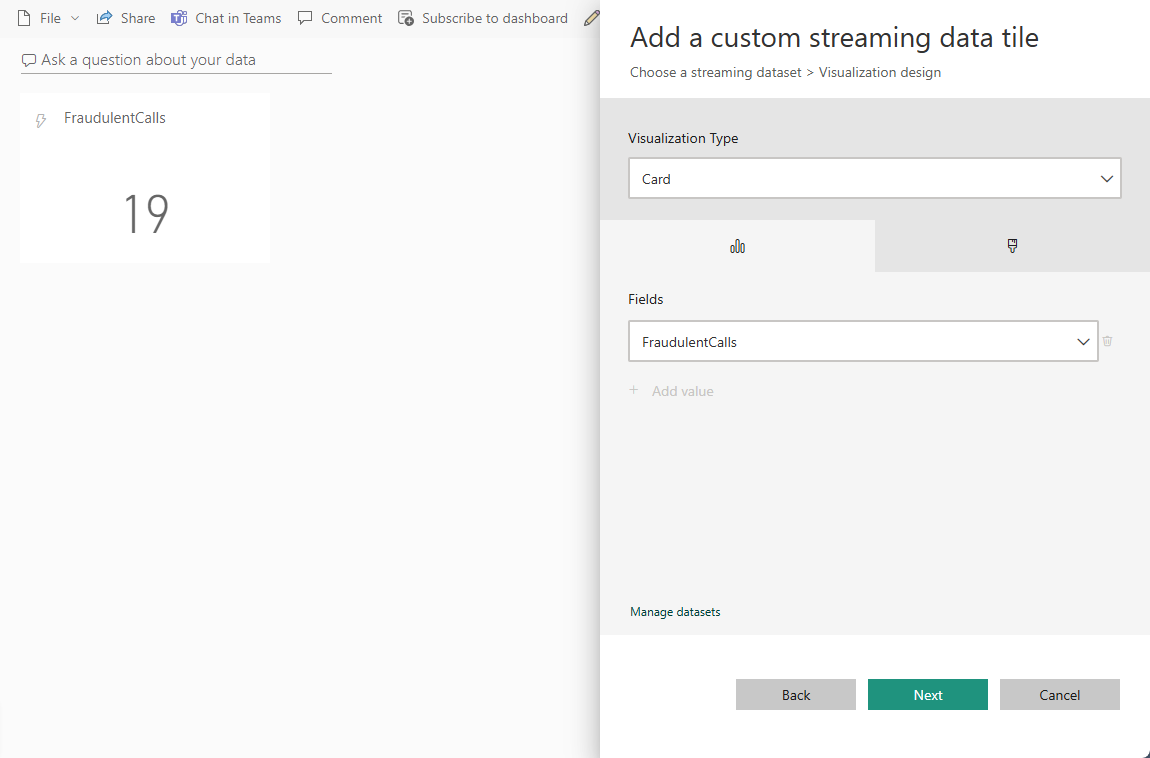

In the "Add tile" window, select "Custom Streaming Data" and "Next".

Under "Your datasets", select ASAdataset, and then select "Next".

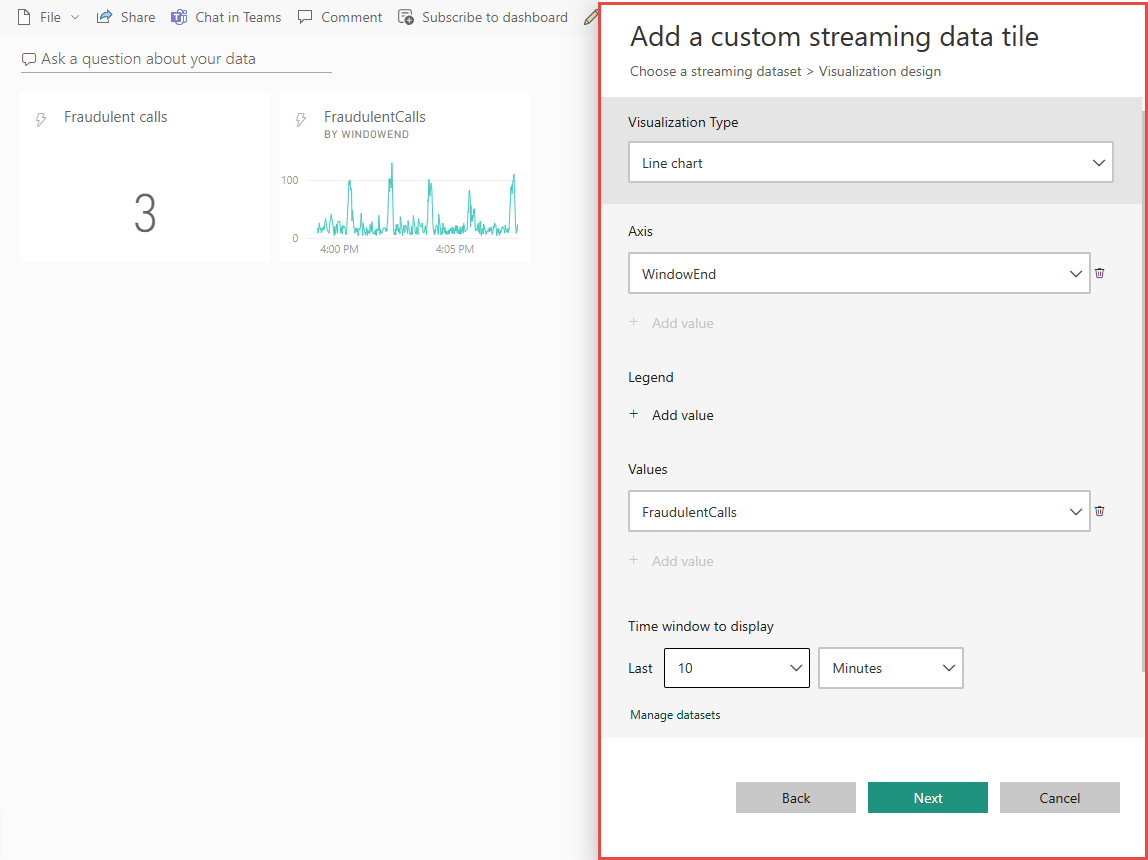

Select "Card" from the "Visualization type" dropdown, add fraudulentcalls to "Fields", and then select "Next".

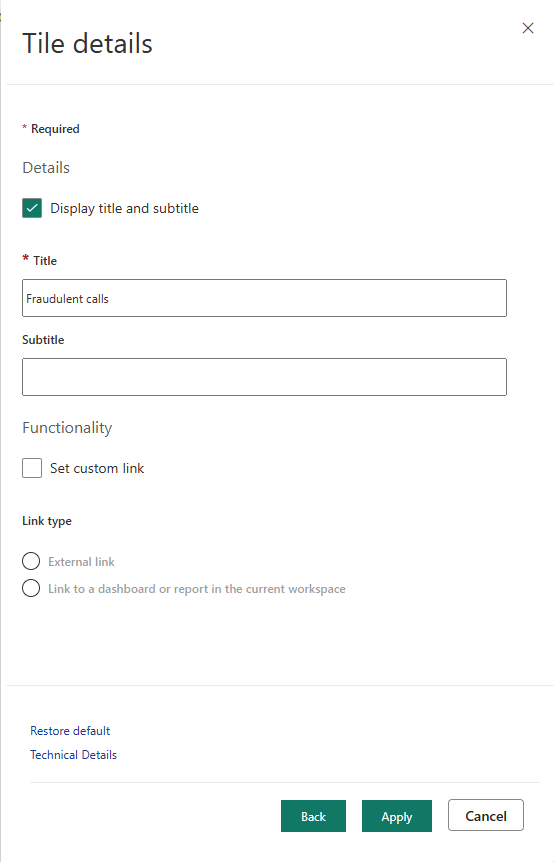

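Enter a name for the tile (for example, Fraudulent calls), and then select "Apply" to create the tile.

Repeat the steps for adding a tile, starting from "Add tile", with the following options:

- When you get to "Visualization type", select "Line chart".

- Add an axis, and select windowend.

- Add a value, and select fraudulentcalls.

- For "Time window to display", select the last 10 minutes.

After both tiles are added, the dashboard shows a card and a line chart of the fraudulent call count. As long as the event hub sender application and the Stream Analytics job are running, the Power BI dashboard updates periodically as new data arrives.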

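Embed your Power BI dashboard in a web application

For this part of the tutorial, you use a sample ASP.NET web application created by the Power BI team to embed your dashboard. For more information about embedding dashboards, see the article on embedding with Power BI.

To set up the application, go to the Power BI-Developer-Samples GitHub repository and follow the instructions in the "User Owns Data" section (use the redirect and homepage URLs under the integrate-web-app subsection). Because this tutorial uses the Dashboard example, use the integrate-web-app sample code in the GitHub repository. After the application is running in your browser, follow these steps to embed the dashboard that you created earlier into the web page:

Select "Sign in to Power BI", which grants the application access to the dashboards in your Power BI account.

Select the "Get Dashboards" button, which displays your account's dashboards in a table. Find the name of the dashboard that you created earlier, powerbi-embedded-dashboard, and copy the corresponding EmbedUrl.

Finally, paste the EmbedUrl into the corresponding text field and select "Embed Dashboard". You can now view the same dashboard embedded within a web application.

Next steps

In this tutorial, you created a simple Stream Analytics job, analyzed the incoming data, and presented the results in a Power BI dashboard. To learn more about Stream Analytics jobs, continue to the next tutorial: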