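Azure virtual machine network throughput determines the bandwidth that an application can use for network communication. Azure offers a variety of VM sizes and types, each with a different mix of network performance capabilities, measured in megabits per second (Mbps). Understanding how bandwidth is allocated helps you optimize application performance and choose the right VM size for your workload requirements.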

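Each VM size has a different mix of performance capabilities. One of them is network throughput (also called bandwidth), expressed in megabits per second (Mbps). Because virtual machines are hosted on shared hardware, network capacity must be shared fairly among the VMs on the same hardware. Larger VMs are allocated relatively more bandwidth than smaller ones.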

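The network bandwidth allocated to each VM is metered on egress (outbound) traffic from the VM. All network traffic leaving the VM counts toward the allocated limit, regardless of destination. For example, if a VM has a 1,000-Mbps limit, that limit applies whether the outbound traffic goes to another VM in the same virtual network or to a destination outside Azure.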
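As a rough illustration of what an egress limit means in practice, the following sketch converts a limit in Mbps to megabytes per second and estimates the minimum time needed to send a payload. The function names and the 10 GB payload are hypothetical, and the calculation ignores protocol overhead, so real transfers take longer.

```python
def mbps_to_megabytes_per_second(limit_mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return limit_mbps / 8.0


def min_transfer_seconds(payload_megabytes: float, limit_mbps: float) -> float:
    """Lower bound on the time to send a payload at the egress limit."""
    return payload_megabytes / mbps_to_megabytes_per_second(limit_mbps)


if __name__ == "__main__":
    limit = 1000.0  # Mbps, the example limit used in the text
    print(mbps_to_megabytes_per_second(limit))      # 125.0
    # Assumption: 10 GB is taken as 10,000 MB for simplicity.
    print(min_transfer_seconds(10_000.0, limit))    # 80.0
```

The result is a lower bound only: it assumes the VM sustains the full limit for the whole transfer.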

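Ingress traffic isn't metered or limited directly. However, other factors, such as CPU and storage limits, can affect a VM's ability to process incoming data.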

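Accelerated networking is a feature designed to improve network performance, including latency, throughput, and CPU utilization. Although accelerated networking can improve a VM's throughput, it can do so only up to the VM's allocated bandwidth. To learn more about accelerated networking, see Accelerated networking for Windows or Linux virtual machines.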

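An Azure VM must have at least one network interface attached and can have several. The bandwidth limit applies to the sum of all outbound traffic across all network interfaces attached to the VM. In other words, bandwidth is allocated per VM, regardless of how many network interfaces are attached. To learn how many network interfaces each Azure VM size supports, see Azure Windows and Linux VM sizes.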

Expected network throughput

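For the expected outbound throughput and the number of network interfaces supported by each VM size, see Azure Windows and Linux VM sizes. Select a type, such as General purpose, then select a size and series on the resulting page, such as Dv2-series. Each series has a table whose last column, titled Max NICs/Expected network performance (Mbps), lists the networking specifications.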

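The throughput limit applies to the virtual machine. Throughput isn't affected by the following factors:

Number of network interfaces: The bandwidth limit is cumulative across all outbound traffic from the VM.

Accelerated networking: Although this feature can help traffic reach the published limit, it doesn't change the limit.

Traffic destination: All destinations count toward the outbound limit.

Protocol: All outbound traffic over all protocols counts toward the limit.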
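The per-VM accounting described here can be sketched as a simple sum. The example below is a minimal illustration, assuming hypothetical per-NIC egress samples; the `NicSample` type and the sample values are invented for the example and don't correspond to any Azure API.

```python
from dataclasses import dataclass


@dataclass
class NicSample:
    """Outbound rate observed on one network interface (hypothetical sample)."""
    name: str
    egress_mbps: float


def total_egress_mbps(samples: list[NicSample]) -> float:
    """Sum outbound traffic across every NIC attached to the VM."""
    return sum(s.egress_mbps for s in samples)


def egress_headroom_mbps(samples: list[NicSample], vm_limit_mbps: float) -> float:
    """Remaining egress budget; zero or less means the VM is at its limit."""
    return vm_limit_mbps - total_egress_mbps(samples)


if __name__ == "__main__":
    samples = [NicSample("nic0", 600.0), NicSample("nic1", 300.0)]
    print(total_egress_mbps(samples))              # 900.0
    print(egress_headroom_mbps(samples, 1000.0))   # 100.0
```

Adding a second NIC changes how the traffic is split, not the total that the VM is allowed to send.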

Network flow limits

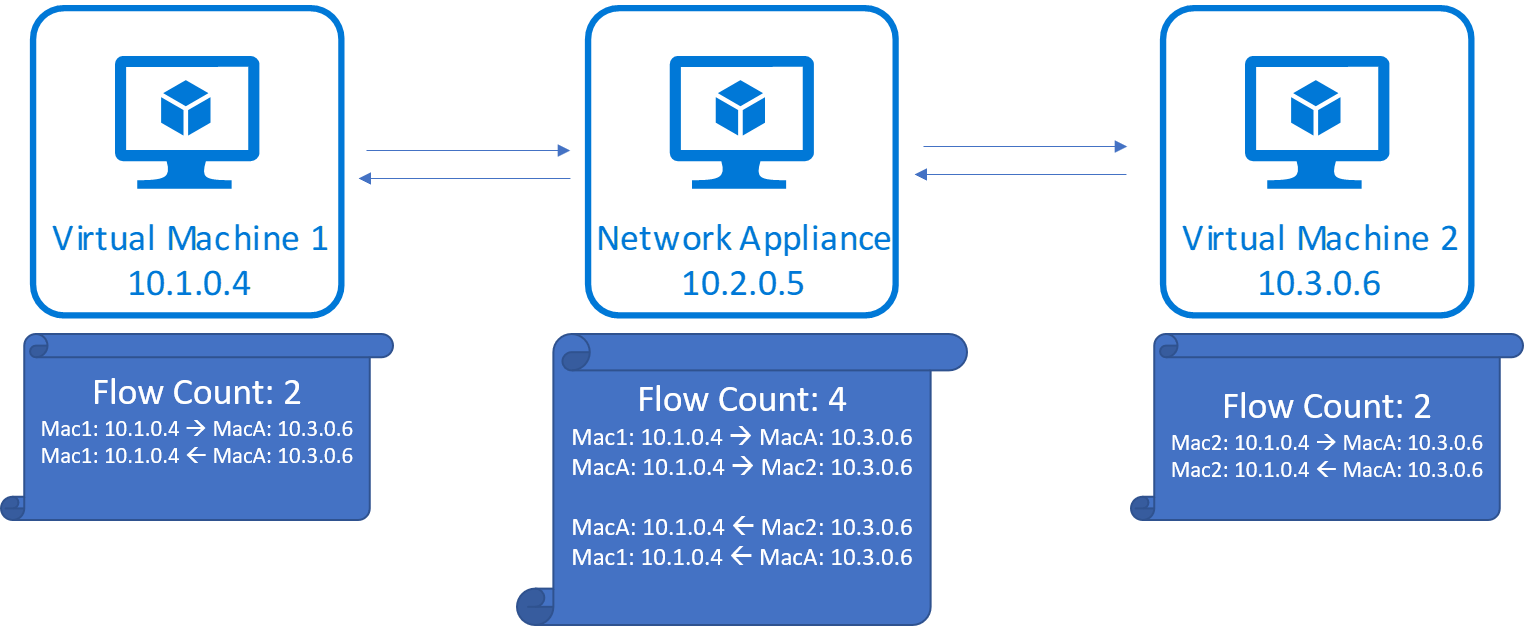

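The number of network connections present on a VM at any given time can affect its network performance. The Azure networking stack uses a data structure called a flow to track each direction of a TCP/UDP connection. A typical TCP/UDP connection creates two flows: one for inbound traffic and one for outbound traffic. Each flow is identified by a five-tuple: protocol, local IP address, remote IP address, local port, and remote port.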
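To make the flow model concrete, the following sketch represents the five-tuple as a small Python type and counts two flows per distinct connection. It's an illustration of the description above, not the Azure networking stack's actual data structure; `FlowKey`, `flows_for_connections`, and the sample addresses are hypothetical.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    """Five-tuple named in the text: protocol, local/remote IP, local/remote port."""
    protocol: str
    local_ip: str
    remote_ip: str
    local_port: int
    remote_port: int


def flows_for_connections(connections: list[FlowKey]) -> int:
    """Count flows, assuming two flows (inbound + outbound) per distinct connection."""
    return 2 * len(set(connections))


if __name__ == "__main__":
    conns = [
        FlowKey("TCP", "10.0.0.4", "10.0.0.5", 50000, 443),
        FlowKey("UDP", "10.0.0.4", "10.0.0.10", 50001, 53),
        FlowKey("TCP", "10.0.0.4", "10.0.0.5", 50000, 443),  # duplicate of the first
    ]
    print(flows_for_connections(conns))  # 4
```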

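Data transfer between endpoints requires creating several flows beyond the ones that carry the data itself. Examples include flows created for DNS resolution and for load balancer health probes. Network virtual appliances (NVAs) such as gateways, proxies, and firewalls see flows created both for connections that terminate at the appliance and for connections that the appliance originates.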

Flow limits and active connection recommendations

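Today, the Azure networking stack supports at least 500K total connections (500K inbound + 500K outbound flows) for all VM sizes. For the smallest sizes (2-7 vCPUs), we recommend that your workload use no more than 100K total connections. The recommended connection limit varies with the VM's vCPU count, as listed in the following tables.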

Azure Boost VM sizes that use MANA

| VM size (#vCPUs) | Recommended connection limit |
|---|---|
| 2-7 | 100,000 |
| 8-15 | 500,000 |
| 16-31 | 700,000 |
| 32-63 | 800,000 |
| 64+ | 2,000,000 |

Other VM sizes

| VM size (#vCPUs) | Recommended connection limit |
|---|---|
| 2-7 | 100,000 |
| 8-15 | 500,000 |
| 16-31 | 700,000 |
| 32-63 | 800,000 |
| 64+ | 1,000,000 |
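For capacity-planning scripts, the two tables above can be encoded as a lookup. The sketch below is one possible encoding; the function name `recommended_connection_limit` and the `uses_mana` flag are hypothetical, and the values are copied from the tables rather than retrieved from any Azure API.

```python
import bisect

# (minimum vCPU count of the band, recommended connection limit), from the tables above.
_MANA_LIMITS = [(2, 100_000), (8, 500_000), (16, 700_000), (32, 800_000), (64, 2_000_000)]
_OTHER_LIMITS = [(2, 100_000), (8, 500_000), (16, 700_000), (32, 800_000), (64, 1_000_000)]


def recommended_connection_limit(vcpus: int, uses_mana: bool = False) -> int:
    """Return the recommended connection limit for a VM with the given vCPU count."""
    if vcpus < 2:
        raise ValueError("the tables start at 2 vCPUs")
    rows = _MANA_LIMITS if uses_mana else _OTHER_LIMITS
    thresholds = [low for low, _ in rows]
    # Find the last band whose lower bound is <= vcpus.
    index = bisect.bisect_right(thresholds, vcpus) - 1
    return rows[index][1]


if __name__ == "__main__":
    print(recommended_connection_limit(4))                   # 100000
    print(recommended_connection_limit(16))                  # 700000
    print(recommended_connection_limit(96))                  # 1000000
    print(recommended_connection_limit(96, uses_mana=True))  # 2000000
```

The two tables differ only in the 64+ vCPU band, so the flag matters only for the largest sizes.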

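Network optimized VM sizes offer improved connection performance, with limits that differ from those above. For information about connection limits on network optimized VMs, see the following article.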

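When the recommended limits are exceeded, connections might be dropped or performance might degrade. Connection establishment and termination rates can also affect network performance, because establishing and terminating connections shares CPU with packet-processing routines. We recommend that you benchmark workloads against expected traffic patterns and scale out as needed to meet your performance requirements. Microsoft has published a tool that makes this easier; see the NCPS tool for details.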

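Note that NVAs that forward traffic (such as gateways, proxies, firewalls, and other applications) should use half of the recommended connection limit compared with typical client-server communication, because forwarded traffic consumes twice as many flows.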
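The halving rule follows from simple arithmetic, sketched below. The function names are hypothetical, and the 500,000 figure is just one of the recommended limits used as an example.

```python
FLOWS_PER_CONNECTION = 2  # one inbound and one outbound flow, per the text


def flows_used(connections: int, forwarding: bool = False) -> int:
    """Flows consumed; forwarded traffic is taken to use twice as many flows."""
    multiplier = 2 if forwarding else 1
    return connections * FLOWS_PER_CONNECTION * multiplier


def nva_connection_budget(recommended_limit: int) -> int:
    """Halve the recommended connection limit for a VM that forwards traffic."""
    return recommended_limit // 2


if __name__ == "__main__":
    limit = 500_000  # example recommended limit
    budget = nva_connection_budget(limit)
    print(budget)                               # 250000
    # Half as many forwarded connections consume the same number of flows.
    print(flows_used(budget, forwarding=True))  # 1000000
    print(flows_used(limit))                    # 1000000
```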

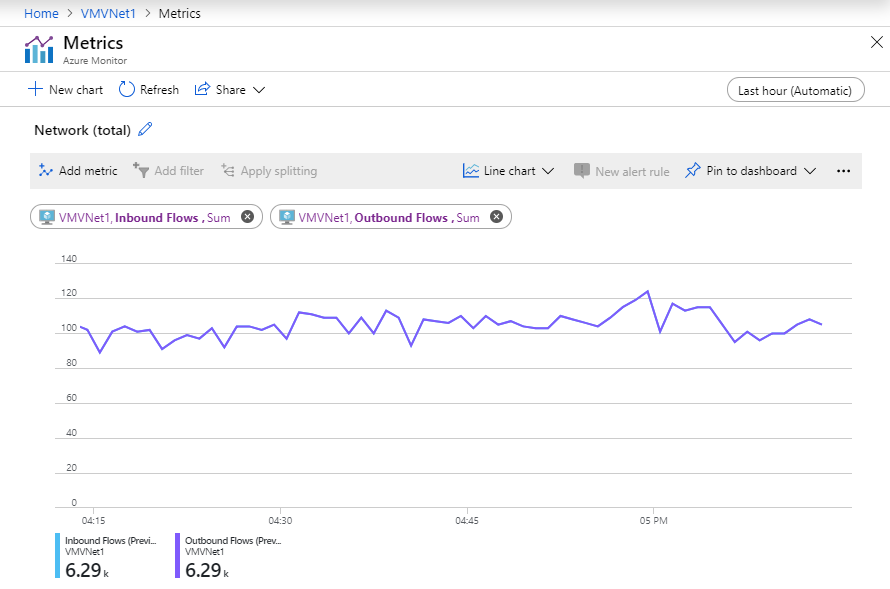

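Metrics in Azure Monitor let you track the number of network flows and the flow creation rate on a VM or virtual machine scale set instance. For various reasons, the number of flows tracked by the VM's guest OS can differ from the number tracked by the Azure networking stack. To make sure that network connections aren't dropped, use the inbound and outbound flow metrics.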

Next steps

Test network throughput for a virtual machine.