Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Applies to:

SQL Server on Azure VM

A Windows Server Failover Cluster is used for high availability and disaster recovery (HADR) with SQL Server on Azure Virtual Machines (VMs).

This article provides cluster configuration best practices for both failover cluster instances (FCIs) and availability groups when you use them with SQL Server on Azure VMs.

To learn more, see the other articles in this series: Checklist, VM size, Storage, Security, HADR configuration, Collect baseline.

Checklist

Review the following checklist for a brief overview of the HADR best practices that the rest of the article covers in greater detail.

High availability and disaster recovery (HADR) features, such as the Always On availability group and the failover cluster instance rely on underlying Windows Server Failover Cluster technology. Review the best practices for modifying your HADR settings to better support the cloud environment.

For your Windows cluster, consider these best practices:

- Deploy your SQL Server VMs to multiple subnets whenever possible to avoid the dependency on an Azure Load Balancer or a distributed network name (DNN) to route traffic to your HADR solution.

- Change the cluster to less aggressive parameters to avoid unexpected outages from transient network failures or Azure platform maintenance. To learn more, see heartbeat and threshold settings. For Windows Server 2012 and later, use the following recommended values:

- SameSubnetDelay: 1 second

- SameSubnetThreshold: 40 heartbeats

- CrossSubnetDelay: 1 second

- CrossSubnetThreshold: 40 heartbeats

- Place your VMs in an availability set or different availability zones. To learn more, see VM availability settings.

- Use a single NIC per cluster node.

- Configure cluster quorum voting to use 3 or more odd number of votes. Don't assign votes to DR regions.

- Carefully monitor resource limits to avoid unexpected restarts or failovers due to resource constraints.

- Ensure your OS, drivers, and SQL Server are at the latest builds.

- Optimize performance for SQL Server on Azure VMs. Review the other sections in this article to learn more.

- Reduce or spread out workload to avoid resource limits.

- Move to a VM or disk that has higher limits to avoid constraints.

For your SQL Server availability group or failover cluster instance, consider these best practices:

- If you're experiencing frequent unexpected failures, follow the performance best practices outlined in the rest of this article.

- If optimizing SQL Server VM performance doesn't resolve your unexpected failovers, consider relaxing the monitoring for the availability group or failover cluster instance. However, doing so may not address the underlying source of the issue and could mask symptoms by reducing the likelihood of failure. You may still need to investigate and address the underlying root cause. For Windows Server 2012 or higher, use the following recommended values:

- Lease timeout: Use this equation to calculate the maximum lease time-out value:

Lease timeout < (2 * SameSubnetThreshold * SameSubnetDelay).

Start with 40 seconds. If you're using the relaxedSameSubnetThresholdandSameSubnetDelayvalues recommended previously, don't exceed 80 seconds for the lease timeout value. - Max failures in a specified period: Set this value to 6.

- Lease timeout: Use this equation to calculate the maximum lease time-out value:

- When using the virtual network name (VNN) and an Azure Load Balancer to connect to your HADR solution, specify

MultiSubnetFailover = truein the connection string, even if your cluster only spans one subnet.- If the client doesn't support

MultiSubnetFailover = Trueyou may need to setRegisterAllProvidersIP = 0andHostRecordTTL = 300to cache client credentials for shorter durations. However, doing so may cause additional queries to the DNS server.

- If the client doesn't support

- To connect to your HADR solution using the distributed network name (DNN), consider the following:

- You must use a client driver that supports

MultiSubnetFailover = True, and this parameter must be in the connection string. - Use a unique DNN port in the connection string when connecting to the DNN listener for an availability group.

- You must use a client driver that supports

- Use a database mirroring connection string for a basic availability group to bypass the need for a load balancer or DNN.

- Validate the sector size of your VHDs before deploying your high availability solution to avoid having misaligned I/Os. See KB3009974 to learn more.

- If the SQL Server database engine, Always On availability group listener, or failover cluster instance health probe are configured to use a port between 49,152 and 65,536 (the default dynamic port range for TCP/IP), add an exclusion for each port. Doing so prevents other systems from being dynamically assigned the same port. The following example creates an exclusion for port 59999:

netsh int ipv4 add excludedportrange tcp startport=59999 numberofports=1 store=persistent

To compare the HADR checklist with the other best practices, see the comprehensive Performance best practices checklist.

VM availability settings

To reduce the effect of downtime, consider the following VM best availability settings:

- Use proximity placement groups together with accelerated networking for lowest latency.

- Place virtual machine cluster nodes in separate availability zones to protect from datacenter-level failures or in a single availability set for lower-latency redundancy within the same datacenter.

- Use premium-managed OS and data disks for VMs in an availability set.

- Configure each application tier into separate availability sets.

Quorum

Although a two-node cluster functions without a quorum resource, customers are strictly required to use a quorum resource to have production support. Cluster validation doesn't pass any cluster without a quorum resource.

Technically, a three-node cluster can survive a single node loss (down to two nodes) without a quorum resource, but after the cluster is down to two nodes, if there's another node loss or communication failure, then there's a risk that the clustered resources will go offline to prevent a split-brain scenario. Configuring a quorum resource allows the cluster to continue online with only one node online.

The disk witness is the most resilient quorum option, but to use a disk witness on a SQL Server on Azure VM, you must use an Azure Shared Disk, which imposes some limitations to the high availability solution. As such, use a disk witness when you're configuring your failover cluster instance with Azure Shared Disks, otherwise use a cloud witness whenever possible.

The following table lists the quorum options available for SQL Server on Azure VMs:

| Cloud witness | Disk witness | File share witness | |

|---|---|---|---|

| Supported OS | Windows Server 2016+ | All | All |

- The cloud witness is ideal for deployments in multiple sites, multiple zones, and multiple regions. Use a cloud witness whenever possible, unless you're using a shared-storage cluster solution.

- The disk witness is the most resilient quorum option and is preferred for any cluster that uses Azure Shared Disks (or any shared-disk solution like shared SCSI, iSCSI, or fiber channel SAN). A Clustered Shared Volume can't be used as a disk witness.

- The fileshare witness is suitable for when the disk witness and cloud witness are unavailable options.

To get started, see Configure cluster quorum.

Quorum Voting

It's possible to change the quorum vote of a node participating in a Windows Server Failover Cluster.

When modifying the node vote settings, follow these guidelines:

| Quorum voting guidelines |

|---|

| Start with each node having no vote by default. Each node should only have a vote with explicit justification. |

| Enable votes for cluster nodes that host the primary replica of an availability group, or the preferred owners of a failover cluster instance. |

| Enable votes for automatic failover owners. Each node that might host a primary replica or FCI as a result of an automatic failover should have a vote. |

| If an availability group has more than one secondary replica, only enable votes for the replicas that have automatic failover. |

| Disable votes for nodes that are in secondary disaster recovery sites. Nodes in secondary sites shouldn't contribute to the decision of taking a cluster offline if there's nothing wrong with the primary site. |

| Have an odd number of votes, with three quorum votes minimum. Add a quorum witness for an additional vote if necessary in a two-node cluster. |

| Reassess vote assignments post-failover. You don't want to fail over into a cluster configuration that doesn't support a healthy quorum. |

Connectivity

To match the on-premises experience for connecting to your availability group listener or failover cluster instance, deploy your SQL Server VMs to multiple subnets within the same virtual network. Having multiple subnets negates the need for the extra dependency on an Azure Load Balancer, or a distributed network name to route your traffic to your listener.

To simplify your HADR solution, deploy your SQL Server VMs to multiple subnets whenever possible. To learn more, see Multi-subnet AG, and Multi-subnet FCI.

If your SQL Server VMs are in a single subnet, it's possible to configure either a virtual network name (VNN) and an Azure Load Balancer, or a distributed network name (DNN) for both failover cluster instances and availability group listeners.

The distributed network name is the recommended connectivity option, when available:

- The end-to-end solution is more robust since you no longer have to maintain the load balancer resource.

- Eliminating the load balancer probes minimizes failover duration.

- The DNN simplifies provisioning and management of the failover cluster instance or availability group listener with SQL Server on Azure VMs.

Consider the following limitations:

- The client driver must support the

MultiSubnetFailover=Trueparameter. - The DNN feature is available starting with SQL Server 2016 SP3, SQL Server 2017 CU25, and SQL Server 2019 CU8 on Windows Server 2016 and later.

To learn more, see the Windows Server Failover Cluster overview.

To configure connectivity, see the following articles:

- Availability group: Configure DNN, Configure VNN

- Failover cluster instance: Configure DNN, Configure VNN.

Most SQL Server features work transparently with FCI and availability groups when using the DNN, but there are certain features that might require special consideration. See FCI and DNN interoperability and AG and DNN interoperability to learn more.

Tip

Set the MultiSubnetFailover parameter = true in the connection string even for HADR solutions that span a single subnet to support future spanning of subnets without needing to update connection strings.

Heartbeat and threshold

Change the cluster heartbeat and threshold settings to relaxed settings. The default heartbeat and threshold cluster settings are designed for highly tuned on-premises networks and don't consider the possibility of increased latency in a cloud environment. The heartbeat network is maintained with UDP 3343, which is traditionally far less reliable than TCP and more prone to incomplete conversations.

Therefore, when running cluster nodes for SQL Server on Azure VM high availability solutions, change the cluster settings to a more relaxed monitoring state to avoid transient failures due to the increased possibility of network latency or failure, Azure maintenance, or hitting resource bottlenecks.

The delay and threshold settings have a cumulative effect to total health detection. For example, setting CrossSubnetDelay to send a heartbeat every 2 seconds and setting the CrossSubnetThreshold to 10 missed heartbeats before taking recovery means the cluster can have a total network tolerance of 20 seconds before recovery action is taken. In general, continuing to send frequent heartbeats but having greater thresholds is preferred.

To ensure recovery during legitimate outages while providing greater tolerance for transient issues, relax your delay and threshold settings to the recommended values detailed in the following table:

| Setting | Windows Server 2012 or later | Windows Server 2008 R2 |

|---|---|---|

| SameSubnetDelay | 1 second | 2 second |

| SameSubnetThreshold | 40 heartbeats | 10 heartbeats (max) |

| CrossSubnetDelay | 1 second | 2 second |

| CrossSubnetThreshold | 40 heartbeats | 20 heartbeats (max) |

Use PowerShell to change your cluster parameters:

(get-cluster).SameSubnetThreshold = 40

(get-cluster).CrossSubnetThreshold = 40

Use PowerShell to verify your changes:

get-cluster | fl *subnet*

Consider the following:

- This change is immediate, restarting the cluster or any resources isn't required.

- Same subnet values shouldn't be greater than cross subnet values.

- SameSubnetThreshold <= CrossSubnetThreshold

- SameSubnetDelay <= CrossSubnetDelay

Choose relaxed values based on how much down time is tolerable and how long before a corrective action should occur depending on your application, business needs, and your environment. If you're not able to exceed the default Windows Server 2019 values, then at least try to match them, if possible:

For reference, the following table details the default values:

| Setting | Windows Server 2019 | Windows Server 2016 | Windows Server 2008 - 2012 R2 |

|---|---|---|---|

| SameSubnetDelay | 1 second | 1 second | 1 second |

| SameSubnetThreshold | 20 heartbeats | 10 heartbeats | 5 heartbeats |

| CrossSubnetDelay | 1 second | 1 second | 1 second |

| CrossSubnetThreshold | 20 heartbeats | 10 heartbeats | 5 heartbeats |

To learn more, see Tuning Failover Cluster Network Thresholds.

Relaxed monitoring

If tuning your cluster heartbeat and threshold settings as recommended is insufficient tolerance and you're still seeing failovers due to transient issues rather than true outages, you can configure your AG or FCI monitoring to be more relaxed. In some scenarios, it might be beneficial to temporarily relax the monitoring for a period of time given the level of activity. For example, you might want to relax the monitoring when you're doing IO intensive workloads such as database backups, index maintenance, DBCC CHECKDB, etc. Once the activity is complete, set your monitoring to less relaxed values.

Warning

Changing these settings might mask an underlying problem, and should be used as a temporary solution to reduce, rather than eliminate, the likelihood of failure. Underlying issues should still be investigated and addressed.

Start by increasing the following parameters from their default values for relaxed monitoring, and adjust as necessary:

| Parameter | Default value | Relaxed Value | Description |

|---|---|---|---|

| Healthcheck timeout | 30000 | 60000 | Determines health of the primary replica or node. The cluster resource DLL sp_server_diagnostics returns results at an interval that equals 1/3 of the health-check timeout threshold. If sp_server_diagnostics is slow or isn't returning information, the resource DLL waits for the full interval of the health-check timeout threshold before determining that the resource is unresponsive, and initiating an automatic failover, if configured to do so. |

| Failure-Condition Level | 3 | 2 | Conditions that trigger an automatic failover. There are five failure-condition levels, which range from the least restrictive (level one) to the most restrictive (level five) |

Use Transact-SQL (T-SQL) to modify the health check and failure conditions for both AGs and FCIs.

For availability groups:

ALTER AVAILABILITY GROUP AG1 SET (HEALTH_CHECK_TIMEOUT =60000);

ALTER AVAILABILITY GROUP AG1 SET (FAILURE_CONDITION_LEVEL = 2);

For failover cluster instances:

ALTER SERVER CONFIGURATION SET FAILOVER CLUSTER PROPERTY HealthCheckTimeout = 60000;

ALTER SERVER CONFIGURATION SET FAILOVER CLUSTER PROPERTY FailureConditionLevel = 2;

Specific to availability groups, start with the following recommended parameters, and adjust as necessary:

| Parameter | Default value | Relaxed Value | Description |

|---|---|---|---|

| Lease timeout | 20000 | 40000 | Prevents split-brain. |

| Session timeout | 10000 | 20000 | Checks communication issues between replicas. The session-timeout period is a replica property that controls how long (in seconds) that an availability replica waits for a ping response from a connected replica before considering the connection to have failed. By default, a replica waits 10 seconds for a ping response. This replica property applies to only the connection between a given secondary replica and the primary replica of the availability group. |

| Max failures in specified period | 2 | 6 | Used to avoid indefinite movement of a clustered resource within multiple node failures. Too low of a value can lead to the availability group being in a failed state. Increase the value to prevent short disruptions from performance issues as too low a value can lead to the AG being in a failed state. |

Before making any changes, consider the following:

- Don't lower any timeout values below their default values.

- Use this equation to calculate the maximum lease time-out value:

Lease timeout < (2 * SameSubnetThreshold * SameSubnetDelay).

Start with 40 seconds. If you're using the relaxedSameSubnetThresholdandSameSubnetDelayvalues recommended previously, don't exceed 80 seconds for the lease timeout value. - For synchronous-commit replicas, changing session-timeout to a high value can increase HADR_sync_commit waits.

Lease timeout

Use the Failover Cluster Manager to modify the lease timeout settings for your availability group. See the SQL Server availability group lease health check documentation for detailed steps.

Session timeout

Use Transact-SQL (T-SQL) to modify the session timeout for an availability group:

ALTER AVAILABILITY GROUP AG1

MODIFY REPLICA ON 'INSTANCE01' WITH (SESSION_TIMEOUT = 20);

Max failures in specified period

Use the Failover Cluster Manager to modify the Max failures in specified period value:

- Select Roles in the navigation pane.

- Under Roles, right-click the clustered resource and choose Properties.

- Select the Failover tab, and increase the Max failures in specified period value as desired.

Resource limits

VM or disk limits could result in a resource bottleneck that impacts the health of the cluster, and impedes the health check. If you're experiencing issues with resource limits, consider the following:

- Ensure your OS, drivers, and SQL Server are at the latest builds.

- Optimize SQL Server on Azure VM environment as described in the performance guidelines for SQL Server on Azure Virtual Machines

- Reduce or spread out the workload to reduce utilization without exceeding resource limits

- Tune the SQL Server workload if there's any opportunity, such as

- Add/optimize indexes

- Update statistics if needed and if possible, with Full scan

- Use features like resource governor (starting with SQL Server 2014, enterprise only) to limit resource utilization during specific workloads, such as backups or index maintenance.

- Move to a VM or disk that has higher limits to meet or exceed the demands of your workload.

Networking

Deploy your SQL Server VMs to multiple subnets whenever possible to avoid the dependency on an Azure Load Balancer or a distributed network name (DNN) to route traffic to your HADR solution.

Use a single NIC per server (cluster node). Azure networking has physical redundancy, which makes additional NICs unnecessary on an Azure virtual machine guest cluster. The cluster validation report warns you that the nodes are reachable only on a single network. You can ignore this warning on Azure virtual machine guest failover clusters.

Bandwidth limits for a particular VM are shared across NICs and adding an additional NIC doesn't improve availability group performance for SQL Server on Azure VMs. As such, there's no need to add a second NIC.

The non-RFC-compliant DHCP service in Azure can cause the creation of certain failover cluster configurations to fail. This failure happens because the cluster network name is assigned a duplicate IP address, such as the same IP address as one of the cluster nodes. This is an issue when you use availability groups, which depend on the Windows failover cluster feature.

Consider the scenario when a two-node cluster is created and brought online:

- The cluster comes online, and then NODE1 requests a dynamically assigned IP address for the cluster network name.

- The DHCP service doesn't give any IP address other than NODE1's own IP address, because the DHCP service recognizes that the request comes from NODE1 itself.

- Windows detects that a duplicate address is assigned both to NODE1 and to the failover cluster's network name, and the default cluster group fails to come online.

- The default cluster group moves to NODE2. NODE2 treats NODE1's IP address as the cluster IP address and brings the default cluster group online.

- When NODE2 tries to establish connectivity with NODE1, packets directed at NODE1 never leave NODE2 because it resolves NODE1's IP address to itself. NODE2 can't establish connectivity with NODE1, and then loses quorum and shuts down the cluster.

- NODE1 can send packets to NODE2, but NODE2 can't reply. NODE1 loses quorum and shuts down the cluster.

You can avoid this scenario by assigning an unused static IP address to the cluster network name in order to bring the cluster network name online and add the IP address to Azure Load Balancer.

Configure probe port

When using an Azure Load Balancer to support a virtual network name (VNN) resource, you must configure the cluster to reply to the health probe requests for your Always On availability group listener or your failover cluster instance. If the health probe fails to get a response from a backend instance, then no new connections are sent to that backend instance until the health probe succeeds again.

Configure port exclusion

If you're using a virtual network name (VNN) resource with an Azure Load Balancer, when using a health probe port between 49,152 and 65,536 (the default dynamic port range for TCP/IP), you must configure port exclusion for your Always On availability group listener, or your failover cluster instance.

Configuring port exclusion prevents events such as Event ID: 1069 with status 10048, which can be caused by an internal process taking the port defined as the probe port.

The following example shows Event ID 1069 with status 10048 in the cluster logs:

Cluster resource '<IP name in AG role>' of type 'IP Address' in cluster role '<AG Name>' failed.

An Event ID: 1069 with status 10048 can be identified from cluster logs with events like:

Resource IP Address 10.0.1.0 called SetResourceStatusEx: checkpoint 5. Old state OnlinePending, new state OnlinePending, AppSpErrorCode 0, Flags 0, nores=false

IP Address <IP Address 10.0.1.0>: IpaOnlineThread: **Listening on probe port 59999** failed with status **10048**

Status 10048 happens if an application attempts to bind a socket to an IP address/port that has already been used for an existing socket.

Known issues

Review the resolutions for some commonly known issues and errors.

Resource contention (IO in particular) causes failover

Exhausting I/O or CPU capacity for the VM can cause your availability group to fail over. Identifying the contention that happens right before the failover is the most reliable way to identify what is causing automatic failover.

Follow these steps to review the Azure VM Overall IO Exhaustion event:

Navigate to your Virtual Machine in the Azure portal - not the SQL virtual machines.

Select Metrics under Monitoring to open the Metrics page.

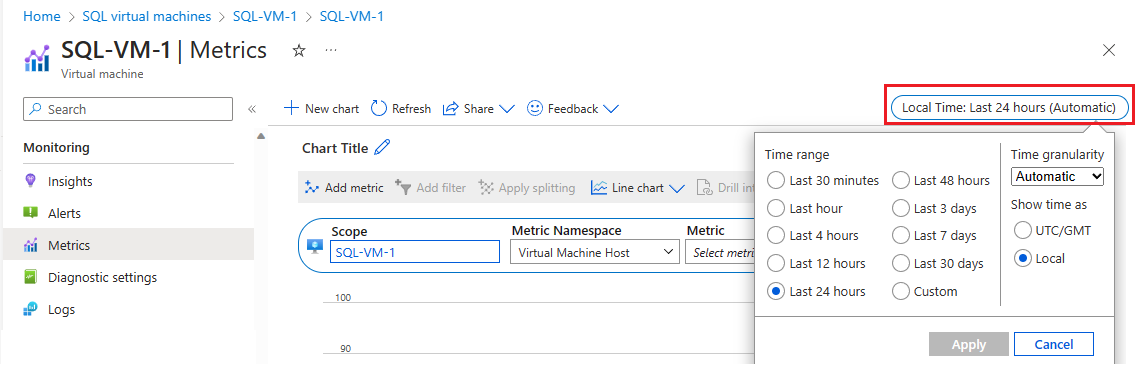

Select Local time to specify the time range you're interested in, and the time zone, either local to the VM, or UTC/GMT.

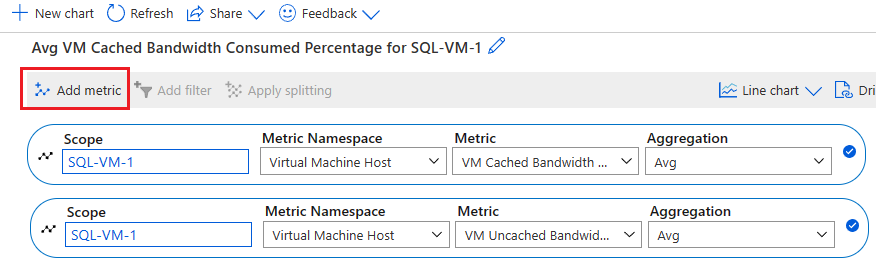

Select Add metric to add the following two metrics to see the graph:

- VM Cached Bandwidth Consumed Percentage

- VM Uncached Bandwidth Consumed Percentage

Azure VM HostEvents causes failover

It's possible that an Azure VM HostEvent causes your availability group to fail over. If you believe an Azure VM HostEvent caused a failover, you can check the Azure Monitor Activity log, and the Azure VM Resource Health overview.

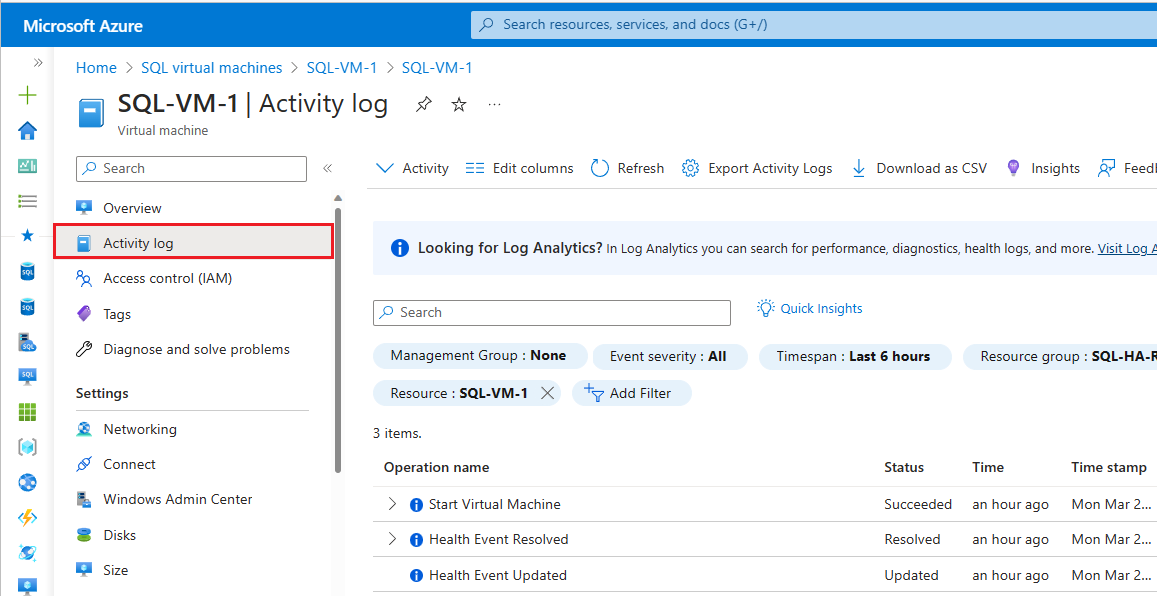

The Azure Monitor activity log is a platform log, in Azure, which provides insight into subscription-level events. The activity log includes information such as when a resource is modified, or a virtual machine is started. You can view the activity log in the Azure portal, or retrieve entries with PowerShell and the Azure CLI.

To check the Azure Monitor activity log, follow these steps:

Navigate to your Virtual Machine in Azure portal

Select Activity Log on the Virtual Machine pane

Select Timespan and then choose the time frame when your availability group failed over. Select Apply.

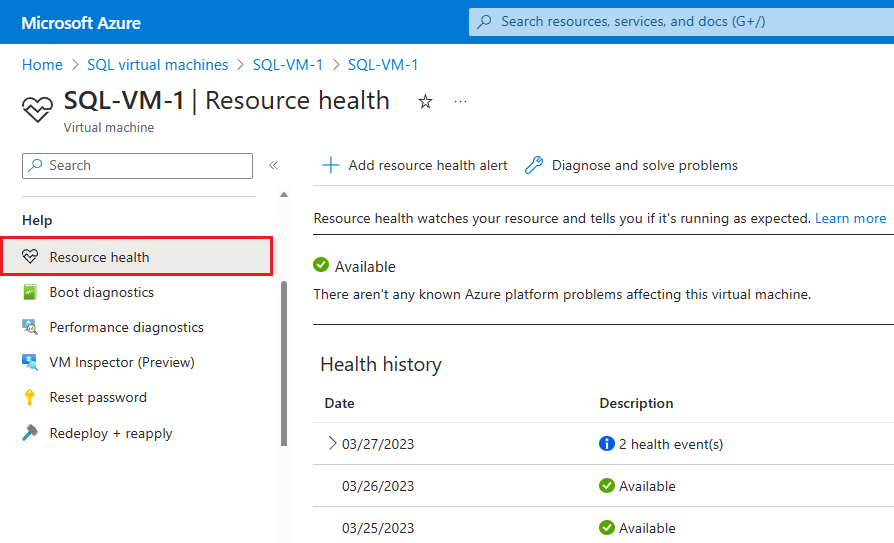

If Azure has further information about the root cause of a platform-initiated unavailability, that information might be posted on the Azure VM - Resource Health overview page up to 72 hours after the initial unavailability. This information is only available for virtual machines at this time.

- Navigate to your Virtual Machine in Azure portal

- Select Resource Health under the Health pane.

You can also configure alerts based on health events from this page.

Cluster node removed from membership

If the Windows Cluster heartbeat and threshold settings are too aggressive for your environment, you might see following message in the system event log frequently.

Error 1135

Cluster node 'Node1' was removed from the active failover cluster membership.

The Cluster service on this node may have stopped. This could also be due to the node having

lost communication with other active nodes in the failover cluster. Run the Validate a

Configuration Wizard to check your network configuration. If the condition persists, check

for hardware or software errors related to the network adapters on this node. Also check for

failures in any other network components to which the node is connected such as hubs, switches, or bridges.

For more information, review Troubleshooting cluster issue with Event ID 1135.

Lease has expired / Lease is no longer valid

If monitoring is too aggressive for your environment, you might see frequent availability group or FCI restarts, failures, or failovers. Additionally for availability groups, you might see the following messages in the SQL Server error log:

Error 19407: The lease between availability group 'PRODAG' and the Windows Server Failover Cluster has expired.

A connectivity issue occurred between the instance of SQL Server and the Windows Server Failover Cluster.

To determine whether the availability group is failing over correctly, check the corresponding availability group

resource in the Windows Server Failover Cluster

Error 19419: The renewal of the lease between availability group '%.*ls' and the Windows Server Failover Cluster

failed because the existing lease is no longer valid.

Connection timeout

If the session timeout is too aggressive for your availability group environment, you might see following messages frequently:

Error 35201: A connection timeout has occurred while attempting to establish a connection to availability

replica 'replicaname' with ID [availability_group_id]. Either a networking or firewall issue exists,

or the endpoint address provided for the replica is not the database mirroring endpoint of the host server instance.

Error 35206

A connection timeout has occurred on a previously established connection to availability

replica 'replicaname' with ID [availability_group_id]. Either a networking or a firewall issue

exists, or the availability replica has transitioned to the resolving role.

Group not failing over

If the Maximum Failures in the Specified Period value is too low and you're experiencing intermittent failures due to transient issues, your availability group could end in a failed state. Increase this value to tolerate more transient failures.

Not failing over group <Resource name>, failoverCount 3, failoverThresholdSetting <Number>, computedFailoverThreshold 2.

Event 1196 - Network name resource failed registration of associated DNS name

- Check the NIC settings for each of your cluster nodes to make sure there are no external DNS records present

- Ensure the A record for your cluster exists on your internal DNS servers. If not, create a new A Record manual in DNS Server for the Cluster Access Control object and check the Allow any authenticated users to update DNS Records with the same owner name.

- Take the Resource "Cluster Name" with IP Resource offline and fix it.

Event 157 - Disk has been surprised removed.

This can happen if the Storage Spaces property AutomaticClusteringEnabled is set to True for an AG environment. Change it to False. Also, running a Validation Report with Storage option can trigger the disk reset or surprise removed event. The storage system Throttling can also trigger the disk surprise remove event.

Event 1206 - Cluster network name resource can't be brought online.

The computer object associated with the resource couldn't be updated in the domain. Make sure you have appropriate permissions on domain

Windows Clustering errors

You might encounter issues while setting up a Windows failover cluster or its connectivity if you don't have Cluster Service Ports open for communication.

If you are on Windows Server 2019, and you don't see a Windows Cluster IP, you have configured Distributed Network Name, which is only supported on SQL Server 2019. If you have previous versions of SQL Server, you can remove and Recreate the Cluster using Network Name.

Review other Windows Failover Failover Clustering system log events and their solutions.

Next steps

To learn more, see:

- HADR settings for SQL Server on Azure VMs

- Windows Server Failover Cluster with SQL Server on Azure VMs

- Always On availability groups with SQL Server on Azure VMs

- Windows Server Failover Cluster with SQL Server on Azure VMs

- Failover cluster instances with SQL Server on Azure VMs

- Failover cluster instance overview