Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

This article details how you can prepare for an Azure regional outage by replicating your Azure Data Explorer resources, management, and ingestion in different Azure regions. An example of data ingestion with Azure Event Hubs is given. Cost optimization is also discussed for different architecture configurations. For a more in-depth look at architecture considerations and recovery solutions, see the business continuity overview.

Prepare for Azure regional outage to protect your data

Azure Data Explorer doesn't support automatic protection against the outage of an entire Azure region. This disruption can happen during a natural disaster, like an earthquake. If you require a solution for a disaster recovery situation, do the following steps to ensure business continuity. In these steps, you'll replicate your clusters, management, and data ingestion in two Azure paired regions.

- Create two or more independent clusters in two Azure paired regions.

- Replicate all management activities such as creating new tables or managing user roles on each cluster.

- Ingest data into each cluster in parallel.

Create multiple independent clusters

Create more than one Azure Data Explorer cluster in more than one region. Make sure that at least two of these clusters are created in Azure paired regions.

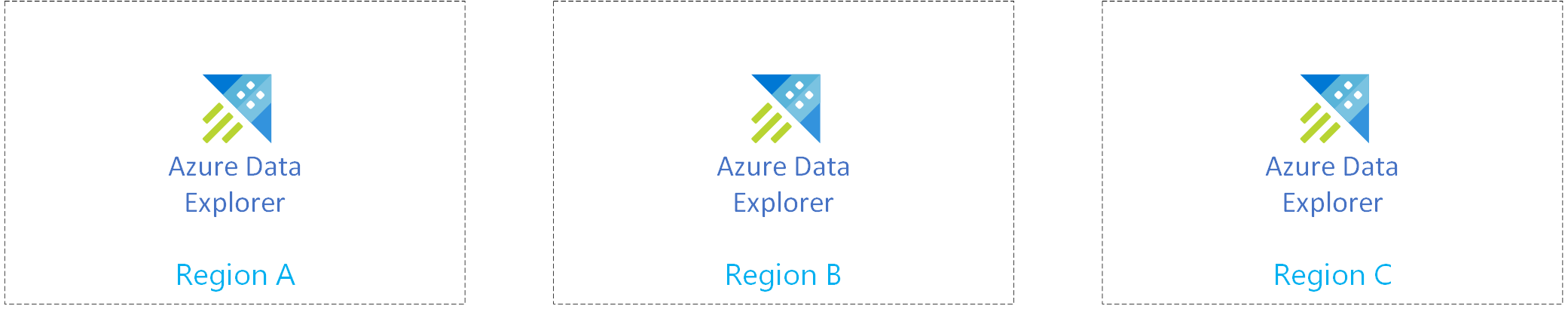

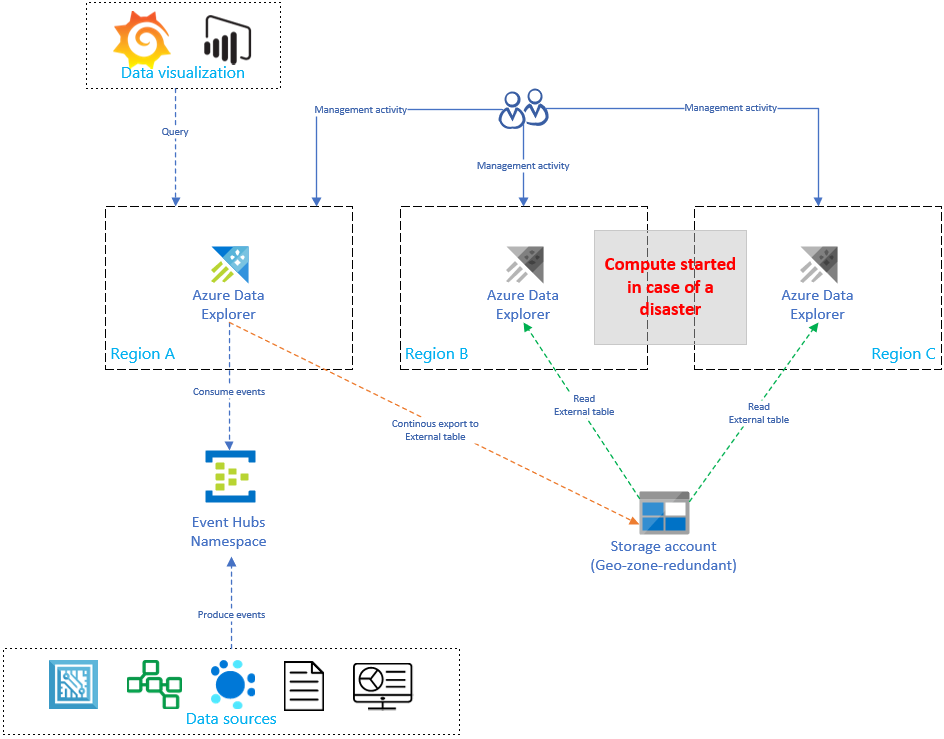

The following image shows replicas, three clusters in three different regions.

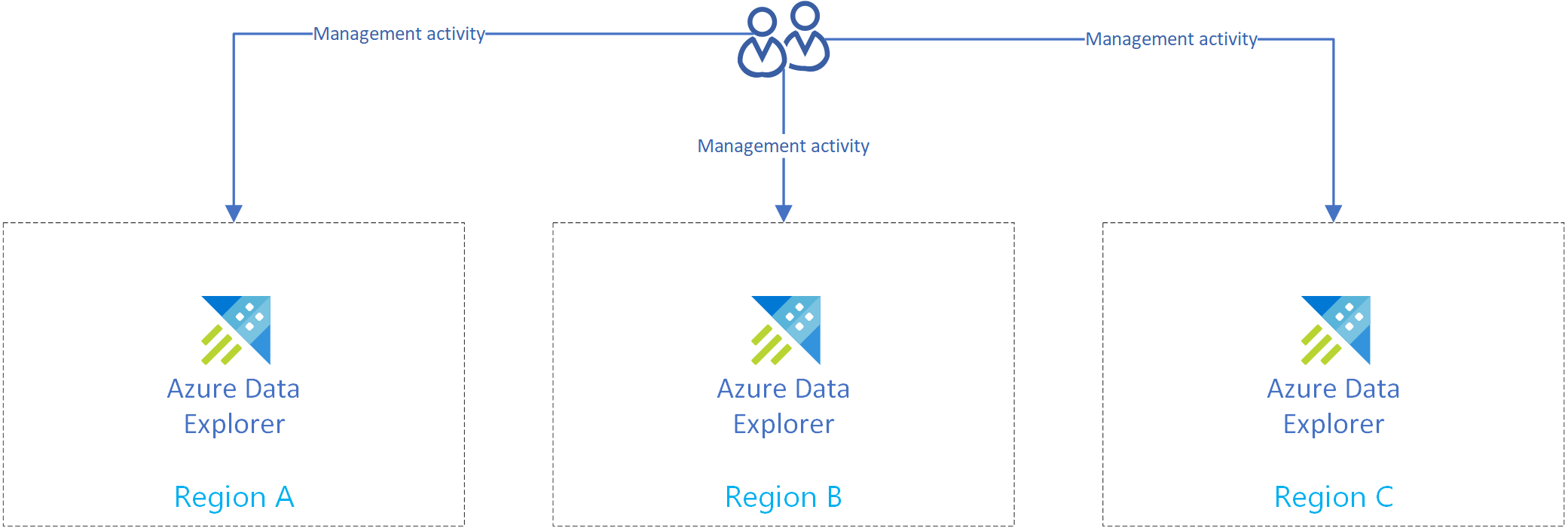

Replicate management activities

Replicate the management activities to have the same cluster configuration in every replica.

Create on each replica the same:

- Databases: You can use the Azure portal or one of our SDKs to create a new database.

- Tables

- Mappings

- Policies

Manage the authentication and authorization on each replica.

Disaster recovery solution using event hub ingestion

Once you've completed Prepare for Azure regional outage to protect your data, your data and management are distributed to multiple regions. If there's an outage in one region, Azure Data Explorer will be able to use the other replicas.

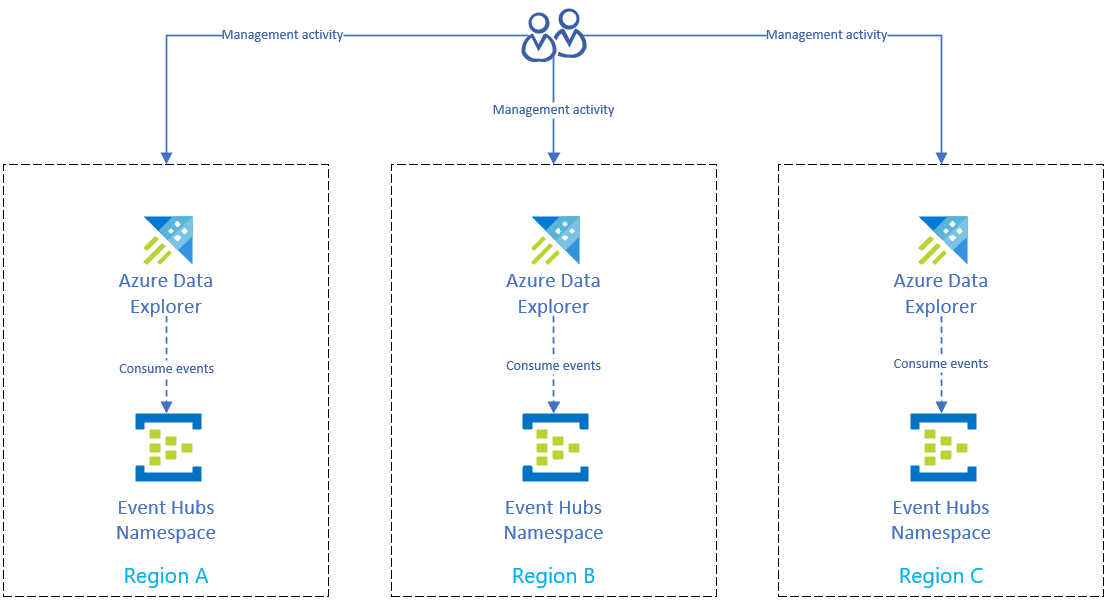

Set up ingestion using an event hub

To ingest data from Azure Event Hubs into each region's Azure Data Explorer cluster, first replicate your Azure Event Hubs setup in each region. Then configure each region's Azure Data Explorer replica to ingest data from its corresponding Event Hubs.

Note

Ingestion via Azure Event Hubs/IoT Hub/Storage is robust. If a cluster isn't available for a period of time, it will catch up at a later time and insert any pending messages or blobs. This process relies on checkpointing.

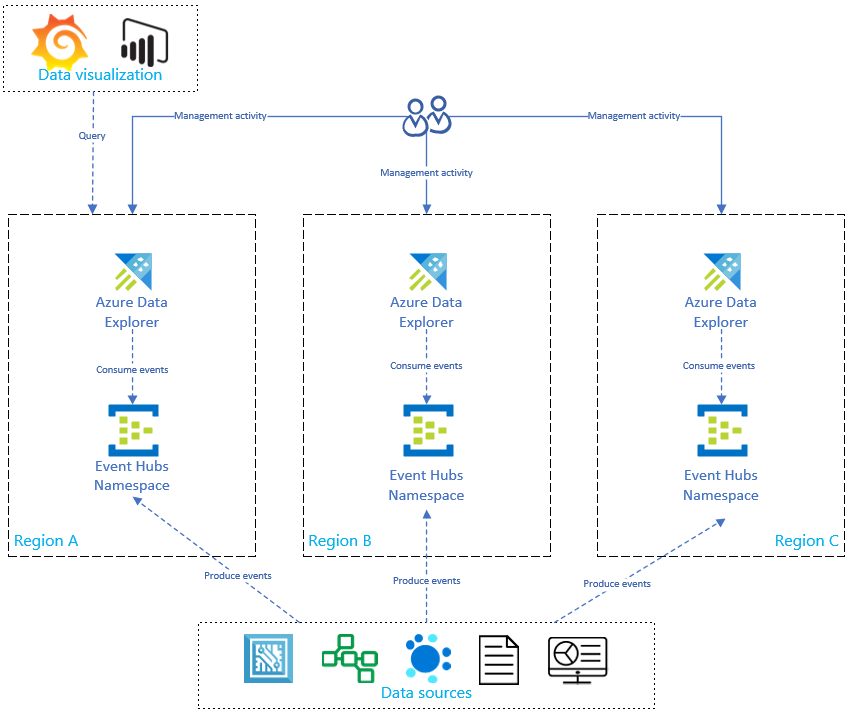

As shown in the diagram below, your data sources produce events to event hubs in all regions, and each Azure Data Explorer replica consumes the events. Data visualization components like Power BI, Grafana, or SDK powered WebApps can query one of the replicas.

Optimize costs

Now you're ready to optimize your replicas using some of the following methods:

- Create an on-demand data recovery configuration

- Start and stop the replicas

- Implement a highly available application service

- Optimize cost in an active-active configuration

Create an on-demand data recovery configuration

Replicating and updating the Azure Data Explorer setup will linearly increase the cost with the number of replicas. To optimize cost, you can implement an architectural variant to balance time, failover, and cost. In an on-demand data recovery configuration, cost optimization has been implemented by introducing passive Azure Data Explorer replicas. These replicas are only turned on if there's a disaster in the primary region (for example, region A). The replicas in Regions B and C don't need to be active 24/7, reducing the cost significantly. However, in most cases, the performance of these replicas won't be as good as the primary cluster. For more information, see On-demand data recovery configuration.

In the image below, only one cluster is ingesting data from the event hub. The primary cluster in Region A performs continuous data export of all data to a storage account. The secondary replicas have access to the data using external tables.

Start and stop the replicas

You can start and stop the secondary replicas using one of the following methods:

The Stop button in the Overview tab in the Azure portal. For more information, see Stop and restart the cluster.

Azure CLI:

az kusto cluster stop --name=<clusterName> --resource-group=<rgName> --subscription=<subscriptionId>"

Implement a highly available application service

Create the Azure App Service BCDR client

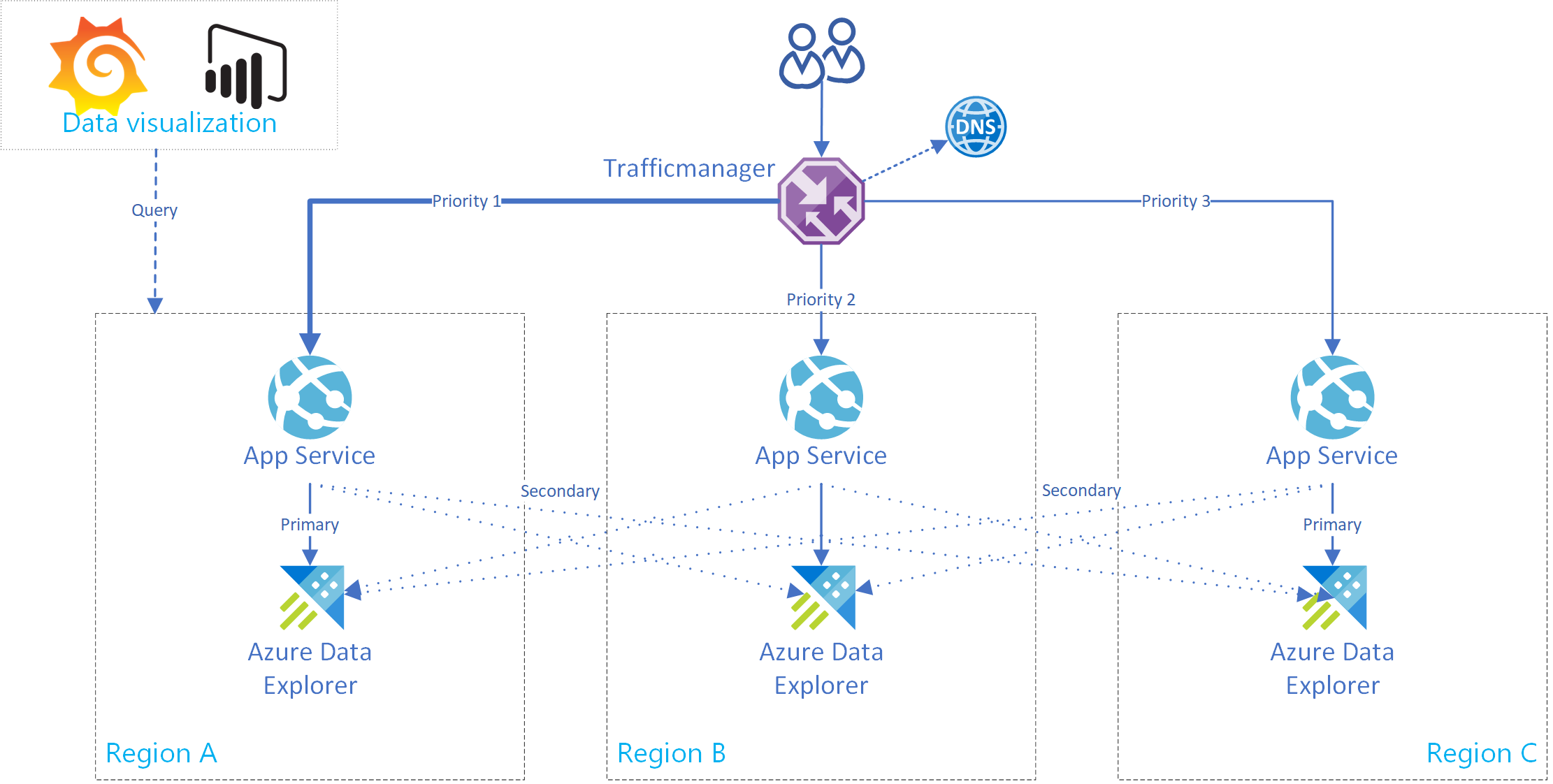

This section shows you how to create an Azure App Service that supports a connection to a single primary and multiple secondary Azure Data Explorer clusters. The following image illustrates the Azure App Service setup.

Tip

Having multiple connections between replicas in the same service gives you increased availability. This setup isn't only useful in instances of regional outages.

Use this boilerplate code for an app service. To implement a multi-cluster client, the AdxBcdrClient class has been created. Each query that is executed using this client will be sent first to the primary cluster. If there's a failure, the query will be sent to secondary replicas.

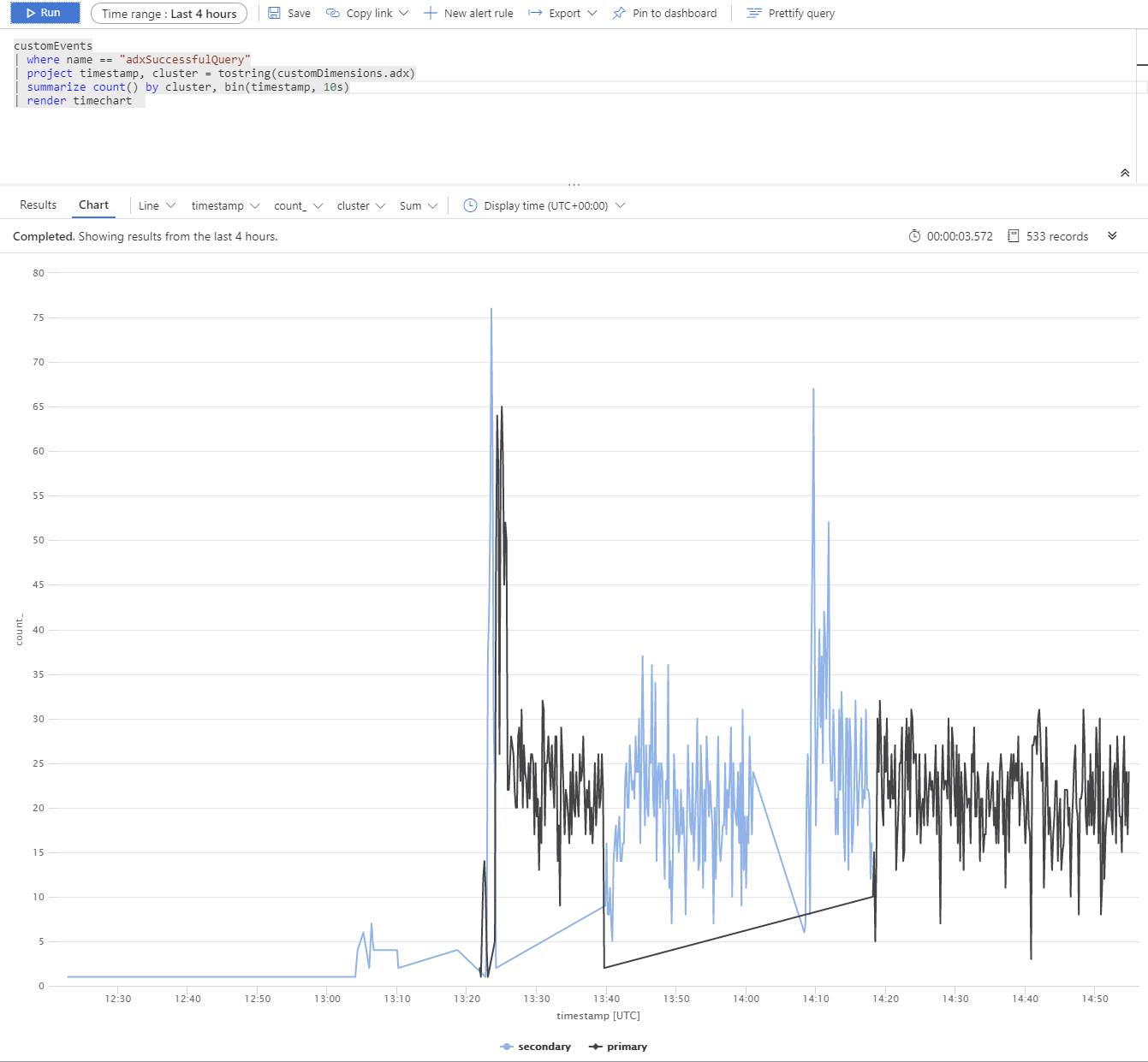

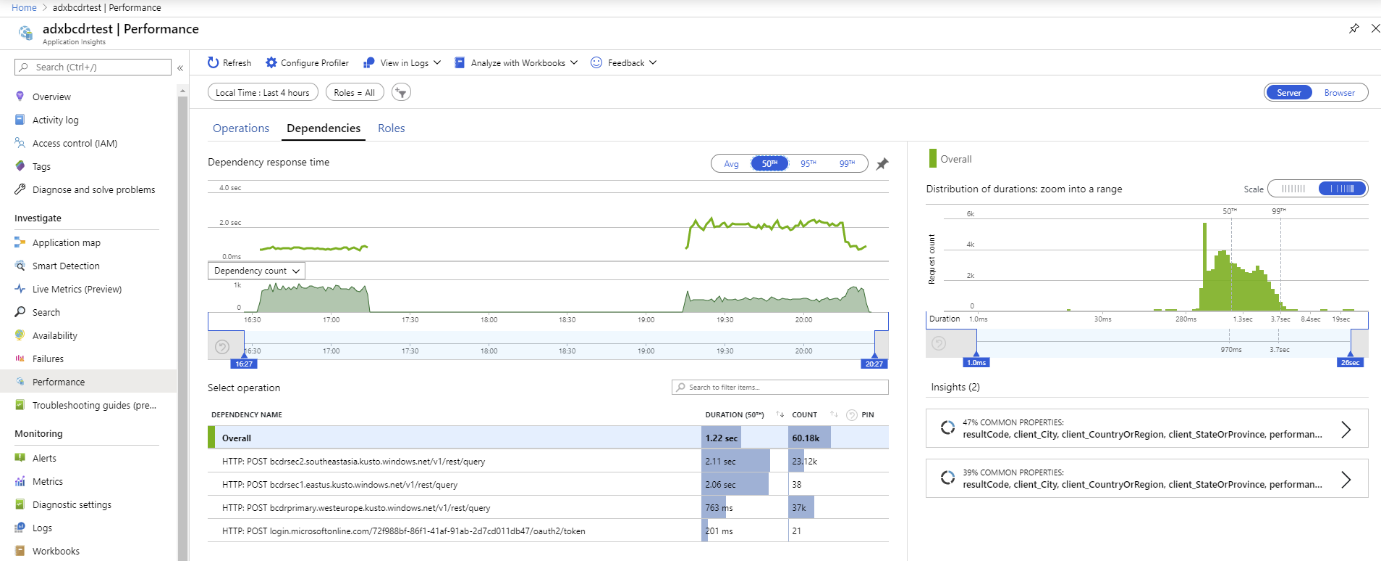

Use custom application insights metrics to measure performance, and request distribution to primary and secondary clusters.

Test the Azure App Service BCDR client

We ran a test using multiple Azure Data Explorer replicas. After a simulated outage of primary and secondary clusters, you can see that the app service BCDR client is behaving as intended.

The Azure Data Explorer clusters are distributed across West Europe (2xD14v2 primary), South East Asia, and East US (2xD11v2).

Note

Slower response times are due to different SKUs and cross planet queries.

Perform dynamic or static routing

Use Azure Traffic Manager routing methods for dynamic or static routing of the requests. Azure Traffic Manager is a DNS-based traffic load balancer that enables you to distribute app service traffic. This traffic is optimized to services across global Azure regions, while providing high availability and responsiveness.

Optimize cost in an active-active configuration

Using an active-active configuration for disaster recovery increases the cost linearly. The cost includes nodes, storage, markup, and increased networking cost for bandwidth.

Use optimized autoscale to optimize costs

Use the optimized autoscale feature to configure horizontal scaling for the secondary clusters. They should be dimensioned so they can handle the ingestion load. Once the primary cluster isn't reachable, the secondary clusters will get more traffic and scale according to the configuration.

Using optimized autoscale in this example saved roughly 50% of the cost in comparison to having the same horizontal and vertical scale on all replicas.