
APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

Big data requires a service that can orchestrate and operationalize processes to refine enormous stores of raw data into actionable business insights. The Azure Data Factory managed cloud service handles such complex hybrid extract-transform-load (ETL), extract-load-transform (ELT), and data integration projects.

Azure Data Factory allows you to create pipelines that can orchestrate multiple data transformations and manage them as a single unit. Batch endpoints are an excellent candidate to become a step in such a processing workflow.

This article shows how to use batch endpoints in Azure Data Factory activities by relying on the Web Invoke activity and the REST API.

Tip

When you use data pipelines in Fabric, you can invoke batch endpoints directly by using the Azure Machine Learning activity. We recommend using Fabric for data orchestration whenever possible to take advantage of the newest capabilities. The Azure Machine Learning activity in Azure Data Factory can only work with assets from Azure Machine Learning V1.

Prerequisites

A model deployed as a batch endpoint. Use the heart condition classifier created in Using MLflow models in batch deployments.

An Azure Data Factory resource. To create a data factory, follow the steps in Quickstart: Create a data factory by using the Azure portal.

After creating your data factory, browse to it in the Azure portal and select Launch studio:

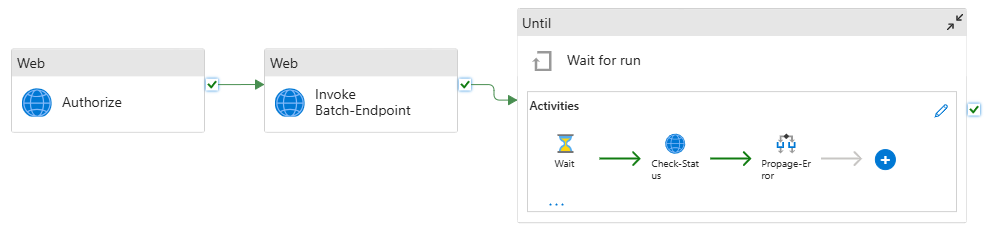

Authenticate against batch endpoints

Azure Data Factory can invoke the REST APIs of batch endpoints by using the Web Invoke activity. Batch endpoints use Microsoft Entra ID for authorization, so requests made to the APIs must be properly authenticated. For more information, see Web activity in Azure Data Factory and Azure Synapse Analytics.

You can use a service principal or a managed identity to authenticate against batch endpoints. We recommend using a managed identity because it removes the need to create and manage secrets.
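For reference outside Azure Data Factory, the following Python sketch shows what this authentication step amounts to: request a Microsoft Entra ID access token for the Azure Machine Learning audience and send it as a bearer token. It accepts any credential object with a `get_token(scope)` method, which is the shape used by `azure-identity` credentials such as `ManagedIdentityCredential`. The helper name `authorization_header` is hypothetical, and the scope `https://ml.azure.com/.default` is an assumption for the Azure public cloud; verify the correct audience for your cloud.

```python
from typing import Any, Dict

# Assumption: Azure Machine Learning audience in the Azure public cloud.
# Sovereign clouds may use a different value.
DEFAULT_ML_SCOPE = "https://ml.azure.com/.default"


def authorization_header(credential: Any, scope: str = DEFAULT_ML_SCOPE) -> Dict[str, str]:
    """Return the Authorization header for a batch endpoint request.

    `credential` is any object whose get_token(scope) returns an object with a
    `.token` attribute (hypothetical helper; azure-identity credentials fit).
    """
    access_token = credential.get_token(scope)
    return {"Authorization": f"Bearer {access_token.token}"}
```

With the `azure-identity` package installed, a call such as `authorization_header(ManagedIdentityCredential())` would use the managed identity of the machine the code runs on, which mirrors what the Web activity does with the data factory's identity.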

You can use the Azure Data Factory managed identity to communicate with batch endpoints. In this case, you only need to make sure that your Azure Data Factory resource was deployed with a managed identity.

If you don't have an Azure Data Factory resource, or if it was deployed without a managed identity, follow the steps in System-assigned managed identity to create one.

Caution

It isn't possible to change the resource identity in Azure Data Factory after deployment. If you need to change the identity of a resource after you create it, you need to recreate the resource.

After deployment, grant the managed identity of the resource you created access to your Azure Machine Learning workspace. For more information, see Grant access. In this example, the managed identity requires:

- Permissions in the workspace to read batch deployments and perform actions on them.

- Permissions to read and write in data stores.

- Permissions to read from any cloud location (storage account) specified as a data input.

About the pipeline

In this example, you create a pipeline in Azure Data Factory that can invoke a given batch endpoint over some data. The pipeline communicates with Azure Machine Learning batch endpoints using REST. For more information about how to use the REST API of batch endpoints, see Create jobs and input data for batch endpoints.
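To illustrate the REST call that starts a job, this Python sketch uses only the standard library to assemble, but not send, the POST request to the endpoint's `/jobs` URI. The helper name `build_invoke_request` is hypothetical, and the access token and JSON job definition are assumed to be produced elsewhere.

```python
import json
import urllib.request
from typing import Any, Dict


def build_invoke_request(endpoint_uri: str, token: str, body: Dict[str, Any]) -> urllib.request.Request:
    """Prepare the POST request that creates a batch endpoint job.

    endpoint_uri: the scoring URI, ending in /jobs.
    token: a Microsoft Entra ID access token.
    body: the JSON job definition (inputs and outputs).
    """
    payload = json.dumps(body).encode("utf-8")
    return urllib.request.Request(
        endpoint_uri,
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request, for example with `urllib.request.urlopen`, should return the created job resource as JSON; that job is what a status check later polls.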

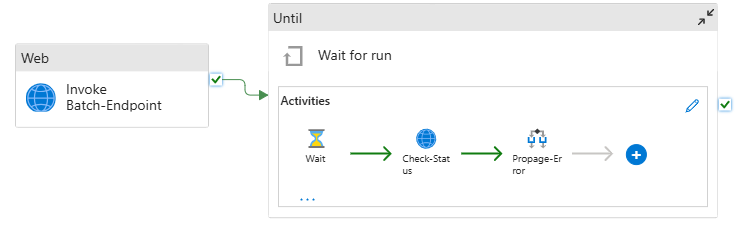

The pipeline looks as follows:

The pipeline contains the following activities:

Run Batch-Endpoint: A Web Activity that invokes the batch endpoint by using its URI. It passes the URI where the input data is located and the path of the expected output file.

Wait for job: A loop activity that checks the status of the created job and waits for it to finish, either as Completed or Failed. This activity, in turn, uses the following activities:

- Check status: A Web Activity that queries the status of the job resource returned in the response of the Run Batch-Endpoint activity.

- Wait: A Wait Activity that controls how often the job's status is polled. The default is 120 seconds (2 minutes).
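The Wait for job loop can be sketched in Python as a check-then-wait cycle. In this sketch, `get_status` is a hypothetical callable standing in for the Check status request, and `sleep` is injectable so the loop can be exercised without real delays. The terminal statuses default to the two named above; real jobs may also end in other states, such as Canceled, which you could pass in as well.

```python
import time
from typing import Callable, Iterable


def wait_for_job(
    get_status: Callable[[], str],
    poll_interval: float = 120,
    terminal_statuses: Iterable[str] = ("Completed", "Failed"),
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a job until it reaches a terminal status, then return that status."""
    terminal = set(terminal_statuses)
    while True:
        # Check status: ask for the job's current state.
        status = get_status()
        if status in terminal:
            return status
        # Wait: pause before polling again.
        sleep(poll_interval)
```

Checking before waiting means a job that's already finished is reported immediately rather than after one full polling interval.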

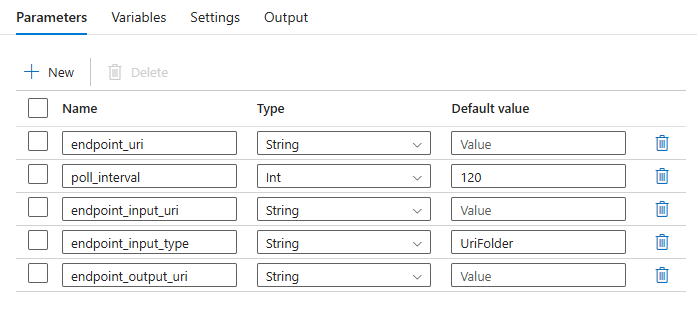

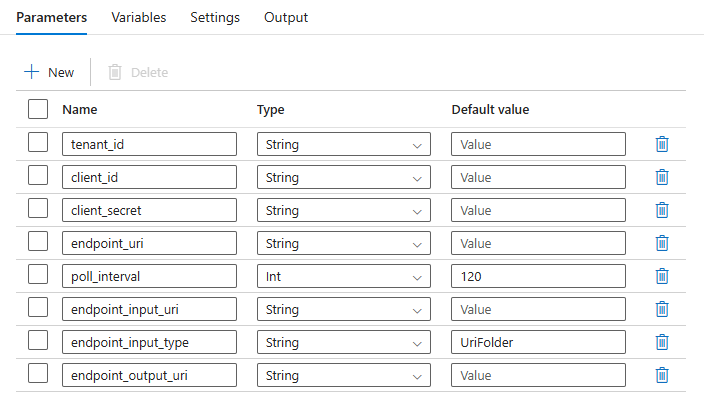

The pipeline requires you to configure the following parameters:

| Parameter | Description | Sample value |
|---|---|---|
| `endpoint_uri` | The endpoint scoring URI. | `https://<endpoint_name>.<region>.inference.studio.ml.azure.cn/jobs` |
| `poll_interval` | The number of seconds to wait before checking the job status for completion. Defaults to `120`. | `120` |
| `endpoint_input_uri` | The endpoint's input data. Multiple data input types are supported. Ensure that the managed identity that you use to execute the job has access to the underlying location. Alternatively, if you use data stores, ensure the credentials are configured in the data store. | `azureml://datastores/.../paths/.../data/` |
| `endpoint_input_type` | The type of the input data you're providing. Currently, batch endpoints support folders (`UriFolder`) and files (`UriFile`). Defaults to `UriFolder`. | `UriFolder` |
| `endpoint_output_uri` | The endpoint's output data file. It must be a path to an output file in a data store attached to the Machine Learning workspace. No other type of URI is supported. You can use the default Azure Machine Learning data store, named `workspaceblobstore`. | `azureml://datastores/workspaceblobstore/paths/batch/predictions.csv` |
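As a rough illustration of how these parameters map onto the job definition sent to the endpoint, the following Python sketch groups them with the same defaults and builds a request body. The class name is hypothetical, and the body shape (`InputData` and `OutputData` under `properties`) plus the input and output names `heart_dataset` and `score` are assumptions; check them against the REST reference and your deployment.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class BatchPipelineParameters:
    """Hypothetical container mirroring the pipeline parameters."""

    endpoint_uri: str
    endpoint_input_uri: str
    endpoint_output_uri: str
    endpoint_input_type: str = "UriFolder"
    poll_interval: int = 120

    def __post_init__(self) -> None:
        # Batch endpoints accept folders or single files as input.
        if self.endpoint_input_type not in ("UriFolder", "UriFile"):
            raise ValueError(f"Unsupported input type: {self.endpoint_input_type}")

    def to_request_body(self, input_name: str = "heart_dataset", output_name: str = "score") -> Dict[str, Any]:
        """Build the JSON job definition (assumed shape, hypothetical names)."""
        return {
            "properties": {
                "InputData": {
                    input_name: {
                        "JobInputType": self.endpoint_input_type,
                        "Uri": self.endpoint_input_uri,
                    }
                },
                "OutputData": {
                    output_name: {
                        "JobOutputType": "UriFile",
                        "Uri": self.endpoint_output_uri,
                    }
                },
            }
        }
```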

Warning

Remember that endpoint_output_uri must be the path to a file that doesn't exist yet. Otherwise, the job fails with an error stating that the path already exists.
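One way to avoid reusing an existing path is to place each run's output under its own folder, for example one named after the pipeline run ID. In Azure Data Factory you'd typically build this with a pipeline expression; the Python sketch below only shows the idea. The function name is hypothetical, and it falls back to a random identifier when no run ID is supplied.

```python
import uuid
from typing import Optional


def unique_output_uri(datastore: str, folder: str, file_name: str, run_id: Optional[str] = None) -> str:
    """Build an azureml:// output file URI under a per-run folder.

    A new run ID yields a fresh path, so a rerun doesn't target a file
    that an earlier run already wrote.
    """
    # Fall back to a random identifier when no run ID is supplied.
    run_id = run_id or uuid.uuid4().hex
    # Drop empty segments and stray slashes so the URI has no "//".
    parts = [p.strip("/") for p in (folder, run_id, file_name) if p.strip("/")]
    return f"azureml://datastores/{datastore}/paths/" + "/".join(parts)
```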

Create the pipeline

To create this pipeline in your existing Azure Data Factory and invoke batch endpoints, follow these steps:

Ensure the compute where the batch endpoint runs has permissions to mount the data Azure Data Factory provides as input. Access to the data is still granted by the identity that invokes the endpoint.

In this case, that's Azure Data Factory. However, the compute where the batch endpoint runs needs permission to mount the storage account your Azure Data Factory provides. For details, see Accessing storage services.

Open Azure Data Factory Studio. Select the pencil icon to open the Author pane and, under Factory Resources, select the plus sign.

Select Pipeline > Import from pipeline template.

Select a .zip file.

A preview of the pipeline shows up in the portal. Select Use this template.

The pipeline is created for you with the name Run-BatchEndpoint.

Configure the parameters of the batch deployment:

Warning

Ensure that your batch endpoint has a default deployment configured before you submit a job to it. The created pipeline invokes the endpoint rather than a specific deployment, so a default deployment must be created and configured.

Tip

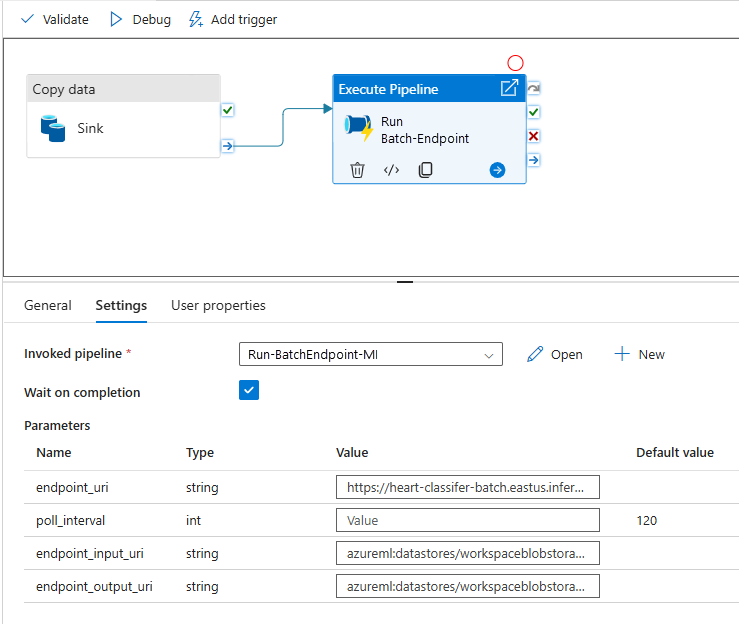

For best reusability, use the created pipeline as a template and call it from within other Azure Data Factory pipelines by using the Execute pipeline activity. In that case, don't configure the parameters in the inner pipeline but pass them as parameters from the outer pipeline as shown in the following image:

Your pipeline is ready to use.

Limitations

When you use Azure Machine Learning batch deployments, consider the following limitations:

Data inputs

- Only Azure Machine Learning data stores or Azure Storage accounts (Azure Blob Storage, Azure Data Lake Storage Gen2) are supported as inputs. If your input data is in another source, use the Azure Data Factory Copy activity before running the batch job to copy the data to a compatible store.

- Batch endpoint jobs don't explore nested folders, so they can't work with nested folder structures. If your data is distributed across multiple folders, flatten the structure first.

- Make sure that the scoring script provided in the deployment can handle the data in the format in which it's fed into the job. If the model is an MLflow model, see Deploy MLflow models in batch deployments for the limitations on supported file types.
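If you prepare input data locally before uploading it, a nested tree can be flattened with a short Python sketch like the following. It copies every file into a single folder and encodes the original subfolder path into the file name, so same-named files from different subfolders don't overwrite each other. The function name and the `__` separator are hypothetical choices. If the data already passes through a Copy activity, that activity's flatten-hierarchy copy behavior may be an alternative.

```python
import shutil
from pathlib import Path


def flatten_folder(source: Path, destination: Path, separator: str = "__") -> int:
    """Copy every file under `source` into the single folder `destination`.

    A file at a/b/data.csv becomes a__b__data.csv. Returns the number of
    files copied.
    """
    destination.mkdir(parents=True, exist_ok=True)
    copied = 0
    # sorted() lists the whole tree before any copy starts.
    for path in sorted(source.rglob("*")):
        if path.is_file():
            relative = path.relative_to(source)
            target = destination / separator.join(relative.parts)
            shutil.copy2(path, target)
            copied += 1
    return copied
```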

Data outputs

- Only registered Azure Machine Learning data stores are supported. We recommend that you register the storage account your Azure Data Factory is using as a data store in Azure Machine Learning. That way, you can write back to the same storage account you're reading from.

- Only Azure Blob Storage Accounts are supported for outputs. For instance, Azure Data Lake Storage Gen2 isn't supported as output in batch deployment jobs. If you need to output the data to a different location or sink, use the Azure Data Factory Copy activity after you run the batch job.
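Because outputs must go to a registered data store, a quick format check on the output URI can catch mistakes before a job is submitted. This Python sketch, with a hypothetical function name, only validates the shape `azureml://datastores/<datastore>/paths/<file-path>` and rejects paths ending in a slash; it can't tell whether the data store is backed by Azure Blob Storage, which you still need to confirm separately.

```python
import re

# Shape assumed from the sample values: azureml://datastores/<datastore>/paths/<file-path>
_OUTPUT_URI = re.compile(r"^azureml://datastores/(?P<datastore>[^/]+)/paths/(?P<path>.*[^/])$")


def check_output_uri(uri: str) -> str:
    """Return the data store name if `uri` looks like an output file URI.

    Raises ValueError for URIs that aren't data store paths or that end in a
    slash (a folder rather than a file).
    """
    match = _OUTPUT_URI.match(uri)
    if match is None:
        raise ValueError(f"Expected azureml://datastores/<name>/paths/<file>, got: {uri}")
    return match.group("datastore")
```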