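Azure Functions offers built-in integration with Azure Application Insights to monitor function executions. This article provides an overview of the monitoring capabilities that Azure provides for Azure Functions.

Application Insights collects log, performance, and error data. It automatically detects performance anomalies and includes powerful analytics tools, so you can diagnose issues more easily and better understand how your functions are used. These tools are designed to help you continuously improve the performance and usability of your functions. You can even use Application Insights during local development of your function app project. For more information, see the Application Insights overview.

Because Application Insights instrumentation is built into Azure Functions, you need only a valid instrumentation key to connect your function app to an Application Insights resource. The instrumentation key is added to your application settings when you create your function app resource in Azure. If your function app doesn't already have this key, you can set it manually.

You can also monitor the function app itself by using Azure Monitor. To learn more, see Monitoring Azure Functions.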

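Application Insights pricing and limits

You can try out Application Insights integration with Azure Functions for free, but there's a daily limit on how much data is processed for free.

If you enable Application Insights during development, you might hit this limit during testing. Azure sends portal and email notifications when you reach your daily limit. If you miss those alerts and hit the limit, new logs won't appear in Application Insights queries. Keep the limit in mind so you don't spend unnecessary time troubleshooting. For more information, see Application Insights billing.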
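Whether a test run hits the daily limit is simple arithmetic: the cap divided by the telemetry volume per hour. The following is a back-of-the-envelope sketch; the function name and every input value are hypothetical placeholders, not Azure defaults, prices, or quotas.

```python
# Back-of-the-envelope check: how long can a load test run before it exhausts
# a daily data cap? Every number here is a hypothetical placeholder, not an
# Azure default, price, or quota.
def hours_until_cap(daily_cap_gb: float,
                    avg_bytes_per_execution: float,
                    executions_per_hour: float) -> float:
    """Hours of sustained load before daily_cap_gb of telemetry is ingested."""
    if avg_bytes_per_execution <= 0 or executions_per_hour <= 0:
        raise ValueError("telemetry size and execution rate must be positive")
    cap_bytes = daily_cap_gb * 1_000_000_000  # decimal gigabytes to bytes
    bytes_per_hour = avg_bytes_per_execution * executions_per_hour
    return cap_bytes / bytes_per_hour


# Hypothetical inputs: 1 GB cap, about 2 KB of telemetry per execution,
# 50,000 executions per hour during a load test.
print(f"{hours_until_cap(1, 2048, 50_000):.1f} hours")  # prints "9.8 hours"
```

Substitute the cap configured on your own Application Insights resource and a measured telemetry size to get a meaningful estimate.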

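Important

Application Insights has a sampling feature that can protect you from producing too much telemetry data for completed executions at times of peak load. Sampling is enabled by default. If you appear to be missing data, you might need to adjust the sampling settings to fit your particular monitoring scenario. To learn more, see Configure sampling.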
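Sampling is configured in the logging.applicationInsights.samplingSettings section of host.json (Functions 2.x and later). To keep all new code in one language, this sketch builds that section as a Python dictionary and prints it as JSON. The helper name is hypothetical, and the limit of 20 items per second and the excluded types are example values, not recommendations.

```python
import json


def sampling_host_json(enabled: bool = True,
                       max_items_per_second: int = 20,
                       excluded_types: tuple = ("Request",)) -> dict:
    """Return a host.json document containing only sampling settings."""
    return {
        "version": "2.0",
        "logging": {
            "applicationInsights": {
                "samplingSettings": {
                    "isEnabled": enabled,
                    # Rough target rate of telemetry items kept per second.
                    "maxTelemetryItemsPerSecond": max_items_per_second,
                    # Semicolon-separated telemetry types that are never sampled.
                    "excludedTypes": ";".join(excluded_types),
                }
            }
        },
    }


print(json.dumps(sampling_host_json(), indent=2))
```

Excluding a type such as Request keeps every request record while other telemetry is still sampled, which is one way to address "missing" data without turning sampling off entirely.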

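Application Insights integration

Typically, you create an Application Insights instance when you create your function app. In this case, the instrumentation key required for the integration is already set as an application setting named APPINSIGHTS_INSTRUMENTATIONKEY. If for some reason your function app doesn't have the instrumentation key set, you need to enable Application Insights integration.

Important

Sovereign clouds, such as Azure Government, require the Application Insights connection string (APPLICATIONINSIGHTS_CONNECTION_STRING) instead of the instrumentation key. To learn more, see the APPLICATIONINSIGHTS_CONNECTION_STRING reference.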
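Application settings are exposed to function code as environment variables, so you can check which of the two settings named above is present. A minimal sketch; the helper name is hypothetical, and checking the connection string first is this sketch's own choice (motivated by the sovereign-cloud note), not a statement about how the Functions host resolves the two settings.

```python
import os
from typing import Mapping, Optional, Tuple

# Setting names from this article, in this sketch's order of preference.
_SETTING_NAMES = ("APPLICATIONINSIGHTS_CONNECTION_STRING",
                  "APPINSIGHTS_INSTRUMENTATIONKEY")


def app_insights_setting(
        env: Optional[Mapping[str, str]] = None) -> Optional[Tuple[str, str]]:
    """Return (setting_name, value) for the first non-empty setting, else None."""
    source = os.environ if env is None else env
    for name in _SETTING_NAMES:
        value = source.get(name, "").strip()
        if value:
            return name, value
    return None  # Neither setting is present: integration isn't configured.


found = app_insights_setting()
print(found[0] if found else "Application Insights is not configured")
```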

The following table details the Application Insights features available for monitoring your function app:

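| Azure Functions runtime version | 1.x | 4.x+ |
|---|---|---|
| Automatic collection of | | |
| • Requests | ✓ | ✓ |
| • Exceptions | ✓ | ✓ |
| • Performance counters | ✓ | ✓ |
| • Dependencies | | |
| — HTTP | | ✓ |
| — Service Bus | | ✓ |
| — Event Hubs | | ✓ |
| — SQL* | | ✓ |
| Supported features | | |
| • QuickPulse/LiveMetrics | Yes | Yes |
| — Secure control channel | | Yes |
| • Sampling | Yes | Yes |
| • Heartbeats | | Yes |
| Correlation | | |
| • Service Bus | | Yes |
| • Event Hubs | | Yes |
| Configurable | | |
| • Fully configurable | | Yes |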

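* To enable collection of SQL query string text, see Enable SQL query collection.

Collecting telemetry data

With Application Insights integration enabled, telemetry data is sent to your connected Application Insights instance. This data includes logs generated by the Functions host, traces written from your function code, and performance data.

Note

In addition to data from your functions and the Functions host, you can also collect data from the Functions scale controller.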

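Log levels and categories

When you write traces from your application code, you should assign a log level to the traces. Log levels give you a way to limit the amount of data collected from your traces.

Every log is assigned a log level. The value is an integer that indicates relative importance: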

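| LogLevel | Code | Description |
|---|---|---|
| Trace | 0 | Logs that contain the most detailed messages. These messages might contain sensitive application data. These messages are disabled by default and should never be enabled in a production environment. |
| Debug | 1 | Logs used for interactive investigation during development. These logs should primarily contain information useful for debugging and have no long-term value. |
| Information | 2 | Logs that track the general flow of the application. These logs should have long-term value. |
| Warning | 3 | Logs that highlight an abnormal or unexpected event in the application flow that doesn't otherwise cause application execution to stop. |
| Error | 4 | Logs that highlight when the current flow of execution is stopped because of a failure. These errors should indicate a failure in the current activity, not an application-wide failure. |
| Critical | 5 | Logs that describe an unrecoverable application or system crash, or a catastrophic failure that requires immediate attention. |
| None | 6 | Disables logging for the specified category. |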
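Because each level is an integer, filtering amounts to comparing a message's level against a configured minimum. A minimal sketch of that comparison: the LogLevel class mirrors the table, while is_logged is a hypothetical helper, not a Functions or Application Insights API.

```python
from enum import IntEnum


class LogLevel(IntEnum):
    Trace = 0
    Debug = 1
    Information = 2
    Warning = 3
    Error = 4
    Critical = 5
    None_ = 6  # "None" is a Python keyword, hence the trailing underscore.


def is_logged(message_level: LogLevel, minimum: LogLevel) -> bool:
    """Keep a message when its level is at or above the configured minimum."""
    # A minimum of None_ disables the category entirely.
    if minimum is LogLevel.None_:
        return False
    return message_level >= minimum


print(is_logged(LogLevel.Information, LogLevel.Warning))  # False
```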

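The host.json file configuration determines how much logging a function app sends to Application Insights.

To learn more about log levels, see Configure log levels.

By assigning logged items to a category, you have more control over telemetry generated from specific sources in your function app. Categories make it easier to run analytics over the collected data. Traces written from your function code are assigned to individual categories based on the function name. To learn more about categories, see Configure categories.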
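To see how a category ends up with a level, the following sketch resolves the effective level for a category from a host.json-style logLevel map: the longest matching key wins, with default as the fallback. This is loosely modeled on how .NET logging filter rules pick the most specific category; it's a simplification (case-sensitive, dotted-prefix matching only), and the function name, the HttpTrigger1 function name, and the levels in the map are hypothetical examples.

```python
from typing import Dict, Optional


def effective_level(category: str, log_level: Dict[str, str]) -> str:
    """Return the configured level for category, using the most specific key."""
    best: Optional[str] = None
    for key in log_level:
        if key == "default":
            continue
        # Match the key itself or any dotted child of it (simplification).
        if category == key or category.startswith(key + "."):
            if best is None or len(key) > len(best):
                best = key
    if best is not None:
        return log_level[best]
    # Assumed fallback when no key matches and "default" is absent.
    return log_level.get("default", "Information")


# Example "logLevel" section; "HttpTrigger1" is a hypothetical function name.
log_level = {
    "default": "Information",
    "Host.Results": "Error",
    "Function": "Error",
    "Function.HttpTrigger1.User": "Debug",
}

print(effective_level("Function.HttpTrigger1.User", log_level))  # Debug
print(effective_level("Function.HttpTrigger1", log_level))       # Error
```

With this example map, traces written by the HttpTrigger1 code are kept down to Debug, while the function's non-user category resolves to Error, so Information-level entries in that category are filtered out.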

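Custom telemetry data

In C#, JavaScript, and Python, you can use an Application Insights SDK to write custom telemetry data.

Dependencies

Starting with Functions 2.x, Application Insights automatically collects dependency data for bindings that use certain client SDKs. Application Insights collects data on the following dependencies: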

- Azure Cosmos DB

- Azure Event Hubs

- Azure Service Bus

- Azure Storage services (Blob, Queue, and Table)

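HTTP requests and database calls that use SqlClient are also captured. For the complete list of dependencies supported by Application Insights, see Automatically tracked dependencies.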

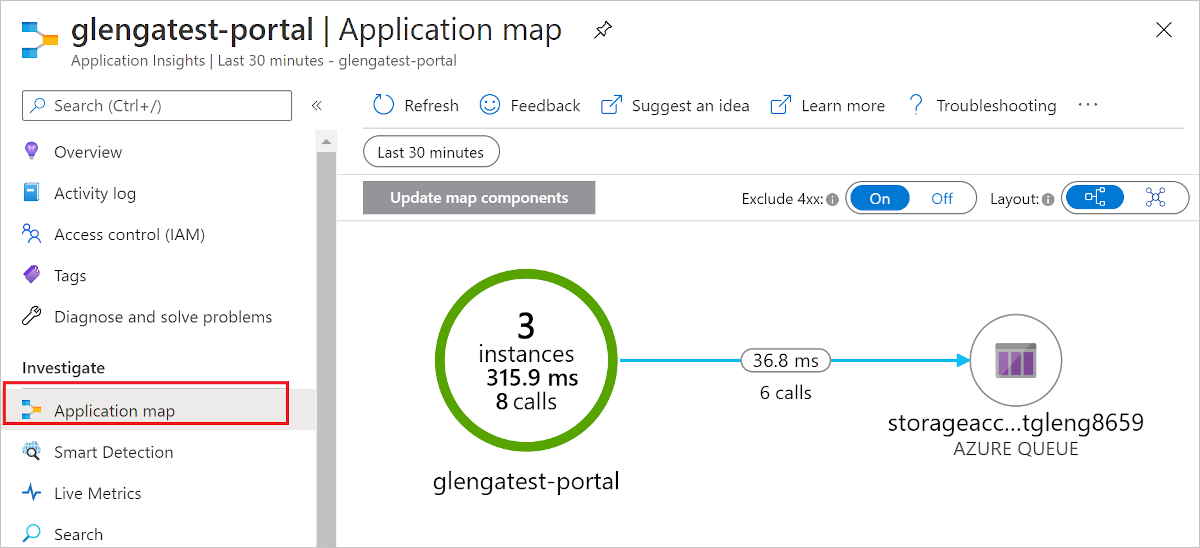

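Application Insights generates an application map of the collected dependency data. The image shows an example application map of an HTTP trigger function with a Queue storage output binding.

Dependencies are written at the Information level. If you filter at Warning or higher, you won't see any dependency data. Also, automatic collection of dependencies happens at a non-user scope. To capture dependency data, make sure that the level is set to at least Information outside the user scope (Function.<YOUR_FUNCTION_NAME>.User) in your host.json configuration.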
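One way to follow this guidance while keeping your own traces quiet is to leave the function's non-user category at Information and raise only the .User category. The sketch below builds such a logging.logLevel section as a Python dictionary; the helper name and the function name are hypothetical, and Warning for user traces is an example choice.

```python
import json


def dependency_friendly_log_levels(function_name: str,
                                   user_level: str = "Warning") -> dict:
    """host.json logging section that keeps dependencies for one function."""
    return {
        "version": "2.0",
        "logging": {
            "logLevel": {
                "default": "Information",
                # Non-user scope: dependencies are written here at Information.
                f"Function.{function_name}": "Information",
                # User scope: traces written by your own function code.
                f"Function.{function_name}.User": user_level,
            }
        },
    }


print(json.dumps(dependency_friendly_log_levels("HttpTrigger1"), indent=2))
```

Setting Function.<YOUR_FUNCTION_NAME> itself to Warning or higher would filter out the dependency data, so only the .User key is raised here.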

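In addition to automatic dependency data collection, you can use one of the language-specific Application Insights SDKs to write custom dependency information to the logs. For an example of how to write custom dependencies, see one of the following language-specific examples:

Performance counters

Automatic collection of performance counters isn't supported when running on Linux.

Writing to logs

The way you write to logs, and the APIs you use, depend on the language of your function app project. See the developer guide for your language to learn more about writing logs from your functions.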
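In Python function apps, logs are typically written with the standard logging module. This sketch uses that module inside a plain, hypothetical function (no azure.functions import) so it runs outside the Functions host. The correspondence between Python levels and the LogLevel table (DEBUG to Debug, INFO to Information, WARNING to Warning) is approximate.

```python
import logging


def process_order(order_id: str, quantity: int) -> bool:
    """Hypothetical order check that logs at several levels."""
    # INFO roughly corresponds to the Information level.
    logging.info("Processing order %s", order_id)
    if quantity <= 0:
        # WARNING: unexpected input, but execution continues normally.
        logging.warning("Order %s has invalid quantity %d", order_id, quantity)
        return False
    # DEBUG: detail useful only while developing.
    logging.debug("Order %s passed validation", order_id)
    return True


if __name__ == "__main__":
    # Local run only: print records to the console instead of a Functions host.
    logging.basicConfig(level=logging.DEBUG)
    process_order("A1", 2)
    process_order("B2", 0)
```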

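Analyze data

By default, the data collected from your function app is stored in Application Insights. In the Azure portal, Application Insights provides an extensive set of visualizations of your telemetry data. You can drill into error logs and query events and metrics. To learn more, including examples of how to view and query your collected data, see Analyze Azure Functions telemetry in Application Insights.

Streaming logs

While developing an application, you often want to see what's being written to the logs in near real time when it runs in Azure.

There are two ways to view a stream of the log data generated by your function executions.

Built-in log streaming: The App Service platform lets you view a stream of your application log files. This stream is equivalent to the output you see when you debug your functions during local development and when you use the Test tab in the portal. All log-based information is displayed. For more information, see Stream logs. This streaming method supports only a single instance, and can't be used with an app running on Linux in a Consumption plan.

Live Metrics Stream: When your function app is connected to Application Insights, you can view log data and other metrics in near real time in the Azure portal by using Live Metrics Stream. Use this method when monitoring functions running on multiple instances or on Linux in a Consumption plan. This method uses sampled data.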
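The choice between the two methods reduces to a small decision rule. A minimal sketch that restates it; the function name and its two inputs are hypothetical simplifications, and the rule only encodes what the text says: built-in streaming handles a single instance and doesn't work for Linux apps on the Consumption plan, so every other case points to Live Metrics Stream.

```python
def streaming_method(instance_count: int, linux_consumption: bool) -> str:
    """Pick a log-streaming method following the guidance in this section."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    # Built-in streaming: single instance, and not Linux on a Consumption plan.
    if instance_count == 1 and not linux_consumption:
        return "built-in log streaming"
    # Multiple instances or Linux Consumption: use Live Metrics (sampled data).
    return "Live Metrics Stream"


print(streaming_method(3, linux_consumption=False))  # Live Metrics Stream
```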

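You can view log streams both in the portal and in most local development environments. To learn how to enable log streams, see Enable streaming execution logs in Azure Functions.

Diagnostic logs

Application Insights lets you export telemetry data to long-term storage or other analysis services.

Because Functions also integrates with Azure Monitor, you can use diagnostic settings to send telemetry data to various destinations, including Azure Monitor Logs. To learn more, see Monitoring Azure Functions.

Scale controller logs

The Azure Functions scale controller monitors instances of the Azure Functions host on which your app runs. This controller decides when to add or remove instances based on current performance. You can have the scale controller emit logs to Application Insights to better understand the decisions it makes for your function app. You can also store the generated logs in Blob storage for analysis by another service.

To enable this feature, add an application setting named SCALE_CONTROLLER_LOGGING_ENABLED to your function app settings. To learn how, see Configure scale controller logs.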
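The value of SCALE_CONTROLLER_LOGGING_ENABLED is a short string. A minimal sketch that builds and validates it; the <DESTINATION>:<VERBOSITY> format and the allowed values (AppInsights or Blob; None, Warning, or Verbose) are assumed from the Configure scale controller logs guidance and should be confirmed there, and the helper name is hypothetical.

```python
# Assumed allowed values; confirm against "Configure scale controller logs".
DESTINATIONS = {"AppInsights", "Blob"}
VERBOSITIES = {"None", "Warning", "Verbose"}


def scale_controller_setting(destination: str, verbosity: str) -> str:
    """Return a value for the SCALE_CONTROLLER_LOGGING_ENABLED app setting."""
    if destination not in DESTINATIONS:
        raise ValueError(f"unknown destination: {destination!r}")
    if verbosity not in VERBOSITIES:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    return f"{destination}:{verbosity}"


print(scale_controller_setting("AppInsights", "Verbose"))  # AppInsights:Verbose
```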

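Azure Monitor metrics

In addition to the log-based telemetry data collected by Application Insights, you can get data about how the function app is running from Azure Monitor Metrics. To learn more, see Monitoring Azure Functions.

Report issues

To report an issue with Application Insights integration in Functions, or to make a suggestion or request, create an issue in GitHub.

Next steps

For more information, see the following resources: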