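This article shows how to ingest blobs from a storage account into Azure Data Explorer by using an Event Grid data connection. You create an Event Grid data connection that sets up an Azure Event Grid subscription. The Event Grid subscription routes events from the storage account to Azure Data Explorer through Azure Event Hubs.

Note

Ingestion supports a maximum file size of 6 GB. The recommended file size is between 100 MB and 1 GB.

To learn how to create the connection by using the Kusto SDKs, see Create an Event Grid data connection with SDKs.

For general information about ingesting into Azure Data Explorer from Event Grid, see Connect to Event Grid.

Note

For the best performance with an Event Grid connection, set the rawSizeBytes ingestion property through the blob metadata. For more information, see Ingestion properties.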

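Prerequisites

- An Azure subscription. Create an Azure account if you don't have one.

- An Azure Data Explorer cluster and database. Create a cluster and database.

- A destination table. Create a table or use an existing table.

- An ingestion mapping for the table.

- A storage account. An Event Grid notification subscription can be set on Azure Storage accounts of kind BlobStorage, StorageV2, or Data Lake Storage Gen2.

- The Event Grid resource provider, registered in your subscription.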

Create an Event Grid data connection

In this section, you establish a connection between Event Grid and your Azure Data Explorer table.

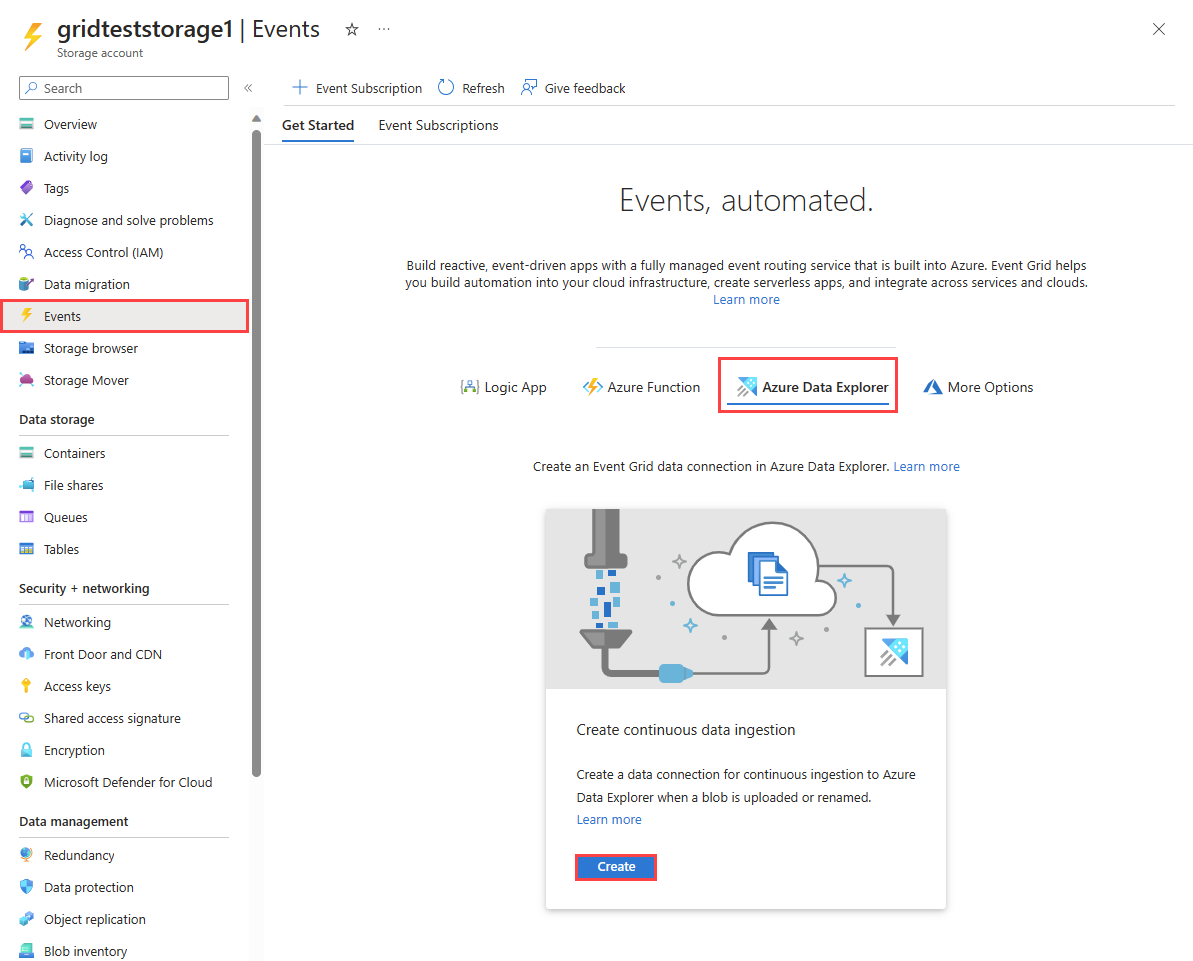

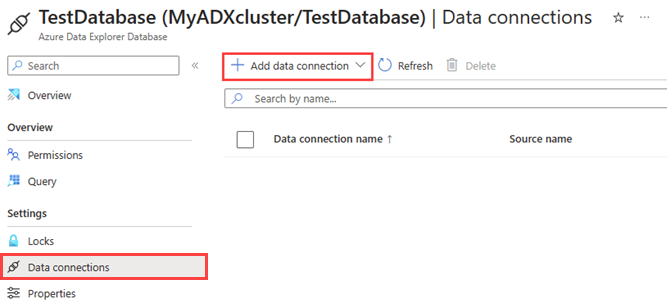

In the Azure portal, go to your Azure Data Explorer cluster.

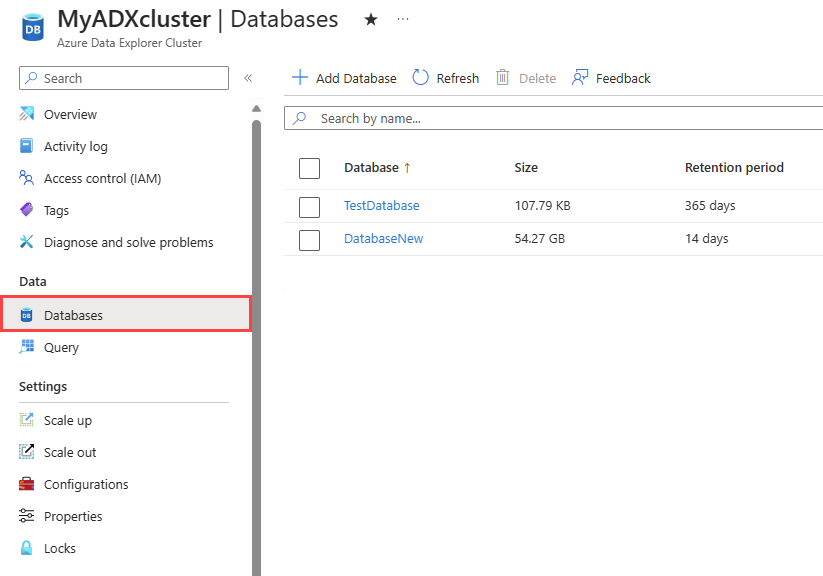

Under Data, select Databases > TestDatabase.

Under Settings, select Data connections, and then select Add data connection > Event Grid (Blob storage).

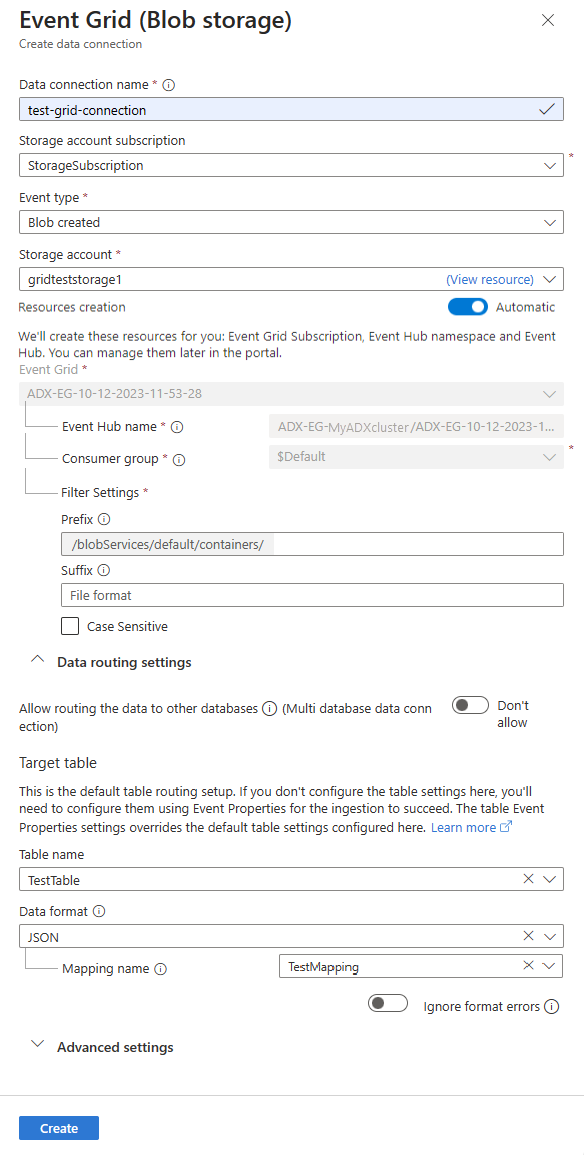

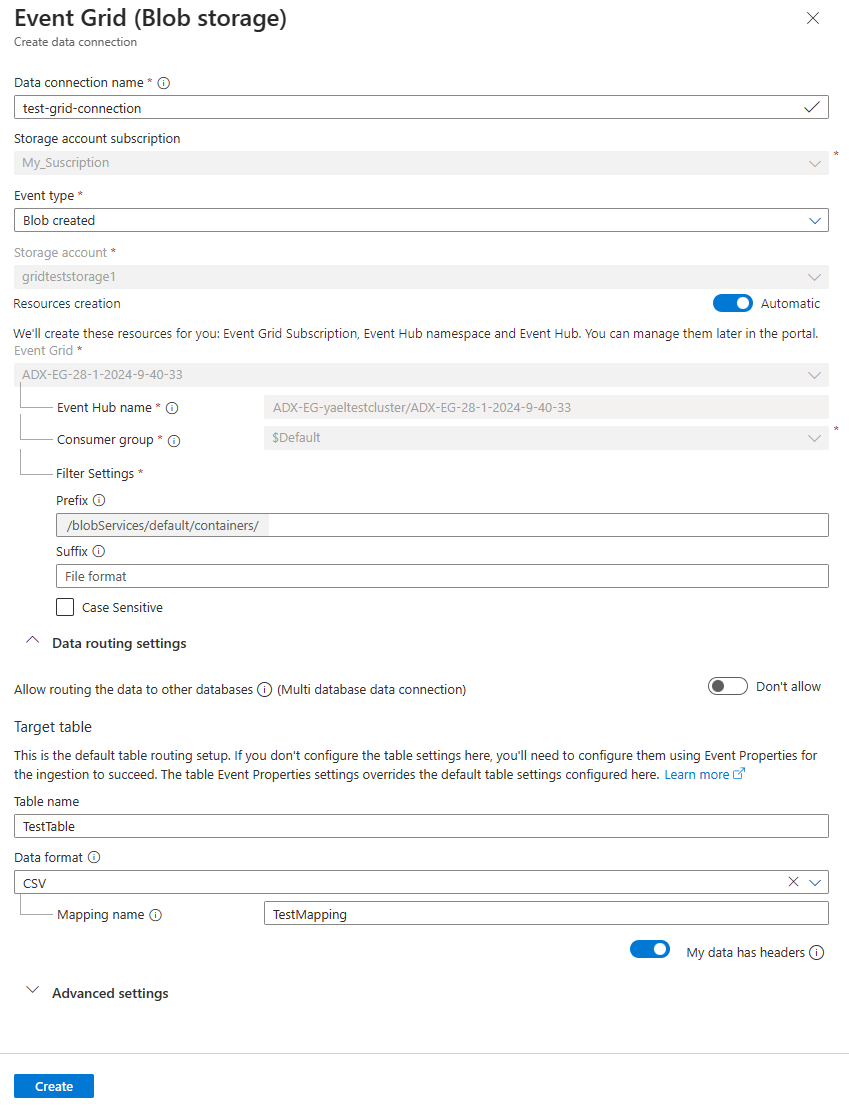

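Fill out the Event Grid data connection form with the following information:

| Setting | Suggested value | Field description |
| --- | --- | --- |
| Data connection name | test-grid-connection | The name of the connection to create in Azure Data Explorer. The name can contain only alphanumeric, dash, and dot characters, and can be up to 40 characters long. |
| Storage account subscription | Your subscription ID | The ID of the subscription that contains the storage account. |
| Event type | Blob created or Blob renamed | The type of event that triggers ingestion. Blob renamed is supported only for ADLSv2 storage. To rename a blob, navigate to the blob in the Azure portal, right-click the blob, and select Rename. Supported types are Microsoft.Storage.BlobCreated and Microsoft.Storage.BlobRenamed. |
| Storage account | gridteststorage1 | The name of the storage account that you created previously. |
| Resources creation | Automatic | With automatic resource creation turned on, Azure Data Explorer creates the Event Grid subscription, the Event Hubs namespace, and the event hub for you. Otherwise, you must create these resources manually so that the data connection can be created. See Manually create resources for Event Grid ingestion. |

Optionally, you can track specific Event Grid subjects. Set the notification filters as follows: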

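- The Prefix field is the literal prefix of the subject. Because the pattern applied is startswith, the filter can span multiple containers, folders, or blobs. Wildcards aren't allowed.

    - To filter on a blob container, set the field to the subject path of that container.

    - To filter on a blob prefix (or a folder in Azure Data Lake Gen2), set the field to the subject path of that blob prefix or folder.

- The Suffix field is the literal suffix of the blob. Wildcards aren't allowed.

- The Case-Sensitive field indicates whether the prefix and suffix filters are case-sensitive.

For more information about filtering events, see Blob storage events.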
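The filter rules above can be illustrated with a short sketch. It assumes the subject format that Event Grid documents for Blob storage events, `/blobServices/default/containers/<container>/blobs/<blob path>`. The helpers `BlobSubject` and `MatchesFilter` are hypothetical names introduced here; they only approximate how the service evaluates the prefix, suffix, and case-sensitivity settings, and the container and blob names are made up.

```csharp
using System;

// Made-up container and blob path, used only to illustrate the matching rules.
var subject = BlobSubject("logs-2024", "raw/app1/events.json");

// A container-level prefix can span several containers (startswith semantics).
Console.WriteLine(MatchesFilter(subject, "/blobServices/default/containers/logs-", ".json", true));

// A folder-level prefix with a suffix that differs only in case.
var folderPrefix = "/blobServices/default/containers/logs-2024/blobs/raw/";
Console.WriteLine(MatchesFilter(subject, folderPrefix, ".JSON", true));   // case-sensitive comparison
Console.WriteLine(MatchesFilter(subject, folderPrefix, ".JSON", false));  // case-insensitive comparison

// Hypothetical helper: builds the subject of a Blob storage event.
static string BlobSubject(string container, string blobPath) =>
    $"/blobServices/default/containers/{container}/blobs/{blobPath}";

// Hypothetical helper: literal prefix and suffix match, no wildcards.
static bool MatchesFilter(string subject, string prefix, string suffix, bool caseSensitive)
{
    var comparison = caseSensitive
        ? StringComparison.Ordinal
        : StringComparison.OrdinalIgnoreCase;
    return subject.StartsWith(prefix, comparison) && subject.EndsWith(suffix, comparison);
}
```

In this sketch the first and third checks match, while the second does not because the suffix comparison is case-sensitive.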


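Optionally, you can specify the Data routing settings according to the following information. You don't have to specify every setting; a partial set is also accepted.

| Setting | Suggested value | Field description |
| --- | --- | --- |
| Allow routing the data to other databases (Multi database data connection) | Don't allow | Turn on this option if you want to override the default target database associated with the data connection. For more information about database routing, see Events routing. |
| Table name | TestTable | The table that you created in TestDatabase. |
| Data format | JSON | Supported formats are APACHEAVRO, Avro, CSV, JSON, ORC, PARQUET, PSV, RAW, SCSV, SOHSV, TSV, TSVE, TXT, and W3CLOG. Supported compression options are zip and gzip. |
| Mapping name | TestTable_mapping | The mapping that you created in TestDatabase, which maps incoming data to the column names and data types of TestTable. If no mapping is specified, an identity data mapping derived from the table's schema is generated automatically. |
| Ignore format errors | Ignore | Turn on this option if you want to ignore format errors in JSON data. |

Note

Table and mapping names are case-sensitive.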

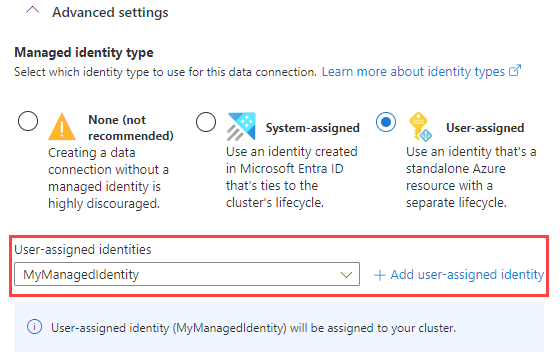

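Optionally, under Advanced settings, you can specify the Managed identity type that the data connection uses. By default, System-assigned is selected.

If you select User-assigned, you need to assign a managed identity manually. If you select an identity that isn't yet assigned to your cluster, it's assigned automatically. For more information, see Configure managed identities for your Azure Data Explorer cluster.

If you select None, the storage account and the event hub are authenticated through connection strings. This method isn't recommended.

Select Create.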

Use the Event Grid data connection

This section shows how to trigger ingestion from Azure Blob Storage or Azure Data Lake Gen 2 into your cluster after a blob is created or renamed.


The following code sample uses the Azure Blob Storage SDK to upload a file to Azure Blob Storage. The upload triggers the Event Grid data connection, which ingests the data into Azure Data Explorer.

```csharp
using System.Collections.Generic;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;

// Replace the placeholder values with your own.
var azureStorageAccountConnectionString = "<storage_account_connection_string>";
var containerName = "<container_name>";
var blobName = "<blob_name>";
var localFileName = "<file_to_upload>";
var uncompressedSizeInBytes = "<uncompressed_size_in_bytes>";
var mapping = "<mapping_reference>";

// Create the container if it doesn't already exist.
var azureStorageAccount = new BlobServiceClient(azureStorageAccountConnectionString);
var container = azureStorageAccount.GetBlobContainerClient(containerName);
container.CreateIfNotExists();

// Define the blob metadata and the upload options. Metadata values are strings.
IDictionary<string, string> metadata = new Dictionary<string, string>();
metadata.Add("rawSizeBytes", uncompressedSizeInBytes);
metadata.Add("kustoIngestionMappingReference", mapping);
var uploadOptions = new BlobUploadOptions
{
    Metadata = metadata,
};

// Upload the file.
var blob = container.GetBlobClient(blobName);
blob.Upload(localFileName, uploadOptions);
```
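The rawSizeBytes metadata value is the uncompressed size of the data. When the file to upload is gzip-compressed, one possible way to obtain that number is to decompress the stream and count the bytes, as in the following minimal sketch. `GetUncompressedSize` is a hypothetical helper, not part of any Azure SDK, and the in-memory 1000-byte payload is made up for illustration.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Build a small gzip payload in memory (illustrative data only).
var original = new byte[1000];
using var compressed = new MemoryStream();
using (var gz = new GZipStream(compressed, CompressionMode.Compress, leaveOpen: true))
{
    gz.Write(original, 0, original.Length);
}
compressed.Position = 0;

// The result, converted to a string, is what would go into the rawSizeBytes metadata entry.
long rawSizeBytes = GetUncompressedSize(compressed);
Console.WriteLine(rawSizeBytes); // prints 1000

// Hypothetical helper: counts the bytes that a gzip stream expands to.
static long GetUncompressedSize(Stream gzipData)
{
    using var gzip = new GZipStream(gzipData, CompressionMode.Decompress, leaveOpen: true);
    var buffer = new byte[81920];
    long total = 0;
    int read;
    while ((read = gzip.Read(buffer, 0, buffer.Length)) > 0)
    {
        total += read;
    }
    return total;
}
```

Decompressing a large file only to measure it can be costly, so recording the size when the file is first compressed may be preferable where that is possible.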

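Note

Azure Data Explorer doesn't delete the blobs after ingestion. Retain the blobs for three to five days, and use Azure Blob Storage lifecycle management to handle blob deletion.

Note

Triggering ingestion after a CopyBlob operation isn't supported for storage accounts that have the hierarchical namespace feature enabled.

Important

We strongly advise against generating storage events from custom code and sending them to Event Hubs. If you choose to do so, make sure that the events you produce strictly follow the relevant storage event schema and JSON format specifications.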

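Remove an Event Grid data connection

To remove the Event Grid connection in the Azure portal, follow these steps:

- Go to your cluster. From the left menu, select Databases. Then select the database that contains the target table.

- From the left menu, select Data connections. Then select the checkbox next to the relevant Event Grid data connection.

- From the top menu bar, select Delete.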