适用于: Azure 数据工厂

Azure 数据工厂  Azure Synapse Analytics

Azure Synapse Analytics

If 条件活动可提供 if 语句在编程语言中提供的相同功能。 当条件计算结果为 true 时,它会执行一组活动;当条件计算结果为 false 时,它会执行另一组活动。

使用 UI 创建 If 条件活动

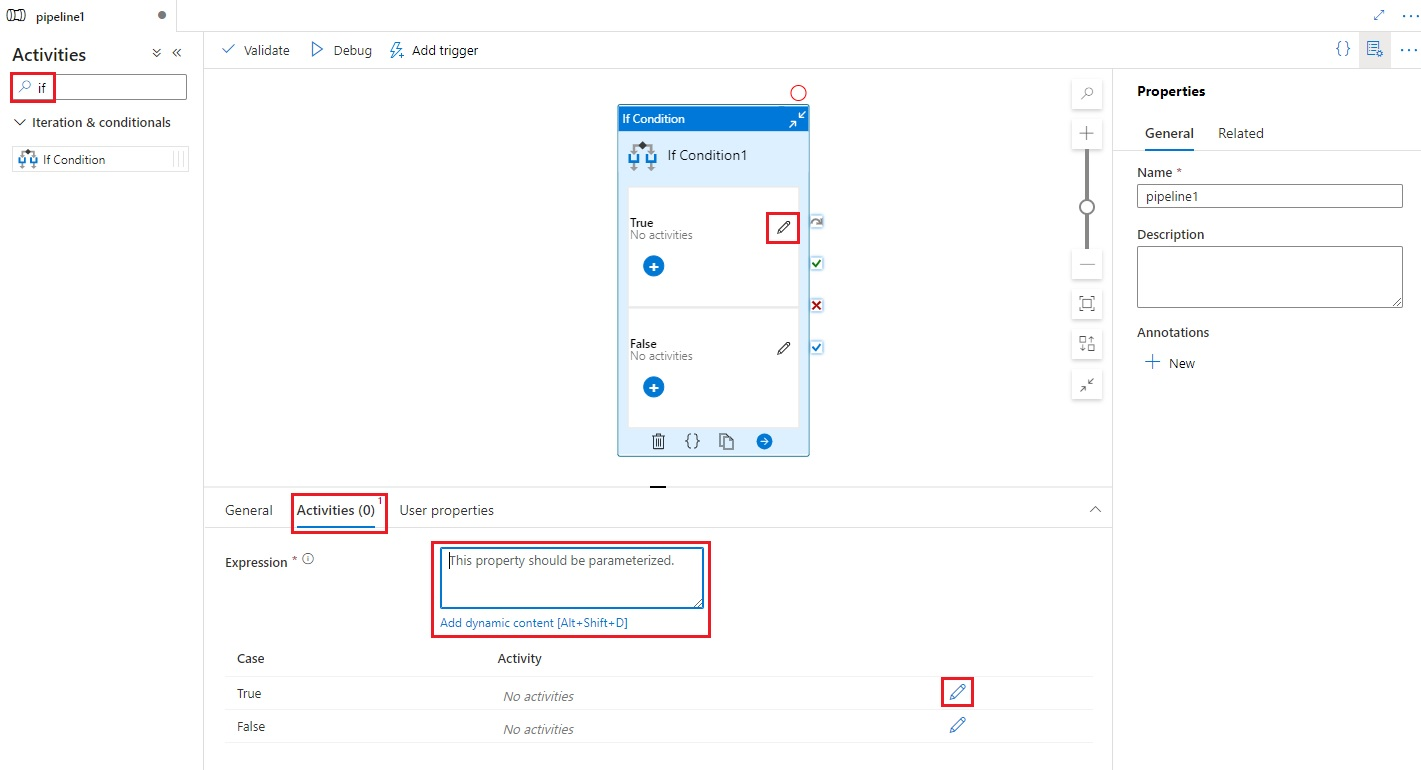

若要在管道中使用 If 条件活动,请完成以下步骤:

在“管道活动”窗格中搜索“If”,然后将 If 条件活动拖到管道画布上。

如果尚未选择画布上的新 If 条件活动,请选择该活动及其“活动”选项卡,以编辑其详细信息。

在 If 条件的“活动”选项卡上选择“编辑活动”按钮,或直接从管道画布上的 If 条件中选择该按钮,以添加当表达式的计算结果为

true或false时将执行的活动。

语法

{

"name": "<Name of the activity>",

"type": "IfCondition",

"typeProperties": {

"expression": {

"value": "<expression that evaluates to true or false>",

"type": "Expression"

},

"ifTrueActivities": [

{

"<Activity 1 definition>"

},

{

"<Activity 2 definition>"

},

{

"<Activity N definition>"

}

],

"ifFalseActivities": [

{

"<Activity 1 definition>"

},

{

"<Activity 2 definition>"

},

{

"<Activity N definition>"

}

]

}

}

类型属性

| 属性 | 说明 | 允许的值 | 必需 |

|---|---|---|---|

| name | if-condition 活动名称。 | 字符串 | 是 |

| 类型 | 必须设置为IfCondition | 字符串 | 是 |

| 表达式 | 计算结果必须为 true 或 false 的表达式 | 具有结果类型布尔的表达式 | 是 |

| ifTrueActivities | 表达式计算结果为 true 时将执行的活动集。 |

数组 | 是 |

| ifFalseActivities | 表达式计算结果为 false 时将执行的活动集。 |

数组 | 是 |

示例

此示例中的管道可将数据从输入文件夹复制到一个输出文件夹。 输出文件夹由管道参数的值决定:routeSelection。 如果 routeSelection 的值为 true,数据将复制到 outputPath1。 而如果 routeSelection 的值为 false,数据将复制到 outputPath2。

注意

本部分提供运行管道的 JSON 定义和示例 PowerShell 命令。 有关使用 Azure PowerShell 和 JSON 定义创建管道的分步说明演练,请参阅教程:使用 Azure PowerShell 创建数据工厂。

管道与 IF-Condition 活动 (Adfv2QuickStartPipeline.json)

{

"name": "Adfv2QuickStartPipeline",

"properties": {

"activities": [

{

"name": "MyIfCondition",

"type": "IfCondition",

"typeProperties": {

"expression": {

"value": "@bool(pipeline().parameters.routeSelection)",

"type": "Expression"

},

"ifTrueActivities": [

{

"name": "CopyFromBlobToBlob1",

"type": "Copy",

"inputs": [

{

"referenceName": "BlobDataset",

"parameters": {

"path": "@pipeline().parameters.inputPath"

},

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "BlobDataset",

"parameters": {

"path": "@pipeline().parameters.outputPath1"

},

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "BlobSource"

},

"sink": {

"type": "BlobSink"

}

}

}

],

"ifFalseActivities": [

{

"name": "CopyFromBlobToBlob2",

"type": "Copy",

"inputs": [

{

"referenceName": "BlobDataset",

"parameters": {

"path": "@pipeline().parameters.inputPath"

},

"type": "DatasetReference"

}

],

"outputs": [

{

"referenceName": "BlobDataset",

"parameters": {

"path": "@pipeline().parameters.outputPath2"

},

"type": "DatasetReference"

}

],

"typeProperties": {

"source": {

"type": "BlobSource"

},

"sink": {

"type": "BlobSink"

}

}

}

]

}

}

],

"parameters": {

"inputPath": {

"type": "String"

},

"outputPath1": {

"type": "String"

},

"outputPath2": {

"type": "String"

},

"routeSelection": {

"type": "String"

}

}

}

}

表达式的另一个示例是:

"expression": {

"value": "@equals(pipeline().parameters.routeSelection,1)",

"type": "Expression"

}

Azure 存储链接服务 (AzureStorageLinkedService.json)

{

"name": "AzureStorageLinkedService",

"properties": {

"type": "AzureStorage",

"typeProperties": {

"connectionString": "DefaultEndpointsProtocol=https;AccountName=<Azure Storage account name>;AccountKey=<Azure Storage account key>;EndpointSuffix=core.chinacloudapi.cn",

}

}

}

参数化的 Azure Blob 数据集 (BlobDataset.json)

管道将 folderPath 设置为管道参数 outputPath1 或 outputPath2 的值。

{

"name": "BlobDataset",

"properties": {

"type": "AzureBlob",

"typeProperties": {

"folderPath": {

"value": "@{dataset().path}",

"type": "Expression"

}

},

"linkedServiceName": {

"referenceName": "AzureStorageLinkedService",

"type": "LinkedServiceReference"

},

"parameters": {

"path": {

"type": "String"

}

}

}

}

管道参数 JSON (PipelineParameters.json)

{

"inputPath": "adftutorial/input",

"outputPath1": "adftutorial/outputIf",

"outputPath2": "adftutorial/outputElse",

"routeSelection": "false"

}

PowerShell 命令

注意

建议使用 Azure Az PowerShell 模块与 Azure 交互。 请参阅安装 Azure PowerShell 以开始使用。 若要了解如何迁移到 Az PowerShell 模块,请参阅 将 Azure PowerShell 从 AzureRM 迁移到 Az。

这些命令假设你已将 JSON 文件保存到文件夹 C:\ADF。

Connect-AzAccount -Environment AzureChinaCloud

Select-AzSubscription "<Your subscription name>"

$resourceGroupName = "<Resource Group Name>"

$dataFactoryName = "<Data Factory Name. Must be globally unique>";

Remove-AzDataFactoryV2 $dataFactoryName -ResourceGroupName $resourceGroupName -force

Set-AzDataFactoryV2 -ResourceGroupName $resourceGroupName -Location "China East 2" -Name $dataFactoryName

Set-AzDataFactoryV2LinkedService -DataFactoryName $dataFactoryName -ResourceGroupName $resourceGroupName -Name "AzureStorageLinkedService" -DefinitionFile "C:\ADF\AzureStorageLinkedService.json"

Set-AzDataFactoryV2Dataset -DataFactoryName $dataFactoryName -ResourceGroupName $resourceGroupName -Name "BlobDataset" -DefinitionFile "C:\ADF\BlobDataset.json"

Set-AzDataFactoryV2Pipeline -DataFactoryName $dataFactoryName -ResourceGroupName $resourceGroupName -Name "Adfv2QuickStartPipeline" -DefinitionFile "C:\ADF\Adfv2QuickStartPipeline.json"

$runId = Invoke-AzDataFactoryV2Pipeline -DataFactoryName $dataFactoryName -ResourceGroupName $resourceGroupName -PipelineName "Adfv2QuickStartPipeline" -ParameterFile C:\ADF\PipelineParameters.json

while ($True) {

$run = Get-AzDataFactoryV2PipelineRun -ResourceGroupName $resourceGroupName -DataFactoryName $DataFactoryName -PipelineRunId $runId

if ($run) {

if ($run.Status -ne 'InProgress') {

Write-Host "Pipeline run finished. The status is: " $run.Status -foregroundcolor "Yellow"

$run

break

}

Write-Host "Pipeline is running...status: InProgress" -foregroundcolor "Yellow"

}

Start-Sleep -Seconds 30

}

Write-Host "Activity run details:" -foregroundcolor "Yellow"

$result = Get-AzDataFactoryV2ActivityRun -DataFactoryName $dataFactoryName -ResourceGroupName $resourceGroupName -PipelineRunId $runId -RunStartedAfter (Get-Date).AddMinutes(-30) -RunStartedBefore (Get-Date).AddMinutes(30)

$result

Write-Host "Activity 'Output' section:" -foregroundcolor "Yellow"

$result.Output -join "`r`n"

Write-Host "\nActivity 'Error' section:" -foregroundcolor "Yellow"

$result.Error -join "`r`n"

相关内容

参阅支持的其他控制流活动: