HDInsight 群集生成各种日志文件。 例如,Apache Hadoop 和相关服务(如 Apache Spark)会生成详细的作业执行日志。 日志文件管理是维护正常运行的 HDInsight 群集的一部分。 日志存档还可以有法规要求。 由于日志文件的数量和大小,优化日志存储和存档有助于服务成本管理。

管理 HDInsight 群集日志包括保留有关群集环境的各个方面的信息。 此信息包括所有关联的 Azure 服务日志、群集配置、作业执行信息、任何错误状态和其他数据。

HDInsight 日志管理中的典型步骤包括:

- 步骤 1:确定日志保留策略

- 步骤 2:管理群集服务版本配置日志

- 步骤 3:管理群集作业执行日志文件

- 步骤 4:预测日志卷存储大小和成本

- 步骤 5:确定日志存档策略和进程

步骤 1:确定日志保留策略

创建 HDInsight 群集日志管理策略的第一步是收集有关业务方案和作业执行历史记录存储要求的信息。

集群详细信息

以下群集详细信息有助于收集日志管理策略中的信息。 从在特定的 Azure 帐户中创建的所有 HDInsight 群集中收集此信息。

- 群集名称

- 群集区域和 Azure 可用性区域

- 群集状态,包括上次状态更改的详细信息

- 为主节点、核心节点和任务节点指定的 HDInsight 实例的类型和数量

可以使用 Azure 门户获取大部分此顶级信息。 或者,可以使用 Azure CLI 获取有关 HDInsight 群集的信息:

az hdinsight list --resource-group <ResourceGroup>

az hdinsight show --resource-group <ResourceGroup> --name <ClusterName>

还可以使用 PowerShell 查看此信息。 有关详细信息,请参阅 使用 Azure PowerShell 在 HDInsight 中管理 Hadoop 群集。

了解群集上运行的工作负荷

请务必了解 HDInsight 群集上运行的工作负荷类型,以便为每个类型设计适当的日志记录策略。

- 工作负荷试验性(如开发或测试)还是生产质量?

- 生产质量工作负载通常运行的频率是多少?

- 是否有任何任务资源密集或长时间执行?

- 任何工作负荷是否都使用生成多种类型的日志的复杂 Hadoop 服务集?

- 是否有任何工作负荷具有相关的法规执行世系要求?

日志保留模式和做法示例

请考虑通过将标识符添加到每个日志条目或其他技术来维护数据世系跟踪。 这样,就可以跟踪数据的原始源和操作,并在每个阶段跟踪数据以了解其一致性和有效性。

考虑如何从群集或多个群集收集日志,并出于审核、监视、规划和警报等目的对其进行整理。 可以使用自定义解决方案定期访问和下载日志文件,并合并和分析它们以提供仪表板显示。 还可以添加其他功能来提醒安全或故障检测。 可以使用 PowerShell、HDInsight SDK 或访问 Azure 经典部署模型的代码生成这些实用工具。

考虑监视解决方案或服务是否是一个有用的好处。 Microsoft System Center 提供 HDInsight 管理包

https://systemcenter.wiki/?Get_ManagementPackBundle=Microsoft.HDInsight.mpb&FileMD5=10C7D975C6096FFAA22C84626D211259。 还可以使用 Apache Chukwa 和 Ganglia 等第三方工具收集和集中日志。 许多公司提供服务来监视基于 Hadoop 的大数据解决方案,例如:CenterityCompuware APM、Sematext SPM 和 Zettaset Orchestrator。

步骤 2:管理群集服务版本并查看日志

典型的 HDInsight 群集使用多个服务和开源软件包(例如 Apache HBase、Apache Spark 等)。 对于某些工作负荷(如生物信息学),除了作业执行日志之外,可能需要保留服务配置日志历史记录。

使用 Ambari UI 查看群集配置设置

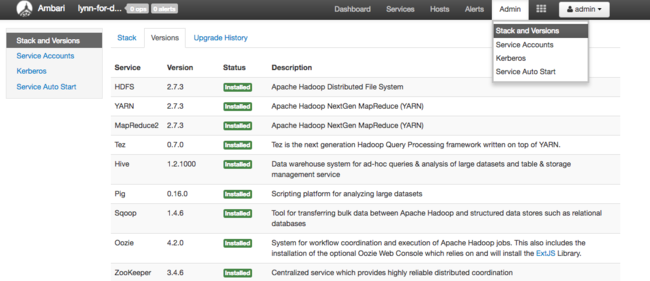

Apache Ambari 通过提供 Web UI 和 REST API 简化了 HDInsight 群集的管理、配置和监视。 Ambari 包含在基于 Linux 的 HDInsight 群集上。 选择 Azure 门户 HDInsight 页上的 “群集仪表板 ”窗格,打开 “群集仪表板 ”链接页。 接下来,选择 HDInsight 群集仪表板 窗格以打开 Ambari UI。 系统会提示输入群集登录凭据。

若要打开服务视图列表,请选择 HDInsight 的 Azure 门户页上的 Ambari 视图 窗格。 此列表因已安装的库而异。 例如,你可能会看到 YARN 队列管理器、Hive 视图和 Tez 视图。 选择任何服务链接以查看配置和服务信息。 Ambari UI 堆栈和版本 页提供有关群集服务的配置和服务版本历史记录的信息。 若要导航到 Ambari UI 的此部分,请选择 “管理 ”菜单,然后选择 “堆栈”和“版本”。 选择“ 版本 ”选项卡以查看服务版本信息。

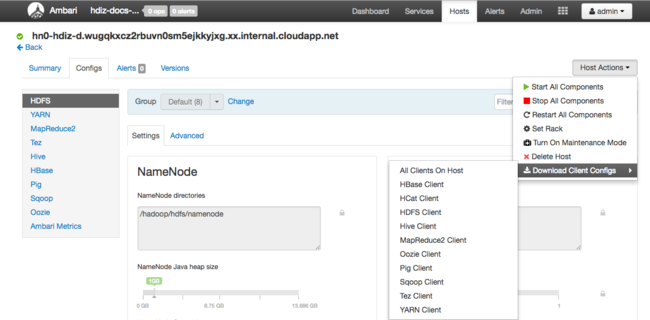

使用 Ambari UI,可以下载群集中特定主机(或节点)上运行的任何(或所有)服务的配置。 选择“ 主机 ”菜单,然后选择感兴趣的主机的链接。 在该主机的页面上,选择“ 主机作 ”按钮,然后选择 “下载客户端配置”。

查看脚本作日志

HDInsight 脚本操作 在群集上手动运行脚本或在指定时运行脚本。 例如,脚本作可用于在群集上安装其他软件,或者更改默认值中的配置设置。 脚本作日志可以深入了解在设置群集期间发生的错误,以及配置设置的更改,这些更改可能会影响群集性能和可用性。 若要查看脚本操作的状态,请选择 Ambari UI 上的 运维 按钮,或访问默认存储帐户中的状态日志。 存储日志可以查看在 /STORAGE_ACCOUNT_NAME/DEFAULT_CONTAINER_NAME/custom-scriptaction-logs/CLUSTER_NAME/DATE.

查看 Ambari 警报状态日志

Apache Ambari 将警报状态更改写入到 ambari-alerts.log. 完整路径为 /var/log/ambari-server/ambari-alerts.log。 若要为日志启用调试,请在 /etc/ambari-server/conf/log4j.properties. 中更改属性,然后更改 # Log alert state changes 下的条目:

log4j.logger.alerts=INFO,alerts

to

log4j.logger.alerts=DEBUG,alerts

步骤 3:管理群集作业执行日志文件

下一步是查看各种服务的作业执行日志文件。 服务可能包括 Apache HBase、Apache Spark 和其他许多服务。 Hadoop 群集生成大量详细日志,因此确定哪些日志非常有用(哪些日志不是)可能非常耗时。 了解日志记录系统对于日志文件的定向管理非常重要。 下图是一个示例日志文件。

访问 Hadoop 日志文件

HDInsight 将其日志文件存储在群集文件系统和 Azure 存储中。 可以通过打开与群集的 SSH 连接并浏览文件系统,或使用远程头节点服务器上的 Hadoop YARN 状态门户来检查群集中的日志文件。 可以使用任何可从 Azure 存储访问和下载数据的工具检查 Azure 存储中的日志文件。 示例包括 AzCopy、 CloudXplorer 和 Visual Studio Server Explorer。 还可以使用 PowerShell 和 Azure 存储客户端库或 Azure .NET SDK 访问 Azure Blob 存储中的数据。

Hadoop 在群集中的各个节点上将作业作为任务尝试来运行。 HDInsight 可以启动推理任务尝试,终止未首先完成的任何其他任务尝试。 这会即时生成记录到控制器、stderr 和 syslog 日志文件的重要活动。 此外,多个任务尝试同时运行,但日志文件只能线性显示结果。

写入 Azure Blob 存储的 HDInsight 日志

HDInsight 群集配置为使用 Azure PowerShell cmdlet 或 .NET 作业提交 API 提交的任何作业将任务日志写入 Azure Blob 存储帐户。 如果通过 SSH 将作业提交到群集,则执行日志记录信息将存储在 Azure 表中,如上一部分所述。

除了 HDInsight 生成的核心日志文件外,已安装的服务(如 YARN)还会生成作业执行日志文件。 日志文件的数量和类型取决于安装的服务。 常见服务是 Apache HBase、Apache Spark 等。 调查每个服务的作业日志执行文件,以了解群集上可用的总体日志记录文件。 每个服务都有自己的日志记录方法和位置,用于存储日志文件。 例如,以下部分讨论了访问最常见的服务日志文件(来自 YARN)的详细信息。

YARN 生成的 HDInsight 日志

YARN 跨工作器节点上的所有容器聚合日志,并将这些日志存储为每个工作器节点的一个聚合日志文件。 应用程序完成后,该日志存储在默认文件系统上。 应用程序可以使用数百或数千个容器,但单个工作节点上运行的所有容器的日志始终聚合到单个文件。 每个工作器节点只有一个日志可供应用程序使用。 默认情况下,日志聚合在 HDInsight 群集 3.0 及更高版本上启用。 聚合日志位于群集的默认存储中。

/app-logs/<user>/logs/<applicationId>

聚合日志不可直接读取,因为它们以容器索引的二进制格式编写 TFile 。 使用 YARN ResourceManager 日志或 CLI 工具将这些日志视为感兴趣的应用程序或容器的纯文本。

YARN CLI 工具

若要使用 YARN CLI 工具,必须先使用 SSH 连接到 HDInsight 群集。 运行这些命令时,请分别指定<applicationId>、<user-who-started-the-application>、<containerId>和<worker-node-address>信息。 可以使用以下命令之一以纯文本形式查看日志:

yarn logs -applicationId <applicationId> -appOwner <user-who-started-the-application>

yarn logs -applicationId <applicationId> -appOwner <user-who-started-the-application> -containerId <containerId> -nodeAddress <worker-node-address>

YARN 资源管理器 UI

YARN 资源管理器 UI 在群集头节点上运行,并通过 Ambari Web UI 访问。 使用以下步骤查看 YARN 日志:

- 在 Web 浏览器中导航到

https://CLUSTERNAME.azurehdinsight.cn。 将 CLUSTERNAME 替换为 HDInsight 群集的名称。 - 从左侧的服务列表中,选择 YARN。

- 从“快速链接”下拉列表中,选择其中一个群集头节点,然后选择 Resource Manager 日志。 你会看到一系列指向 YARN 日志的链接。

步骤 4:预测日志卷存储大小和成本

完成上述步骤后,您已了解 HDInsight 集群正在生成的日志文件的类型和数量。

接下来,分析一段时间内密钥日志存储位置中的日志数据量。 例如,可以分析在 30、60 和 90 天周期内的数据量和增长。 在电子表格中记录此信息或使用 Visual Studio、Azure 存储资源管理器或 Power Query for Excel 等其他工具。

现在,你有足够的信息来创建密钥日志的日志管理策略。 使用电子表格(或所选工具)预测日志大小增长和日志存储 Azure 服务成本。 另请考虑检查的日志集的任何日志保留要求。 现在,可以在确定哪些日志文件可以删除(如果有)以及哪些日志应保留并归档到更便宜的 Azure 存储之后,重新预测未来的日志存储成本。

步骤 5:确定日志存档策略和进程

确定可以删除哪些日志文件后,可以调整许多 Hadoop 服务的日志记录参数,以便在指定时间段后自动删除日志文件。

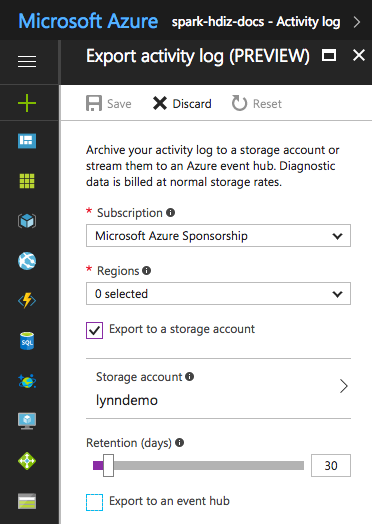

对于某些日志文件,可以使用价格较低的日志文件存档方法。 对于 Azure 资源管理器活动日志,可以使用 Azure 门户浏览此方法。 通过在 Azure 门户中为 HDInsight 实例选择 活动日志 链接来设置资源管理器日志的存档。 在“活动日志搜索”页顶部,选择“ 导出 ”菜单项以打开 “导出活动日志 ”窗格。 填写订阅、区域、是否导出到存储帐户以及保留日志的天数。 在此同一窗格中,还可以指示是否导出到事件中心。

或者,可以使用 PowerShell 编写日志存档脚本。

访问 Azure 存储指标

可将 Azure 存储配置为记录存储操作和访问。 可以使用这些详细日志进行容量监视和规划,以及审核对存储的请求。 记录的信息包括延迟详细信息,使你能够监视和微调解决方案的性能。 可以使用适用于 Hadoop 的 .NET SDK 检查为 Azure 存储生成的日志文件,用于保存 HDInsight 群集的数据。

控制旧日志文件的备份索引的大小和数量

若要控制保留的日志文件的大小和数量,请设置以下属性 RollingFileAppender:

-

maxFileSize是文件的关键大小,该文件是滚动的。 默认值为 10 MB。 -

maxBackupIndex指定要创建的备份文件数,默认值 1。

其他日志管理技术

为了避免磁盘空间不足,可以使用某些 OS 工具(如 logrotate )来管理日志文件。 可以配置为 logrotate 每天运行,压缩日志文件并删除旧日志文件。 你的方法取决于你的要求,例如在本地节点上保留日志文件的时间。

还可以检查是否为一个或多个服务启用了 DEBUG 日志记录,这大大增加了输出日志大小。

要将日志从所有节点收集到一个中心位置,可以创建数据流,例如将所有日志条目引入 Solr。