与 HDInsight 3.6 相比,HDInsight 4.0 具有几项更为显著的优势。 下面将概述 HDInsight 4.0 中的新增功能。

本文介绍了将 Hive 工作负载从 HDInsight 3.6 迁移到 4.0 的步骤,包括

- Hive 元存储复制和架构升级

- 安全迁移以与 ACID 兼容

- 保留 Hive 安全策略

新旧 HDInsight 群集必须有权访问相同的存储帐户。

将 Hive 表迁移到新的存储帐户需要作为一个单独的步骤进行。 请参阅跨存储帐户迁移 Hive。

Hive 3 中的更改和新增功能:

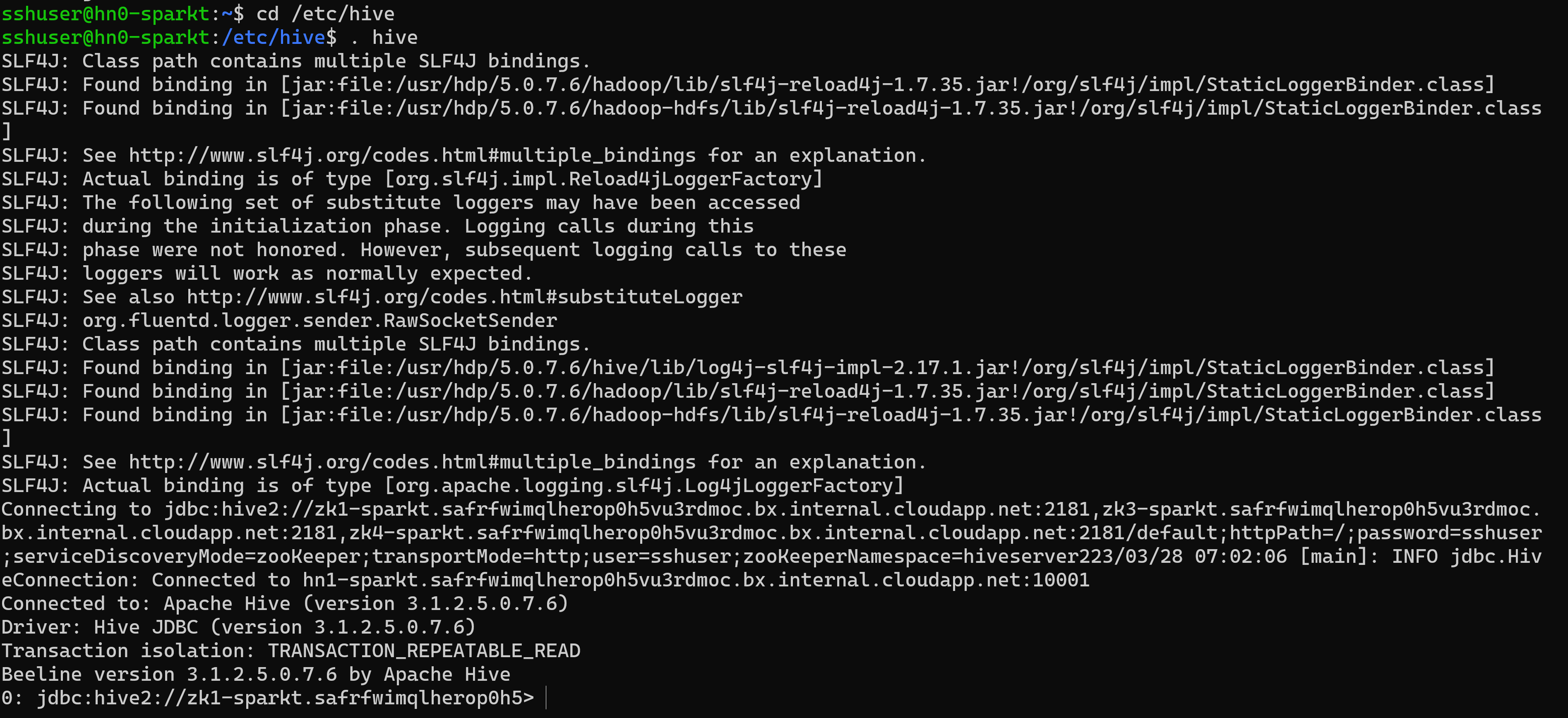

Hive 客户端更改

Hive 3 仅支持瘦客户端、用于运行查询的 Beeline 和来自命令行的 Hive 管理命令。 Beeline 使用与 HiveServer 的 JDBC 连接来执行所有命令。 分析、编译和执行操作在 HiveServer 中发生。

以 Hive 用户身份使用 Hive 关键字调用 Beeline 或使用 beeline -u <JDBC URL> 调用 Beeline,可以输入支持的 Hive CLI 命令。 可以从 Ambari Hive 页面获取 JDBC URL。

使用 Beeline(而不是不再受支持的胖客户端 Hive CLI)有几个优点,包括:

- 只能维护 JDBC 客户端,而不是维护整个 Hive 代码库。

- 使用 Beeline 可降低启动开销,因为不涉及整个 Hive 代码库。

还可以执行位于目录“/usr/bin”下的 Hive 脚本,该脚本使用 JDBC URL 调用 Beeline 连接。

瘦客户端体系结构有助于保护以下位置的数据

- 会话状态、内部数据结构、密码等驻留在客户端而不是服务器上。

- 执行查询所需的少量守护程序简化了监视和调试。

HiveServer 强制实施允许列表和阻止列表设置,你可以使用 SET 命令更改这些设置。 使用阻止列表,可以限制内存配置,以防止 Hive 服务器不稳定。 可以使用不同的允许列表和阻止列表配置多个 HiveServer 实例,以建立不同级别的稳定性。

Hive 元存储更改

Hive 现在仅支持远程元存储,而不是嵌入式元存储(HS2 JVM 中)。 Hive 元存储驻留在 Ambari 作为 HDInsight 堆栈的一部分管理的群集中的节点上。 不支持群集外部的独立服务器。 不再在命令行上设置 key=value 命令来配置 Hive 元存储。 基于“hive.metastore.uris=' ''”中配置的值,使用了 HMS 服务并建立了连接。

执行引擎更改

Apache Tez 取代 MapReduce 作为默认 Hive 执行引擎。 从 Hive 2.0 开始,MapReduce 已弃用,请参阅 HIVE-12300。 使用有向无环图 (DAG) 和数据传输基元的表达式,在 Tez 下执行 Hive 查询可以提高性能。 提交到 Hive 的 SQL 查询如下所示执行

- Hive 编译查询。

- Tez 执行查询。

- YARN 跨群集为应用程序分配资源,并为 YARN 队列中的 Hive 作业启用授权。

- Hive 更新 ABFS 或 WASB 中的数据。

- Hive 通过 JDBC 连接返回查询结果。

如果旧脚本或应用程序指定执行 MapReduce,则发生异常,如下所示

注意

大多数用户定义的函数 (UDF) 不需要更改就可以在 Tez 而不是 MapReduce 上执行。

与 ACID 事务和 CBO 相关的更改:

ACID 表是 HDInsight 4.x 中的默认表类型,没有性能或操作重载。

简化的应用程序开发,具有更强大事务保证的操作,以及 SQL 命令的更简单语义

Hive 内部负责处理 HDInsight 4.1 中 ACID 表的分桶,从而消除维护开销。

高级优化 - CBO 中的升级

自动查询缓存。 用于启用查询缓存的属性为

hive.query.results.cache.enabled。 需要将此属性设置为 true。 Hive 将查询结果缓存存储在/tmp/hive/__resultcache__/.中。默认情况下,Hive 为查询结果缓存分配 2 GB。 可以通过配置以下参数(以字节为单位)来更改此设置:hive.query.results.cache.max.size。有关详细信息,请参阅迁移到 Azure HDInsight 4.0 的好处。

具体化视图重写

有关详细信息,请参阅 Hive - 具体化视图

升级到 Apache Hive 3 后的更改

若要在升级后查找并使用 Apache Hive 3 表,需要了解升级过程中发生的更改。 更改表的管理和位置、对表目录的权限、表类型和 ACID 合规性问题。

表的 Hive 管理

Hive 3 比 Hive 2 对表的控制更多,并且要求托管表遵守严格的定义。 Hive 接管表的控制级别与传统数据库相同。 Hive 可自行了解数据的增量更改;此控制框架可增强性能。

例如,如果 Hive 知道解析查询不需要扫描表中的新数据,Hive 将从 Hive 查询结果缓存返回结果。 当具体化视图中的基础数据发生更改时,Hive 需要重新生成具体化视图。 ACID 属性准确地显示哪些行发生了更改,哪些行需要处理并添加到具体化视图中。

Hive 对 ACID 属性的更改

Hive 2.x 和 3.x 具有事务(托管)和非事务(外部)表。 事务表具有原子、一致、隔离和持久 (ACID) 属性。 在 Hive 2.x 中,ACID 事务处理的初始版本为 ACID v1。 在 Hive 3.x 中,默认表将使用 ACID v2。

本机和非本机存储格式

存储格式是表类型升级更改的一个因素。 Hive 2.x 和 3.x 支持以下 Hadoop 本机和非本机存储格式

本机:Hive 中具有内置支持的表,采用以下文件格式

- 文本

- 序列文件

- RC 文件

- AVRO 文件

- ORC 文件

- Parquet 文件

非本机:使用存储处理程序的表,例如 DruidStorageHandler 或 HBaseStorageHandler

HDInsight 4.x 将更改升级到表类型

下表比较了从 HDInsight 3.x 升级之前和升级到 HDInsight 4.x 之后的 Hive 表类型和 ACID 操作。 Hive 表文件的所有权是确定升级后的表类型和 ACID 操作的一个因素

HDInsight 3.x 和 HDInsight 4.x 表类型比较

| HDInsight 3.x | - | - | - | HDInsight 4.x | - |

|---|---|---|---|---|---|

| 表类型 | ACID v1 | 格式 | Hive 表文件的所有者(用户) | 表类型 | ACID v2 |

| 外部 | 否 | 本机或非本机 | Hive 或非 Hive | 外部 | 否 |

| 托管 | 是 | ORC | Hive 或非 Hive | 托管、可更新 | 是 |

| 托管 | 否 | ORC | Hive | 托管、可更新 | 是 |

| 托管 | 否 | ORC | 非 Hive | 外部,删除数据 | 是 |

| 托管 | 否 | 本机(但非 ORC) | Hive | 托管,仅插入 | 是 |

| 托管 | 否 | 本机(但非 ORC) | 非 Hive | 外部,删除数据 | 否 |

| 托管 | 否 | 非本机 | Hive 或非 Hive | 外部,删除数据 | 否 |

Hive 模拟

Hive 2 (doAs=true) 默认启用 Hive 模拟,在 Hive 3 中默认禁用。 Hive 模拟是否以最终用户身份运行 Hive。

其他 HDInsight 4.x 升级更改

- 不属于 Hive 用户的托管 ACID 表在升级后仍保留托管表,但 Hive 将成为所有者。

- 升级后,Hive 表的格式与升级前的格式相同。 例如,本机表或非本机表分别保持本机或非本机状态。

位置更改

升级后,托管表或分区的位置在以下任一条件下都不会更改:

- 升级前,旧表或分区目录不在默认位置 /apps/hive/warehouse 中。

- 旧表或分区与新仓库目录位于不同的文件系统中。

- 旧表或分区目录与新仓库目录位于不同的加密区域中。

否则,托管表或分区的位置会更改。 升级过程会将托管文件移动到 /hive/warehouse/managed。 默认情况下,Hive 将你在 HDInsight 4.x 中创建的任何新外部表置于 /hive/warehouse/external 中

/apps/hive directory 是 Hive 2.x 仓库以前的位置,可能存在也可能不存在于 HDInsight 4.x 中

以下方案适用于位置更改

方案 1

如果该表是 HDInsight-3.x 中的托管表,并且它存在于位置 /apps/hive/warehouse 中,并在 HDInsight-4.x 中转换为外部表,则该位置在 HDInsight 4.x 中也是相同的 /apps/hive/warehouse。 它不会改变任何位置。 执行此步骤后,如果执行 alter table 命令以将其转换为托管 (acid) 表,届时该表将存在于同一位置 /apps/hive/warehouse。

方案 2

如果该表是 HDInsight-3.x 中的托管表,并且该表位于位置 /apps/hive/warehouse 中,并已转换为 HDInsight 4.x 中的托管 (ACID) 表,则位置为 /hive/warehouse/managed。

方案 3 如果要在 HDInsight-4.x 中创建外部表,但不指定任何位置,则它存在于位置 /hive/warehouse/external 中。

表转换

升级后,若要将非事务表转换为 ACID v2 事务表,请使用 ALTER TABLE 命令并将表属性设置为

transaction'='true' and 'EXTERNAL'='false

- HDInsight-3.x 中的非 ACID、ORC 格式并且由非 Hive 用户拥有的托管表将转换为 HDInsight-4.x 中的外部非 ACID 表。

- 如果用户希望将外部表(非 ACID)更改为 ACID,则还应将外部表更改为托管和 ACID。 因为在 HDInsight-4.x 中,所有托管表默认严格为 ACID。 无法将外部表(非 ACID)转换为 ACID 表。

注意

该表必须是 ORC 表。

若要将外部表(非 ACID)转换为托管 (ACID) 表,

- 使用以下命令将外部表转换为托管表,并且 acid 等于 true:

alter table <table name> set TBLPROPERTIES ('EXTERNAL'='false', 'transactional'='true'); - 如果尝试对外部表执行以下命令,则会收到以下错误。

方案 1

考虑表 rt 是外部表(非 ACID)。 如果表是非 ORC 表,

alter table rt set TBLPROPERTIES ('transactional'='true');

ERROR : FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Unable to alter table. The table must be stored using an ACID compliant format (such as ORC): work.rt

The table must be ORC format

方案 2

>>>> alter table rt set TBLPROPERTIES ('transactional'='true'); If the table is ORC table.

ERROR:

Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Unable to alter table. work.rt can't be declared transactional because it's an external table (state=08S01,code=1)

发生此错误的原因是表 rt 是外部表,并且你无法将外部表转换为 ACID。

方案 3

>>>> alter table rt set TBLPROPERTIES ('EXTERNAL'='false');

ERROR:

Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. Unable to alter table. Table work.rt failed strict managed table checks due to the following reason: Table is marked as a managed table but isn't transactional. (state=08S01,code=1)

下面,我们尝试先将外部表更改为托管表。 在 HDInsight 4.x 中,它应该是严格管理的表(这意味着它应该是 ACID)。 所以,这里出现了僵局。 将外部表 (NON_ACID) 转换为托管 (ACID) 表的唯一方法是必须遵循以下命令:

alter table rt set TBLPROPERTIES ('EXTERNAL'='false', 'transactional'='true');

语法和语义

创建表 为了提高可用性和功能,Hive 3 更改了表创建。 Hive 已通过以下方式更改表创建

- 创建符合 ACID 的表,这是 HDP 中的默认设置

- 支持简单写入和插入

- 写入多个分区

- 在单个 SELECT 语句中插入多个数据更新

- 无需分桶。

如果有 ETL 管道在 Hive 中创建表,则表将创建为 ACID。 Hive 现在严格控制访问,并定期对表执行压缩

升级前 在 HDInsight 3.x 中,默认情况下,CREATE TABLE 创建非 ACID 表。

升级后 默认情况下,CREATE TABLE 以 ORC 格式创建完整的 ACID 事务表。

所需操作 若要从 Spark 访问 Hive ACID 表,请使用 Hive Warehouse Connector (HWC) 连接到 Hive。 若要从 Spark 将 ACID 表写入 Hive,请使用 HWC 和 HWC API

转义

db.table引用需要更改使用 db.table 引用的查询,以防止 Hive 将整个 db.table 字符串解释为表名。 Hive 3.x 在 SQL 查询中拒绝

db.table。 表名中不允许使用点 (.)。 应将数据库名称和表名括在反引号中。 查找具有有问题的表引用的表。 CREATE TABLE 语句中显示的math.students。 应将数据库名称和表名括在反引号中。TABLE `math`.`students` (name VARCHAR(64), age INT, gpa DECIMAL(3,2));强制转换时间戳 对于 Hive 2 和 Hive 3,将数值强制转换为时间戳的应用程序的结果是不同的。 Apache Hive 更改了 CAST 的行为,以符合 SQL 标准,该标准不会将时区与时间戳类型相关联。

升级前 将数值类型值强制转换为时间戳可用于生成反映群集时区的结果。 例如,1597217764557 为 2020-08-12 00:36:04 PDT。 运行以下查询会将数字转换为 PDT 格式的时间戳:

SELECT CAST(1597217764557 AS TIMESTAMP);| 2020-08-12 00:36:04 |升级后 将数值类型值强制转换为时间戳会生成反映 UTC 而不是群集时区的结果。 运行查询会将数值转换为 UTC 格式的时间戳。

SELECT CAST(1597217764557 AS TIMESTAMP);| 2020-08-12 07:36:04.557 |所需操作 更改应用程序。 请勿从数值强制转换以获取本地时区。 内置函数 from_utc_timestamp 和 to_utc_timestamp 可用于在升级之前模拟行为。

检查列更改的兼容性 默认配置更改可能导致更改列类型的应用程序失败。

升级前 在 HDInsight 3.x 中,Hive.metastore.disallow.incompatible.col.type.changes 默认为 false,以允许更改为不兼容的列类型。 例如,可以将 STRING 列更改为不兼容类型的列,例如 MAP<STRING, STRING>。 不会发生错误。

升级后 默认情况下,hive.metastore.disallow.incompatible.col.type.changes 为 true。 Hive 禁止更改为不兼容的列类型。 不阻止兼容的列类型更改,例如 INT、STRING、BIGINT。

所需操作 更改应用程序以禁止不兼容的列类型更改,从而防止可能出现的数据损坏。

删除分区

CASCADE 子句中用于删除分区的 OFFLINE 和 NO_DROP 关键字会导致性能问题,不再受支持。

升级前 可以在 CASCADE 子句中使用 OFFLINE 和 NO_DROP 关键字来防止读取或删除分区。

升级后 CASCADE 子句不支持 OFFLINE 和 NO_DROP。

所需操作 更改应用程序以从 CASCADE 子句中删除 OFFLINE 和NO_DROP。 使用授权方案(如 Ranger)来防止删除或读取分区。

重命名表 升级后 仅当表是在没有

LOCATION子句的情况下创建的,并且位于其数据库目录下时,重命名托管表才会移动其位置。

与 CBO 相关的限制

我们看到,select 输出在几个列中提供尾随零。 例如,如果表列的数据类型为 decimal(38,4),并且如果将数据插入为 38,则会添加尾随零,并提供结果 38.0000。按照 https://issues.apache.org/jira/browse/HIVE-12063 和 https://issues.apache.org/jira/browse/HIVE-24389,想法是保留小数位数和精度,而不是在十进制列中运行包装器。 这是 Hive 2 中的默认行为。 若要解决此问题,可以遵循以下选项。

- 修改源级别的数据类型,将精度调整为 col1(decimal(38,0))。 此值提供的结果为 38,不含尾随零。 但是,如果以 35.0005 的形式插入数据,则为 0.0005,并且仅提供值 38 1。删除有问题的列的尾随零,然后强制转换为字符串,

- 使用 select TRIM(cast(<column_name> AS STRING))+0 FROM <table_name>;

- 使用正则表达式。

- 修改源级别的数据类型,将精度调整为 col1(decimal(38,0))。 此值提供的结果为 38,不含尾随零。 但是,如果以 35.0005 的形式插入数据,则为 0.0005,并且仅提供值 38 1。删除有问题的列的尾随零,然后强制转换为字符串,

在查询中使用 UNIX_TIMESTAMP 时,Hive 查询失败,并显示“不支持的子查询表达式”。 例如,如果我们运行查询,则会引发错误“不支持的子查询表达式”

select * from (SELECT col_1 from table1 where col_2 >= unix_timestamp('2020-03-07','yyyy-MM-dd'));此问题的根本原因是,当前 Hive 代码库引发分析 UNIX_TIMESTAMP 的异常,因为

HiveTypeSystemImpl.java code中没有用于 Calcite 识别为BIGINT的UNIX_TIMESTAMP精度的精度映射。 但以下查询可以正常工作select * from (SELECT col_1 from table1 where col_2 >= 1);由于 col_2 是一个整数,因此此命令执行成功。 上述问题已在 hdi-3.1.2-4.1.12(4.1 堆栈)和 hdi-3.1.2-5.0.8(5.0 堆栈)中解决

升级步骤

1.准备数据

默认情况下,HDInsight 3.6 不支持 ACID 表。 但是,如果存在 ACID 表,则对它们运行“MAJOR”压缩。 有关压缩的详细信息,请参阅 Hive 语言手册。

属性 Value Bash 脚本 URI https://hdiconfigactions.blob.core.chinacloudapi.cn/linuxhivemigrationv01/hive-adl-expand-location-v01.sh节点类型 头 parameters

2.复制 SQL 数据库

如果群集使用默认的 Hive 元存储,请根据本指南将元数据导出到外部元存储。 然后,创建外部元存储的副本以进行升级。

3.升级元存储架构

此步骤使用 HDInsight 4.0 中的 Hive Schema Tool 来升级元存储架构。

警告

此步骤不可逆。 请仅在元存储的副本上运行此步骤。

创建临时 HDInsight 4.0 群集以访问 4.0 Hive

schematool。 可以在此步骤中使用默认的 Hive 元存储。在 HDInsight 4.0 群集中,执行

schematool以升级目标 HDInsight 3.6 元存储。 编辑以下 shell 脚本以添加 SQL 服务器名称、数据库名称、用户名和密码。 在头节点上打开 SSH 会话并运行它。SERVER='servername.database.chinacloudapi.cn' # replace with your SQL Server DATABASE='database' # replace with your 3.6 metastore SQL Database USERNAME='username' # replace with your 3.6 metastore username PASSWORD='password' # replace with your 3.6 metastore password STACK_VERSION=$(hdp-select status hive-server2 | awk '{ print $3; }') /usr/hdp/$STACK_VERSION/hive/bin/schematool -upgradeSchema -url "jdbc:sqlserver://$SERVER;databaseName=$DATABASE;trustServerCertificate=false;encrypt=true;hostNameInCertificate=*.database.chinacloudapi.cn;" -userName "$USERNAME" -passWord "$PASSWORD" -dbType "mssql" --verbose注意

此实用程序使用客户端

beeline在/usr/hdp/$STACK_VERSION/hive/scripts/metastore/upgrade/mssql/upgrade-*.mssql.sql中执行 SQL 脚本。这些脚本中的 SQL 语法不一定与其他客户端工具兼容。 例如,SSMS 和 Azure 门户上的查询编辑器都要求在每个命令后输入关键字

GO。如果有脚本因资源容量或事务超时而失败,则纵向扩展 SQL 数据库。

使用查询

select schema_version from dbo.version验证最终版本。输出应与 HDInsight 4.0 群集中以下 bash 命令的输出一致。

grep . /usr/hdp/$(hdp-select --version)/hive/scripts/metastore/upgrade/mssql/upgrade.order.mssql | tail -n1 | rev | cut -d'-' -f1 | rev删除临时的 HDInsight 4.0 群集。

4.部署新的 HDInsight 4.0 群集

创建新的 HDInsight 4.0 群集,选择升级后的 Hive 元存储和相同的存储帐户。

新群集不需要具有相同的默认文件系统。

如果元存储包含驻留在多个存储帐户中的表,则需要将这些存储帐户添加到新群集来访问这些表。 请参阅将额外存储帐户添加到 HDInsight。

如果 Hive 作业因存储无法访问而失败,请验证表位置是否在添加到群集的存储帐户中。

使用下面的 Hive 命令来标识表位置:

SHOW CREATE TABLE ([db_name.]table_name|view_name);

5.转换表以符合 ACID

HDInsight 4.0 上的托管表必须符合 ACID 标准。 在 HDInsight 4.0 上运行 strictmanagedmigration,以将所有非 ACID 托管表转换为具有属性 'external.table.purge'='true' 的外部表。 从头节点执行:

sudo su - hive

STACK_VERSION=$(hdp-select status hive-server2 | awk '{ print $3; }')

/usr/hdp/$STACK_VERSION/hive/bin/hive --config /etc/hive/conf --service strictmanagedmigration --hiveconf hive.strict.managed.tables=true -m automatic --modifyManagedTables

6. 未找到类,错误为 MultiDelimitSerDe

问题

在某些情况下,运行 Hive 查询时,你可能会收到 java.lang.ClassNotFoundException,它表示找不到 org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe 类。 当客户从 HDInsight 3.6 迁移到 HDInsight 4.0 时,会出现此错误。 SerDe 类 org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe(hive-contrib-1.2.1000.2.6.5.3033-1.jar 的一部分)在 HDInsight 3.6 中已被移除,我们使用的是 org.apache.hadoop.hive.serde2.MultiDelimitSerDe 类,它是 HDI-4.0 中 hive-exec jar 的一部分。 当我们启动服务时,hive-exec jar 将默认加载到 HS2。

故障排除步骤

- 检查文件夹下是否有 JAR 包含该类(JAR 很可能应该在 Hive 库文件夹下,后者在 HDInsight 中是

/usr/hdp/current/hive/lib)。 - 检查解决方案中提到的

org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe和org.apache.hadoop.hive.serde2.MultiDelimitSerDe类。

解决方案

尽管 JAR 文件是一个二进制文件,你仍可按如下方式结合使用

grep命令和-Hrni开关来搜索特定类名grep -Hrni "org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe" /usr/hdp/current/hive/lib如果找不到此类,则不会返回任何输出。 如果它在 JAR 文件中找到此类,则会返回输出

以下是取自 HDInsight 4.x 集群的示例

sshuser@hn0-alters:~$ grep -Hrni "org.apache.hadoop.hive.serde2.MultiDelimitSerDe" /usr/hdp/4.1.9.7/hive/lib/ Binary file /usr/hdp/4.1.9.7/hive/lib/hive-exec-3.1.0.4.1-SNAPSHOT.jar matches从上面的输出中,我们可以确认没有 jar 包含

org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe类,且 hive-exec jar 包含org.apache.hadoop.hive.serde2.MultiDelimitSerDe。尝试创建行格式 DerDe 为

ROW FORMAT SERDE org.apache.hadoop.hive.serde2.MultiDelimitSerDe的表此命令将解决此问题。 如果已创建表,可使用以下命令对其进行重命名

Hive => ALTER TABLE TABLE_NAME SET SERDE 'org.apache.hadoop.hive.serde2.MultiDelimitSerDe' Backend DB => UPDATE SERDES SET SLIB='org.apache.hadoop.hive.serde2.MultiDelimitSerDe' where SLIB='org.apache.hadoop.hive.contrib.serde2.MultiDelimitSerDe';

update 命令是在后端 DB 中手动更新详细信息,alter 命令用于在 beeline 或 Hive 中使用新的 SerDe 类来修改表。

Hive 后端数据库架构比较脚本

可以在完成迁移后运行以下脚本。

后端数据库中可能缺少少量列,从而导致查询失败。 如果架构升级未正确进行,则我们可能会遇到“列名无效”问题。 以下脚本从客户后端数据库提取列名称和数据类型,并在有任何列缺失或数据类型不正确时提供输出。

以下路径包含 schemacompare_final.py 和 test.csv 文件。 脚本存在于“schemacompare_final.py”文件中,文件“test.csv”包含所有表的所有列名称和数据类型,这些表应存在于 Hive 后端数据库中。

https://hdiconfigactions2.blob.core.chinacloudapi.cn/hiveschemacompare/schemacompare_final.py

https://hdiconfigactions2.blob.core.chinacloudapi.cn/hiveschemacompare/test.csv

请从链接下载这两个文件。 将这些文件复制到运行 Hive 服务的头节点之一。

执行脚本的步骤:

在“/tmp”目录下创建名为“schemacompare”的目录。

将“schemacompare_final.py”和“test.csv”放入文件夹“/tmp/schemacompare”。 执行“ls -ltrh /tmp/schemacompare/”并验证文件是否存在。

若要执行 Python 脚本,请使用命令“python schemacompare_final.py”。 此脚本开始执行脚本,只需不到 5 分钟即可完成。 上述脚本会自动连接到后端数据库,并从每个表提取详细信息,Hive 会使用这些表并更新名为“return.csv”的新 csv 文件中的详细信息。 创建文件 return.csv 后,它会将数据与文件“test.csv”进行比较,并在表名下缺少任何内容时打印列名或数据类型。

执行脚本后,可以看到以下行,指示已提取表的详细信息,并且脚本正在进行中

KEY_CONSTRAINTS

Details Fetched

DELEGATION_TOKENS

Details Fetched

WRITE_SET

Details Fetched

SERDES

Details Fetched

你可以在“DIFFERENCE DETAILS:”行下查看差异详细信息。 如果有任何差异,则输出

PART_COL_STATS;

('difference', ['BIT_VECTOR', 'varbinary'])

The line with semicolon PART_COL_STATS; is the table name. And under the table name you can find the differences as ('difference', ['BIT_VECTOR', 'varbinary']) if there are any difference in column or datatype.

如果表中没有差异,则输出为

BUCKETING_COLS;

('difference', [])

PARTITIONS;

('difference', [])

在此输出中,可以找到缺少或错误的列名。 可以在后端数据库中运行以下查询,以验证列是否缺失一次。

SELECT * FROM INFORMATION_SCHEMA.columns WHERE TABLE_NAME = 'PART_COL_STATS';

如果表中缺少任何列,例如,如果我们运行 insert 或 insert overwrite 等查询,则将自动计算统计信息,并尝试更新统计信息表,例如 PART_COL_STATS 和 TAB_COL_STATS。 如果表中缺少“BIT_VECTOR”等列,则会失败并出现“列名无效”错误。 可以添加列,如以下命令中所述。 若要解决该问题,可以通过设置以下属性来禁用统计信息,这些属性无法更新后端数据库中的统计信息。

hive.stats.autogather=false;

hive.stats.column.autogather=false;

To Fix this issue, run the following two queries on backend SQL server (Hive metastore DB):

ALTER TABLE PART_COL_STATS ADD BIT_VECTOR VARBINARY(MAX);

ALTER TABLE TAB_COL_STATS ADD BIT_VECTOR VARBINARY(MAX);

此步骤可避免查询失败,在迁移后,查询会失败并出现错误“列名无效”。

跨 HDInsight 版本保护 Hive

HDInsight 可以选择性地使用 HDInsight 企业安全性套餐 (ESP) 来与 Microsoft Entra ID 集成。 ESP 使用 Kerberos 和 Apache Ranger 来管理群集中特定资源的权限。 可使用以下步骤,将针对 HDInsight 3.6 中的 Hive 部署的 Ranger 策略迁移到 HDInsight 4.0:

- 在 HDInsight 3.6 群集中导航到 Ranger 服务管理器面板。

- 导航到名为 HIVE 的策略,并将该策略导出到某个 JSON 文件。

- 确保导出的策略 JSON 中引用的所有用户都存在于新群集中。 如果该策略 JSON 中引用的某个用户不存在于新群集中,请将该用户添加到新群集,或者从策略中删除引用。

- 在 HDInsight 4.0 群集中导航到“Ranger 服务管理器”面板。

- 导航到名为 HIVE 的策略,并导入步骤 2 中导出的 Ranger 策略 JSON。

HDInsight 4.0 中可能需要更改应用程序的 Hive 变动

请参阅使用 Hive Warehouse Connector 进行其他配置,以在 Spark 和 Hive 之间共享 ACID 表的元存储。

HDInsight 4.0 使用基于存储的授权。 如果修改文件权限或以不同于 Hive 的用户身份创建文件夹,可能会遇到基于存储权限的 Hive 错误。 若要修复此错误,请向用户授予

rw-访问权限。 请参阅 HDFS 权限指南。HiveCLI替换为Beeline。

请参阅 HDInsight 4.0 公告,了解其他更改。

迁移后

请确保在完成迁移后执行以下步骤。

表完整性

- 使用 CTAS 或 IOW 在 Hive 3.1 中重新创建表以更改表类型,而不是更改表属性。

- 将 doAs 保留为 false。

- 确保托管表/数据所有权属于“hive”用户。

- 如果表格式为 ORC,则使用托管 ACID 表;对于非 ORC 类型,则使用托管非 ACID。

- 在重新创建的表上重新生成统计信息,因为迁移会导致不正确的统计信息。

群集运行状况

如果多个群集共享相同的存储和 HMS DB,则应仅在一个群集中启用自动压缩/压缩线程,并在其他任何位置禁用。

优化元存储以减少其 CPU 使用率。

禁用事务性事件侦听器。

注意

仅当未使用 Hive 复制功能时,才执行以下步骤。

- 在 Ambari UI 中,删除 hive.metastore.transactional.event.elisteners 的值。

- 默认值:

org.apache.hive.hcatalog.listener.DbNotificationListener - 新值:

<Empty>

禁用 Hive PrivilegeSynchronizer

- 在 Ambari UI 中,将 hive.privilege.synchronizer 设置为 false。

- 默认值:

true - 新值:

false

优化分区修复功能

- 禁用分区修复 - 此功能用于将存储位置中 Hive 表的分区与 Hive 元存储同步。 如果在数据引入后使用了“msck repair”,则可以禁用此功能。

- 若要禁用该功能,请使用 ALTER TABLE 在表属性下添加“discover.partitions=false”。 或者(如果无法禁用该功能)

- 提高分区修复频率。

在 Ambari UI 中,增大“metastore.partition.management.task.frequency”的值(以秒为单位)。

注意

此更改可能会延迟某些引入存储的分区的可见性。

- 默认值:

60 - 建议的值:

3600

- 默认值:

高级优化 在应用到生产环境之前,需要在较低(非生产)环境中测试以下选项。

- 如果未使用具体化视图,请删除与具体化视图相关的侦听器。

- 在 Ambari UI 中,添加自定义属性(在自定义 hive-site.xml 中),并删除不需要的后台元存储线程。

- 属性名称:metastore.task.threads.remote

- 默认值:

N/A (it uses few class names internally) - 新值:

org.apache.hadoop.hive.metastore.txn.AcidHouseKeeperService,org.apache.hadoop.hive.metastore.txn.AcidOpenTxnsCounterService,org.apache.hadoop.hive.metastore.txn.AcidCompactionHistoryService,org.apache.hadoop.hive.metastore.txn.AcidWriteSetService,org.apache.hadoop.hive.metastore.PartitionManagementTask

如果禁用复制,则禁用后台线程。

- 在 Ambari UI 中,添加自定义属性(在自定义 hive-site.xml 中),并删除不需要的线程。

- 属性名称:metastore.task.threads.always

- 默认值:

N/A (it uses few class names internally) - 新值:

org.apache.hadoop.hive.metastore.RuntimeStatsCleanerTask

查询优化

- 保留 Hive 的默认配置,以便在查询针对 TPC-DS 工作负载进行优化时运行查询。 仅当查询失败或运行缓慢时,才需要查询级别优化。

- 确保统计信息是最新的,以避免错误的计划或错误的结果。

- 避免在联接类型的查询中混合使用外部和托管 ACID 表。 在这种情况下,请尝试通过重新创建将外部表转换为托管的非 ACID 表。

- 在 Hive-3 中,在可能有产品 bug 的矢量化、CBO、带区域的时间戳等方面进行了大量工作。 因此,如果任何查询给出错误的结果,请尝试禁用矢量化、CBO、映射联接等,看看这是否有帮助。

迁移后要遵循的其他步骤,可解决结果不正确和性能不佳的问题

问题 Hive 查询提供不正确的结果。 即使 select count(*) 查询也提供不正确的结果。

原因 默认情况下,属性“hive.compute.query.using.stats”设置为 true。 如果将其设置为 true,则它使用存储在元存储中的统计信息来执行查询。 如果统计信息不是最新的,则会导致不正确的结果。

解决方法 在表级别和列级别使用

alter table <table_name> compute statics;命令收集托管表的统计信息。 参考链接 - https://cwiki.apache.org/confluence/display/hive/statsdev#StatsDev-TableandPartitionStatistics问题 执行 Hive 查询需要很长时间。

原因 如果查询具有联接条件,则 Hive 会根据表大小和联接条件创建是使用映射联接还是合并联接的计划。 如果其中一个表包含较小的大小,则它将该表加载到内存中并执行联接操作。 这样,与合并联接相比,查询执行速度更快。

解决方法 确保设置属性“hive.auto.convert.join=true”,这是默认值。 将其设置为 false 会使用合并联接,并可能导致性能不佳。 Hive 根据群集中设置的以下属性决定是否使用映射联接

set hive.auto.convert.join=true; set hive.auto.convert.join.noconditionaltask=true; set hive.auto.convert.join.noconditionaltask.size=<value>; set hive.mapjoin.smalltable.filesize = <value>;如果小型表的估计大小小于 Hive,则当

hive.auto.convert.join.noconditionaltask=true时,通用联接可以自动转换为映射联接。auto.convert.join.noconditionaltask.size(默认值为 10000000 MB)。如果将属性

hive.auto.convert.join设置为 true 时遇到与 OOM 相关的任何问题,则建议仅在会话级别而不是群集级别将该特定查询的属性设置为 false。 如果统计信息错误,并且 Hive 决定根据统计信息使用映射联接,则可能会出现此问题。

问题 如果查询具有联接条件,并且所涉及的表具有 null 或空值,则 Hive 查询会提供不正确的结果。

原因 有时,如果查询中涉及的表具有大量 null 值,则可能会出现与 null 值相关的问题。 Hive 错误地执行查询优化,涉及的 null 值导致不正确的结果。

解决方法 如果收到任何不正确的结果,则建议尝试在会话级别设置属性

set hive.cbo.returnpath.hiveop=true。 此配置引入了对联接键的非 null 筛选。 如果表具有许多 null 值,为了优化多个表之间的联接操作,我们可以启用此配置,以便它只考虑非 null 值。问题 如果查询具有多个联接条件,Hive 查询会提供不正确的结果。

原因 有时,每当映射联接多次存在相同的联接时,Tez 会生成错误的运行时计划。

解决方法 当将

hive.merge.nway.joins设置为 false 时,可能得到不正确的结果。 尝试仅针对受影响的查询将其设置为 true。 这有助于查询同一条件下的多个联接,将联接合并到单个联接运算符中。 如果使用大型随机联接以避免重排阶段,此方法非常有用。问题 与之前的运行相比,查询执行时间逐日增加。

原因 如果小文件的数量增加,则可能会出现此问题。 因此,Hive 需要一段时间来读取所有文件并处理数据,从而导致执行时间增加。

解决方法 请确保经常为托管表运行压缩。 此步骤可避免使用小文件并提高性能。

问题 当客户对托管的 acid orc 表和托管的非 ACID orc 表使用联接条件时,Hive 查询会提供不正确的结果。

原因 从 HIVE 3 开始,严格要求将所有托管表保留为一个 acid 表。 如果想将其保留为一个 acid 表,则表格式必须是 orc,这是主要标准。 但是,如果将严格托管表属性“hive.strict.managed.tables”禁用为 false,则可以创建托管的非 ACID 表。 在某些情况下,客户创建外部 ORC 表,或者在迁移后将表转换为外部表,禁用严格的托管表属性并将其转换为托管表。 此时,该表已转换为非 ACID 托管 orc 格式。

解决方法 如果将表与非 ACID 托管 ORC 表或者 acid 托管 orc 表联接,Hive 优化将出错。

如果要将外部表转换为托管表,

- 请勿将属性“hive.strict.managed.tables”设置为 false。 如果设置,则可以创建非 ACID 托管表,但在 HIVE-3 中不会请求它

- 使用以下 alter 命令(而不是

alter table <table_name> set TBLPROPERTIES ('EXTERNAL'='false');)将外部表转换为托管表

alter table rt set TBLPROPERTIES ('EXTERNAL'='false', 'transactional'='true');

故障排除指南

适用于 Hive 工作负载的 HDInsight 3.6 到 4.0 故障排除指南提供了将 Hive 工作负载从 HDInsight 3.6 迁移到 HDInsight 4.0 时所遇到的常见问题的解答。