Applies to: Azure CLI ml extension v2 (current), Python SDK azure-ai-ml v2 (current)

Learn how to use NVIDIA Triton Inference Server in Azure Machine Learning with online endpoints.

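Triton is multi-framework, open-source software that is optimized for inference. It supports popular machine learning frameworks such as TensorFlow, ONNX Runtime, PyTorch, and NVIDIA TensorRT. It can be used for CPU or GPU workloads.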

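There are two main approaches you can take to use Triton models when deploying them to an online endpoint: no-code deployment or full-code (bring your own container) deployment.

- No-code deployment for Triton models is a simple way to deploy them, because you only need to bring the Triton models to deploy.

- Full-code deployment (bring your own container) for Triton models is a more advanced way to deploy them, because you have full control over customizing the configurations available for Triton Inference Server.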

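For both options, Triton Inference Server performs inference based on the Triton model as defined by NVIDIA. For instance, ensemble models can be used for more advanced scenarios.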

Triton is supported in both managed online endpoints and Kubernetes online endpoints.

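In this article, you learn how to deploy a model to a managed online endpoint by using no-code deployment for Triton. Information is provided on using the CLI (command line), the Python SDK v2, and Azure Machine Learning studio. If you want to customize further by using Triton Inference Server's configuration directly, see Use a custom container to deploy a model and the BYOC example for Triton (deployment definition and end-to-end script).

Prerequisites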

pip install numpy

pip install tritonclient[http]

pip install pillow

pip install gevent

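Access to NCv3-series VMs for your Azure subscription.

Important

You might need to request a quota increase for your subscription before you can use this series of VMs. For more information, see NCv3 series.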

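NVIDIA Triton Inference Server requires a specific model repository structure, with a directory for each model and subdirectories for each model version. The contents of each model version subdirectory are determined by the type of the model and the requirements of the backend that supports it. To see all the model repository structures, see https://github.com/triton-inference-server/server/blob/main/docs/user_guide/model_repository.md#model-files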

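The information in this document is based on using a model stored in ONNX format, so the directory structure of the model repository is <model-repository>/<model-name>/1/model.onnx. Specifically, this model performs image identification.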
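Before you register or deploy, you might want to check a local repository against the <model-repository>/<model-name>/1/model.onnx layout described above. The following is a minimal sketch. It assumes one ONNX file per version folder. The helper name find_onnx_models is hypothetical and isn't part of Triton or the Azure Machine Learning tooling.

```python
from pathlib import Path
from typing import Dict, List


def find_onnx_models(repository: str) -> Dict[str, List[str]]:
    """Map each model directory to its version folders that contain model.onnx.

    Assumes the <model-repository>/<model-name>/<version>/model.onnx layout.
    """
    found: Dict[str, List[str]] = {}
    for model_dir in sorted(Path(repository).iterdir()):
        if not model_dir.is_dir():
            continue
        versions = [
            version_dir.name
            for version_dir in sorted(model_dir.iterdir())
            # Triton version folders are numeric, for example "1"
            if version_dir.is_dir()
            and version_dir.name.isdigit()
            and (version_dir / "model.onnx").is_file()
        ]
        if versions:
            found[model_dir.name] = versions
    return found
```

Model directories with no numeric version folder that holds a model.onnx file are left out of the result. A missing entry therefore points to a layout problem.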

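The information in this article is based on code samples contained in the azureml-examples repository. To run the commands locally without having to copy/paste YAML and other files, clone the repo, and then change directories to the cli directory in the repo: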

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples

cd cli

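If you haven't already set the defaults for the Azure CLI, save your default settings. To avoid passing in the values for your subscription, workspace, and resource group multiple times, use the following commands. Replace the following parameters with values for your specific configuration:

- Replace <subscription> with your Azure subscription ID.

- Replace <workspace> with your Azure Machine Learning workspace name.

- Replace <resource-group> with the Azure resource group that contains your workspace.

- Replace <location> with the Azure region that contains your workspace.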

Tip

You can see your current defaults by using the az configure -l command.

az account set --subscription <subscription>

az configure --defaults workspace=<workspace> group=<resource-group> location=<location>

Applies to: Python SDK azure-ai-ml v2 (current)

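An Azure Machine Learning workspace. For steps to create a workspace, see Create the workspace.

The Azure Machine Learning SDK for Python v2. To install the SDK, use the following command: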

pip install azure-ai-ml azure-identity

To update an existing installation of the SDK to the latest version, use the following command:

pip install --upgrade azure-ai-ml azure-identity

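For more information, see Azure Machine Learning Package client library for Python.

A working Python 3.8 (or later) environment.

You must install additional Python packages for scoring. You can install them with the following commands. They include:

- NumPy - An array and numerical computing library

- Triton Inference Server Client - Facilitates requests to the Triton Inference Server

- Pillow - A library for image operations

- Gevent - A networking library used when connecting to the Triton server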

pip install numpy

pip install tritonclient[http]

pip install pillow

pip install gevent
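Before you run the scoring code, you can confirm two things: the interpreter meets the Python 3.8 requirement, and the packages above can be imported. The following minimal sketch uses only the standard library. Pillow is imported as PIL, and only the top-level tritonclient package is checked, not its [http] extra. The helper name missing_scoring_packages is hypothetical.

```python
import importlib.util
import sys
from typing import List

# pip package name -> top-level module name used in `import` statements
SCORING_MODULES = {
    "numpy": "numpy",
    "tritonclient[http]": "tritonclient",
    "pillow": "PIL",
    "gevent": "gevent",
}


def missing_scoring_packages() -> List[str]:
    """Return the pip names of scoring packages whose modules can't be found."""
    if sys.version_info < (3, 8):
        raise RuntimeError("Python 3.8 or later is required")
    return [
        pip_name
        for pip_name, module_name in SCORING_MODULES.items()
        if importlib.util.find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_scoring_packages()
    if missing:
        print("Install with: pip install " + " ".join(missing))
    else:
        print("All scoring packages are installed.")
```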

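Access to NCv3-series VMs for your Azure subscription.

Important

You might need to request a quota increase for your subscription before you can use this series of VMs. For more information, see NCv3 series.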

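The information in this article is based on the online-endpoints-triton.ipynb notebook contained in the azureml-examples repository. To run the commands locally without having to copy/paste files, clone the repo, and then change directories to the sdk/python/endpoints/online/triton/single-model/ directory in the repo: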

git clone https://github.com/Azure/azureml-examples --depth 1

cd azureml-examples/sdk/python/endpoints/online/triton/single-model/

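Define the deployment configuration

Applies to: Azure CLI ml extension v2 (current)

This section shows how you can deploy to a managed online endpoint by using the Azure CLI with the Machine Learning extension (v2).

To avoid typing in a path for multiple commands, use the following command to set a BASE_PATH environment variable. This variable points to the directory where the model and associated YAML configuration files are located: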

BASE_PATH=endpoints/online/triton/single-model

Use the following command to set the name of the endpoint that will be created. In this example, a random name is created for the endpoint:

export ENDPOINT_NAME=triton-single-endpt-`echo $RANDOM`

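Create a YAML configuration file for your endpoint. The following example configures the name and authentication mode of the endpoint. The file used in the following commands is located at /cli/endpoints/online/triton/single-model/create-managed-endpoint.yaml in the azureml-examples repo you cloned earlier: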

create-managed-endpoint.yaml

```yaml
$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineEndpoint.schema.json
name: my-endpoint
auth_mode: aml_token
```

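Create a YAML configuration file for your deployment. The following example configures a deployment named blue to the endpoint defined in the previous step. The file used in the following commands is located at /cli/endpoints/online/triton/single-model/create-managed-deployment.yaml in the azureml-examples repo you cloned earlier:

Important

For Triton no-code deployment (NCD) to work, you must set the model type to triton_model: type: triton_model. For more information, see CLI (v2) model YAML schema.

This deployment uses a Standard_NC6s_v3 VM. You might need to request a quota increase for your subscription before you can use this VM. For more information, see NCv3 series.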

```yaml
$schema: https://azuremlschemas.azureedge.net/latest/managedOnlineDeployment.schema.json
name: blue
endpoint_name: my-endpoint
model:
  name: sample-densenet-onnx-model
  version: 1
  path: ./models
  type: triton_model
instance_count: 1
instance_type: Standard_NC6s_v3
```

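Applies to: Python SDK azure-ai-ml v2 (current)

This section shows how you can define a Triton deployment to deploy to a managed online endpoint by using the Azure Machine Learning Python SDK (v2).

To connect to a workspace, you need identifier parameters: a subscription ID, a resource group, and a workspace name.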

```python
subscription_id = "<SUBSCRIPTION_ID>"
resource_group = "<RESOURCE_GROUP>"
workspace_name = "<AML_WORKSPACE_NAME>"
```

Use the following code to set the name of the endpoint that will be created. In this example, a random name is created for the endpoint:

```python
import random

endpoint_name = f"endpoint-{random.randint(0, 10000)}"
```
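The random suffix helps keep the name unique within the Azure region. If you build names another way, a local check can catch an invalid name before the service rejects it. The following sketch assumes the naming rules for Azure Machine Learning online endpoints: 3 to 32 characters, beginning with a letter, containing only letters, digits, and hyphens, and ending with a letter or digit. Confirm these rules against the current documentation. Both helpers are hypothetical.

```python
import random
import re

# Assumed naming rule: a letter, then letters/digits/hyphens, ending with a
# letter or digit, 3-32 characters in total.
ENDPOINT_NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9-]{1,30}[A-Za-z0-9]")


def is_valid_endpoint_name(name: str) -> bool:
    return ENDPOINT_NAME_PATTERN.fullmatch(name) is not None


def random_endpoint_name(prefix: str = "endpoint") -> str:
    # Same idea as the example above: a fixed prefix plus a random number
    name = f"{prefix}-{random.randint(0, 10000)}"
    if not is_valid_endpoint_name(name):
        raise ValueError(f"Invalid endpoint name: {name!r}")
    return name
```

For example, is_valid_endpoint_name("endpoint-42") returns True, and is_valid_endpoint_name("endpoint-") returns False because of the trailing hyphen.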

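You use these details with MLClient from azure.ai.ml to get a handle to the required Azure Machine Learning workspace. See this configuration notebook for more details on how to configure credentials and connect to a workspace.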

```python
from azure.ai.ml import MLClient
from azure.identity import DefaultAzureCredential

ml_client = MLClient(
    DefaultAzureCredential(),
    subscription_id,
    resource_group,
    workspace_name,
)
```

Create a ManagedOnlineEndpoint object to configure the endpoint. The following example configures the name and authentication mode of the endpoint.

```python
from azure.ai.ml.entities import ManagedOnlineEndpoint

endpoint = ManagedOnlineEndpoint(name=endpoint_name, auth_mode="key")
```

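Create a ManagedOnlineDeployment object to configure the deployment. The following example configures a deployment named blue to the endpoint defined in the previous step and defines a local model inline.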

```python
from azure.ai.ml.entities import ManagedOnlineDeployment, Model

model_name = "densenet-onnx-model"
model_version = 1

deployment = ManagedOnlineDeployment(
    name="blue",
    endpoint_name=endpoint_name,
    model=Model(
        name=model_name,
        version=model_version,
        path="./models",
        type="triton_model",
    ),
    instance_type="Standard_NC6s_v3",
    instance_count=1,
)
```

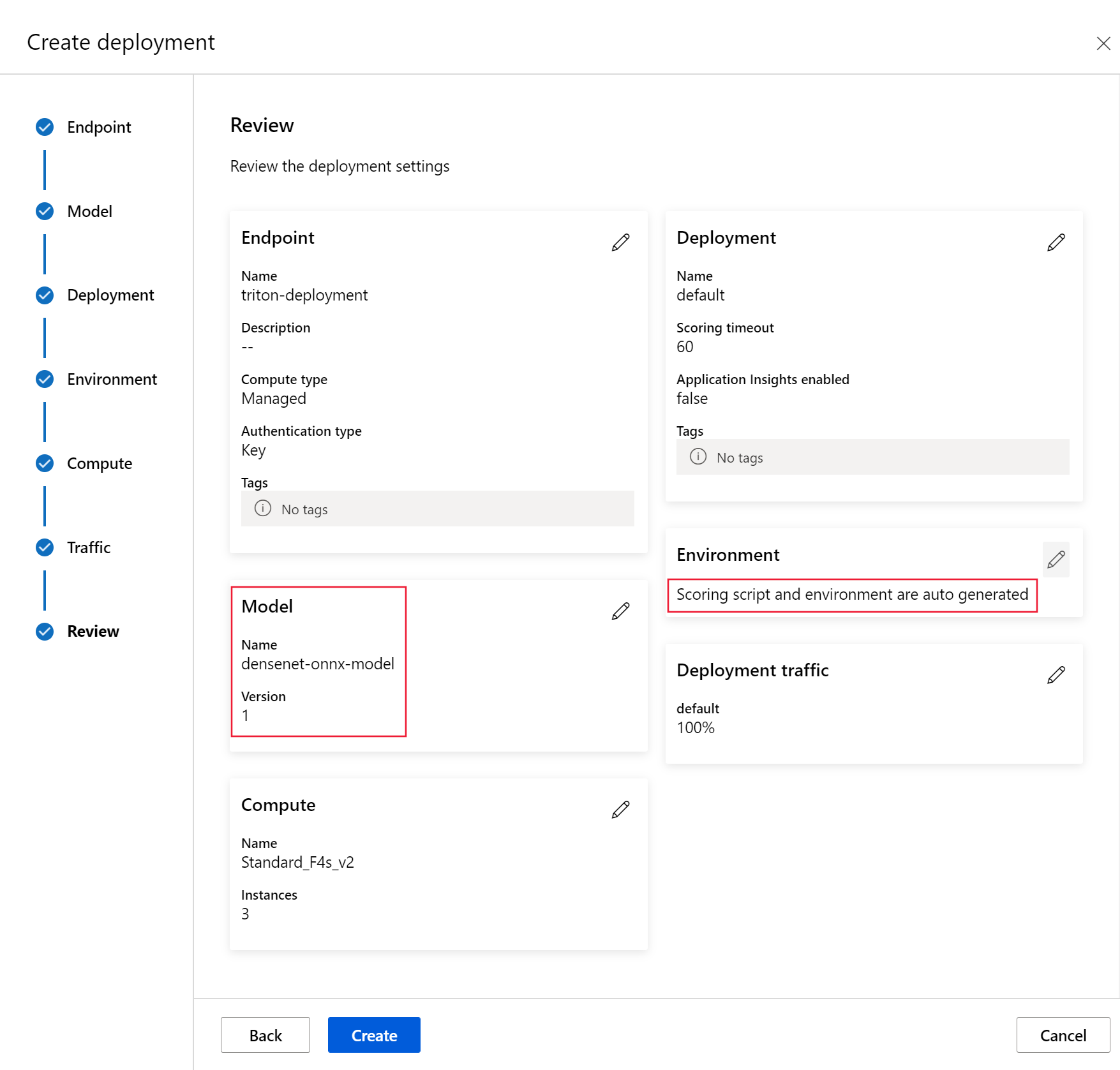

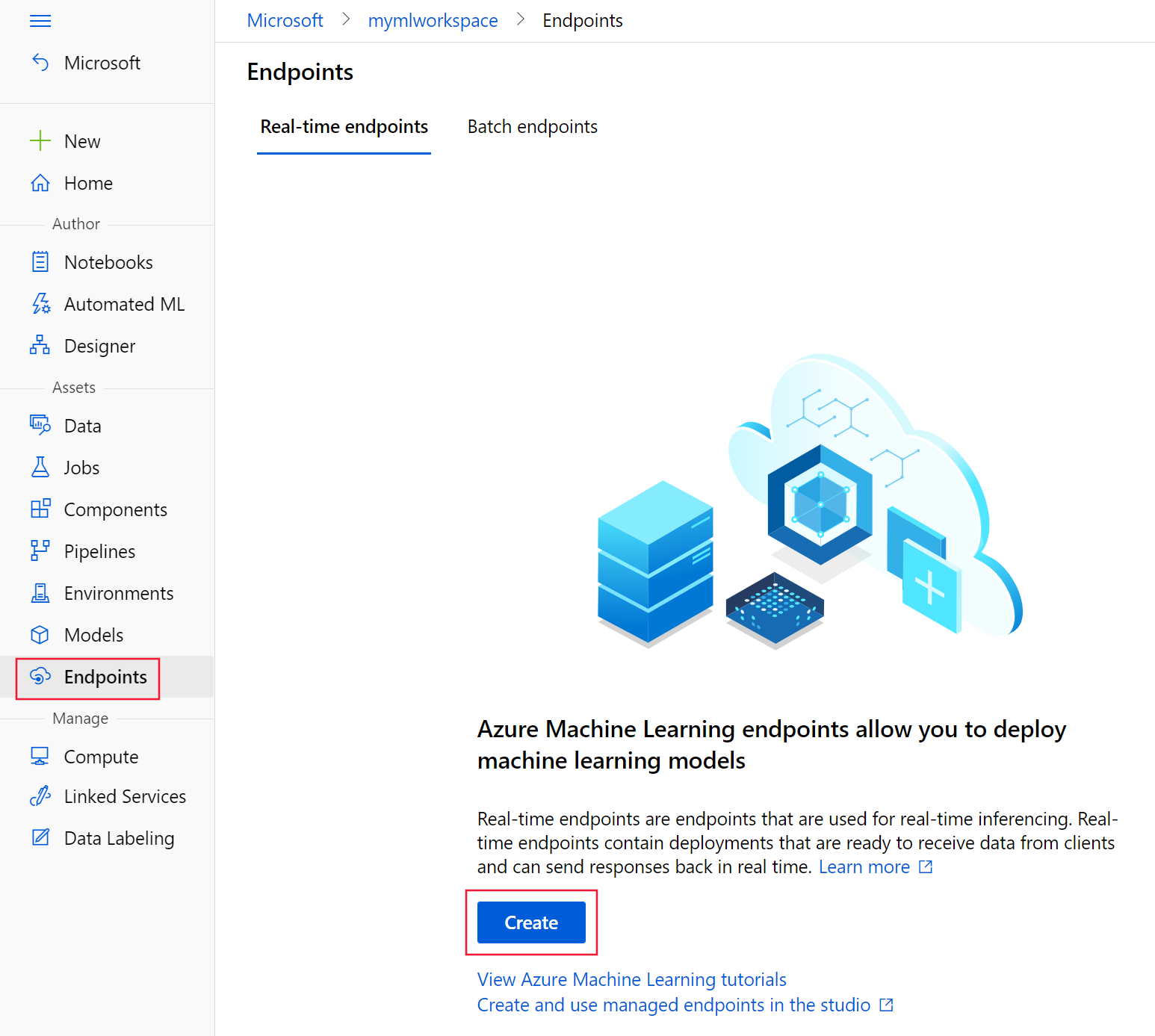

This section shows how you can define a Triton deployment on a managed online endpoint by using Azure Machine Learning studio.

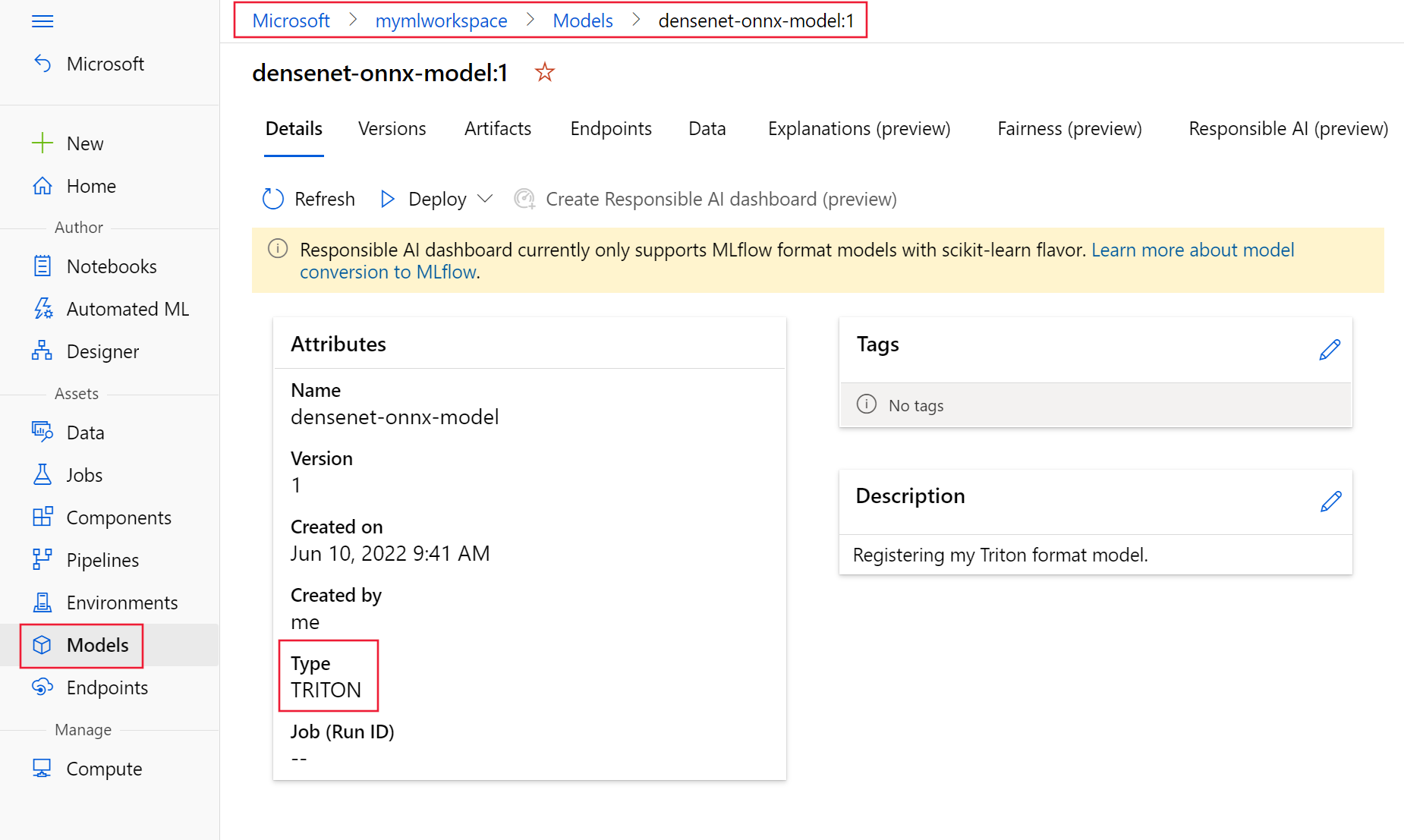

Register your model in Triton format by using the following YAML and CLI command. The YAML uses a densenet-onnx model from https://github.com/Azure/azureml-examples/tree/main/cli/endpoints/online/triton/single-model.

create-triton-model.yaml

```yaml
name: densenet-onnx-model
version: 1
path: ./models
type: triton_model
description: Registering my Triton format model.
```

az ml model create -f create-triton-model.yaml

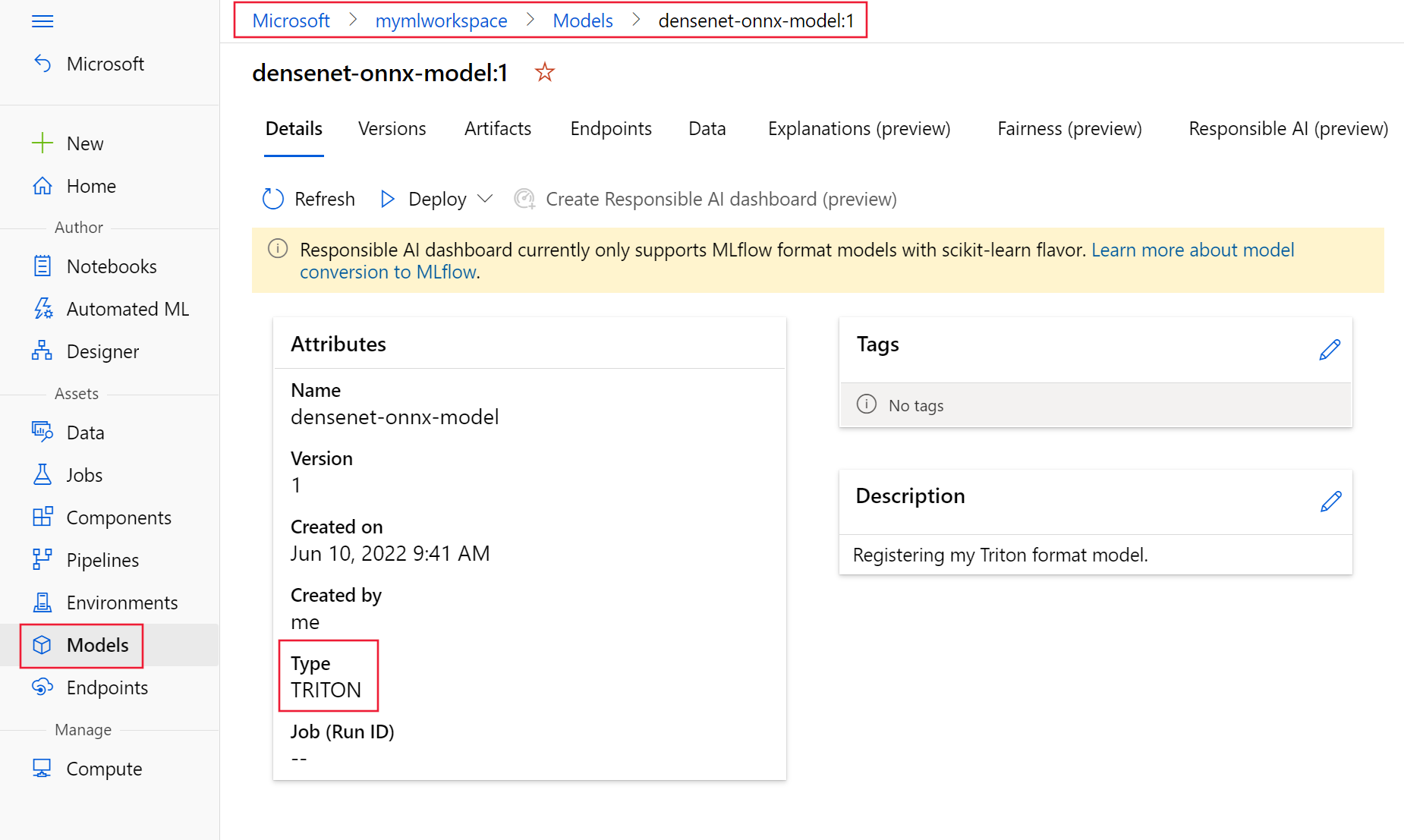

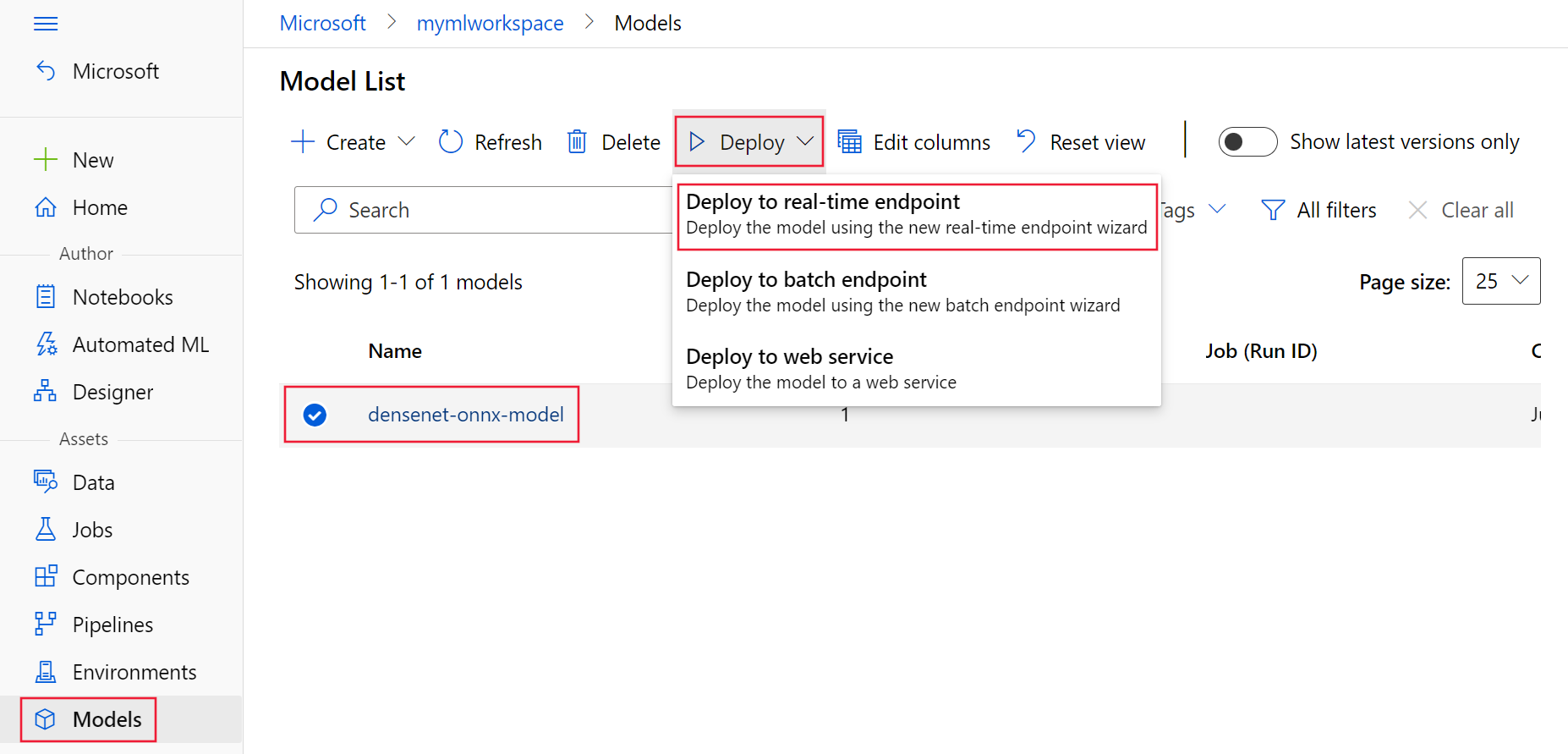

The following screenshot shows how your registered model looks on the Models page of Azure Machine Learning studio.

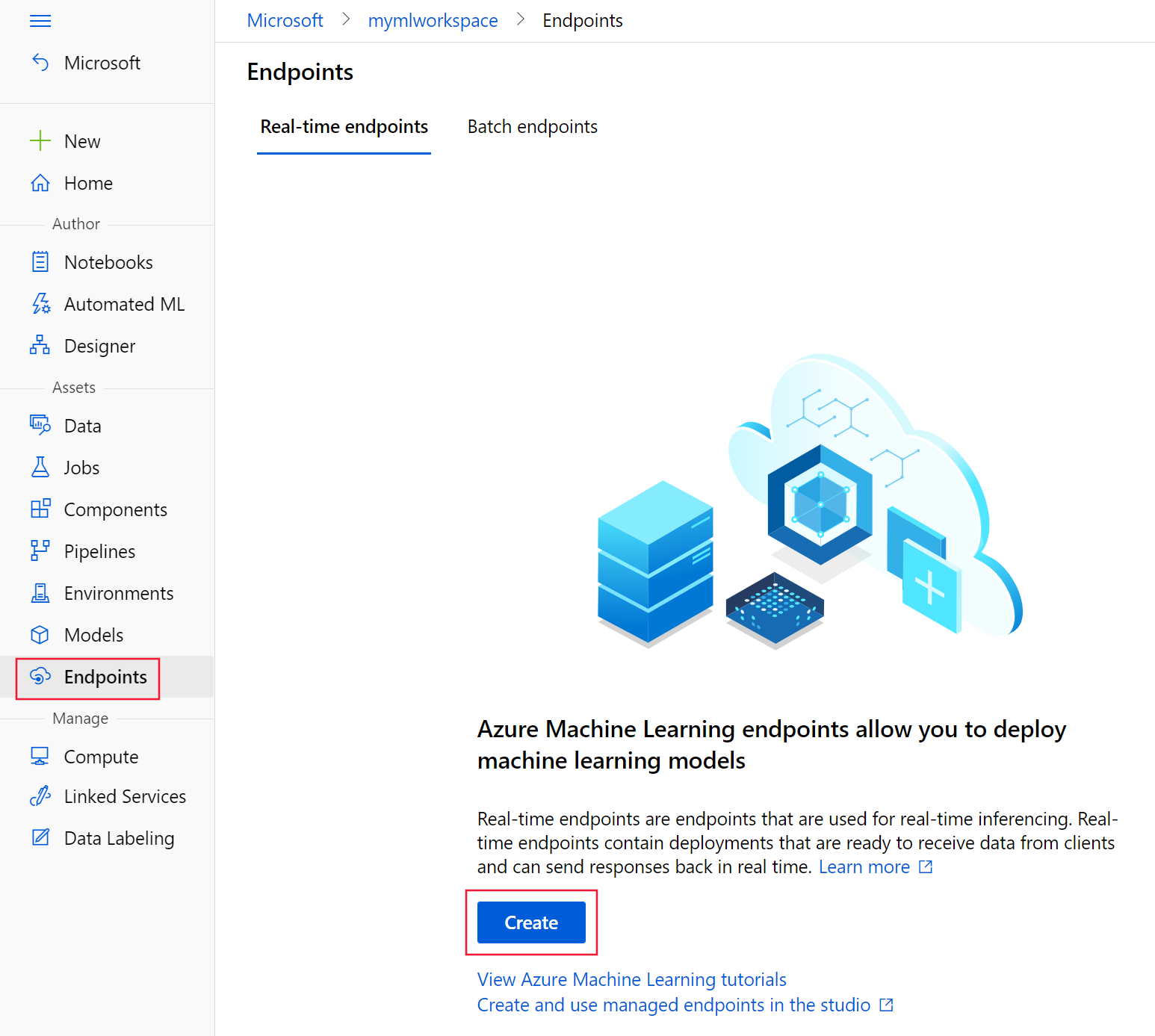

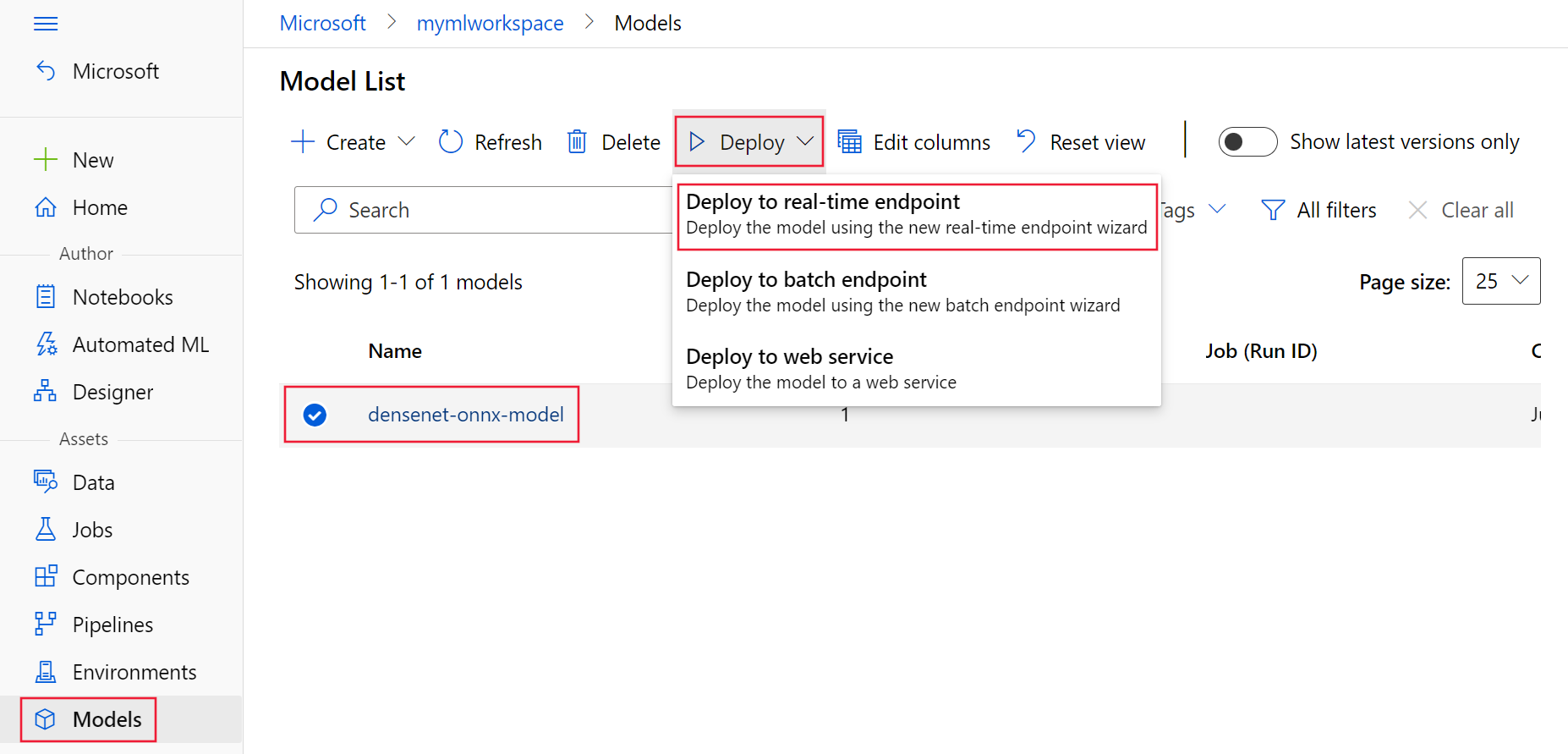

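From studio, select your workspace, and then use either the Endpoints or the Models page to create the endpoint deployment:

From the Endpoints page, select + Create.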

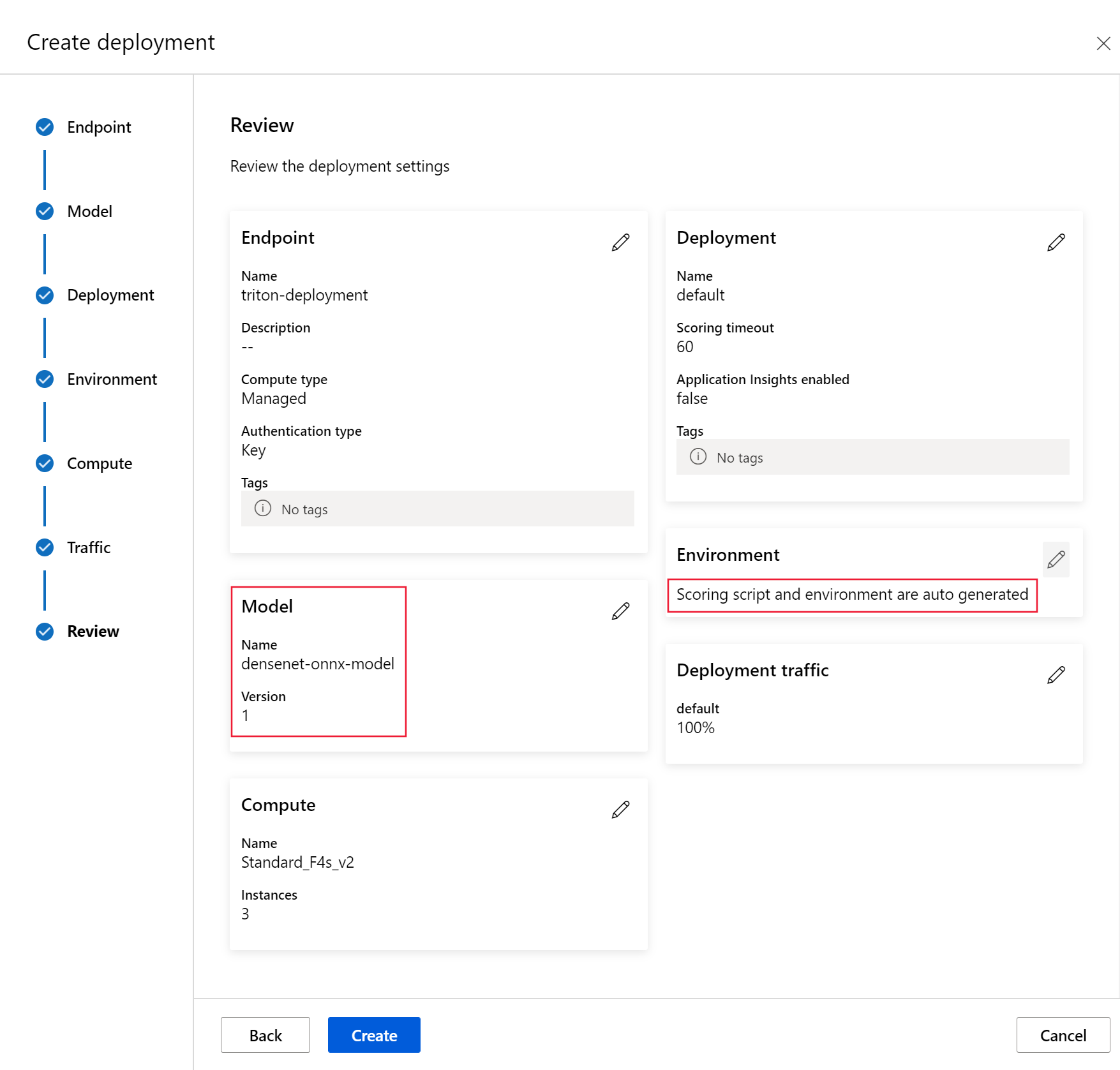

Provide a name and authentication type for the endpoint, and then select Next.

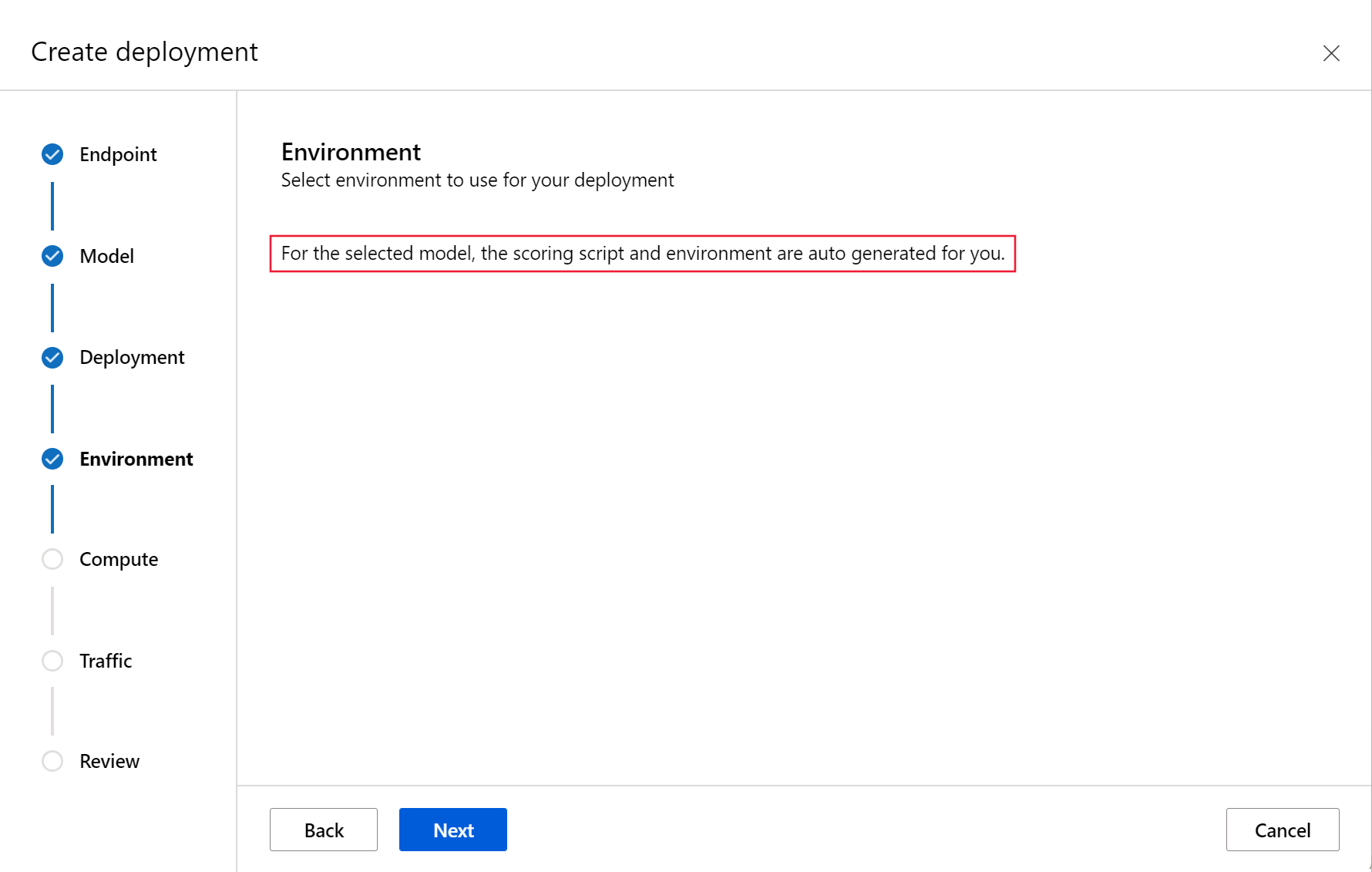

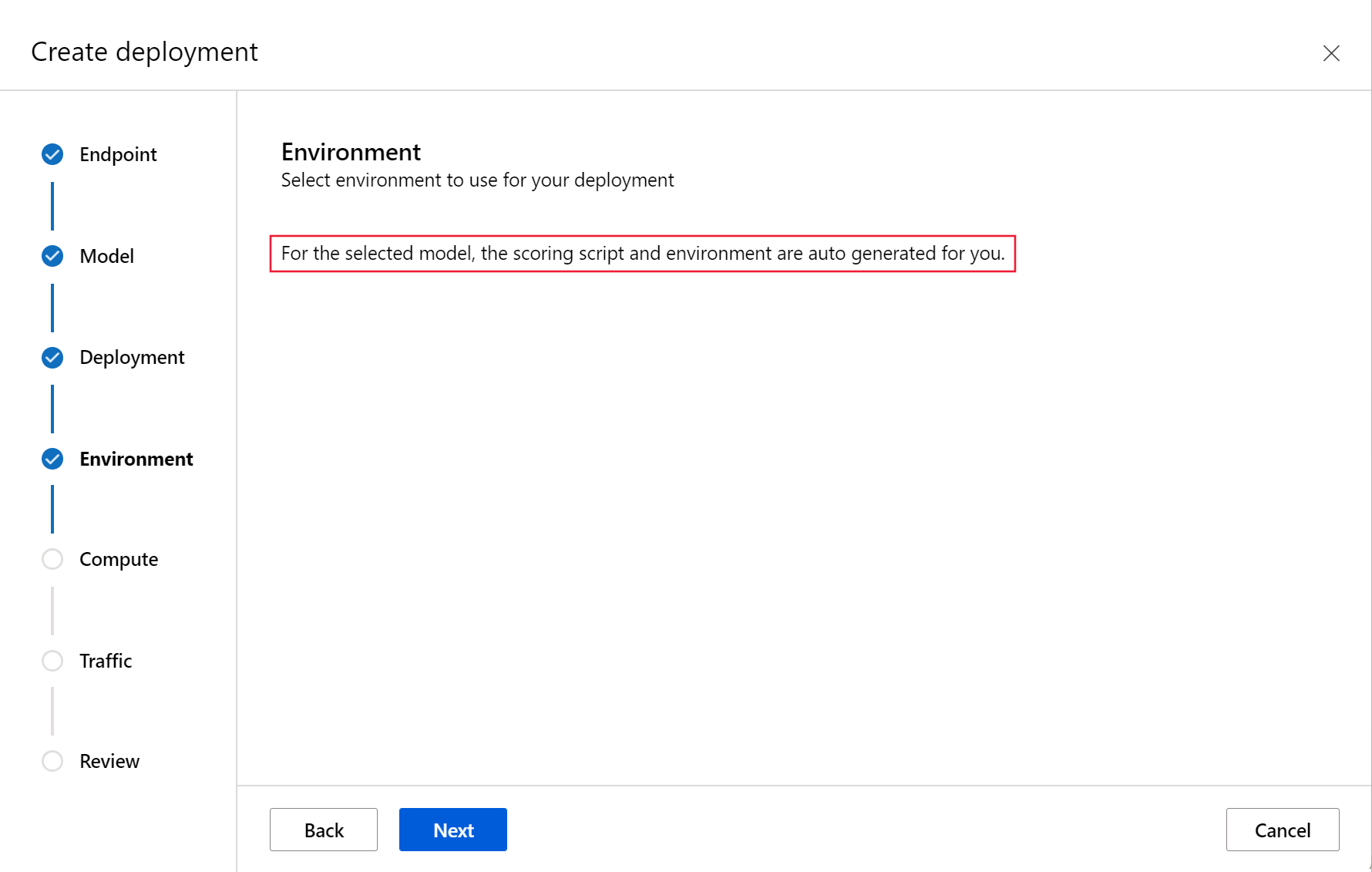

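When selecting a model, select the Triton model registered previously. Select Next to continue.

When you select a model registered in Triton format, you don't need a scoring script or environment in the Environment step of the wizard.

From the Models page, select the Triton model, and then select Deploy. When prompted, select Deploy to real-time endpoint.

Deploy to Azure

Applies to: Azure CLI ml extension v2 (current)

To create a new endpoint by using the YAML configuration, use the following command: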

az ml online-endpoint create -n $ENDPOINT_NAME -f $BASE_PATH/create-managed-endpoint.yaml

To create the deployment by using the YAML configuration, use the following command:

az ml online-deployment create --name blue --endpoint $ENDPOINT_NAME -f $BASE_PATH/create-managed-deployment.yaml --all-traffic

Applies to: Python SDK azure-ai-ml v2 (current)

To create a new endpoint by using the ManagedOnlineEndpoint object, use the following code:

ml_client.online_endpoints.begin_create_or_update(endpoint).result()

To create the deployment by using the ManagedOnlineDeployment object, use the following code:

ml_client.online_deployments.begin_create_or_update(deployment).result()

Once the deployment completes, its traffic value is set to 0%. Update the traffic to 100%.

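```python
# Route all traffic to the blue deployment and wait for the update to finish
endpoint.traffic = {"blue": 100}
ml_client.online_endpoints.begin_create_or_update(endpoint).result()
```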
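The traffic setting maps each deployment name to a percentage. You might want to sanity-check the mapping locally before you send the update. The following minimal sketch assumes two rules: each value is an integer from 0 to 100, and the values add up to 100 once traffic is assigned (or to 0, as right after the deployment is created). The helper check_traffic is hypothetical and isn't part of azure-ai-ml.

```python
from typing import Dict


def check_traffic(traffic: Dict[str, int]) -> None:
    """Raise ValueError if a traffic mapping breaks the assumed rules."""
    for deployment, percent in traffic.items():
        if not isinstance(percent, int) or not 0 <= percent <= 100:
            raise ValueError(f"{deployment}: {percent!r} is not an integer from 0 to 100")
    total = sum(traffic.values())
    if total not in (0, 100):
        raise ValueError(f"traffic adds up to {total}%, expected 100%")
```

For example, check_traffic({"blue": 100}) passes, and check_traffic({"blue": 50}) raises ValueError.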

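Complete the wizard to deploy to the endpoint.

Once the deployment completes, its traffic value is set to 0%. To update the traffic to 100%, go to the Endpoints page and select Update Traffic on the second menu row.

Test the endpoint

Applies to: Azure CLI ml extension v2 (current)

Once your deployment completes, use the following commands to make a scoring request to the deployed endpoint.

Tip

The file /cli/endpoints/online/triton/single-model/triton_densenet_scoring.py in the azureml-examples repo is used for scoring. The image passed to the endpoint needs preprocessing to meet the size, type, and format requirements, and postprocessing to show the predicted label.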

The triton_densenet_scoring.py script uses the tritonclient.http library to communicate with the Triton Inference Server.

To get the endpoint scoring URI, use the following commands:
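Underneath, tritonclient.http communicates with the server through Triton's HTTP/REST API, which follows the KServe v2 inference protocol. The following sketch only builds the request paths behind the readiness checks and the inference call that the scoring scripts make. It doesn't send any requests, and the function name kserve_v2_paths is hypothetical.

```python
from typing import Dict
from urllib.parse import quote


def kserve_v2_paths(model_name: str, model_version: str = "") -> Dict[str, str]:
    """Return KServe v2 paths for server readiness, model readiness, and inference."""
    model_path = f"v2/models/{quote(model_name)}"
    if model_version:
        model_path += f"/versions/{quote(model_version)}"
    return {
        "server_ready": "v2/health/ready",      # GET, behind is_server_ready()
        "model_ready": f"{model_path}/ready",   # GET, behind is_model_ready()
        "infer": f"{model_path}/infer",         # POST, behind infer()
    }
```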

scoring_uri=$(az ml online-endpoint show -n $ENDPOINT_NAME --query scoring_uri -o tsv)

scoring_uri=${scoring_uri%/*}
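The parameter expansion ${scoring_uri%/*} removes the last slash and everything after it. As a result, the base URL passed to the script drops the final path segment of the scoring URI. If you script this step in Python instead, the following function is a rough equivalent. The function name triton_base_url and the URIs in the test are hypothetical. Its include_scheme=False option also omits the scheme, because tritonclient.http expects a URL in the host:port/<base-path> form without https:// (the SDK example strips it with scoring_uri[8:]).

```python
from urllib.parse import urlsplit


def triton_base_url(scoring_uri: str, include_scheme: bool = False) -> str:
    """Drop the last path segment of a scoring URI, similar to ${scoring_uri%/*}."""
    parts = urlsplit(scoring_uri)
    # "/score" -> "", "/a/score" -> "/a", "" stays "" (nothing to trim)
    path = parts.path.rsplit("/", 1)[0]
    base = parts.netloc + path
    return f"{parts.scheme}://{base}" if include_scheme else base
```

Unlike the shell expansion, this function leaves a URI without a path intact. For such a URI, ${scoring_uri%/*} would cut into the scheme.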

To get an authentication token, use the following command:

auth_token=$(az ml online-endpoint get-credentials -n $ENDPOINT_NAME --query accessToken -o tsv)

To score data with the endpoint, use the following command. It submits the image of a peacock (https://aka.ms/peacock-pic) to the endpoint:

python $BASE_PATH/triton_densenet_scoring.py --base_url=$scoring_uri --token=$auth_token --image_path $BASE_PATH/data/peacock.jpg

The response from the script is similar to the following text:

Is server ready - True

Is model ready - True

/azureml-examples/cli/endpoints/online/triton/single-model/densenet_labels.txt

84 : PEACOCK
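The last line of the output pairs the index of the highest-scoring class with its name from densenet_labels.txt. The sample's own postprocessing is in the repository. The following sketch only illustrates the idea. It assumes that the labels file holds one class name per line and that the class index is the zero-based line number. The function name top_label is hypothetical, and its output format simply mirrors the text above.

```python
from pathlib import Path
from typing import Sequence


def top_label(scores: Sequence[float], labels_path: str) -> str:
    """Return "<index> : <label>" for the highest score (assumed labels-file format)."""
    labels = Path(labels_path).read_text(encoding="utf-8").splitlines()
    # Index of the first maximum, like np.argmax on a 1-D array
    best = max(range(len(scores)), key=scores.__getitem__)
    return f"{best} : {labels[best]}"
```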

Applies to: Python SDK azure-ai-ml v2 (current)

To get the endpoint scoring URI, use the following code:

```python
endpoint = ml_client.online_endpoints.get(endpoint_name)
scoring_uri = endpoint.scoring_uri
```

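To get an authentication key, use the following code:

```python
keys = ml_client.online_endpoints.get_keys(endpoint_name)
auth_key = keys.primary_key
```

The following scoring code uses the Triton Inference Server Client to submit the image of a peacock to the endpoint. This script is available in the companion notebook to this example: Deploy a model to online endpoints using Triton.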

```python
# Test the blue deployment with some sample data
from pathlib import Path

import gevent.ssl
import numpy as np
import requests
import tritonclient.http as tritonhttpclient

# prepost is the pre/postprocessing helper module from the sample's directory
import prepost

img_uri = "http://aka.ms/peacock-pic"

# Remove the scheme ("https://") from the URL; the Triton client expects host[/path]
url = scoring_uri[8:]

# Initialize the client handler
triton_client = tritonhttpclient.InferenceServerClient(
    url=url,
    ssl=True,
    ssl_context_factory=gevent.ssl._create_default_https_context,
)

# Create headers
headers = {}
headers["Authorization"] = f"Bearer {auth_key}"

# Check status of the Triton server
health_ctx = triton_client.is_server_ready(headers=headers)
print("Is server ready - {}".format(health_ctx))

# Check status of the model. This is the name Triton serves the model under
# (its directory in the model repository), not the registered model_name above.
triton_model_name = "model_1"
status_ctx = triton_client.is_model_ready(triton_model_name, "1", headers)
print("Is model ready - {}".format(status_ctx))

if Path(img_uri).exists():
    img_content = Path(img_uri).read_bytes()
else:
    agent = f"Python Requests/{requests.__version__} (https://github.com/Azure/azureml-examples)"
    img_content = requests.get(img_uri, headers={"User-Agent": agent}).content

img_data = prepost.preprocess(img_content)

# Populate inputs and outputs
input_tensor = tritonhttpclient.InferInput("data_0", img_data.shape, "FP32")
input_tensor.set_data_from_numpy(img_data)
inputs = [input_tensor]
output = tritonhttpclient.InferRequestedOutput("fc6_1")
outputs = [output]

result = triton_client.infer(triton_model_name, inputs, outputs=outputs, headers=headers)
max_label = np.argmax(result.as_numpy("fc6_1"))
label_name = prepost.postprocess(max_label)
print(label_name)
```

The response from the script is similar to the following text:

Is server ready - True

Is model ready - True

/azureml-examples/sdk/endpoints/online/triton/single-model/densenet_labels.txt

84 : PEACOCK

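Triton Inference Server requires using the Triton client for inference, and it supports tensor-type input. Azure Machine Learning studio doesn't currently support this functionality. Instead, use the CLI or SDK to invoke endpoints with Triton.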
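Tensor-type input means that the request carries named tensors, each with a shape and a data type, rather than a raw image file. As an illustration, the following minimal sketch builds a JSON body as defined by the KServe v2 inference protocol, which Triton's HTTP endpoint implements. It uses the data_0 input and fc6_1 output names from the SDK scoring code. tritonclient.http can send tensor data in a binary form instead. The helper build_infer_request is hypothetical, and the 1x3x2x2 tensor is a made-up placeholder, not the model's real input shape.

```python
import json
import math
from typing import List, Sequence


def build_infer_request(
    input_name: str,
    shape: Sequence[int],
    flat_data: List[float],
    output_name: str,
) -> str:
    """Build a KServe v2 JSON inference request with one FP32 input tensor."""
    expected = math.prod(shape)
    if len(flat_data) != expected:
        raise ValueError(f"shape {list(shape)} needs {expected} values, got {len(flat_data)}")
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": list(shape),
                "datatype": "FP32",
                # Tensor contents flattened in row-major order
                "data": flat_data,
            }
        ],
        "outputs": [{"name": output_name}],
    }
    return json.dumps(body)


# Placeholder tensor: 1 image, 3 channels, 2x2 pixels (not the real model shape)
request_json = build_infer_request("data_0", [1, 3, 2, 2], [0.0] * 12, "fc6_1")
```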

Delete the endpoint and model

Applies to: Azure CLI ml extension v2 (current)

Once you're done with the endpoint, use the following command to delete it:

az ml online-endpoint delete -n $ENDPOINT_NAME --yes

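Use the following command to archive your model, where $MODEL_NAME and $MODEL_VERSION are the name and version of the registered model: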

az ml model archive --name $MODEL_NAME --version $MODEL_VERSION

Applies to: Python SDK azure-ai-ml v2 (current)

Delete the endpoint. Deleting the endpoint also deletes any child deployments, but it doesn't archive associated environments or models.

ml_client.online_endpoints.begin_delete(name=endpoint_name)

Archive the model with the following code.

ml_client.models.archive(name=model_name, version=model_version)

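On the endpoint's page, select Delete in the second row below the endpoint name.

On the model's page, select Delete in the first row below the model name.

Next steps

To learn more, review these articles: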
