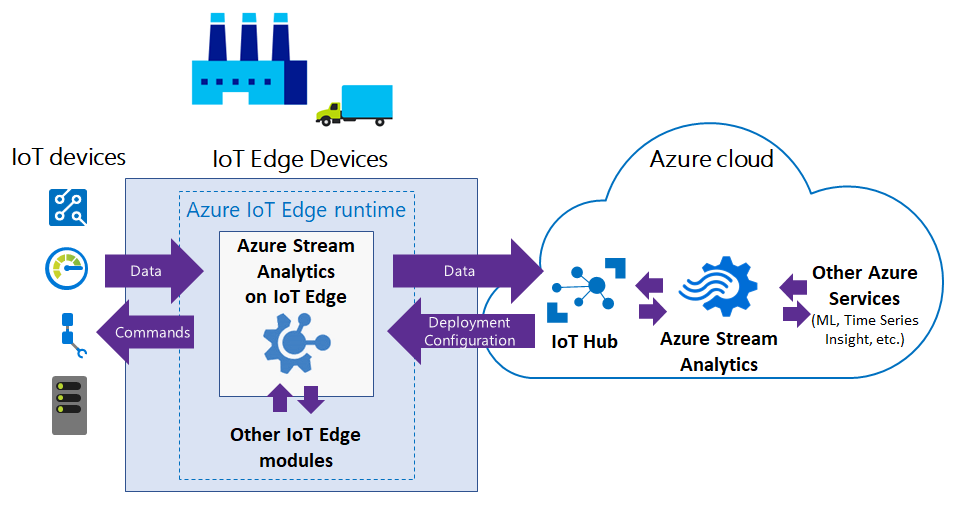

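Azure Stream Analytics on IoT Edge lets developers deploy near-real-time analytical intelligence closer to IoT devices so that they can unlock the full value of device-generated data. Azure Stream Analytics is designed for low latency, resiliency, efficient use of bandwidth, and compliance. Enterprises can deploy control logic close to industrial operations and complement big data analytics done in the cloud.

Azure Stream Analytics on IoT Edge runs within the Azure IoT Edge framework. Once a job is created in Stream Analytics, you can deploy and manage it using IoT Hub.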

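Common scenarios

This section describes the common scenarios for Stream Analytics on IoT Edge. The following diagram shows the flow of data between IoT devices and the Azure cloud.

Low-latency command and control

Manufacturing safety systems must respond to operational data with ultra-low latency. With Stream Analytics on IoT Edge, you can analyze sensor data in near real time and issue commands when you detect anomalies, for example to stop a machine or trigger an alert.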
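As a rough illustration of the detect-then-command pattern, the sketch below turns out-of-range sensor readings into stop commands. It is plain Python, not the Stream Analytics query language, and the names `stop_commands`, `press-1`, and the 90.0 limit are hypothetical values chosen for the example.

```python
def stop_commands(readings, limit):
    """Return one stop command per reading that exceeds the limit."""
    return [
        {"machine": machine_id, "command": "stop", "reading": value}
        for machine_id, value in readings
        if value > limit
    ]


# Hypothetical (machine, temperature) readings arriving at the edge device.
readings = [("press-1", 71.0), ("press-2", 96.5), ("press-1", 73.2)]
commands = stop_commands(readings, limit=90.0)
print(commands)
```

Because the check runs next to the equipment, the command does not have to wait for a round trip to the cloud.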

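Limited connectivity to the cloud

Mission-critical systems, such as remote mining equipment, connected vessels, or offshore drilling, need to analyze and react to data even when cloud connectivity is intermittent. With Stream Analytics, your streaming logic runs independently of network connectivity, and you can choose what to send to the cloud for further processing or storage.

Limited bandwidth

The volume of data produced by jet engines or connected cars can be so large that it must be filtered or preprocessed before it is sent to the cloud. With Stream Analytics, you can filter or aggregate the data that needs to be sent to the cloud.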
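To make the bandwidth saving concrete, here is a minimal sketch of aggregating readings into fixed, non-overlapping time windows before upload. It is ordinary Python used only to show the idea, not how the Stream Analytics engine is implemented; the function name and the 30-second window are assumptions for the example.

```python
from collections import defaultdict
from statistics import mean


def tumbling_window_average(readings, window_seconds):
    """Average (timestamp_seconds, value) readings per fixed, non-overlapping window."""
    buckets = defaultdict(list)
    for timestamp, value in readings:
        # Integer division maps each reading to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        buckets[window_start].append(value)
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


# Five raw readings collapse into two summary rows.
readings = [(0, 20.0), (10, 22.0), (29, 24.0), (30, 30.0), (45, 32.0)]
summary = tumbling_window_average(readings, window_seconds=30)
print(summary)  # [(0, 22.0), (30, 31.0)]
```

Only the summary rows would need to leave the device, so the upload shrinks in proportion to the number of readings per window.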

Compliance

Regulatory compliance may require some data to be anonymized or aggregated locally before it is sent to the cloud.
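One possible shape for such local processing is sketched below: a direct identifier is replaced with a keyed hash and a personal field is dropped before the event is forwarded. This is an assumption-laden example, not a Stream Analytics feature; the field names `device_id` and `operator_name` and the key are hypothetical, and keyed hashing is pseudonymization, which may or may not satisfy a given regulation.

```python
import hashlib
import hmac


def pseudonymize(device_id: str, secret_key: bytes) -> str:
    """Replace a device identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub(event: dict, secret_key: bytes) -> dict:
    """Return a copy of the event with the ID hashed and the operator name removed."""
    cleaned = {k: v for k, v in event.items() if k != "operator_name"}
    cleaned["device_id"] = pseudonymize(event["device_id"], secret_key)
    return cleaned


# Hypothetical event; the key stays on the device and is never uploaded.
event = {"device_id": "pump-17", "operator_name": "A. Example", "temperature": 71.5}
cleaned = scrub(event, secret_key=b"local-only-secret")
print(sorted(cleaned))
```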

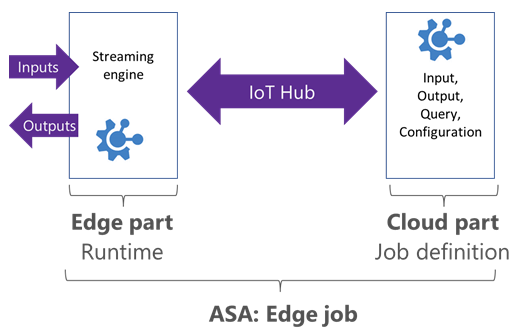

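Edge jobs in Azure Stream Analytics

Stream Analytics Edge jobs run in containers deployed to Azure IoT Edge devices. An Edge job consists of two parts:

- A cloud part that is responsible for the job definition: users define the inputs, outputs, query, and other settings (for example, out-of-order events) in the cloud.

- A module running on your IoT devices. The module contains the Stream Analytics engine and receives the job definition from the cloud.

Stream Analytics uses IoT Hub to deploy Edge jobs to devices. For more information, see IoT Edge deployment.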

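Edge job limitations

The goal is to have parity between IoT Edge jobs and cloud jobs. Most SQL query language features are supported on both the edge and the cloud. However, the following features are not supported for Edge jobs:

- User-defined functions (UDF) in JavaScript.

- User-defined aggregates (UDA).

- Azure ML functions.

- AVRO format for input/output. At this time, only CSV and JSON are supported.

- The following SQL operators:

  - PARTITION BY

  - GetMetadataPropertyValue

- Late arrival policy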
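The two query constructs named in the list above can be screened for before a job is targeted at the edge. The sketch below is a hypothetical pre-deployment check, not an official tool: it uses plain text matching, so it would also flag occurrences inside comments or string literals, and it does not detect the other limitations (UDFs, UDAs, Azure ML functions, AVRO, late arrival policy).

```python
import re

# Constructs the list above names as unsupported for Edge jobs.
UNSUPPORTED_PATTERNS = {
    "PARTITION BY": re.compile(r"\bPARTITION\s+BY\b", re.IGNORECASE),
    "GetMetadataPropertyValue": re.compile(
        r"\bGetMetadataPropertyValue\s*\(", re.IGNORECASE
    ),
}


def find_unsupported(query: str) -> list:
    """Return the names of unsupported constructs that appear in the query text."""
    return [name for name, pattern in UNSUPPORTED_PATTERNS.items() if pattern.search(query)]


# Hypothetical query text used only to exercise the check.
query = (
    "SELECT deviceId, AVG(temperature) FROM input PARTITION BY deviceId "
    "GROUP BY deviceId, TumblingWindow(second, 30)"
)
found = find_unsupported(query)
print(found)  # ['PARTITION BY']
```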

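Runtime and hardware requirements

To run Stream Analytics on IoT Edge, you need devices that can run Azure IoT Edge.

Stream Analytics and Azure IoT Edge use Docker containers to provide a portable solution that runs on multiple host operating systems (Windows, Linux).

Stream Analytics on IoT Edge is available as Windows and Linux images that run on the x86-64 or ARM (Advanced RISC Machines) architectures.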

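Input and output

Stream Analytics Edge jobs can get inputs from, and send outputs to, other modules running on the IoT Edge device. To connect to and from specific modules, set the routing configuration at deployment time. For more information, see the IoT Edge module composition documentation.

Both CSV and JSON formats are supported for inputs and outputs.

For each input and output stream that you create in your Stream Analytics job, a corresponding endpoint is created on the deployed module. These endpoints can be used in the routes of your deployment.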
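As an illustration of how those endpoints might appear in a deployment, the sketch below builds a routes section in the IoT Edge route syntax (`FROM ... INTO BrokeredEndpoint(...)` and `INTO $upstream`). The module names `tempSensor` and `asaJob`, the endpoint names `temperature` and `alert`, and the route keys are all hypothetical; substitute the names defined in your own job and deployment.

```python
import json


def asa_routes(asa_module, source_module, input_name, output_name):
    """Build routes that feed a job input from another module and send a job output upstream."""
    return {
        "sensorToAsa": (
            f"FROM /messages/modules/{source_module}/* "
            f'INTO BrokeredEndpoint("/modules/{asa_module}/inputs/{input_name}")'
        ),
        "asaToCloud": (
            f"FROM /messages/modules/{asa_module}/outputs/{output_name} INTO $upstream"
        ),
    }


# Hypothetical module and endpoint names.
routes = asa_routes("asaJob", "tempSensor", "temperature", "alert")
print(json.dumps({"routes": routes}, indent=2))
```

The input and output names in the routes must match the names given to the input and output streams in the Stream Analytics job.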

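Supported stream input types are:

- Event Hub

- IoT Hub

Supported stream output types are:

- SQL Database

- Event Hub

- Blob Storage/ADLS Gen2

Reference input supports the reference file type. Other outputs can be reached by using a downstream cloud job.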

License and third-party notices

Azure Stream Analytics module image information

This version information was last updated on 2020-09-21:

- Image: mcr.microsoft.com/azure-stream-analytics/azureiotedge:1.0.9-linux-amd64

  - Base image: mcr.microsoft.com/dotnet/core/runtime:2.1.13-alpine

  - Platform:

    - Architecture: amd64

    - OS: linux

- Image: mcr.microsoft.com/azure-stream-analytics/azureiotedge:1.0.9-linux-arm32v7

  - Base image: mcr.microsoft.com/dotnet/core/runtime:2.1.13-bionic-arm32v7

  - Platform:

    - Architecture: arm

    - OS: linux

- Image: mcr.microsoft.com/azure-stream-analytics/azureiotedge:1.0.9-linux-arm64

  - Base image: mcr.microsoft.com/dotnet/core/runtime:3.0-bionic-arm64v8

  - Platform:

    - Architecture: arm64

    - OS: linux