
APPLIES TO: MongoDB

Note

If you are planning a data migration to Azure Cosmos DB and all you know is the number of vCores and servers in your existing sharded and replicated database cluster, also read about estimating request units using vCores or vCPUs.

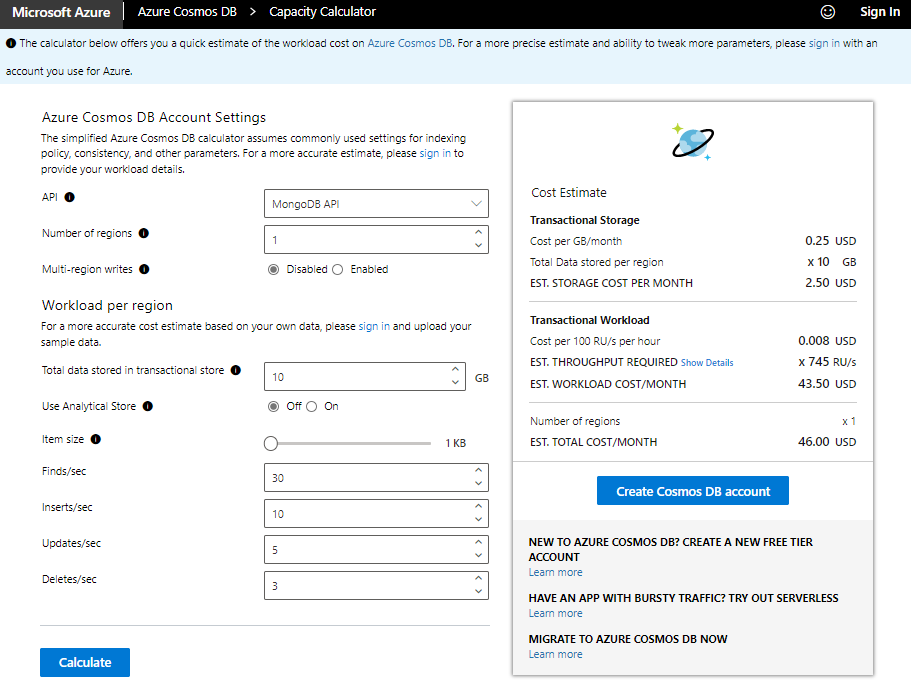

Configuring your databases and collections with the right amount of provisioned throughput, or Request Units per second (RU/s), for your workload is essential to optimizing cost and performance. This article describes how to use the Azure Cosmos DB capacity planner to estimate the required RU/s and cost of your workload when using Azure Cosmos DB for MongoDB. If you are using the API for NoSQL, see the article on how to use the capacity calculator with the API for NoSQL.

Capacity planner modes

| Mode | Description |
|---|---|
| Basic | Provides a quick, high-level RU/s and cost estimate. This mode assumes the default Azure Cosmos DB settings for indexing policy, consistency, and other parameters. Use basic mode for a quick, high-level estimate when you are evaluating a potential workload to run on Azure Cosmos DB. To learn more, see how to estimate cost with basic mode. |

Estimate provisioned throughput and cost using basic mode

To get a quick estimate for your workload using the basic mode, navigate to the capacity planner. Input the following parameters based on your workload:

| Input | Description |
|---|---|
| API | Choose API for MongoDB. |
| Number of regions | Azure Cosmos DB for MongoDB is available in all Azure China regions. Select the number of regions required for your workload. You can associate any number of regions with your account. See multiple-region distribution for more details. |
| Multi-region writes | If you enable multi-region writes, your application can read and write to any Azure region. If you disable multi-region writes, your application can write data to a single region. Enable multi-region writes if you expect an active-active workload that requires low-latency writes in different regions, for example, an IoT workload that writes data to the database at high volumes in different regions. Multi-region writes guarantee 99.999% read and write availability. Multi-region writes require more throughput than a single write region. To learn more, see the article on how RUs differ for single and multiple write regions. |
| Total data stored in transactional store | Total estimated data stored (GB) in the transactional store in a single region. |
| Use analytical store | Choose On if you want to use the Synapse analytical store. Then enter Total data stored in analytical store, which is the estimated data stored (GB) in the analytical store in a single region. |
| Item size | The estimated size of the documents, ranging from 1 KB to 2 MB. |
| Finds/sec | Number of find operations expected per second per region. |
| Inserts/sec | Number of insert operations expected per second per region. |
| Updates/sec | Number of update operations expected per second per region. When you choose automatic indexing, the estimated RU/s for the update operation is calculated assuming one property is changed per update. |
| Deletes/sec | Number of delete operations expected per second per region. |
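The workload fields above are per-second, per-region rates plus an average item size, while monitoring data is often available only as raw operation counts over a time window. The following Python sketch shows one way to turn such measurements into values for the Item size, Finds/sec, Inserts/sec, Updates/sec, and Deletes/sec fields. It is not part of the capacity planner: `PlannerInputs`, `build_inputs`, and the `op_counts` dictionary are hypothetical names, and the sketch assumes that the JSON-encoded size of sample documents is an acceptable stand-in for their stored BSON size.

```python
import json
from dataclasses import dataclass

KB = 1024
MIN_ITEM_BYTES = 1 * KB        # planner lower bound for Item size: 1 KB
MAX_ITEM_BYTES = 2 * KB * KB   # planner upper bound for Item size: 2 MB


@dataclass
class PlannerInputs:
    """Hypothetical holder for the basic-mode workload fields of ONE region."""
    item_size_kb: float
    finds_per_sec: float
    inserts_per_sec: float
    updates_per_sec: float
    deletes_per_sec: float


def avg_item_size_bytes(sample_docs):
    """Average document size in bytes over a sample of documents.

    Assumption: the UTF-8 length of the JSON encoding stands in for the
    stored BSON size; real BSON sizes will differ somewhat.
    """
    if not sample_docs:
        raise ValueError("need at least one sample document")
    sizes = [len(json.dumps(d, default=str).encode("utf-8")) for d in sample_docs]
    return sum(sizes) / len(sizes)


def build_inputs(sample_docs, op_counts, window_seconds):
    """Convert counts observed in a single region over a window into planner inputs.

    op_counts is a hypothetical dict such as {"find": 36000, "insert": 7200};
    operation types that are missing are treated as zero.
    """
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    size = avg_item_size_bytes(sample_docs)
    if size > MAX_ITEM_BYTES:
        raise ValueError("average item size exceeds the planner's 2 MB limit")
    size = max(size, MIN_ITEM_BYTES)  # the smallest item size the planner accepts is 1 KB

    def rate(op):
        # Operations per second over the measurement window.
        return op_counts.get(op, 0) / window_seconds

    return PlannerInputs(
        item_size_kb=size / KB,
        finds_per_sec=rate("find"),
        inserts_per_sec=rate("insert"),
        updates_per_sec=rate("update"),
        deletes_per_sec=rate("delete"),
    )


if __name__ == "__main__":
    docs = [{"deviceId": "d-1", "reading": 21.5, "payload": "x" * 2000}]
    counts = {"find": 36000, "insert": 7200, "update": 3600}
    print(build_inputs(docs, counts, window_seconds=3600))
```

With one hour (3,600 seconds) of counts from a single region, 36,000 finds, 7,200 inserts, and 3,600 updates become 10 finds/sec, 2 inserts/sec, 1 update/sec, and 0 deletes/sec. Because the planner expects rates per region, measure each region separately rather than summing traffic across regions.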

After filling in the required details, select Calculate. The Cost Estimate tab shows the estimated provisioned throughput. Select the Show Details link in this tab to expand the breakdown of the throughput required for the different CRUD and query requests. Each time you change the value of any field, select Calculate again to recalculate the estimated cost.

Next steps

If all you know is the number of vCores and servers in your existing database cluster, read about estimating request units using vCores or vCPUs.

Learn more about Azure Cosmos DB's pricing model.

Create a new Azure Cosmos DB account, database, and container.

Learn how to optimize provisioned throughput cost.

Trying to do capacity planning for a migration to Azure Cosmos DB? You can use information about your existing database cluster for capacity planning.